The Case Against PGVector: When Embedded Vector Databases Bite Back

Why cramming vector search into PostgreSQL looks great on paper but falls apart in production - a hard look at scalability, real-time search, and operational nightmares.

The promise sounds perfect: keep everything in Postgres. One database, one backup, one system to rule them all. But when you actually run PGVector in production, that convenience quietly turns into a trap.

The Unspoken Reality of PGVector Indexing

PGVector gives you two main indexing options, and neither is particularly friendly when you’re dealing with production-scale data.

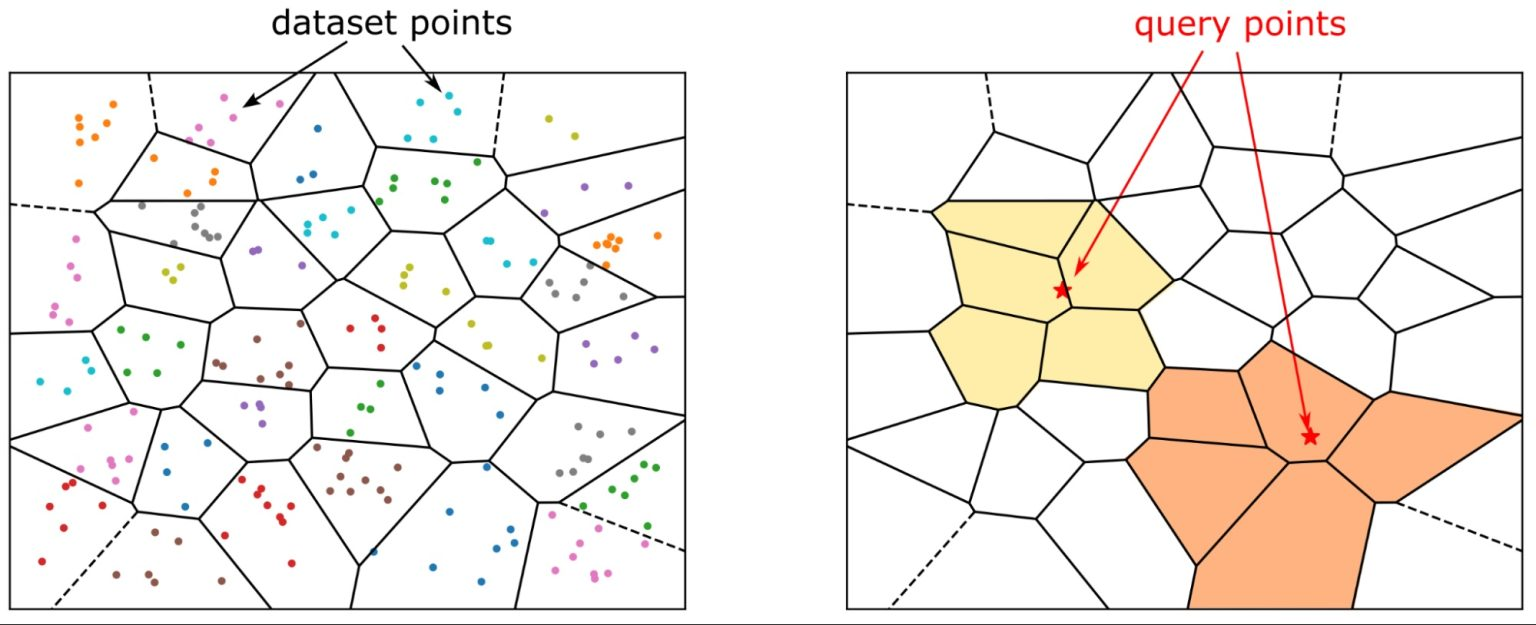

IVFFlat: The Aging Workhorse

IVFFlat partitions your vector space into clusters and searches only within the nearest ones. It needs less memory and builds faster than HNSW, but it comes with significant trade-offs. You must specify the number of lists upfront, and that choice strongly affects both recall and query performance. The commonly recommended starting point (rows divided by 1000 for up to about a million rows, and the square root of the row count beyond that) is exactly that: a starting point, not a tuned answer.
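To make the rule of thumb concrete, here is a minimal sketch of it in Python. It follows the starting values suggested in the pgvector README (rows / 1000 up to 1M rows, sqrt(rows) above that, and sqrt(lists) for probes). The function name `suggest_ivfflat_params` is hypothetical, and the output is only a first guess to benchmark against your own recall targets.

```python
import math

def suggest_ivfflat_params(row_count: int) -> dict:
    """Starting values for IVFFlat `lists` and `probes` (a heuristic, not a tuned answer)."""
    if row_count <= 1_000_000:
        lists = max(1, row_count // 1000)
    else:
        lists = max(1, round(math.sqrt(row_count)))
    # More probes means better recall but slower queries.
    probes = max(1, round(math.sqrt(lists)))
    return {"lists": lists, "probes": probes}

for rows in (50_000, 1_000_000, 10_000_000):
    print(rows, suggest_ivfflat_params(rows))
```

Because the list count is fixed at build time, a table that grows from 50,000 to 10 million rows keeps the value chosen for the smaller table until the index is rebuilt.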

The real killer in production? New vectors get assigned to existing clusters, but clusters don’t rebalance without a full rebuild. Your recall gradually degrades as data distribution shifts, requiring periodic index rebuilds that can take hours on large datasets.
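A toy simulation shows the mechanism. It is not pgvector's implementation, just a sketch with two hypothetical 2-D centroids frozen at "build time" and later inserts drawn from a region the original clustering never saw.

```python
import random

def nearest_centroid(point, centroids):
    """Index of the centroid closest to `point` (squared Euclidean distance)."""
    return min(
        range(len(centroids)),
        key=lambda i: sum((p - c) ** 2 for p, c in zip(point, centroids[i])),
    )

def list_sizes(points, centroids):
    """Count how many points land in each inverted list."""
    sizes = [0] * len(centroids)
    for point in points:
        sizes[nearest_centroid(point, centroids)] += 1
    return sizes

random.seed(0)
# Centroids are chosen once, when the index is built (toy values).
centroids = [(0.0, 0.0), (10.0, 0.0)]
initial = [
    (random.gauss(cx, 1.0), random.gauss(cy, 1.0))
    for cx, cy in centroids
    for _ in range(500)
]
# Later inserts cluster around (20, 0), far from either original centroid.
drifted = [(random.gauss(20.0, 1.0), random.gauss(0.0, 1.0)) for _ in range(2000)]

sizes_before = list_sizes(initial, centroids)
sizes_after = list_sizes(initial + drifted, centroids)
print("before:", sizes_before)
print("after: ", sizes_after)
```

All of the drifted points pile into the second list, so any query that probes it scans several times more vectors than before, and nothing separates the new region from the old one until the centroids are recomputed.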

HNSW: The Memory-Hungry Perfectionist

HNSW builds a multi-layer graph structure that delivers better recall and more consistent performance than IVFFlat. It scales better to larger datasets, but the operational costs are substantial.

The problem isn’t theoretical; it’s an operational reality. Building an HNSW index on a few million vectors can consume 10+ GB of RAM on your production database and can take hours to complete while the database struggles to serve regular traffic.
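A back-of-envelope estimate shows where a figure like that comes from. The sketch below assumes 1536-dimensional embeddings (a common size, not stated in this article), pgvector's documented storage of 4 bytes per dimension plus 8 bytes per vector, the default `m = 16`, and a guessed 8 bytes per neighbor link. The function name and the link size are assumptions, and the result ignores page and tuple overhead, so treat it as a rough lower bound.

```python
def estimate_hnsw_build_bytes(num_vectors: int, dims: int, m: int = 16,
                              link_bytes: int = 8) -> int:
    """Rough lower bound: raw vectors plus layer-0 neighbor links."""
    # pgvector stores each vector as 4 * dims + 8 bytes.
    vector_bytes = num_vectors * (4 * dims + 8)
    # In HNSW, layer 0 keeps up to 2 * m neighbors per node; link_bytes is a guess.
    link_total = num_vectors * 2 * m * link_bytes
    return vector_bytes + link_total

GIB = 1024 ** 3
for n in (1_000_000, 3_000_000):
    size_gib = estimate_hnsw_build_bytes(n, dims=1536) / GIB
    print(f"{n:>9} vectors: ~{size_gib:.1f} GiB")
```

Under these assumptions the raw vectors dominate, so the estimate scales almost linearly with both the row count and the embedding dimension.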

Real-Time Search: The Impossible Dream

In modern applications, users expect newly uploaded data to be searchable right away. They upload a document, you generate embeddings and insert them, and the document should show up in search results immediately. Simple, right?

The Index Update Nightmare

With IVFFlat, new vectors get assigned to existing clusters based on the initial structure. Over time, this leads to suboptimal cluster distribution, forcing periodic index rebuilds. The operational question becomes: Do you queue new inserts, write to a separate unindexed table, or accept degraded search quality?
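The "separate unindexed table" option can be sketched as follows: query the index as usual, run an exact scan over the small buffer of rows inserted since the last rebuild, and merge the two result sets. This is a minimal illustration in plain Python with hypothetical names (`search_with_buffer`, `indexed_hits`, `fresh_rows`), not a description of anything PGVector does for you.

```python
import heapq
import math

def euclidean(a, b):
    """Exact Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def search_with_buffer(query, indexed_hits, fresh_rows, k=10):
    """Merge index hits with an exact scan of not-yet-indexed rows.

    indexed_hits: list of (distance, doc_id) already returned by the index.
    fresh_rows:   list of (doc_id, vector) inserted since the last rebuild.
    """
    fresh_hits = [(euclidean(query, vec), doc_id) for doc_id, vec in fresh_rows]
    return heapq.nsmallest(k, indexed_hits + fresh_hits)

indexed_hits = [(1.0, "a"), (2.0, "b"), (3.0, "c")]
fresh_rows = [("new", (0.5, 0.0))]
print(search_with_buffer((0.0, 0.0), indexed_hits, fresh_rows, k=2))
```

The exact scan stays cheap only while the buffer is small, so this pattern still needs a scheduled job that folds the buffer into the main table and rebuilds the index.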

HNSW handles this better with incremental insertion, but it’s far from free. Each insertion means traversing the graph to find neighbors, allocating memory, updating links, and potentially contending for locks. Under heavy write load, this becomes a significant bottleneck that slows down both writes and reads.

The operational reality gets worse when you consider metadata synchronization. You’re not just storing vectors; you also have document titles, timestamps, user IDs, and categories. That metadata lives in other tables or columns, and everything needs to stay in sync. While Postgres transactions handle this beautifully for regular data, index builds that take hours complicate consistency management tremendously.

The Filtering Trap That Breaks Your Queries

Let’s say you’ve solved your indexing problems. Now you have millions of vectors with metadata. A user searches for something, and you only want to return published documents:
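A query of that shape might look like the sketch below. The `documents` table and its `id`, `title`, `status`, and `embedding` columns are assumptions for illustration; `<=>` is pgvector's cosine-distance operator, and the `%s` placeholder is meant for a DB-API driver such as psycopg.

```python
def to_vector_literal(values):
    """Format a list of floats as a pgvector text literal, e.g. '[0.1,0.2]'."""
    return "[" + ",".join(str(float(v)) for v in values) + "]"

# Hypothetical schema: documents(id, title, status, embedding vector(...)).
FILTERED_SEARCH_SQL = """
SELECT id, title
FROM documents
WHERE status = 'published'
ORDER BY embedding <=> %s::vector
LIMIT 10
"""

# The query embedding is passed as a parameter, never interpolated by hand.
params = (to_vector_literal([0.1, 0.2, 0.3]),)
print(FILTERED_SEARCH_SQL.strip())
print(params)
```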

This innocent-looking query hides a massive performance pitfall. Should Postgres filter on status first (pre-filter) or do the vector search first and then filter (post-filter)?

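Pre-filter works beautifully when your filter is highly selective, finding 1,000 documents out of 10 million. But it falls apart when the filter isn’t selective enough: if millions of rows match, Postgres has to compute an exact distance for every one of them instead of using the vector index.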

Post-filter creates a different problem: PGVector finds the 10 nearest neighbors, then applies your filter. If only 3 of those 10 are published, you return 3 mediocre results even though there might be hundreds of relevant published documents slightly further away in the embedding space.

Users get incomplete, low-quality results without knowing they’re missing better matches. Your workaround becomes fetching more results than needed (LIMIT 100) and filtering, which means doing way more distance calculations and guessing at the right oversampling factor.
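The effect is easy to reproduce with a toy corpus. In this sketch (plain Python, hypothetical data), row `i` sits at distance `i` from the query and every fourth row is published, so a post-filter over the 10 nearest rows keeps only 3 of them, while oversampling to 100 candidates fills the page.

```python
def post_filter_search(rows, k, fetch):
    """Take the `fetch` nearest rows first, then drop unpublished ones and keep k."""
    nearest = sorted(rows, key=lambda r: r["distance"])[:fetch]
    return [r for r in nearest if r["published"]][:k]

# Toy corpus: row i is at distance i from the query; every 4th row is published.
rows = [
    {"id": i, "distance": float(i), "published": i % 4 == 0}
    for i in range(1000)
]

plain = post_filter_search(rows, k=10, fetch=10)
oversampled = post_filter_search(rows, k=10, fetch=100)
print(len(plain), [r["id"] for r in plain])              # 3 results: ids 0, 4, 8
print(len(oversampled), [r["id"] for r in oversampled])  # 10 results: ids 0 through 36
```

The oversampled call did ten times the distance work to return the same page size, and the factor of ten only happened to be enough because a quarter of the rows are published.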

When Multiple Filters Multiply Your Problems

Add another dimension to filtering:
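As a sketch, suppose the second predicate restricts results to one user. The schema and the `user_id` column are assumptions, as is the helper `candidates_needed`, which estimates how many nearest neighbors a post-filter plan must fetch so that roughly `k` survive, under the simplifying assumption that the filters are independent.

```python
import math

# Hypothetical schema: documents(id, title, status, user_id, embedding vector(...)).
MULTI_FILTER_SQL = """
SELECT id, title
FROM documents
WHERE status = 'published'
  AND user_id = %s
ORDER BY embedding <=> %s::vector
LIMIT 10
"""

def candidates_needed(k, selectivities):
    """Rows to fetch so about k pass every filter (assumes independent filters)."""
    combined = math.prod(selectivities)
    return math.ceil(k / combined)

# One filter keeping 25% of rows, then a second keeping 1% of rows.
print(candidates_needed(10, [0.25]))
print(candidates_needed(10, [0.25, 0.01]))
```

Each extra filter multiplies the oversampling factor, and real filters are rarely independent, so a fixed `LIMIT` multiplier tuned for one query pattern can be badly wrong for the next.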

Now the combinatorial complexity explodes. Should you apply all filters first, search first then filter, or apply some filters before searching? The decision becomes critical to performance.

Postgres’s query planner, designed for traditional workloads, often gets this wrong because its cost model wasn’t built for vector similarity search. You end up with queries that take seconds instead of milliseconds, and the problem stays invisible until you’re dealing with production-scale data.

The Scaling Lessons Nobody Tells You

As Anup Jadhav observes, Postgres was built for structured queries, not high-dimensional vector search. This fundamental mismatch creates friction that becomes painful at scale.

The operational overhead becomes staggering:

- Index management is brutal: Rebuilds are memory-intensive, time-consuming, and disruptive

- Real-time indexing has real costs: Either in memory overhead, search quality degradation, or engineering time

- Query planning becomes witchcraft: You spend weeks tuning patterns that should work out of the box

- Cloud limitations bite hard: PGVectorScale, Timescale’s extension that improves on PGVector, isn’t available on AWS RDS

The Hidden Cost of “Simplicity”

The appeal of PGVector seems obvious: consolidation reduces operational complexity. But this assumes that complexity disappears rather than shifts. In reality, complexity transforms from managing multiple systems to solving architectural problems within a single system.

Dedicated vector databases like Pinecone, Weaviate, or Turbopuffer provide what PGVector forces you to build:

- Intelligent query planning for filtered searches

- Hybrid search out of the box

- Real-time indexing without memory spikes

- Horizontal scaling without complexity

- Monitoring designed for vector workloads

When you factor in engineering time spent tuning queries, managing index rebuilds, and debugging performance issues, dedicated vector databases often end up being cheaper than the hidden costs of PGVector.

When PGVector Actually Makes Sense

PGVector shines in specific scenarios:

- Small-scale applications: When you’re dealing with thousands, not millions, of vectors

- Prototyping: Fast iteration without infrastructure overhead

- Simple retrieval: When you don’t need complex filtering or real-time updates

- Tight integration: When your vectors genuinely need ACID transactions with your relational data

But these use cases represent a fraction of production AI applications. Most teams eventually hit the scaling wall.

The Better Alternative: Specialized Tools

Managed vector databases exist for the same reason GPU databases, time-series databases, and graph databases exist: specialized workloads benefit from specialized tools. Postgres is incredible, but it can’t be optimal for everything simultaneously.

Teams using dedicated vector databases report spending significantly less time on database tuning and more time building actual features. As Jacobs notes, “managed offerings exist for a reason.”

The Real Decision Framework

The question isn’t “can PGVector handle my workload?” The real questions are:

- What’s your tolerance for operational overhead? Are you prepared to manage index rebuilds and query tuning?

- How real-time does your search need to be? Can you tolerate eventual consistency?

- What’s your team’s database expertise? Do you have Postgres experts available?

- What’s your true total cost? Include engineering time spent fighting the database, not just infrastructure costs

For teams willing to invest the operational effort, PGVector can work. For everyone else, which includes most teams building modern AI applications, dedicated vector databases provide better performance with less headache.

The “single database” promise looks tidy on a whiteboard, but production systems don’t run on whiteboards. They run on infrastructure that needs to deliver consistent performance under real-world conditions. Sometimes, the simplest choice isn’t the simplest solution.