Database Scaling Isn't Magic. It's a Tax on Simplicity

The 10 database scaling techniques every architect must master, and why most teams implement them too late, too wrong, and at too high a cost.

Here are the 10 database scaling techniques every software architect must understand, not to pass an interview, but to keep their system alive when the traffic hits 10x.

1. Start With Indexing (Yes, Really)

Before you reach for sharding, before you spin up Redis, before you even consider microservices, optimize your queries.

This isn’t 2010. You don’t need a PhD to use EXPLAIN ANALYZE.

But you do need discipline.

The average application spends 60 to 70% of its database time on a handful of poorly indexed queries. The rest? Cache hits, idempotent reads, or irrelevant noise.

“We added a composite index on (user_id, created_at) and reduced our login latency from 800ms to 45ms.” - Engineering lead, fintech startup, 2024

Don’t index everything. Index what you query. Use tools like pg_stat_statements (PostgreSQL) or Performance Schema (MySQL) to find the slowest 5% of queries, then crush them.

The tax: You pay it in insert/update latency. But if you ignore it, you pay it in downtime when your app grinds to a halt at 8 PM on Black Friday.
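To make the composite-index idea concrete, here is a minimal sketch that uses Python's built-in SQLite as a stand-in for PostgreSQL or MySQL. The `logins` table, the index name, and the `query_plan` helper are all hypothetical, and SQLite's `EXPLAIN QUERY PLAN` is only a rough analogue of `EXPLAIN ANALYZE`.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the human-readable detail strings from SQLite's query planner."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]  # the 4th column is the plan detail text


# Hypothetical table, loosely modelled on the login example above.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE logins (id INTEGER PRIMARY KEY, user_id INTEGER, created_at TEXT)"
)
conn.executemany(
    "INSERT INTO logins (user_id, created_at) VALUES (?, ?)",
    [(i % 100, f"2024-01-{(i % 28) + 1:02d}") for i in range(1000)],
)

sql = "SELECT id FROM logins WHERE user_id = ? ORDER BY created_at DESC LIMIT 1"

# Without an index the planner has to scan the table.
plan_before = query_plan(conn, sql, (42,))

# Composite index: equality column first, then the sort column.
conn.execute("CREATE INDEX idx_logins_user_created ON logins (user_id, created_at)")
plan_after = query_plan(conn, sql, (42,))
```

Printing `plan_before` and `plan_after` should show the planner switching from a table scan to a search on `idx_logins_user_created`. On a real PostgreSQL instance you would compare `EXPLAIN ANALYZE` output the same way.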

2. Vertical Scaling Isn’t Stupid, It’s Strategic

Let’s be clear: vertical scaling is not the enemy of architecture.

It’s the only sane choice for startups and early-stage systems.

You don’t need Kubernetes to serve 100 users. You need a db.t3.medium with 2 vCPUs and 4 GiB of RAM.

Azure SQL’s Hyperscale tier, for example, lets you scale from 100 GB to 100 TB without changing your code, while keeping ACID guarantees and support for read replicas.

The problem isn’t vertical scaling. The problem is refusing to acknowledge its limits.

When you hit the vCPU ceiling of the largest VM you can provision (or afford), or your SSD IOPS max out, then you start thinking horizontally.

The alternative? You’re still running on a single node during peak traffic, praying your backup script doesn’t fail.

3. Caching Isn’t a Bonus, It’s Non-Negotiable

Caching doesn’t “help performance.” It enables performance.

At 10,000+ users, every query to the database is a potential failure point.

Enter Redis.

Deploy it early. Not as a “nice-to-have,” but as your read layer.

- User profiles? Cache them.

- Product catalog? Cache it, with a 5-minute TTL.

- Session state? Don’t store it in the DB. Store it in Redis.

“We reduced our database load by 70% with a single Redis instance. Our team didn’t even need to touch the app code.” - SRE, e-commerce platform

But cache invalidation is the real challenge. It’s famously one of the two hard problems in computer science.

Use TTLs, write-through caching, and pub/sub invalidation (Redis Streams or Pub/Sub) to keep data fresh. And never trust “eventually consistent” caches for financial transactions.
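Here is a minimal sketch of the read path described above: cache-aside reads with a TTL, plus explicit invalidation on write. `TTLCache` is a hypothetical in-memory stand-in for Redis, and `get_product` and `update_product` are illustrative names rather than a real client API. In production the same shape would sit on top of a Redis client.

```python
import time


class TTLCache:
    """In-memory stand-in for a Redis-style cache with per-key expiry."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._store = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # expired: treat as a miss
            return None
        return value

    def set(self, key, value, ttl_seconds):
        self._store[key] = (value, self._clock() + ttl_seconds)

    def delete(self, key):
        self._store.pop(key, None)


def get_product(cache, load_from_db, product_id, ttl_seconds=300):
    """Cache-aside read: try the cache, fall back to the database on a miss."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_db(product_id)
    cache.set(key, value, ttl_seconds)  # 5-minute TTL by default
    return value


def update_product(cache, write_to_db, product_id, new_value):
    """Write to the database first, then invalidate the cached copy."""
    write_to_db(product_id, new_value)
    cache.delete(f"product:{product_id}")
```

The TTL bounds how stale a read can get even if an invalidation is missed. That bound is acceptable for a catalog, which is exactly why it is not acceptable for financial transactions.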

4. Replication Is Your First Line of Defense

You don’t need sharding to handle 500k reads per second.

You need read replicas.

Azure SQL, PostgreSQL, and MySQL all support synchronous or asynchronous replication.

- Writes go to the primary.

- Reads? Load-balanced across 3 to 5 replicas.

The trade-off? Replication lag.

Typically 1 to 5 seconds. That’s fine for product listings. Not fine for “confirm payment.”

Rule: Never use a replica for operations requiring strong consistency.

Use it for user profiles, blogs, search results, anything where stale data won’t break the business.

This is how you scale reads without touching your application logic.
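The routing rule above fits in a few lines at the data-access layer. This is a sketch under assumptions: `ReplicaRouter` is a hypothetical class, and the connection handles are whatever your driver or pool gives you (plain strings are enough to show the behaviour).

```python
import itertools


class ReplicaRouter:
    """Send writes and strongly consistent reads to the primary,
    and spread all other reads across replicas in round-robin order."""

    def __init__(self, primary, replicas):
        self.primary = primary
        # itertools.cycle gives a simple round-robin over the replica list.
        self._replicas = itertools.cycle(replicas) if replicas else None

    def for_write(self):
        return self.primary

    def for_read(self, strong=False):
        # strong=True is for flows like "confirm payment" that cannot
        # tolerate replication lag; they always hit the primary.
        if strong or self._replicas is None:
            return self.primary
        return next(self._replicas)
```

For example, `router.for_read()` would back a product listing, while `router.for_read(strong=True)` would back a payment confirmation page.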

5. Sharding Is a Last Resort, And It’s a Nightmare

Sharding isn’t a scaling technique. It’s a cost center.

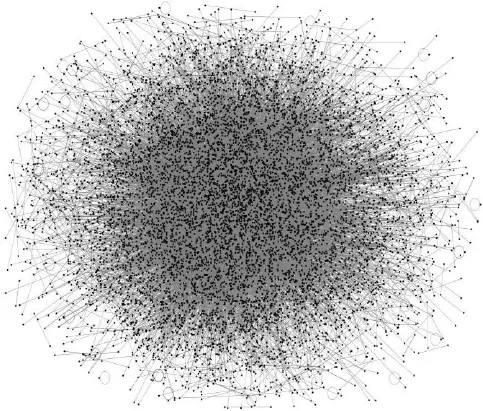

It turns your beautiful relational schema into a Frankenstein of routing logic, cross-shard joins, and distributed transactions.

You don’t shard because you’re “growing fast.”

You shard because your single database can’t handle the write load anymore.

And even then, ask yourself:

- Can you offload data to a NoSQL store? (e.g., log events to Cosmos DB)

- Can you partition by tenant? (SaaS apps)

- Can you use Azure SQL Elastic Pools to manage 100s of databases as one?

If you must shard, use consistent hashing with virtual nodes. Avoid range-based sharding: hot ranges will destroy your cluster.

And never, ever shard by user ID unless you’re building a social network with billions of users.

Most apps don’t need it. But every team thinks they do.
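If you do end up sharding, the consistent hashing with virtual nodes mentioned above can be sketched as follows. `HashRing`, the shard names, and the default of 100 virtual nodes per shard are illustrative assumptions, not a recommendation for any particular database.

```python
import bisect
import hashlib


class HashRing:
    """Consistent-hash ring where each physical node owns many virtual nodes."""

    def __init__(self, nodes=(), vnodes=100):
        self._vnodes = vnodes
        self._ring = []  # sorted list of (position, node_name)
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(key):
        # Use the first 8 bytes of SHA-256 as a 64-bit ring position.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big")

    def add_node(self, node):
        for i in range(self._vnodes):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def remove_node(self, node):
        self._ring = [entry for entry in self._ring if entry[1] != node]

    def get_node(self, key):
        if not self._ring:
            raise LookupError("ring has no nodes")
        # First virtual node at or after the key's position, wrapping around.
        idx = bisect.bisect_left(self._ring, (self._hash(key), ""))
        if idx == len(self._ring):
            idx = 0
        return self._ring[idx][1]
```

The property that matters: when a shard is added, the only keys that move are the ones that now belong to the new shard. With naive `hash(key) % shard_count` routing, almost every key would move instead.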

6. Polyglot Persistence Isn’t a Trend, It’s Survival

Stop trying to make your relational database do everything.

You’re not a hero. You’re just stubborn.

- User sessions? Redis or Cosmos DB.

- Audit logs? Write them to Azure Blob Storage or Time Series Insights.

- Product catalogs? Sure, PostgreSQL. But if they’re JSON-heavy and frequently updated, go Cosmos DB.

- Analytics? A data warehouse like Azure Synapse or Databricks.

This isn’t “architecture for the sake of complexity.” It’s selecting the right tool for the data access pattern.

- Need ACID? SQL.

- Need schema flexibility and global writes? Cosmos DB.

- Need to store petabytes of logs? S3 + Glue.

The DBA of the future doesn’t manage one database. They orchestrate ten.

7. Denormalization Is the Hidden Superpower

Normalization is a textbook ideal. Denormalization is production reality.

At scale, joins are expensive.

Instead of joining orders, users, and products on every API call, duplicate the user name and product title into the order record.

You’re trading 100GB of storage for 400ms of latency saved per request.

At 10 million daily requests? That’s roughly 1,100 hours of cumulative latency eliminated every day (10,000,000 × 0.4 s = 4,000,000 s).

“We denormalized user data into our transactional tables. Our checkout page went from 1.2s to 280ms.” - CTO, travel booking platform

Yes, you now have data duplication. Yes, you need to update it in two places. But if your system is read-heavy, this isn’t a bug. It’s a feature.
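A small sketch of what "update it in two places" looks like in practice, again using SQLite as a stand-in for your production database. The schema and the function names (`place_order`, `rename_user`, `get_order`) are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE products (id INTEGER PRIMARY KEY, title TEXT);
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        user_id INTEGER REFERENCES users(id),
        product_id INTEGER REFERENCES products(id),
        user_name TEXT,       -- denormalized copy of users.name
        product_title TEXT    -- denormalized copy of products.title
    );
""")


def place_order(conn, user_id, product_id):
    """Copy the display fields into the order row at write time."""
    with conn:  # one transaction for the lookups and the insert
        name = conn.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()[0]
        title = conn.execute(
            "SELECT title FROM products WHERE id = ?", (product_id,)
        ).fetchone()[0]
        cur = conn.execute(
            "INSERT INTO orders (user_id, product_id, user_name, product_title) "
            "VALUES (?, ?, ?, ?)",
            (user_id, product_id, name, title),
        )
        return cur.lastrowid


def rename_user(conn, user_id, new_name):
    """The cost of duplication: source row and copies change together."""
    with conn:
        conn.execute("UPDATE users SET name = ? WHERE id = ?", (new_name, user_id))
        conn.execute(
            "UPDATE orders SET user_name = ? WHERE user_id = ?", (new_name, user_id)
        )


def get_order(conn, order_id):
    """Read path: a single-table lookup with no joins."""
    return conn.execute(
        "SELECT user_name, product_title FROM orders WHERE id = ?", (order_id,)
    ).fetchone()
```

Whether the copies should follow a rename at all is a product decision. Some systems deliberately keep the name as it was when the order was placed, in which case `rename_user` would leave the `orders` table alone.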

8. Materialized Views: Precompute or Perish

You have a dashboard that runs a 12-table complex aggregation every 30 seconds?

Stop.

Use materialized views.

In PostgreSQL, they’re just CREATE MATERIALIZED VIEW, and you refresh them with REFRESH MATERIALIZED VIEW.

In BigQuery, it’s built-in.

Precompute expensive aggregations. Cache them. Serve them instantly.

This isn’t caching. It’s pre-emptive computation.

At 500k users, your analytics queries are killing your OLTP database.

Materialized views turn those queries from expensive aggregations that are recomputed on every request into cheap reads of a precomputed result.
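SQLite has no materialized views, so the sketch below only emulates the idea with a summary table that is rebuilt on demand. The table names and the `refresh_sales_by_region` function are hypothetical. In PostgreSQL the two pieces would be `CREATE MATERIALIZED VIEW` and `REFRESH MATERIALIZED VIEW`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, region TEXT, amount REAL);
    -- Stand-in for a materialized view: one precomputed row per region.
    CREATE TABLE sales_by_region (
        region TEXT PRIMARY KEY,
        order_count INTEGER,
        revenue REAL
    );
""")


def refresh_sales_by_region(conn):
    """Recompute the aggregate once, on a schedule, instead of per request."""
    with conn:  # swap the contents atomically
        conn.execute("DELETE FROM sales_by_region")
        conn.execute("""
            INSERT INTO sales_by_region (region, order_count, revenue)
            SELECT region, COUNT(*), SUM(amount) FROM orders GROUP BY region
        """)


def dashboard_revenue(conn, region):
    """Dashboard read: a primary-key lookup on the precomputed table."""
    row = conn.execute(
        "SELECT revenue FROM sales_by_region WHERE region = ?", (region,)
    ).fetchone()
    return row[0] if row else 0.0
```

The trade-off is staleness: the dashboard only sees data as of the last refresh, so the refresh interval becomes part of the feature's contract.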

9. Async Messaging: Decouple or Die

Your app has a “send welcome email” step after sign-up?

Don’t do it synchronously.

Don’t even think about it.

Use Azure Service Bus or Event Grid.

Publish a UserRegistered event. Let a background function handle the email, the analytics, the Slack alert, the new license key generation.

This isn’t about performance. It’s about resilience.

If your email service is down? The user still signs up.

If your analytics pipeline crashes? Your UI stays snappy.

You’re not building a monolith anymore. You’re building an event-driven organism.
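The decoupling can be illustrated without any cloud service. The sketch below uses an in-process queue as a stand-in for Azure Service Bus or Event Grid. `EventBus` and `register_user` are hypothetical names, and a real deployment would run the consumer in a separate worker or function rather than calling `drain()` by hand.

```python
import queue


class EventBus:
    """Tiny in-process stand-in for a message broker."""

    def __init__(self):
        self._queue = queue.Queue()
        self._handlers = {}  # event name -> list of callables
        self.failures = []   # (event name, handler name, exception)

    def subscribe(self, event_name, handler):
        self._handlers.setdefault(event_name, []).append(handler)

    def publish(self, event_name, payload):
        # Publishing only enqueues; no handler runs on the caller's path.
        self._queue.put((event_name, payload))

    def drain(self):
        """Background-worker loop: process everything currently queued."""
        while True:
            try:
                event_name, payload = self._queue.get_nowait()
            except queue.Empty:
                return
            for handler in self._handlers.get(event_name, []):
                try:
                    handler(payload)
                except Exception as exc:
                    # One failing consumer must not block the others.
                    self.failures.append((event_name, handler.__name__, exc))


def register_user(bus, users, email):
    """Sign-up succeeds as soon as the user is stored and the event is queued."""
    users.append(email)
    bus.publish("UserRegistered", {"email": email})
    return True
```

If the email handler raises, the failure is recorded for retry or dead-lettering, the analytics handler still runs, and the sign-up has already returned.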

10. Observability: You Can’t Scale What You Can’t See

You’re not going to fix a bottleneck if you don’t know it exists.

Deploy Azure Monitor and Application Insights on day one.

Set alerts for:

- P99 latency > 500ms

- Error rate > 1%

- Queue depth > 10,000 messages

- Database DTU > 80%
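The thresholds above can be written down as a simple check. This is a sketch, not how Azure Monitor evaluates alert rules: `percentile` uses the nearest-rank method, and `evaluate_alerts` and its parameter names are hypothetical.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("percentile of empty data")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def evaluate_alerts(latencies_ms, error_count, request_count, queue_depth, dtu_percent):
    """Return the names of every threshold from the list above that is breached."""
    alerts = []
    if latencies_ms and percentile(latencies_ms, 99) > 500:
        alerts.append("p99_latency")
    if request_count and error_count / request_count > 0.01:
        alerts.append("error_rate")
    if queue_depth > 10_000:
        alerts.append("queue_depth")
    if dtu_percent > 80:
        alerts.append("database_dtu")
    return alerts
```

Note that the P99 check fires even when 98% of requests are fast. That is the point of alerting on tail latency rather than the average.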

Use distributed tracing to follow a request across services.

Build an application map. See the choke points.

Without this, scaling is just guesswork.

You’re not an architect. You’re a firefighter.

Database scaling isn’t about picking the right database.

It’s about making trade-offs before you’re bleeding out.

The teams that scale gracefully aren’t the ones with the fanciest tech.

They’re the ones who:

- Started with a single database and indexed wisely.

- Used caching before they thought they needed it.

- Replicated before they had outages.

- Decoupled before they had spikes.

- Monitored before they had complaints.

And when they did hit the wall?

They didn’t panic.

They didn’t ask for “a better database.”

They asked: “What part of this system is actually broken?”

And then they fixed it: slowly, deliberately, with data, not dogma.