Java Streaming is Dead: Why Your 75GB CSV Ingestion Strategy Belongs in 2015

A brutal case study comparing Java streaming approaches against modern tools like DuckDB and Spark for massive data ingestion, revealing why traditional methods are costing you time and sanity.

When someone tells you they’re streaming 75GB CSV files into SQL using Java, you’re either talking to a masochist or someone who hasn’t checked the data engineering landscape since Hadoop was cool. One engineer’s recent battle with 16 massive CSV files reveals exactly why clinging to Java streaming approaches might be the most expensive mistake you’re making.

The 8-Day Nightmare: When Traditional Approaches Collapse

The scenario sounds familiar to anyone who’s dealt with enterprise data: 75GB CSV files needing ingestion into Microsoft SQL Server. The initial attempts read like a horror story of modern data engineering:

- Python/pandas: Immediate memory explosion

- SSIS: Crawling at unusable speeds

- Best case: 8 days per file

That’s 128 days of continuous processing for the full dataset (16 files at 8 days each). At that rate, your data is stale before it even lands in the database. The engineer’s solution? A custom Java streaming approach using InputStream, BufferedReader, and parallel threads that brought processing time down to 90 minutes per file, or roughly 24 hours for all 16.
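The engineer’s actual code isn’t shown, so what follows is only a minimal sketch of the general shape described: a BufferedReader streaming lines, batches handed to a fixed thread pool, and a semaphore so the reader can’t race ahead of the workers and pile batches up in memory. The class name, the `loadBatch` hook (a hypothetical stand-in for a real bulk insert into SQL Server), and the batch size, thread count, and in-flight limit are all illustrative assumptions.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Consumer;

final class StreamingCsvLoader {

    /**
     * Streams data rows (the header is skipped), groups them into batches of at most
     * batchSize, and runs loadBatch for each batch on a fixed pool of worker threads.
     * At most maxInFlight batches exist at once, so memory stays bounded for any file size.
     * Returns the number of data rows read; rethrows the first exception a worker hit.
     */
    static long load(Reader source, int batchSize, int threads, int maxInFlight,
                     Consumer<List<String>> loadBatch) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        Semaphore inFlight = new Semaphore(maxInFlight);
        AtomicReference<RuntimeException> firstError = new AtomicReference<>();
        long rows = 0;
        try (BufferedReader reader = new BufferedReader(source, 1 << 16)) {
            reader.readLine(); // skip the header row
            List<String> batch = new ArrayList<>(batchSize);
            String line;
            while ((line = reader.readLine()) != null && firstError.get() == null) {
                batch.add(line);
                rows++;
                if (batch.size() == batchSize) {
                    submit(pool, inFlight, batch, loadBatch, firstError);
                    batch = new ArrayList<>(batchSize); // never reuse a list a worker may still hold
                }
            }
            if (!batch.isEmpty() && firstError.get() == null) {
                submit(pool, inFlight, batch, loadBatch, firstError);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while reading", e);
        } finally {
            pool.shutdown(); // accept no new batches; already-submitted ones still run
        }
        try {
            while (!pool.awaitTermination(1, TimeUnit.MINUTES)) {
                // keep waiting for in-flight batches to finish
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        RuntimeException failure = firstError.get();
        if (failure != null) {
            throw failure;
        }
        return rows;
    }

    private static void submit(ExecutorService pool, Semaphore inFlight, List<String> batch,
                               Consumer<List<String>> loadBatch,
                               AtomicReference<RuntimeException> firstError)
            throws InterruptedException {
        inFlight.acquire(); // blocks the reader while workers are behind (backpressure)
        pool.execute(() -> {
            try {
                loadBatch.accept(batch);
            } catch (RuntimeException e) {
                firstError.compareAndSet(null, e); // remember only the first failure
            } finally {
                inFlight.release();
            }
        });
    }

    public static void main(String[] args) {
        StringBuilder csv = new StringBuilder("id,name\n");
        for (int i = 1; i <= 25_000; i++) {
            csv.append(i).append(",row").append(i).append('\n');
        }
        AtomicLong loaded = new AtomicLong();
        // The lambda is a hypothetical stand-in for a real bulk insert of one batch.
        long rows = load(new StringReader(csv.toString()), 10_000, 4, 8,
                batch -> loaded.addAndGet(batch.size()));
        System.out.println(rows + " rows read, " + loaded.get() + " rows loaded");
    }
}
```

Even this stripped-down version has to make explicit decisions about backpressure, error propagation, and shutdown, which is exactly the plumbing the tools discussed below take off your hands.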

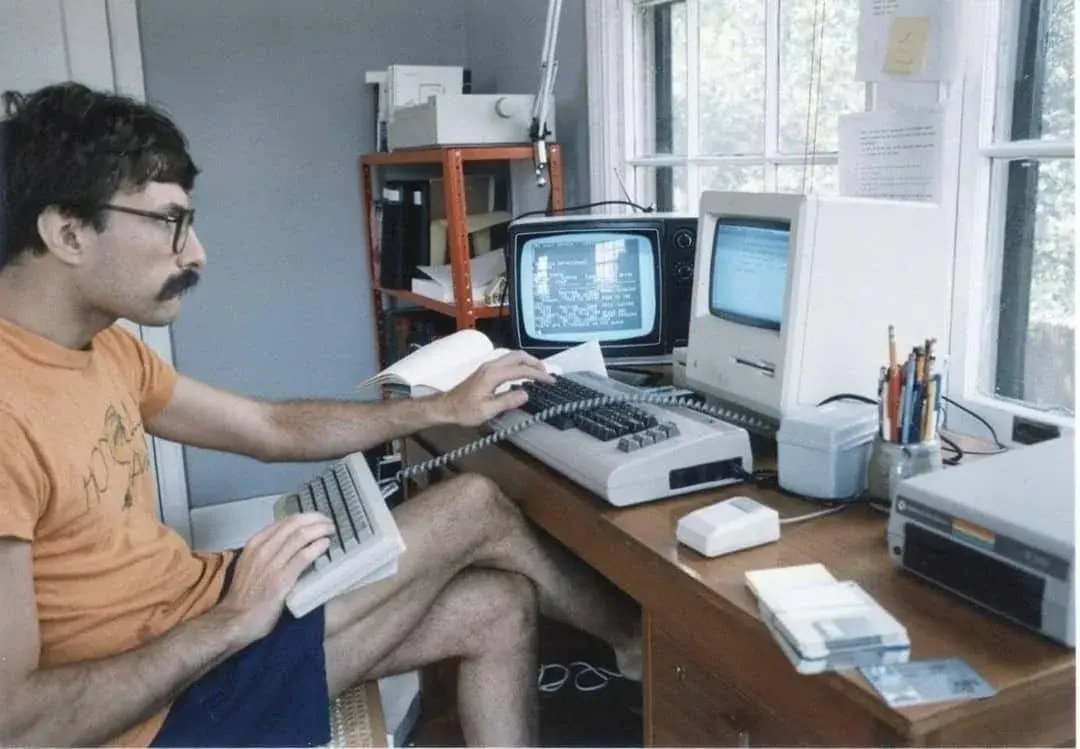

But here’s the uncomfortable truth: spending weeks building custom Java streaming solutions in 2025 is like building your own database from scratch. It might work, but you’re solving problems that the industry already solved years ago.

The Modern Tool Stack: Where 90 Minutes Becomes Minutes

While our Java hero was optimizing thread pools and buffer sizes, the data engineering world moved on. The recent AWS Glue Zero-ETL integration with the Salesforce Bulk API demonstrates what modern ingestion looks like: 10 million records processed in 6 minutes and 20 seconds, compared to 28 minutes and 53 seconds through the traditional REST API.

That’s a 4.6x speedup (1,733 seconds down to 380) without writing a single line of streaming code. The Bulk API processes data in batches that can be parallelized, with limits of up to 150,000,000 records per 24-hour period. Each batch handles up to 10,000 records, making the 75GB CSV problem look almost quaint.
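These figures are easy to sanity-check: 28 minutes 53 seconds is 1,733 seconds, 6 minutes 20 seconds is 380 seconds, and their ratio is about 4.56; at 10,000 records per batch, 10 million records is 1,000 batches. The sketch below only does that arithmetic and shows the batch split. It does not call any Salesforce or AWS API, and the class and method names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

final class BulkBatchMath {
    static final int MAX_RECORDS_PER_BATCH = 10_000; // per-batch cap quoted in the article

    /** Ceiling division: how many batches a record count needs at a given batch size. */
    static long batchesNeeded(long records, int perBatch) {
        return (records + perBatch - 1) / perBatch;
    }

    /** Splits records into consecutive views of at most perBatch elements each. */
    static <T> List<List<T>> partition(List<T> records, int perBatch) {
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < records.size(); start += perBatch) {
            batches.add(records.subList(start, Math.min(start + perBatch, records.size())));
        }
        return batches;
    }

    /** Ratio of two wall-clock durations given as minutes and seconds. */
    static double speedup(int slowMin, int slowSec, int fastMin, int fastSec) {
        return (slowMin * 60.0 + slowSec) / (fastMin * 60.0 + fastSec);
    }

    public static void main(String[] args) {
        System.out.println(batchesNeeded(10_000_000L, MAX_RECORDS_PER_BATCH)); // 1000
        System.out.printf(Locale.ROOT, "%.2f%n", speedup(28, 53, 6, 20)); // 4.56
    }
}
```

Because each batch is independent, the list returned by `partition` is exactly the unit a parallel loader would fan out across workers.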

DuckDB: The Silent CSV Assassin

Alternative approaches suggested in the discussion surfaced the tool that’s quietly revolutionizing file-based data processing: DuckDB. Where our Java engineer wrote custom streaming logic, DuckDB can handle the same ingestion with a few SQL statements.

DuckDB’s vectorized columnar execution engine processes CSV files at speeds that make traditional row-by-row streaming look archaic. It handles memory management automatically, spills to disk when necessary, and provides full SQL functionality during ingestion.
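To make “vectorized columnar execution” less abstract, here is a toy Java illustration of the idea, not of DuckDB’s engine (which is C++ and does far more): values from one column are collected into a primitive array, and an operator (here, a sum) runs over a whole chunk at a time instead of once per row object. The chunk size of 2048 is an assumption chosen to mirror DuckDB’s default vector size, and the two-column `id,amount` layout is hypothetical.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

final class VectorizedSum {
    // Assumption: 2048 mirrors DuckDB's default vector size; any few-thousand value works here.
    static final int VECTOR_SIZE = 2048;

    /** Sums the second column of "id,amount" rows, filling and consuming one column vector at a time. */
    static double sumAmounts(Iterator<String> rows) {
        double[] amounts = new double[VECTOR_SIZE]; // one reusable column buffer, no per-row objects kept
        double total = 0;
        int n = 0;
        while (rows.hasNext()) {
            String row = rows.next();
            amounts[n++] = Double.parseDouble(row.substring(row.indexOf(',') + 1));
            if (n == VECTOR_SIZE) { // vector full: run the operator over the whole chunk
                total += sumVector(amounts, n);
                n = 0;
            }
        }
        return total + sumVector(amounts, n); // final, partially filled vector
    }

    /** The "operator": one tight loop over a primitive array instead of one call per row. */
    static double sumVector(double[] column, int length) {
        double sum = 0;
        for (int i = 0; i < length; i++) {
            sum += column[i];
        }
        return sum;
    }

    public static void main(String[] args) {
        List<String> rows = new ArrayList<>();
        for (int i = 1; i <= 5_000; i++) {
            rows.add(i + "," + i);
        }
        System.out.println(sumAmounts(rows.iterator()));
    }
}
```

The parsing here is still row-by-row; a real vectorized engine applies the same chunked treatment to parsing, filtering, and casting as well.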

The irony? DuckDB is written in C++, but you’d never know it from the interface. The days of worrying about InputStream implementations and buffer sizes are over for most data ingestion scenarios.

Spark: When You Really Need Nuclear Options

For the “16 more files like it” scenario, Spark remains the industrial-grade solution. While often considered overkill for single files, Spark shines when you have:

- Multiple large files needing parallel processing

- Complex transformations during ingestion

- A need for distributed fault tolerance

- Future scalability requirements

The key advantage isn’t just raw speed; it’s the built-in resilience and monitoring. When a Java streaming loader without restart logic fails at record 45,678,234 of a 75GB file, you get to start over. When a Spark task fails, Spark reruns just that task rather than the whole job, and Structured Streaming jobs can resume from their last checkpoint.
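To be fair to Java, “start over” only applies if nobody wrote restart logic, but that logic is yours to build. A minimal sketch of what it involves, assuming a hypothetical `commitBatch` hook that loads one batch (ideally in one database transaction) and a small checkpoint file that records how many data rows have been committed so far:

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

final class CheckpointedLoader {

    /** Rows committed by earlier runs, or 0 when no checkpoint file exists yet. */
    static long readCheckpoint(Path checkpoint) throws IOException {
        if (!Files.exists(checkpoint)) {
            return 0L;
        }
        return Long.parseLong(new String(Files.readAllBytes(checkpoint), StandardCharsets.UTF_8).trim());
    }

    /** Writes to a temp file and renames it, so a crash never leaves a half-written number. */
    static void writeCheckpoint(Path checkpoint, long committedRows) throws IOException {
        Path tmp = checkpoint.resolveSibling(checkpoint.getFileName() + ".tmp");
        Files.write(tmp, Long.toString(committedRows).getBytes(StandardCharsets.UTF_8));
        Files.move(tmp, checkpoint, StandardCopyOption.REPLACE_EXISTING, StandardCopyOption.ATOMIC_MOVE);
    }

    /**
     * Loads the data rows after the header in batches, recording progress after every batch.
     * On restart, rows already covered by the checkpoint are read and skipped, not reloaded.
     * Returns the total number of rows committed across all runs.
     */
    static long load(Path csv, Path checkpoint, int batchSize, Consumer<List<String>> commitBatch)
            throws IOException {
        long committed = readCheckpoint(checkpoint);
        try (BufferedReader reader = Files.newBufferedReader(csv, StandardCharsets.UTF_8)) {
            reader.readLine(); // header
            for (long i = 0; i < committed; i++) {
                if (reader.readLine() == null) {
                    return committed; // checkpoint already covers the whole file
                }
            }
            List<String> batch = new ArrayList<>(batchSize);
            String line;
            while ((line = reader.readLine()) != null) {
                batch.add(line);
                if (batch.size() == batchSize) {
                    committed = commit(batch, committed, checkpoint, commitBatch);
                    batch = new ArrayList<>(batchSize);
                }
            }
            if (!batch.isEmpty()) {
                committed = commit(batch, committed, checkpoint, commitBatch);
            }
        }
        return committed;
    }

    private static long commit(List<String> batch, long committed, Path checkpoint,
                               Consumer<List<String>> commitBatch) throws IOException {
        commitBatch.accept(batch);          // hypothetical hook: load this batch
        long total = committed + batch.size();
        writeCheckpoint(checkpoint, total); // recorded only after the batch succeeded
        return total;
    }
}
```

Two caveats the sketch glosses over: resuming still re-reads the skipped lines (tracking a byte offset would avoid that), and a crash between committing a batch and writing the checkpoint loads that batch twice unless the inserts are idempotent or the checkpoint lives in the same database transaction. Details like these are why hand-rolled recovery takes longer than it looks.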

The Hidden Costs of Custom Java Solutions

The subtle traps in custom streaming approaches:

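Parsing and insert bottlenecks: As one commenter noted, “Did you benchmark where the bottleneck is? I’d assume that such massive bulk inserts start to also hit performance quite a bit.” The slow stage may be the hand-written CSV parsing or the inserts on the database side, and row-by-row inserts are typically far slower than a database’s native bulk-load path (for SQL Server, BULK INSERT or the bcp utility).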
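Answering the commenter’s question doesn’t require a profiler to get started: time the parse stage and the write stage separately for each batch and see which one dominates. A rough sketch with hypothetical names; it is not a rigorous benchmark (no JIT warm-up, no statistics), and a harness such as JMH is the usual tool when the numbers need to be trusted.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.function.Consumer;
import java.util.function.Function;

final class StageTimer {
    private long parseNanos;
    private long writeNanos;
    private long rows;

    /** Parses one batch, then writes it, timing the two stages separately. */
    <T> void runBatch(List<String> lines, Function<String, T> parse, Consumer<List<T>> write) {
        long t0 = System.nanoTime();
        List<T> parsed = new ArrayList<>(lines.size());
        for (String line : lines) {
            parsed.add(parse.apply(line));
        }
        long t1 = System.nanoTime();
        write.accept(parsed);
        long t2 = System.nanoTime();
        parseNanos += t1 - t0;
        writeNanos += t2 - t1;
        rows += lines.size();
    }

    long rows() {
        return rows;
    }

    /** Fraction of the measured time spent parsing, between 0.0 and 1.0. */
    double parseShare() {
        long total = parseNanos + writeNanos;
        return total == 0 ? 0.0 : (double) parseNanos / total;
    }

    String report() {
        return String.format(Locale.ROOT, "rows=%d parse=%.1f ms write=%.1f ms (parse share %.0f%%)",
                rows, parseNanos / 1e6, writeNanos / 1e6, parseShare() * 100);
    }

    public static void main(String[] args) {
        List<String> batch = new ArrayList<>();
        for (int i = 0; i < 10_000; i++) {
            batch.add(i + ",name" + i + "," + (i * 0.5));
        }
        Function<String, String[]> splitFields = line -> line.split(",");
        StageTimer timer = new StageTimer();
        for (int round = 0; round < 20; round++) {
            // The empty consumer is a stand-in; plug the real insert in here to see its cost.
            timer.runBatch(batch, splitFields, parsed -> { });
        }
        System.out.println(timer.report());
    }
}
```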

Network limitations: “At some point you will be limited by network speed as well, there is only so much data you can transfer with a single connection to your db.” Modern tools handle connection pooling and parallel transfers automatically.

Error handling: “It also lets you checkpoint as you go because there are always messed up lines.” Building robust error handling and recovery in custom code adds weeks to development time.
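The “messed up lines” problem is usually handled by quarantining bad rows instead of aborting the whole file. A minimal sketch, assuming a simple comma-separated layout with no quoted fields and a numeric first column (both hypothetical validation rules chosen for illustration):

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class RejectingParser {

    /** Rows that passed validation, plus one human-readable reason per rejected line. */
    static final class Result {
        final List<String[]> accepted = new ArrayList<>();
        final List<String> rejected = new ArrayList<>();
    }

    /**
     * Validates each data line (header already removed) and quarantines bad ones instead of
     * aborting. Rules here are illustrative: exactly expectedFields comma-separated fields and
     * a numeric first column. A plain split does not handle quoted fields containing commas.
     */
    static Result parse(List<String> dataLines, int expectedFields) {
        Result result = new Result();
        for (int i = 0; i < dataLines.size(); i++) {
            long lineNo = i + 2L; // 1-based file line number, counting the header as line 1
            String[] fields = dataLines.get(i).split(",", -1); // -1 keeps trailing empty fields
            if (fields.length != expectedFields) {
                result.rejected.add("line " + lineNo + ": expected " + expectedFields
                        + " fields, found " + fields.length);
            } else if (!isLong(fields[0])) {
                result.rejected.add("line " + lineNo + ": non-numeric id '" + fields[0] + "'");
            } else {
                result.accepted.add(fields);
            }
        }
        return result;
    }

    private static boolean isLong(String s) {
        try {
            Long.parseLong(s.trim());
            return true;
        } catch (NumberFormatException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("1,alice,30", "2,bob", "three,carol,41", "4,dave,52");
        Result result = parse(lines, 3);
        System.out.println(result.accepted.size() + " rows accepted");
        result.rejected.forEach(System.out::println);
    }
}
```

In a real loader the rejected list would be appended to a side file as you go, so a 75GB run finishes and the bad rows can be fixed and reloaded separately.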

When Java Streaming Actually Makes Sense

Before we completely bury Java streaming, there are scenarios where it still wins:

- Extreme custom formatting: When CSVs contain non-standard encoding or bizarre formatting that off-the-shelf tools can’t handle

- Real-time streaming: When you need to process data as it arrives rather than batch processing

- Embedded systems: Where you can’t install additional tools or dependencies

- Legacy environment constraints: When you’re stuck with JDK 8 and no permission to install anything new
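As one concrete, hypothetical case for the first bullet: a file where some lines are UTF-8 and others were written as Latin-1 (ISO-8859-1). A whole-file decoder typically picks one encoding and mangles or rejects the rest, while a hand-rolled reader can decide line by line. A minimal sketch, assuming "\n" line endings and an ASCII-compatible byte encoding (it would not work for UTF-16). Because it hands each line to a callback as soon as its newline arrives, the same loop would also work on a network stream for the real-time case.

```java
import java.io.BufferedInputStream;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.ByteBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.CharsetDecoder;
import java.nio.charset.CodingErrorAction;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.function.Consumer;

final class MixedEncodingLines {

    /** Decodes one line as strict UTF-8 and falls back to ISO-8859-1 when the bytes are not valid UTF-8. */
    static String decodeLine(byte[] bytes) {
        CharsetDecoder utf8 = StandardCharsets.UTF_8.newDecoder()
                .onMalformedInput(CodingErrorAction.REPORT)
                .onUnmappableCharacter(CodingErrorAction.REPORT);
        try {
            return utf8.decode(ByteBuffer.wrap(bytes)).toString();
        } catch (CharacterCodingException e) {
            return new String(bytes, StandardCharsets.ISO_8859_1); // maps every byte, so it cannot fail
        }
    }

    /**
     * Streams newline-terminated lines from raw bytes, choosing the encoding per line, and hands
     * each decoded line to onLine as soon as it is complete. Returns the number of lines delivered.
     */
    static long forEachLine(InputStream in, Consumer<String> onLine) throws IOException {
        long count = 0;
        ByteArrayOutputStream current = new ByteArrayOutputStream();
        try (InputStream buffered = new BufferedInputStream(in, 1 << 16)) {
            int b;
            while ((b = buffered.read()) != -1) {
                if (b == '\n') {
                    onLine.accept(decodeLine(stripCarriageReturn(current.toByteArray())));
                    current.reset();
                    count++;
                } else {
                    current.write(b);
                }
            }
        }
        if (current.size() > 0) { // last line without a trailing newline
            onLine.accept(decodeLine(stripCarriageReturn(current.toByteArray())));
            count++;
        }
        return count;
    }

    private static byte[] stripCarriageReturn(byte[] line) {
        boolean crlf = line.length > 0 && line[line.length - 1] == '\r';
        return crlf ? Arrays.copyOf(line, line.length - 1) : line;
    }

    public static void main(String[] args) throws IOException {
        // The same word, once as UTF-8 (bytes C3 A9) and once as Latin-1 (byte E9), in one stream
        byte[] data = {'1', ',', 'c', 'a', 'f', (byte) 0xC3, (byte) 0xA9, '\n',
                       '2', ',', 'c', 'a', 'f', (byte) 0xE9, '\r', '\n'};
        forEachLine(new ByteArrayInputStream(data), System.out::println);
    }
}
```

Reading one byte at a time through BufferedInputStream keeps the sketch simple rather than fast; a production version would scan larger buffers for newline bytes.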

But for most enterprise data ingestion? You’re better served by modern tools.

The Verdict: Stop Reinventing the Wheel

The 75GB CSV ingestion problem represents a classic case of engineers reaching for familiar tools rather than appropriate ones. The Java solution worked, but at what cost?

- Weeks of development time versus minutes of configuration

- Ongoing maintenance burden versus managed services

- Limited scalability versus built-in distributed processing

- Custom error handling versus battle-tested resilience

The data engineering landscape has evolved past the point where building custom ingestion pipelines from scratch makes economic sense. Between DuckDB for single-machine processing, Spark for distributed workloads, and cloud services like AWS Glue for managed solutions, the tools exist to make 75GB CSV files feel routine rather than heroic.

The next time you face a massive data ingestion challenge, ask yourself: are you solving a data problem or exercising your programming skills? The answer might save you weeks of development time and your company thousands in cloud bills.