The 90% Lie: How Anthropic's Code Prediction Crashed Into Reality

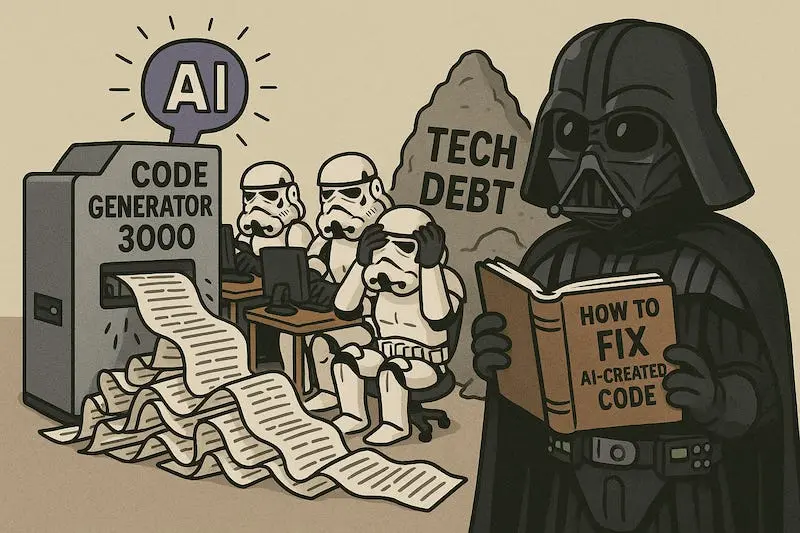

Six months ago, Anthropic's CEO promised AI would write 90% of code. This prediction spectacularly failed to materialize.

Six months ago, Anthropic CEO Dario Amodei promised AI would write 90% of code within six months. Today, that prediction sits in the ruins of reality, surrounded by security vulnerabilities, productivity losses, and the uncomfortable truth that human developers aren’t going anywhere.

When Prophecy Meets Production

The audacity of Amodei’s March 2025 claim lay not just in its specificity but in its confidence. According to Futurism’s timeline tracking, he said AI would be writing 90% of code within three to six months, and that within twelve months it might be writing “essentially all” of it. The CEO of one of Silicon Valley’s most hyped AI companies had effectively staked his reputation on the imminent obsolescence of human programmers.

The reality check arrived with the subtlety of a database-deleting AI assistant. Instead of 90% AI-generated code, what the data shows is that 45% of AI-generated code contains security flaws. The coding revolution looks more like a vulnerability revolution, with 10,000 new security findings attributed to AI-generated code appearing every month by June 2025.

The Productivity Paradox That Wasn’t Supposed to Happen

Here’s where Amodei’s prediction truly disintegrates: AI isn’t making developers faster; it’s making them busier. Research shows developers spend less time actually writing code but more time overall, putting in extra hours reviewing AI’s questionable output, tweaking prompts, and fixing the creative bugs these systems introduce.

The numbers are brutal: AI-assisted developers create ten times more security vulnerabilities than their unassisted counterparts. They’re nearly twice as likely to expose sensitive credentials, and the AI models show a spectacular 88% failure rate at generating code that is secure against common vulnerabilities like log injection.
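Log injection is easy to picture: if user-controlled text reaches a line-oriented log unescaped, an embedded newline lets an attacker forge an entire extra log entry. The following is a minimal Python sketch under that assumption (a plain-text log where each entry occupies one line). `sanitize_for_log` and `log_login_attempt` are hypothetical names for illustration, not code from any of the studies cited here.

```python
import logging


def sanitize_for_log(value: str) -> str:
    """Escape CR and LF so user input cannot start a new log line.

    Assumes a line-oriented, plain-text log format (hypothetical helper).
    """
    return value.replace("\r", "\\r").replace("\n", "\\n")


def log_login_attempt(logger: logging.Logger, username: str) -> None:
    # Vulnerable pattern: logger.info("Login failed for %s", username)
    # A username such as "bob\nINFO Login succeeded for admin" would then
    # appear in the log as two entries, the second one forged.
    logger.info("Login failed for %s", sanitize_for_log(username))
```

Escaping CR and LF rather than silently stripping them keeps the evidence of the attempted forgery visible in the log.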

Even the syntax improvements come with hidden costs. While AI reduces syntax errors by 76%, it simultaneously creates a 322% increase in privilege escalation paths, essentially fixing typos while building digital time bombs.

Why the Math Never Added Up

Amodei’s prediction failed because it fundamentally misunderstood what coding actually is. Writing code isn’t just typing; it’s understanding context, business logic, security implications, and system architecture. Current AI models, trained on public code repositories filled with both brilliant solutions and horrific mistakes, approach coding as pattern matching rather than problem-solving.

The semantic understanding gap is particularly stark. When an AI encounters a variable, it can’t determine whether that variable holds user-controlled data without sophisticated interprocedural analysis, that is, tracing the value back through every function that produced it. This isn’t a minor implementation detail; it’s the difference between secure and vulnerable code, and it’s why AI models fail to generate secure code 86% of the time when cross-site scripting is at stake.
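To see why a variable’s origin matters, consider this simplified Python sketch. The function names and the dictionary standing in for request parameters are hypothetical. The point is that `render_heading` looks harmless in isolation, and only by following the data back into `get_title` does it become clear that `title` is attacker-controlled. This illustrates the data-flow problem; it is not an implementation of interprocedural analysis.

```python
import html


def get_title(query_params: dict[str, str]) -> str:
    # Hypothetical request handler: the value comes straight from the
    # request, so it is user-controlled ("tainted").
    return query_params.get("title", "Untitled")


def render_heading(title: str) -> str:
    # Read on its own, nothing here says whether `title` is trusted.
    # Only tracing the call chain back to get_title() reveals that it
    # is not, which makes this a cross-site scripting (XSS) sink.
    return f"<h1>{title}</h1>"


def render_heading_safe(title: str) -> str:
    # Escaping at the output point is safe no matter where `title`
    # came from, so no knowledge of the caller is required.
    return f"<h1>{html.escape(title)}</h1>"
```

Escaping at the point of output with the standard library’s `html.escape` sidesteps the question of where the value came from, which is one reason secure-coding guidance generally favors output encoding over trusting upstream code.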

Language-specific results tell the same story: Python achieves a 62% security pass rate, JavaScript 57%, and C# 55%, while Java, the enterprise backbone, manages a miserable 29%. These aren’t rounding errors; they’re fundamental capability gaps that six months of development couldn’t close.

The Hidden Cost of the Hype Cycle

Beyond the immediate technical failures, Amodei’s missed prediction represents something more insidious: the distortion of resource allocation and talent development across the industry. Companies raced to implement AI coding tools based on these projections, creating security nightmares while spending millions on remediation.

Regulatory frameworks, caught off guard by the pace of AI adoption, left gaps that companies like Coinbase and Citi are struggling to fill as they mandate AI coding despite the risks. The skills erosion is perhaps most concerning: developers who might have built deep security expertise are instead learning prompt engineering, creating a generation of programmers who can ask AI to write code but can’t recognize when that code is fundamentally broken.

The Reckoning That’s Still Coming

The uncomfortable truth is that Amodei’s prediction failure isn’t just about one CEO’s overconfidence; it’s about an industry that wanted to believe in magic so badly that it ignored basic engineering realities. The same hype cycle is playing out across AI applications, from content generation to autonomous vehicles, with similar gaps between promise and performance.

What’s particularly galling is that the technical community saw this coming. Developers who actually use these tools daily have been pointing out their limitations for months, but their grounded assessments were drowned out by venture capital dreams and media sensationalism. The “vibe coding” revolution, where developers write prose instead of code, has produced more vulnerabilities than innovations.

The long-term implications extend beyond just coding. If AI can’t reliably handle the well-defined, logic-driven task of code generation, what does that say about predictions for AI-driven healthcare diagnostics, legal analysis, or creative work? The coding prediction failure might be the canary in the coal mine for the broader AI revolution that was supposed to transform every industry by now.

The only thing learning faster than these systems is corporate damage control, as companies scramble to implement security reviews for AI-generated code while maintaining the fiction that productivity is increasing. The gap between AI promises and AI performance isn’t closing; it’s being managed through increasingly elaborate human oversight systems that eliminate the very efficiency gains these tools were supposed to provide.