Apple M5 Drops the Gauntlet in On-Device AI

M5's 3.5x AI performance leap and 153GB/s memory bandwidth reshape local LLM economics, but are they enough to dethrone PC builds?

Apple’s M5 announcement didn’t just evolve its silicon lineup; it set fire to the competitive landscape for local AI workloads. The base M5 chip brings a claimed 3.5x AI performance improvement over the M4, coupled with 153GB/s of memory bandwidth, forcing a hard look at whether PC builds still dominate the local LLM arena. The real question isn’t whether Apple improved its hardware (it clearly did) but whether these gains change the practical equation for developers running models locally.

Memory Architecture: The Silent Winner

The M5’s unified memory bandwidth jump to 153GB/s represents more than just a roughly 30% bump over its predecessor. Because the CPU and GPU share a single memory pool, the architecture avoids the CPU↔GPU transfer bottlenecks that plague discrete GPU setups, where precious milliseconds evaporate shuffling model weights between components.

For local LLM inference, memory bandwidth isn’t just another spec; it’s the primary constraint. When you’re feeding 7-billion-parameter models through inference engines, the speed at which you can move weights from memory to compute determines whether your chatbot responds in seconds or leaves users staring at loading indicators. The M5’s unified approach means the CPU, GPU, and Neural Engine all access the same memory space without a copy penalty, something a discrete GPU setup, with its separate pools of system RAM and VRAM, can’t offer once a model spills out of VRAM.
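To make the bandwidth argument concrete, here is a rough back-of-the-envelope sketch. It assumes token generation is purely bandwidth-bound, meaning every weight is read from memory once per generated token, and it ignores KV cache traffic, compute limits, and runtime overhead. The function name and the quantization levels are illustrative choices, not anything Apple has published, and the results are theoretical ceilings rather than benchmark numbers.

```python
def decode_tokens_per_second(bandwidth_gb_s: float,
                             params_billions: float,
                             bytes_per_param: float) -> float:
    """Upper bound on decode speed if each weight is read once per token."""
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB = size in GB
    model_gb = params_billions * bytes_per_param
    return bandwidth_gb_s / model_gb


M5_BANDWIDTH_GB_S = 153.0  # figure quoted in the article

# Assumed quantization levels for a 7B model (illustrative only)
for label, bytes_per_param in [("FP16", 2.0), ("8-bit", 1.0), ("4-bit", 0.5)]:
    ceiling = decode_tokens_per_second(M5_BANDWIDTH_GB_S, 7.0, bytes_per_param)
    print(f"7B @ {label}: ~{ceiling:.1f} tokens/s theoretical ceiling")
```

Real throughput will land below these ceilings, but the shape of the relationship holds: halving the bytes per weight or raising bandwidth lifts the ceiling proportionally.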

The Practical Math: M5 vs. Discrete GPUs

Let’s do the real-world comparison that matters. A mid-range PC build with a modern GPU might offer more raw TFLOPS, but the economics tell a different story:

- Power Consumption: An M5 MacBook Pro sips 20-30W under full AI load versus 300-450W for a desktop GPU build

- Total Cost: $1,600 for the base M5 MacBook Pro versus $2,500+ for a competitive AI workstation

- Portability: The MacBook Pro works anywhere versus being tethered to a desk

- Thermal Management: The laptop’s built-in cooling handles most workloads versus liquid cooling complexity
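The power figures in the list can be turned into a rough running-cost estimate. The sketch below uses the wattage ranges quoted above; the daily usage hours and the electricity price are assumptions chosen purely for illustration, so substitute your own.

```python
def annual_energy_cost(watts: float, hours_per_day: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost for a device drawing `watts` under load."""
    kwh_per_year = watts / 1000.0 * hours_per_day * 365
    return kwh_per_year * usd_per_kwh


HOURS_PER_DAY = 4.0   # assumption: hours of full AI load per day
USD_PER_KWH = 0.15    # assumption: electricity price, varies widely by region

# Wattage ranges as quoted in the list above
SYSTEMS = {
    "M5 MacBook Pro": (20.0, 30.0),
    "Desktop GPU build": (300.0, 450.0),
}

for name, (low_w, high_w) in SYSTEMS.items():
    low = annual_energy_cost(low_w, HOURS_PER_DAY, USD_PER_KWH)
    high = annual_energy_cost(high_w, HOURS_PER_DAY, USD_PER_KWH)
    print(f"{name}: ${low:.2f} to ${high:.2f} per year")
```

The ratio between the two systems depends only on the wattage, while the absolute dollar gap scales with whatever usage and price you plug in.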

The prevailing sentiment among developers is that the base M5’s 16GB memory ceiling feels limiting for serious workloads, while the Pro and Max variants promise to deliver the real punch. As one forum participant noted, serious inference enthusiasts should probably wait for those higher-tier configurations rather than jumping on the entry-level offering.

Performance Reality Check

Apple’s benchmark showing a 3.5x speedup in prompt processing comes with important caveats. The testing methodology reportedly includes model loading times, which means the actual computational improvements might be less dramatic than the headline numbers suggest. Still, even accounting for measurement quirks, the performance-per-watt advantage remains substantial.
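A small sketch shows why folding load time into a benchmark can blur the picture. All timings below are made-up illustrative values, not measurements of any Apple chip; they are chosen only so that the end-to-end ratio lands on 3.5x while the compute-only ratio comes out lower.

```python
def speedups(old_load: float, old_compute: float,
             new_load: float, new_compute: float) -> tuple[float, float]:
    """Return (end-to-end speedup, compute-only speedup) from timings in seconds."""
    end_to_end = (old_load + old_compute) / (new_load + new_compute)
    compute_only = old_compute / new_compute
    return end_to_end, compute_only


# Hypothetical timings in seconds (NOT real benchmark data)
end_to_end, compute_only = speedups(
    old_load=2.0, old_compute=5.0,   # older chip: load, then prompt processing
    new_load=0.5, new_compute=1.5,   # newer chip: both phases faster
)
print(f"end-to-end speedup:   {end_to_end:.2f}x")
print(f"compute-only speedup: {compute_only:.2f}x")
```

If load time shrinks faster than compute time, the headline ratio overstates the compute gain; if load time stays constant, it understates it. Without the raw phase timings, the headline number alone cannot tell you which case applies.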

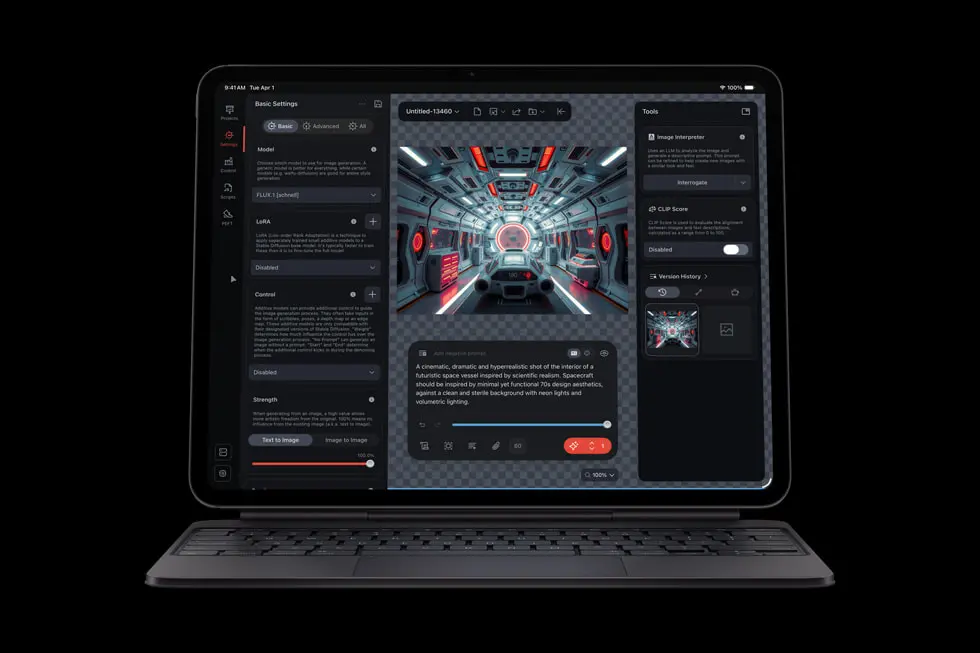

The GPU compute improvements, a claimed 4x peak performance uplift, suggest Apple is finally taking AI inference seriously enough to dedicate silicon specifically to matrix operations. This isn’t gaming hardware repurposed for AI workloads; it’s purpose-built architecture optimized for the transformer models dominating contemporary AI applications.

The Waiting Game for Pro Variants

The most telling reaction across technical communities has been the collective hesitation about the base model. At $1,600 for a 16GB configuration, the entry M5 feels like Apple’s way of teasing what’s coming rather than delivering the definitive solution. Developers working with 30B+ parameter models need more breathing room, and the consensus suggests waiting for M5 Pro and Max variants with higher memory ceilings.
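The memory squeeze is easy to check with simple arithmetic. The sketch below estimates the weight footprint of a model at a given quantization and compares it with a memory budget. The `usable_fraction` parameter is a hypothetical allowance for the operating system, KV cache, and other applications, not an Apple-documented limit.

```python
def weights_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate size of the model weights in GB."""
    return params_billions * bits_per_param / 8


def fits(params_billions: float, bits_per_param: float,
         memory_gb: float, usable_fraction: float = 0.75) -> bool:
    """True if the weights fit in the assumed usable share of memory."""
    # usable_fraction is an assumption, not a documented system limit
    return weights_gb(params_billions, bits_per_param) <= memory_gb * usable_fraction


BASE_M5_MEMORY_GB = 16.0  # base configuration cited in the article

for params, bits in [(7, 16), (7, 4), (30, 4)]:
    size = weights_gb(params, bits)
    verdict = "fits" if fits(params, bits, BASE_M5_MEMORY_GB) else "does not fit"
    print(f"{params}B @ {bits}-bit: {size:.1f} GB of weights, {verdict} in 16 GB")
```

Under these assumptions a 30B model at 4-bit needs about 15 GB for weights alone, which leaves almost nothing for context on a 16GB machine and explains the advice to wait for higher memory ceilings.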

This strategic product segmentation reveals Apple’s play: it is establishing the M5 as the baseline for mainstream AI applications while reserving the truly transformative capabilities for professional tiers. It’s a familiar Apple move, but one that makes business sense: why sell a $3,000 workstation when you can sell a $1,600 laptop first?

The Developer’s Dilemma

The M5 launch forces a fundamental rethink of local AI development economics. Do you invest in a power-hungry desktop rig that dominates raw benchmarks but costs substantially more to purchase and operate? Or do you opt for Apple’s integrated approach that sacrifices some peak performance for dramatically better efficiency and portability?

For most developers working with sub-30B parameter models, the M5 represents a sweet spot where adequate performance meets practical convenience. The ability to run reasonably sophisticated models locally without heating your entire workspace or tripping circuit breakers isn’t just a convenience; it’s a productivity multiplier.

As the AI development ecosystem continues to mature, Apple’s hardware strategy appears increasingly prescient. While competitors chase raw TFLOPS, Apple is optimizing for the complete experience: memory architecture, power efficiency, and thermal management. The M5 might not win every benchmark, but it wins where it matters most: making local AI development accessible rather than esoteric. The coming Pro and Max variants will likely cement this advantage, potentially making Mac Studios the default choice for serious local inference workloads.