Why Magistral Small 2509 Just Made Your Cloud GPU Subscription Look Stupid

Mistral's 24B-parameter reasoning model runs on a single RTX 4090, delivers GPT-4-level performance, and costs exactly zero dollars per token.

While cloud providers are busy charging $0.03 per 1K tokens for their “premium” reasoning models, Mistral just dropped a 24-billion parameter bomb that fits in your gaming rig. The kicker? It outperforms GPT-4 on math benchmarks and handles vision tasks, all without sending a single byte to the cloud.

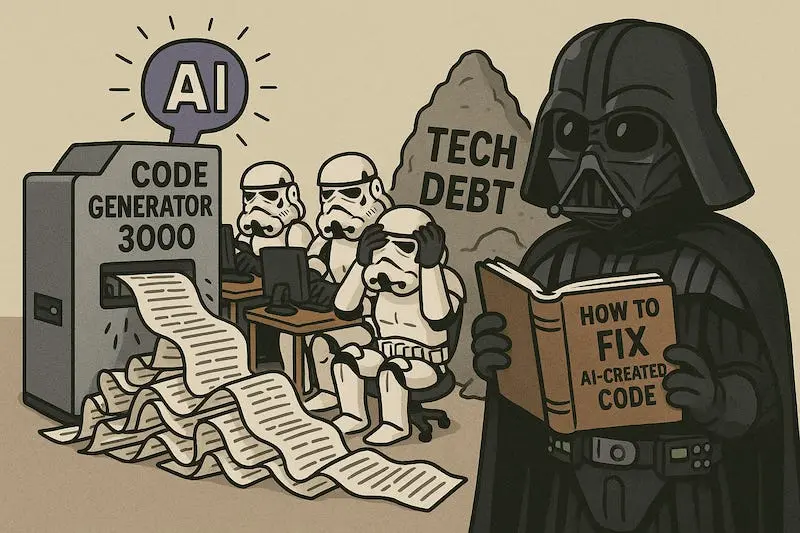

Magistral Small 2509 isn’t just another local model. It’s a declaration of war against the rent-seeking economics of AI inference, wrapped in a package that runs on hardware you probably already own.

The Cloud Killer in Your Closet

Let’s talk numbers that actually matter. Magistral Small 1.2 (the 2509 release) scores 86.14% on AIME24, essentially matching GPT-4’s performance while running on a single RTX 4090. The model weighs in at 47.2GB unquantized (16-bit weights), but drop it to Q4_K_M quantization and you’re looking at 14.3GB. That’s smaller than most modern games.
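Those two sizes are easy to sanity-check. Here is a rough back-of-the-envelope sketch, not an official sizing formula. The parameter count is back-solved from the 47.2GB figure, and the 4.85 average bits per weight for Q4_K_M is an assumption chosen to match the 14.3GB figure; real GGUF files mix several quantization types.

```python
def estimate_weights_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9

# Assumption: ~23.6B parameters, back-solved from 47.2 GB at 16 bits per weight.
N_PARAMS = 23.6e9

full = estimate_weights_gb(N_PARAMS, 16)
# Assumption: Q4_K_M averages roughly 4.85 bits per weight for this model.
q4 = estimate_weights_gb(N_PARAMS, 4.85)

print(f"16-bit: {full:.1f} GB, Q4_K_M (approx.): {q4:.1f} GB")
```

This covers weights only. The KV cache and activations need additional VRAM on top, and that overhead grows with context length.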

The real magic isn’t just the parameter count; it’s the reasoning architecture. Those special [THINK] and [/THINK] tokens aren’t decorative. They’re parsing hooks that let you extract the model’s actual reasoning process. Try getting that level of transparency from OpenAI’s API.
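In practice, using those hooks can be as simple as a regex. This is a minimal sketch, assuming your inference server returns the [THINK] and [/THINK] markers as literal text in the decoded output; some servers strip special tokens or expose the reasoning in a separate field. The helper name `split_reasoning` is hypothetical.

```python
import re
from typing import Tuple

# Non-greedy match so multiple reasoning spans are captured separately.
THINK_RE = re.compile(r"\[THINK\](.*?)\[/THINK\]", re.DOTALL)

def split_reasoning(text: str) -> Tuple[str, str]:
    """Return (reasoning, answer) from decoded model output."""
    traces = [m.strip() for m in THINK_RE.findall(text)]
    # Whatever is left after removing the reasoning spans is the answer.
    answer = THINK_RE.sub("", text).strip()
    return "\n".join(traces), answer

sample = "[THINK]2 + 2 is 4.[/THINK]The answer is 4."
reasoning, answer = split_reasoning(sample)
print("reasoning:", reasoning)
print("answer:", answer)
```

Output with no reasoning span comes back as an empty reasoning string and the original text as the answer, so the helper is safe to call on every response.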

But here’s where traditional deployment guides fail you: they treat local deployment like it’s 2022. Meanwhile, the tooling ecosystem has evolved into something that makes cloud APIs look positively medieval.

Your MacBook Just Became an AI Powerhouse

Forget the “cloud vs local” binary. The actual controversy is how aggressively local tooling has improved while cloud pricing stayed predatory. LocalAI now offers drop-in OpenAI compatibility with startup times under 100ms. Even more ridiculous? Shimmy does it in a 5.1MB binary with 50MB RAM usage.
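“Drop-in OpenAI compatibility” means the request shape you already know keeps working; only the base URL changes. Here is a hedged sketch using nothing but the standard library. The base URL, port, and model name are assumptions for illustration, so substitute whatever your local server actually exposes.

```python
import json
import urllib.request

# Assumptions: the server listens on localhost:8080 and serves the
# OpenAI-style /v1/chat/completions route. The model name is hypothetical.
BASE_URL = "http://localhost:8080/v1"
MODEL = "magistral-small-2509"

def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def chat(prompt: str) -> str:
    """Send the request and return the first choice's message content."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

# Usage, with a local server running:
#   print(chat("What is 17 * 23?"))
```

Because the wire format is the same, existing OpenAI client code usually needs only its base URL pointed at the local endpoint.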

The benchmarks don’t lie: Magistral Small 1.2 jumps from 70.52% to 86.14% on AIME24 compared to version 1.1. That’s not incrementally better; it’s a completely different performance tier. The kind of jump that makes you question why you’re still paying per-token for worse performance.

But the deployment reality check hits different when you realize most developers are still following 2023-era tutorials. They’re manually managing CUDA dependencies, wrestling with llama.cpp compilation, and praying their quantization doesn’t break something. Meanwhile, the Unsloth crew already has optimized GGUFs and FP8 dynamic quants ready to download.

The Vision Problem Nobody Mentions

Here’s what the README won’t tell you: vision support is technically there, but good luck getting consistent results across different deployment setups. The model can analyze that Eiffel Tower replica in Shenzhen, but whether it actually works depends entirely on your tokenization pipeline.

Mistral’s documentation casually mentions vision capabilities, then immediately pivots to text-only deployment examples. That’s not an oversight; it’s an acknowledgment that multimodal deployment is still academic territory. The vision encoder works, but integrating it properly requires more patience than most production environments can afford.

The community’s workaround? Separate vision pipelines entirely. Run Magistral for reasoning, delegate vision to specialized models. It’s inelegant, but it ships.

The 128K Context Lie

Let’s address the elephant in the room: that 128K context window comes with performance degradation past 40K tokens. It’s the classic model specification game: technically accurate, practically misleading.

Real deployment scenarios show the sweet spot hovering around 32K tokens. Beyond that, you’re trading coherence for capacity. The model might technically process your 100K token legal document, but the reasoning quality drops off a cliff.
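If you accept a roughly 32K-token sweet spot, the practical consequence is chunking long inputs before they reach the model. Here is a minimal sketch, assuming about 4 characters per token as a crude heuristic; a real pipeline should count with the model’s own tokenizer. The function names are hypothetical.

```python
from typing import List

CHARS_PER_TOKEN = 4    # assumption: crude average, not the real tokenizer
TOKEN_BUDGET = 32_000  # the sweet spot discussed above

def approx_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)

def chunk_paragraphs(text: str, budget: int = TOKEN_BUDGET) -> List[str]:
    """Greedily pack whole paragraphs into chunks that fit the budget.

    A single paragraph larger than the budget becomes its own oversized
    chunk; splitting inside paragraphs is left out of this sketch.
    """
    chunks: List[str] = []
    current: List[str] = []
    used = 0
    for para in text.split("\n\n"):
        cost = approx_tokens(para)
        if current and used + cost > budget:
            chunks.append("\n\n".join(current))
            current, used = [], 0
        current.append(para)
        used += cost
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Splitting on paragraph boundaries keeps each chunk readable, at the cost of slightly uneven chunk sizes.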

This isn’t a Mistral-specific problem; it’s industry-wide hand-waving. But when you’re deploying locally, these limitations become your problem to solve. No automatic fallback systems, no clever prompt caching. Just you, your hardware, and the reality of quadratic attention complexity.
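To put a number on “quadratic”: if attention-score work grows with the square of the context length, quadrupling the context costs sixteen times as much. This is a simplified sketch that models only the pairwise score computation during prompt processing. It ignores the rest of the network, KV-cache reuse during decoding, and kernel-level optimizations.

```python
def relative_attention_cost(context_tokens: int, baseline_tokens: int) -> float:
    """Ratio of pairwise attention-score work, assuming cost grows as n squared."""
    return (context_tokens / baseline_tokens) ** 2

# Compare larger contexts against a 32K baseline.
for n in (32_768, 65_536, 131_072):
    print(f"{n:>7} tokens: {relative_attention_cost(n, 32_768):.0f}x")
```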

The Economics Nobody Wants to Discuss

Run the numbers: a single RTX 4090 costs $1,600. At the $0.03 per 1K tokens quoted earlier, that same $1,600 buys you roughly 53 million tokens from premium reasoning models. Magistral Small 2509 makes that comparison absurd. Not only do you get unlimited inference, you’re achieving better benchmark scores while maintaining complete data privacy.
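The break-even arithmetic is simple enough to script and rerun with your own prices. This sketch uses only the two figures from this article and deliberately ignores electricity, the rest of the machine, and your time, so treat it as an upper bound on how flattering the comparison is.

```python
GPU_COST_USD = 1_600        # RTX 4090 price used in this article
PRICE_PER_1K_TOKENS = 0.03  # cloud price used in this article

def break_even_tokens(hardware_usd: float, price_per_1k_usd: float) -> float:
    """Number of cloud tokens the hardware budget would have bought."""
    return hardware_usd / price_per_1k_usd * 1_000

tokens = break_even_tokens(GPU_COST_USD, PRICE_PER_1K_TOKENS)
print(f"Break-even at about {tokens / 1e6:.1f} million tokens")
```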

The math becomes even more brutal when you factor in multi-user scenarios. That same GPU can handle concurrent requests, batch processing, and custom fine-tuning. Try doing any of that with cloud APIs without your CFO having an aneurysm.

But here’s the uncomfortable truth: most organizations aren’t optimizing for performance; they’re optimizing for convenience. Cloud APIs persist because they abstract away exactly these deployment complexities. The question isn’t whether local deployment works better. It’s whether your team has the technical depth to make it work at all.

The Deployment Reality Check

The gap between “technically possible” and “production ready” remains enormous. Yes, Magistral Small 2509 fits on consumer hardware. Yes, it outperforms cloud services on benchmarks. But you still need to orchestrate model downloads, manage quantization parameters, implement proper tokenization, and maintain inference servers.

The tooling has improved dramatically: projects like LocalAI and Shimmy eliminate most configuration pain. But they’re still building blocks, not solutions. Your deployment pipeline needs monitoring, scaling logic, request batching, and failover mechanisms. All the boring infrastructure work that cloud providers abstract away.
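To make “failover mechanisms” concrete, here is one small piece of that boring infrastructure as a sketch. It tries a list of backends in order and returns the first successful answer. The backends here are hypothetical stand-ins; in a real setup they would wrap your local server and whatever fallback you choose.

```python
from typing import Callable, List, Sequence

# A backend is any callable that maps a prompt to a completion.
Backend = Callable[[str], str]

def complete_with_failover(prompt: str, backends: Sequence[Backend]) -> str:
    """Try each backend in order and return the first successful result."""
    errors: List[Exception] = []
    for backend in backends:
        try:
            return backend(prompt)
        except Exception as exc:  # sketch: real code should catch narrower errors
            errors.append(exc)
    raise RuntimeError(f"all {len(backends)} backends failed: {errors}")

# Hypothetical stand-ins for demonstration only.
def local_backend(prompt: str) -> str:
    raise ConnectionError("local inference server is down")

def fallback_backend(prompt: str) -> str:
    return f"fallback answer to: {prompt}"

result = complete_with_failover("ping", [local_backend, fallback_backend])
print(result)
```

Retries with backoff, health checks, and metrics would layer on top of this; the point is only that none of it comes for free when you self-host.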

The controversy isn’t whether local AI deployment works. It’s whether the operational overhead justifies the performance gains. For individual developers and well-resourced teams? Absolutely. For everyone else? The cloud rent-seeking continues because the alternative requires expertise most organizations simply don’t have.

The only thing growing faster than these models’ capabilities is the gap between what local deployment promises and what most teams can actually implement.