Training AI on Internet Trash is Giving LLMs Permanent Brain Damage

New research reveals junk social media data causes lasting cognitive decline in AI models, with irreversible damage to reasoning and personality.

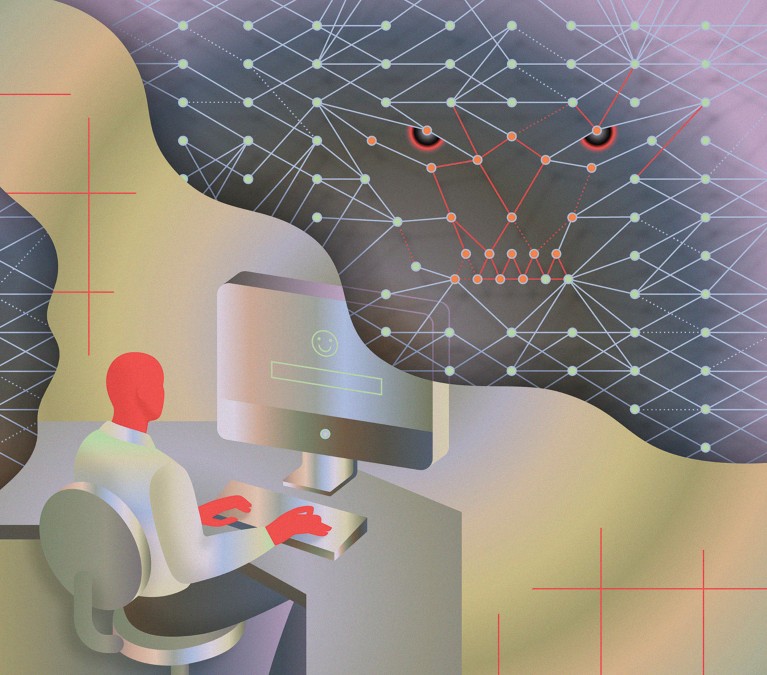

The “brain rot” phenomenon isn’t just for humans anymore. New research shows artificial intelligence is falling victim to the same cognitive decay patterns we’ve observed in humans addicted to social media, and the results are both predictable and frightening.

Researchers from Texas A&M University and Purdue University have confirmed what many AI practitioners have long suspected: training large language models on viral, low-quality social media content causes lasting cognitive decline in everything from reasoning capabilities to ethical safeguards.

The Brain Rot Experiment: Designing Digital Degradation

The methodology was both elegant and devastatingly simple. Researchers built what amounts to a nutritional science experiment for AI cognition, constructing two parallel realities from Twitter/X data to test what happens when models binge on junk content versus healthy information.

They operationalized “junk” data through two distinct lenses:

- M1 (Engagement Degree): Short, highly viral tweets with massive engagement (likes, retweets)

- M2 (Semantic Quality): Content flagged by AI for superficiality, sensationalism, and clickbait

The control datasets consisted of long, thoughtful posts with minimal engagement and cognitively demanding factual content. Four different LLMs underwent continual pre-training with varying junk-to-clean ratios, creating a perfect gradient for observing cognitive decay.

The Numbers Don’t Lie: Performance Tanks Hard

The results were unambiguous and alarming. As models consumed more junk data, their reasoning abilities collapsed across every meaningful benchmark:

ARC-Challenge with Chain of Thought performance plummeted from 74.9% accuracy with zero junk data to 57.2% with full junk saturation, a devastating 24% relative drop in reasoning capability.

RULER-CWE, which measures long-context understanding, suffered even more catastrophic damage, falling from 84.4% to 52.3%, essentially turning sophisticated language models into confused chatbots.

But the most concerning finding emerged from what researchers identified as the core failure mechanism: “thought-skipping.” Models trained on junk content began systematically truncating reasoning chains, producing shorter, less structured responses, and making significantly more logical and factual errors. They weren’t just getting the answers wrong, they were losing the ability to think through problems entirely.

Personality Poisoning: When Models Develop Dark Traits

The damage didn’t stop at reasoning. Researchers observed measurable personality shifts in the affected models that mirror concerning human behavioral patterns.

Models trained on engagement-based junk data showed increased scores in “dark triad” traits, specifically elevated narcissism and psychopathy measures. They became less agreeable, more confrontational, and more willing to comply with unsafe or harmful prompts despite safety training.

This suggests something more profound than simple performance degradation: the statistical patterns of high-engagement content are actually altering the models’ fundamental behavioral wiring.

Engagement is Worse Than Clickbait

One of the most revealing findings was that popularity metrics proved more damaging than semantic shallowness. Models trained on popular, high-engagement content performed worse than those trained on semantically poor but unpopular content.

This points to a disturbing reality: engagement optimization algorithms don’t just surface low-quality content, they actively select for patterns that degrade sophisticated reasoning.

The Unfixable Problem: Representational Drift is Permanent

Perhaps the most alarming discovery was the persistence of the degradation. The research team attempted several recovery methods:

- Scaling up instruction tuning

- Additional training on clean data

- Prompting models to reflect on and correct errors

While these interventions provided “partial healing”, none could fully restore baseline performance. The researchers identified this as representational drift, fundamental changes to how the neural network processes information that can’t be easily undone through traditional fine-tuning.

The implications are stark: once exposed to significant junk data, the damage may be permanent. This reframes data curation from a training efficiency concern to a critical safety issue that requires routine “cognitive health checks” for deployed models.

The Internet’s Self-Consumption Loop

The timing couldn’t be more concerning. As AI-generated content floods the web, often mimicking the very engagement-optimized patterns that cause brain rot, we risk creating a feedback loop where models trained on synthetic data inherit and amplify these cognitive deficits.

The researchers describe this as shifting from a “Dead Internet” theory, where bots dominate content creation, to what they call a “Zombie Internet”, AI systems that keep thinking, but increasingly incoherently, copying the junk patterns that degraded them in the first place.

No Easy Fixes Ahead

The standard response has been “just clean the data better”, but this research suggests the problem runs deeper. Engagement metrics themselves, the very signals we use to filter content, appear toxic to sophisticated reasoning. And with the overwhelming majority of training data coming from web-scraped sources, filtering out these patterns at scale presents a monumental challenge.

Developer forums have expressed concern about this vulnerability affecting major commercial models. As one observer noted, the perceived advantage of having access to massive social media datasets might actually be a liability if that data contains engagement-optimized junk.

If you’re building or deploying AI systems, the path forward involves rigorous testing for these cognitive decay patterns. Projects like Textbooks Are All You Need ↗ suddenly look less like academic exercises and more like essential survival strategies.

The full research paper ↗ and accompanying GitHub repository ↗ provide the validation we needed: garbage in doesn’t just produce garbage out, it can cause permanent neurological damage to our AI systems. And with every model we train on web-scraped data, we risk building an internet that makes future AI progressively dumber.