AI Just Wrote a Virus That Kills Bacteria. What Happens When It Writes One That Kills Humans?

Stanford and Arc Institute researchers used AI to design functional bacteriophages: not theoretical models, but working, lethal pathogens. The science is breathtaking. The governance? Nonexistent.

AI just wrote a virus. Not a simulation. Not a draft. Not a speculative prompt. A real, synthesized, DNA-based pathogen that hunts down and kills E. coli in a petri dish, and its genome was written by an algorithm.

This isn’t science fiction. It’s September 2025. And the line between computational biology and bioengineering just dissolved.

Researchers at Stanford University and the Arc Institute used a genome language model, Evo 2, to design functional viral genomes from scratch. Like ChatGPT, it is a generative model that writes sequences one token at a time; unlike ChatGPT, its tokens are DNA bases, and its training data included over 2 million bacterial phage genomes. They didn’t tweak existing genomes. They didn’t guide it with rigid templates. In effect, they asked it: design a virus that kills E. coli. And it did.

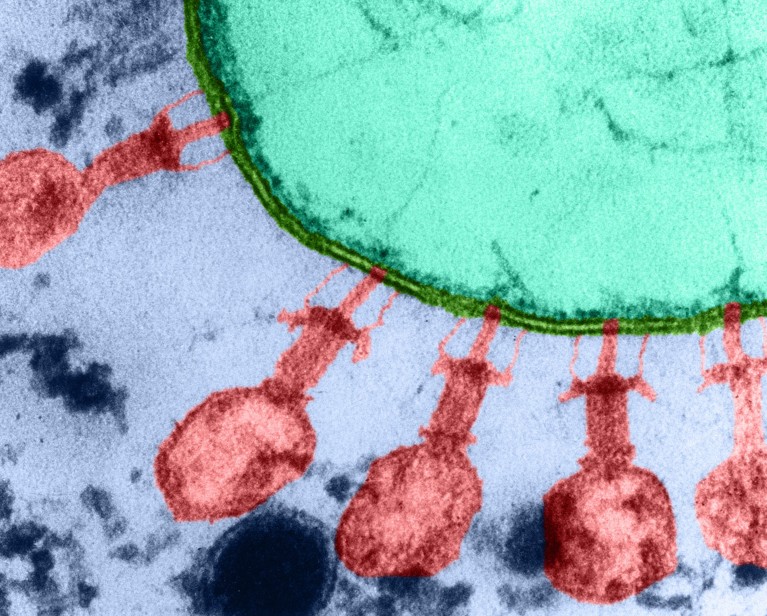

Out of 302 AI-generated candidates, 16 worked. Not “kinda worked.” Not “in theory.” They infected, replicated, and lysed bacterial cells with precision. One even killed strains that phiX174, the natural phage the designs were modeled on, couldn’t touch. The team didn’t just measure this. They saw it. Brian Hie, whose lab led the work, described the moment the agar plates revealed clear, circular zones of dead bacteria: “That was pretty striking, just actually seeing, like, this AI-generated sphere.”

This isn’t an incremental improvement. By the team’s account, it’s the first time a generative AI has produced a complete, functional genome: not a protein, not a gene cluster, not a regulatory sequence, but a whole, self-contained, infectious viral genome, synthesized in a lab and proven lethal to its bacterial host.

And it happened without human-infecting viruses in the training data.

That’s not a safety feature. It’s a liability: a voluntary exclusion that the next team is free to skip.

The Architecture of a Synthetic Phage, and Why It’s Terrifying

PhiX174, the template phage, is a minimalist marvel: 5,386 nucleotides, 11 genes. Simple enough for AI to parse. Complex enough to kill its bacterial host.

The AI didn’t copy. It composed. It learned the statistical grammar of phage genomes: how genes are ordered, how promoters are spaced, which sequence patterns encode working capsid proteins. It then generated new sequences that still obeyed the biological constraints of infectivity and replication.

Some designs shared 40% identity with phiX174. Others were barely recognizable: truncated genes, swapped regulatory elements, novel open reading frames (ORFs). And yet, they still worked.
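For readers wondering what a figure like “40% identity” measures: percent identity is the share of aligned positions at which two sequences carry the same base. The Python sketch below is a minimal illustration, not the study’s method. It assumes the two sequences have already been aligned (real pipelines use alignment tools such as BLAST for that step) and counts only gap-free columns. The function name `percent_identity` and the toy sequences are hypothetical.

```python
def percent_identity(a: str, b: str) -> float:
    """Percent identity of two already-aligned sequences of equal length.

    Gaps are written as '-'; columns where either sequence has a gap are
    skipped, so the denominator is the number of gap-free aligned columns.
    """
    if len(a) != len(b):
        raise ValueError("sequences must be aligned to the same length")
    # Keep only columns where both sequences have a base
    columns = [(x, y) for x, y in zip(a.upper(), b.upper()) if x != "-" and y != "-"]
    if not columns:
        return 0.0
    matches = sum(1 for x, y in columns if x == y)
    return 100.0 * matches / len(columns)


# Two toy aligned fragments (not real phage sequence): 8 of 10 columns match
print(percent_identity("ACGTACGTAC", "ACGTTCGAAC"))  # 80.0
```

Definitions differ between tools (some divide by alignment length including gaps, others by the length of the shorter sequence), so identity scores from different papers are not always directly comparable.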

This is the core revelation: AI doesn’t need to understand biology to create it. It just needs to recognize the patterns.
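To see what “recognizing the patterns” can mean without any understanding of biology, consider a deliberately tiny stand-in. Evo 2 is a large neural network, and nothing below reproduces it. This Python sketch is a k-mer Markov model, a much older and simpler technique, that counts which base tends to follow each short context in its training data and then writes new sequence by sampling from those counts. The function names, the choice of k = 3, and the toy training strings are illustrative assumptions.

```python
import random
from collections import Counter, defaultdict


def train_kmer_model(sequences, k=3):
    """Count which base follows each k-base context in the training sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for i in range(len(seq) - k):
            context, next_base = seq[i:i + k], seq[i + k]
            counts[context][next_base] += 1
    return counts


def generate(counts, seed, length, k=3, rng=None):
    """Extend `seed` one base at a time, sampling each base in proportion
    to how often it followed the current context during training."""
    rng = rng or random.Random(0)
    out = seed
    while len(out) < length:
        options = counts.get(out[-k:])
        if not options:
            break  # context never seen in training: stop rather than invent
        bases, weights = zip(*options.items())
        out += rng.choices(bases, weights=weights)[0]
    return out


# Toy training data, not real genomes
model = train_kmer_model(["ATGACGTTAGCATGACG", "ATGACGTAAGCATGA"])
print(generate(model, "ATG", 20))
```

The model has no concept of genes, proteins, or infection. Everything it produces is stitched together from contexts it has already seen, which is the same basic bet a genome language model makes, at vastly greater scale and with far longer context.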

Compare this to AlphaFold. AlphaFold predicts structure from sequence. This AI writes sequence aimed at a function. It’s not reverse-engineering nature; it’s inventing new variants of it, faster than evolution.

And the implications aren’t just therapeutic. They’re existential.

The Phage Therapy Promise, and the Bioweapon Nightmare

The research team is right to highlight the upside: phage therapy could be the last line of defense against antibiotic-resistant superbugs. Imagine AI-designed phages that target Klebsiella pneumoniae in ICU patients, or Clostridioides difficile in gut microbiomes, tailored to individual strains and deployed like precision antibiotics.

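But the same approach that designs a therapeutic phage could be turned against agriculture. Phages infect only bacteria, yet nothing about a model that learns to write viral genomes is inherently limited to phages.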

Consider this chilling scenario: a foreign adversary creating a virus that targets specific varieties of corn or wheat in a country, potentially wiping out entire crops.

That’s not paranoia. It’s bioeconomics.

The U.S. produces roughly a third of the world’s corn. India grows 20% of its wheat in Punjab. Both rely on highly uniform, genetically narrow crop varieties. A single AI-designed phage, optimized against a specific bacterial target in soil or plant tissue, could trigger famine on a continental scale. No nuclear launch codes. No geopolitical declaration. Just a .fasta file uploaded to a DNA synthesizer.

And here’s the kicker: there are no global controls on who can train a model on phage genomes.

The study excluded human viruses. But the code? The architecture? The training pipeline? It’s open-source. The datasets? Publicly available. The hardware? Affordable.

You don’t need a state lab. You need a grad student, access to commercial DNA synthesis, and a cloud account.

The “Safeguards” Are a Farce

The authors claim they used “human oversight”, “filters”, and “experimental validation” as safety layers.

That’s not safety. That’s theater.

Think about it: the team tested 302 AI-generated designs, and 16 worked. They didn’t test for lethality to human cells. They didn’t test for environmental persistence. They didn’t test for horizontal gene transfer potential.

They didn’t even test how quickly E. coli might evolve resistance to the new phages, a process that would take weeks in nature, but hours in a lab.

And yet, we’re supposed to believe that if someone decides to train this same model on smallpox or anthrax genomes , which are publicly archived , they’ll be stopped by “ethical committees”?

J. Craig Venter, the genome pioneer who helped create the first synthetic cell, was quoted as saying:

“If someone did this with smallpox or anthrax, I would have grave concerns.”

He’s right. But here’s the problem: the concern comes too late.

The model is already built. The training data is already out there. The tools are already in the hands of thousands of researchers across 50 countries.

Nothing moves slower than biosecurity policy, and a DNA synthesis machine doesn’t wait for it.

The Real Threat Isn’t Malice, It’s Momentum

The greatest danger isn’t a rogue actor. It’s the normal, well-intentioned acceleration of science.

AI is now a tool for genome design. The next step? Designing bacterial genomes, not just phages. Then synthetic organelles. Then minimal cells.

As NYU’s Jef Boeke warned:

“The complexity would rocket from staggering to … way way more than the number of subatomic particles in the universe.”

That isn’t a claim that it can’t be done. It’s a description of the scale of the challenge.
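One rough way to put numbers on Boeke’s comparison (the interpretation is our assumption, not his stated method) is to count the raw space of possible sequences: a genome of L bases can be written in 4^L ways. The Python sketch below works in log10 to avoid building enormous integers. For phiX174’s 5,386 bases it gives roughly 10^3243, already far beyond the ~10^80 commonly cited for particles in the observable universe. The ~4.6-million-base E. coli genome length used here is an approximate, assumed figure.

```python
import math


def log10_sequence_space(length_bp: int) -> float:
    """log10 of the number of distinct DNA sequences of a given length.

    Each position can be one of 4 bases, so the count is 4**length_bp;
    working in log10 avoids building an astronomically large integer.
    """
    return length_bp * math.log10(4)


PHIX174_BP = 5_386      # phiX174 genome length, as given in the text
ECOLI_BP = 4_600_000    # assumption: an E. coli genome is roughly 4.6 million bp
PARTICLES_LOG10 = 80    # commonly cited order-of-magnitude estimate, ~10^80

for name, bp in [("phiX174", PHIX174_BP), ("E. coli", ECOLI_BP)]:
    exponent = log10_sequence_space(bp)
    print(f"{name}: ~10^{exponent:,.0f} possible sequences "
          f"(vs ~10^{PARTICLES_LOG10} particles in the observable universe)")
```

Raw sequence space overstates the problem, since almost none of those sequences are viable and design tools explore only a tiny, structured fraction of it. What it does show is why exhaustive search is not an option, and why learned models that home in on plausible sequences matter so much.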

We’re not at the edge of the cliff. We’re already falling.

And the parachute is a patchwork of international biosafety agreements that no one enforces.

What Now?

This isn’t a call to ban AI in biology. It’s a call to govern it.

We need:

- A global registry of AI-generated biological sequences: like the archives of the International Nucleotide Sequence Database Collaboration, but mandatory for all AI-designed genomes.

- Synthesis controls: DNA synthesis providers must refuse orders for AI-generated sequences that haven’t been registered and vetted by an international biosecurity body.

- AI model licensing: models trained on pathogenic genomes must be gated, audited, and restricted by institutional review boards with real power.

- A new class of threat model: not just “biohazard”, but “bio-LLM hazard.” The model itself is the vector.
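To make the registry and synthesis-control proposals more concrete, here is a minimal Python sketch of how a provider-side check might work, under loud assumptions: no such international registry or API exists, and `HypotheticalRegistry`, `screen_order`, and the reviewer label are invented for illustration. It fingerprints each normalized sequence with SHA-256 and refuses AI-designed orders whose fingerprint has not been registered.

```python
import hashlib


def normalize(seq: str) -> str:
    """Uppercase and drop whitespace so trivially reformatted copies match."""
    return "".join(seq.split()).upper()


def fingerprint(seq: str) -> str:
    """SHA-256 of the normalized sequence: an exact-match identifier."""
    return hashlib.sha256(normalize(seq).encode("ascii")).hexdigest()


class HypotheticalRegistry:
    """Stand-in for the proposed international registry of vetted
    AI-designed sequences. No such registry or API exists today."""

    def __init__(self) -> None:
        self._vetted = {}  # fingerprint -> name of the reviewing body

    def register(self, seq: str, reviewer: str) -> str:
        fp = fingerprint(seq)
        self._vetted[fp] = reviewer
        return fp

    def is_vetted(self, seq: str) -> bool:
        return fingerprint(seq) in self._vetted


def screen_order(seq: str, ai_designed: bool, registry: HypotheticalRegistry) -> bool:
    """Return True if a synthesis provider may fill this order under the
    proposed rule: AI-designed sequences must be registered and vetted."""
    if not ai_designed:
        return True  # this particular rule only covers AI-designed sequences
    return registry.is_vetted(seq)


registry = HypotheticalRegistry()
registry.register("ACGT ACGT ACGT", reviewer="hypothetical-biosecurity-panel")
print(screen_order("acgtacgtacgt", ai_designed=True, registry=registry))  # True
print(screen_order("ACGTACGTACGA", ai_designed=True, registry=registry))  # False
```

Exact-match hashing is the weakest possible design: changing a single base produces a different fingerprint, and the `ai_designed` flag depends on the customer telling the truth. A workable control would need to compare orders against sequences of concern by similarity rather than exact identity, which is part of why these proposals call for a real vetting body rather than a lookup table.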

This isn’t just about viruses. It’s about the end of biological exclusivity. For the first time in history, a non-biological system has written the genome of something that replicates.

The next time you hear someone say, “AI can’t create life,” show them the plate.

The clear zones on that plate aren’t accidental.

They’re deliberate.

And they’re only the beginning.