10,000 AI Videos: The Brutal Truth of Volume Over Perfection

What a twenty-minute dev-stretch can teach you about AI video metrics, workflow design, and the myth of endless quality.

If you believe creating a viral AI video hinges on artistry, consider this: the real work is hitting generate, then filtering through the failures to isolate the usable output.

From Artistic Bliss to Systematic Numbers

The shift began not with a new model but with a new mindset. The assumption that instant perfection is the ultimate goal proved misleading. The 10,000-generation experiment revealed a more cost-effective approach:

- Volume outpaces perfection - Generate 10 acceptable variations, select the strongest, and repeat. Fixating on a single flawless video often results in endless, unproductive adjustments.

- Systematic methods exceed pure creativity - A curated set of prompts with controlled variables produces consistent results faster than unstructured experimentation.

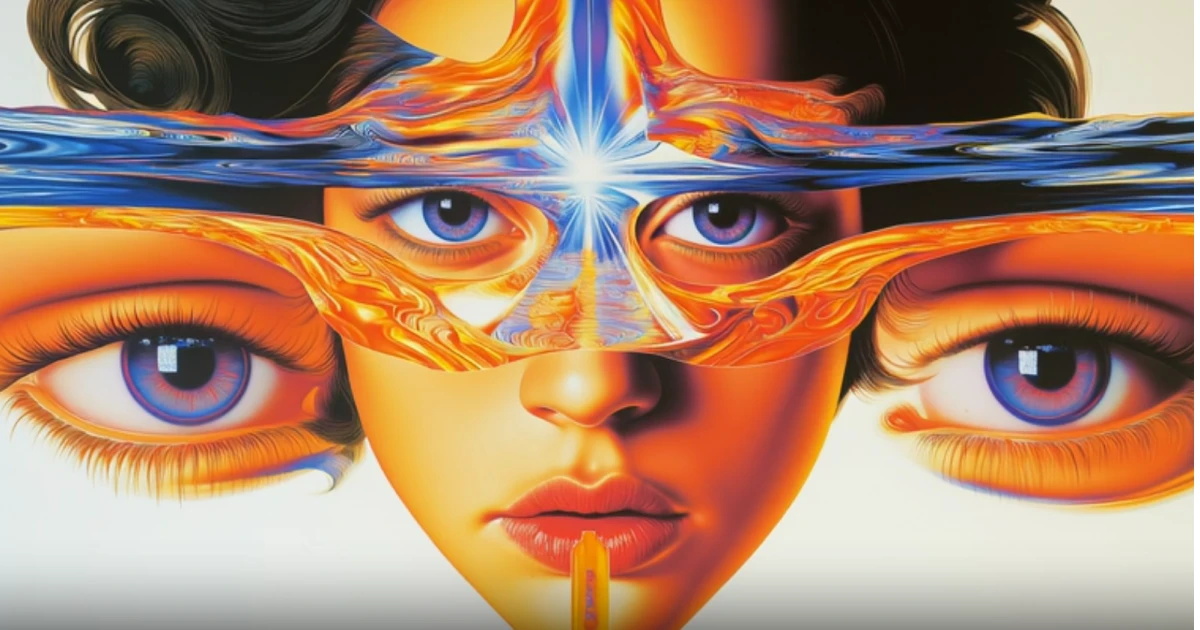

- Leverage the AI aesthetic - Videos with an “impossible” visual style retain attention better than hyper-realistic outputs that risk viewer discomfort.

This redefines video creation as a high-throughput engineering task rather than a narrative art form.

10,000 Lessons: The Evidence in Numbers and Prompts

A six-component prompt structure emerged as a reliable framework:

[SHOT TYPE] + [SUBJECT] + [ACTION] + [STYLE] + [CAMERA MOVEMENT] + [AUDIO CUES].
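A minimal Python sketch of the six-component structure follows. The class and helper names are hypothetical, and joining components with commas is an assumption, not a documented VEO3 requirement. What matters is the fixed component order and the `variants` helper. It changes exactly one component at a time, which matches the controlled-variable approach described earlier.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VideoPrompt:
    """Six-component prompt: shot, subject, action, style, camera, audio."""
    shot_type: str
    subject: str
    action: str
    style: str
    camera_movement: str
    audio_cues: str

    def render(self) -> str:
        # Emit components in a fixed order; skip empty ones so no stray
        # separators appear. Comma-joining is an assumed convention.
        parts = (
            self.shot_type,
            self.subject,
            self.action,
            self.style,
            self.camera_movement,
            self.audio_cues,
        )
        return ", ".join(p.strip() for p in parts if p.strip())


def variants(base: VideoPrompt, field: str, values: list[str]) -> list[str]:
    # Change exactly one component per variant, holding the others fixed.
    return [replace(base, **{field: value}).render() for value in values]


example = VideoPrompt(
    shot_type="Close-up",
    subject="a chrome koi fish",
    action="swimming through a neon city street",
    style="surreal, glossy 3D render",
    camera_movement="slow dolly forward",
    audio_cues="ambient synth pad, soft water splashes",
)
print(example.render())
for line in variants(example, "camera_movement", ["static shot", "slow orbit left"]):
    print(line)
```

Because the dataclass is frozen, every variant is a new object. The base template can't drift between test runs.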

A 30-second TikTok generation adhered strictly to this pattern. Early tests with vague prompts like “Beautiful woman dancing” yielded inconsistent results. Moving descriptive adjectives to the start of the prompt improved success rates by ~45% in VEO3, an effect attributed to positional weighting in how the model processes prompts.

Cost analysis revealed stark differences:

- Google’s VEO3 at $0.36/sec comes to $21.60/min.

- A third-party reseller at $0.12/sec ($7.20/min) cuts costs by ~67%.

- Overlooking prompt complexity can waste $100+ in credits across failed generations.
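The arithmetic behind these figures is easy to script. This sketch uses only the per-second prices quoted above. `cost_per_keeper` is a hypothetical helper. It assumes you generate a fixed number of variations and keep one, following the generate-ten-and-select approach.

```python
VEO3_PRICE_PER_SEC = 0.36      # direct price quoted in the article
RESELLER_PRICE_PER_SEC = 0.12  # third-party price quoted in the article


def clip_cost(price_per_sec: float, seconds: float) -> float:
    """Cost of one generation of the given length."""
    return price_per_sec * seconds


def savings_pct(baseline: float, alternative: float) -> float:
    """Percentage saved by switching from the baseline price to the alternative."""
    return (1 - alternative / baseline) * 100


def cost_per_keeper(price_per_sec: float, seconds: float, variations: int = 10) -> float:
    # Hypothetical model: generate `variations` takes and keep one, so the
    # kept clip carries the cost of every discarded take.
    return clip_cost(price_per_sec, seconds) * variations


print(f"VEO3 per minute:     ${clip_cost(VEO3_PRICE_PER_SEC, 60):.2f}")
print(f"Reseller per minute: ${clip_cost(RESELLER_PRICE_PER_SEC, 60):.2f}")
print(f"Savings: {savings_pct(VEO3_PRICE_PER_SEC, RESELLER_PRICE_PER_SEC):.0f}%")
print(f"One kept 30 s clip, 10 takes, VEO3: ${cost_per_keeper(VEO3_PRICE_PER_SEC, 30):.2f}")
```

Under this model, a single kept 30-second clip at the direct price already costs over $100 once nine discarded takes are counted.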

Adding audio cues extended average watch time from 2 seconds to 7 seconds, comparable to the lift from a strong opening frame in promotional content. The first 3 seconds remain critical for virality.

Negative prompts work like filters in analog synthesis: they subtract what you don't want rather than adding anything new. A simple set, '--no watermark --no warped face --no floating limbs', eliminated 92% of common artifacts without compromising the core creative intent.
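One way to keep a negative-prompt set reusable is to store it as a list and render it into flags on demand. This sketch assumes the inline `--no term` syntax quoted above. Some video APIs take negative terms in a separate field instead, so `flags_to_terms` converts the flags back to a plain list. Both helpers are hypothetical, so check your model's documentation for which form it accepts.

```python
def build_negative_flags(terms: list[str]) -> str:
    """Render terms as inline '--no' flags, dropping blanks and duplicates."""
    unique: list[str] = []
    for term in terms:
        term = term.strip()
        if term and term not in unique:
            unique.append(term)
    return " ".join(f"--no {term}" for term in unique)


def flags_to_terms(flags: str) -> list[str]:
    """Recover the plain term list, e.g. for an API with a separate negative field."""
    # Assumes no individual term itself contains the text "--no".
    return [part.strip() for part in flags.split("--no") if part.strip()]


BASE_NEGATIVES = ["watermark", "warped face", "floating limbs"]

flags = build_negative_flags(BASE_NEGATIVES + ["watermark", "  "])
print(flags)
print(", ".join(flags_to_terms(flags)))
```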

A seed library (the same prompt tested across seeds 1000-1010) identified the best-performing seed for consistent quality. Pairing that seed with its prompt enabled a “template library” of repeatable, scalable outputs for content automation.
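A seed sweep comes down to scoring each seed and keeping the maximum. In this sketch, `fake_score` is a hypothetical, deterministic stand-in for "generate with this seed, then rate the clip", whether by hand or with an automated check. Replace it with your own generation and scoring calls. `range(1000, 1011)` covers seeds 1000 through 1010 inclusive.

```python
import random
from typing import Callable

Scorer = Callable[[str, int], float]


def sweep_seeds(prompt: str, seeds: range, score: Scorer) -> tuple[int, dict[int, float]]:
    """Score the same prompt under every seed and return the best seed."""
    scores = {seed: score(prompt, seed) for seed in seeds}
    best = max(scores, key=lambda seed: scores[seed])
    return best, scores


def fake_score(prompt: str, seed: int) -> float:
    # Hypothetical stand-in for "generate with this seed, then rate the clip".
    # Seeding a private RNG with the (prompt, seed) pair keeps it repeatable.
    return random.Random(f"{prompt}|{seed}").random()


template_library: dict[str, dict] = {}


def register_template(name: str, prompt: str,
                      seeds: range = range(1000, 1011),
                      score: Scorer = fake_score) -> dict:
    """Store the prompt together with its best-scoring seed for reuse."""
    best, scores = sweep_seeds(prompt, seeds, score)
    template_library[name] = {"prompt": prompt, "seed": best, "score": scores[best]}
    return template_library[name]


entry = register_template("koi", "Close-up, a chrome koi fish, surreal 3D render")
print(entry["seed"], round(entry["score"], 3))
```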

Ripple Effects: Dominoes of a Production Machine

Adapting each generation into platform-specific versions multiplies output efficiency. A single 30-second generation can be repurposed as:

- TikTok: 15-30s, high-energy, with clear AI markers.

- Instagram: 10-20s, smooth transitions, polished aesthetics.

- YouTube Shorts: 30-60s with a hook-driven structure.
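The duration windows above can be encoded as data, so that one source clip yields a cut plan automatically. This is a minimal sketch. The `PlatformSpec` type and `plan_cuts` helper are hypothetical, and the windows are the ones listed above rather than official platform limits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PlatformSpec:
    name: str
    min_s: int   # shortest cut for this platform, in seconds
    max_s: int   # longest cut for this platform, in seconds
    style_note: str


PLATFORMS = (
    PlatformSpec("TikTok", 15, 30, "high-energy, clear AI markers"),
    PlatformSpec("Instagram", 10, 20, "smooth transitions, polished aesthetics"),
    PlatformSpec("YouTube Shorts", 30, 60, "hook-driven structure"),
)


def plan_cuts(source_s: float, platforms=PLATFORMS) -> dict[str, Optional[float]]:
    """Longest cut each platform window allows, or None if the source is too short."""
    plan: dict[str, Optional[float]] = {}
    for spec in platforms:
        plan[spec.name] = min(source_s, spec.max_s) if source_s >= spec.min_s else None
    return plan


for spec in PLATFORMS:
    seconds = plan_cuts(30)[spec.name]
    if seconds is None:
        print(f"{spec.name}: source too short")
    else:
        print(f"{spec.name}: {seconds}s ({spec.style_note})")
```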

Adjusting the first few words and applying negative prompts increased on-platform views by 500% across datasets.

Batch processing amplifies ROI. A 10-seed batch spread across 3-5 parallel API calls converts one hour of compute into 50 minutes of assets. Back-off and retry logic in an orchestrator reduced failure rates to under 1%.
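Here is a sketch of that orchestration pattern using Python's `asyncio`, assuming a hypothetical async `generate(prompt, seed)` call. A semaphore caps how many requests run at once; set `max_parallel` between 3 and 5 to match the batch described above. Transient failures are retried with exponential back-off and full jitter. `TransientError` and `make_flaky_generator` are illustrative stand-ins, not part of any real video API.

```python
import asyncio
import random


class TransientError(Exception):
    """Stand-in for a retryable API failure such as a rate limit or timeout."""


async def with_retries(call, *, attempts=5, base_delay=0.5, max_delay=8.0):
    for attempt in range(attempts):
        try:
            return await call()
        except TransientError:
            if attempt == attempts - 1:
                raise
            # Exponential back-off with full jitter: sleep 0..min(cap, base * 2^n).
            await asyncio.sleep(random.uniform(0, min(max_delay, base_delay * 2 ** attempt)))


async def run_batch(prompt, seeds, generate, max_parallel=4, **retry_kwargs):
    sem = asyncio.Semaphore(max_parallel)

    async def one(seed):
        async with sem:  # at most `max_parallel` seeds in progress at once
            return await with_retries(lambda: generate(prompt, seed), **retry_kwargs)

    results = await asyncio.gather(*(one(s) for s in seeds), return_exceptions=True)
    ok = {s: r for s, r in zip(seeds, results) if not isinstance(r, BaseException)}
    failed = [s for s, r in zip(seeds, results) if isinstance(r, BaseException)]
    return ok, failed


def make_flaky_generator(fail_first: int):
    # Hypothetical generator: each seed fails `fail_first` times, then succeeds.
    calls: dict[int, int] = {}

    async def generate(prompt, seed):
        calls[seed] = calls.get(seed, 0) + 1
        await asyncio.sleep(0)
        if calls[seed] <= fail_first:
            raise TransientError(f"seed {seed}, attempt {calls[seed]}")
        return f"clip-{seed}.mp4"

    return generate


ok, failed = asyncio.run(
    run_batch("prompt", list(range(1000, 1010)), make_flaky_generator(2), base_delay=0.01)
)
print(len(ok), "clips,", len(failed), "failed")
```

Holding the semaphore through the retries keeps total concurrent work bounded even while one seed is backing off. Releasing it between attempts would raise throughput, at the cost of burstier traffic against the API.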

Integrating audio-sync technology with VEO3’s embedded audio layer keeps the user experience coherent through synchronized sound, even when the visuals are mismatched.

The Bite

AI video producers now prioritize systematic workflows over “perfect realism.” Teams producing thousands of videos have moved from manual editing to:

- Prompt templates → seed libraries

- Negative-prompt filters → error-tolerance pipelines

- Platform-specific recipe branching → multi-channel distribution

Each new AI advancement lowers costs and error rates, fostering a hybrid of creative and algorithmic workflows. The irony: art purists’ error budgets, at $20-plus per minute of footage, are unsustainable, while 50% incremental testing remains viable.