Parquet Was Never Enough: Why Your Raw Data Lake Is a Data Swamp Waiting to Happen

While Parquet excels at storage efficiency, it lacks transactional integrity. Open table formats such as Apache Iceberg, Delta Lake, and Apache Hudi add ACID transactions and safe schema evolution on top of Parquet, which is why they are increasingly seen as essential for reliable data pipelines.

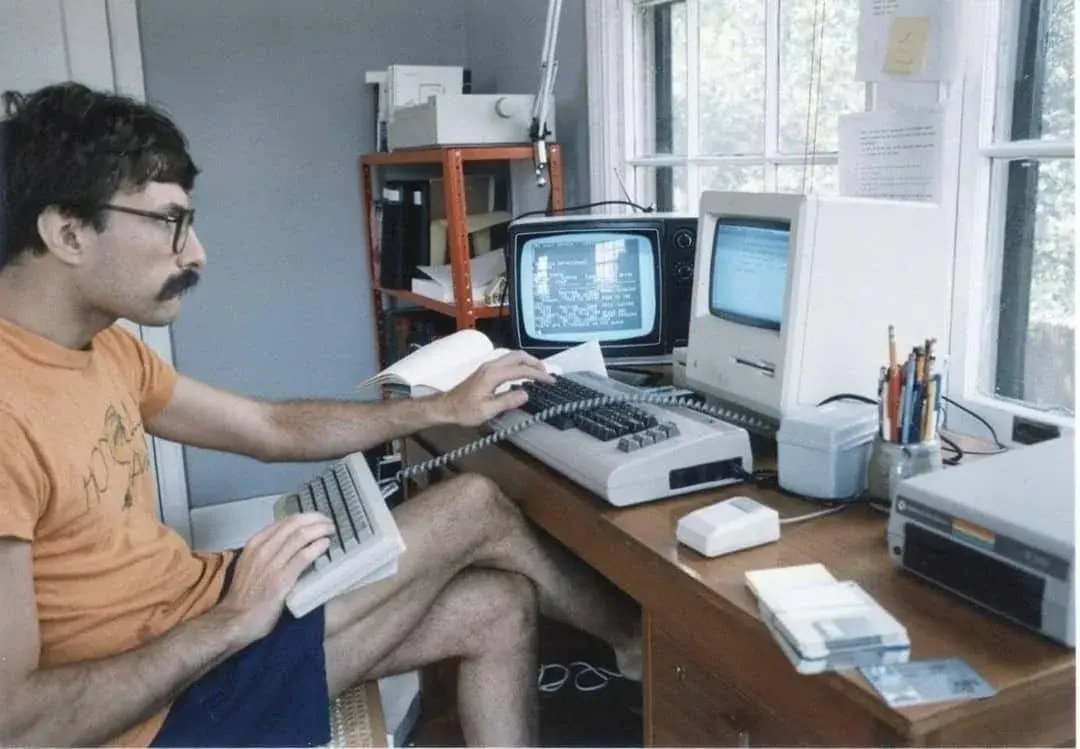

The initial promise of data lakes was revolutionary: store everything in cheap object storage using efficient formats like Parquet and query it at will. The reality for many engineering teams? Broken schemas, partial writes, painfully slow scans, and data corruption when multiple jobs touched the same files.

Here’s the uncomfortable truth: Parquet is an amazing storage format but a terrible table management system. It’s a perfectly manufactured brick in a pile of bricks: useless without the architectural blueprint that shows how they fit together.

The Fundamental Mistake: Parquet as More Than Storage

Apache Parquet revolutionized analytical workloads by introducing columnar storage. When you need to calculate the average of one column from a table with 100 columns, Parquet lets you read just that column instead of loading everything into memory. The result is a drastic reduction in I/O, plus superior compression, because encodings such as dictionary and run-length encoding work especially well on the runs of similar values found within a single column.
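To make the I/O argument concrete, here is a toy Python model (not Parquet’s actual on-disk format) that computes one column’s average under a row-oriented and a column-oriented layout and counts how many values each layout has to touch. The column names `c0` through `c99` and the generated values are hypothetical.

```python
# Toy model of row vs. column layout. Real Parquet adds row groups, pages,
# encodings, and compression; none of that is modeled here.

def to_columns(rows):
    """Pivot row dicts into one list per column (a column-oriented layout)."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns

def average_row_layout(rows, column):
    """Row layout: every value of every row is read to reach one column."""
    values_read = sum(len(row) for row in rows)
    total = sum(row[column] for row in rows)
    return total / len(rows), values_read

def average_column_layout(columns, column):
    """Column layout: only the requested column is read."""
    values = columns[column]
    return sum(values) / len(values), len(values)

# 1,000 rows x 100 columns, matching the example in the text.
rows = [{f"c{j}": i * j for j in range(100)} for i in range(1000)]
columns = to_columns(rows)

print(average_row_layout(rows, "c7"))        # (3496.5, 100000)
print(average_column_layout(columns, "c7"))  # (3496.5, 1000)
```

Both layouts return the same answer; the column layout simply reads 1% of the values to get there.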

But Parquet files alone lack the intelligence to answer basic questions:

- Which files constitute the current version of my table?

- What happens if my Spark job fails halfway through writing?

- How do I safely change my schema without breaking everything?

- Can multiple writers work on the same data simultaneously?

As one developer discovered working directly with raw Parquet files, “No ACID guarantees, no safe schema evolution, no time travel, and a whole lot of chaos when multiple jobs touch the same data.”

The Metadata Layer Revolution

Open table formats like Apache Iceberg, Delta Lake, and Apache Hudi solve this by adding a sophisticated metadata management system on top of Parquet files. Think of it this way: if Parquet files are the individual books in a library, a table format is the master librarian’s ledger. It doesn’t contain the books themselves, but it meticulously tracks exactly which books make up the current collection, where they are shelved, and the complete history of every book ever added or removed.

This architectural shift unlocks capabilities that transform brittle data swamps into robust data lakehouses:

ACID Transactions: Your Data Safety Net

Without transactional guarantees, data integrity is constantly at risk. In traditional data lakes using raw Parquet, a failed Spark job could leave data in a corrupted, partial state. Concurrent writes from different processes could silently overwrite each other, leading to lost data.

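Iceberg provides ACID guarantees by treating every write as a single atomic transaction. It writes all the new data and metadata files first, and only after they are safely persisted does it attempt the final commit: an atomic swap of a single pointer in the catalog so that it references the new table metadata. Either that swap succeeds and the whole operation becomes visible at once, or it fails and the original state is left untouched. Concurrent writers are handled optimistically: if another job committed first, the swap is rejected and the writer retries against the new state (or fails if the changes genuinely conflict) instead of silently overwriting it.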
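The commit protocol can be sketched as a toy Python model. Everything here is hypothetical: `ToyCatalog`, `append_files`, and the `v0`/`v1` version names are invented for illustration, and a `threading.Lock` stands in for whatever atomic primitive a real catalog uses. The sketch shows two properties: new metadata is invisible until one pointer swap publishes it, and a writer whose starting point has gone stale is rejected rather than allowed to overwrite a concurrent commit.

```python
import threading

class CommitConflict(Exception):
    """Raised when another writer moved the table pointer first."""

class ToyCatalog:
    """Stores one pointer per table: the name of its current metadata version."""

    def __init__(self):
        self._lock = threading.Lock()  # stands in for the catalog's atomic primitive
        self._pointers = {}

    def current(self, table):
        return self._pointers.get(table)

    def swap(self, table, expected, new):
        """Compare-and-swap: move the pointer only if it still equals `expected`."""
        with self._lock:
            found = self._pointers.get(table)
            if found != expected:
                raise CommitConflict(f"{table}: expected {expected!r}, found {found!r}")
            self._pointers[table] = new

def append_files(catalog, metadata_store, table, new_files, max_retries=3):
    """Write new metadata first, then publish it with a single pointer swap."""
    for _ in range(max_retries):
        base = catalog.current(table)
        base_files = metadata_store.get(base, [])
        version = f"v{len(metadata_store)}"  # hypothetical naming scheme
        # Until the swap succeeds, no reader can see this version.
        metadata_store[version] = base_files + list(new_files)
        try:
            catalog.swap(table, base, version)
            return version
        except CommitConflict:
            continue  # another writer won; re-read and rebuild on its version
    raise CommitConflict(f"{table}: gave up after {max_retries} attempts")

catalog, store = ToyCatalog(), {}
append_files(catalog, store, "sales", ["a.parquet"])
stale = catalog.current("sales")                      # a slow writer reads "v0"
append_files(catalog, store, "sales", ["b.parquet"])  # a faster writer commits "v1"
try:
    catalog.swap("sales", stale, "v-stale")           # the slow writer's commit
except CommitConflict as err:
    print("rejected:", err)
print(store[catalog.current("sales")])  # ['a.parquet', 'b.parquet']
```

A failed attempt can leave unreferenced metadata behind in `store`, but because no pointer ever references it, readers never see it; real systems clean up such orphans during maintenance.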

Schema Evolution Without Rewriting Your Entire Dataset

Business requirements change, and your table schemas must evolve. With raw Parquet, schema evolution was an operational nightmare. The Hive-style approach was akin to a simple spreadsheet where data is identified by position: insert a new column at position B and everything after it shifts, silently corrupting queries.

Iceberg works more like a true database: it assigns a permanent internal ID to each column, and the human-friendly name is merely an alias. You can rename a column to ‘Unit_Cost’, reorder columns, or add new ones, and the system still fetches the correct data because it follows the immutable ID rather than a fragile position or name. These changes are fast, metadata-only operations that require no data rewrites.
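The difference between position-based and ID-based resolution fits in a few lines of Python. This is a toy illustration, not Iceberg’s reader: the field IDs, the `sku`/`cost`/`region` names, and the sample values are hypothetical. Iceberg does read a column added after a file was written as null for that file, which the `None` below mimics.

```python
# A data file written under the old schema stores values keyed by field ID.
old_file = {1: "W-100", 2: 4.99}
old_values_in_order = ["W-100", 4.99]  # the same values as a positional reader sees them

# Hypothetical schemas: field 2 was originally named "cost".
schema_v1 = [(1, "sku"), (2, "cost")]
# Evolved: add field 3 ("region") at the front, rename field 2, reorder.
schema_v2 = [(3, "region"), (2, "Unit_Cost"), (1, "sku")]

def read_by_id(data_file, schema):
    """Resolve each column through its permanent ID; unknown IDs read as None."""
    return {name: data_file.get(field_id) for field_id, name in schema}

def read_by_position(values, schema):
    """Resolve columns by ordinal position, as positional readers do."""
    return {name: values[i] if i < len(values) else None
            for i, (_, name) in enumerate(schema)}

print(read_by_id(old_file, schema_v1))
# {'sku': 'W-100', 'cost': 4.99}
print(read_by_id(old_file, schema_v2))
# {'region': None, 'Unit_Cost': 4.99, 'sku': 'W-100'}
print(read_by_position(old_values_in_order, schema_v2))
# {'region': 'W-100', 'Unit_Cost': 4.99, 'sku': None}  <- the SKU lands in "region"
```

The same untouched file reads correctly under both schemas when resolved by ID, while the positional reader silently misplaces data, with no error raised.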

Time Travel: Git for Your Data Infrastructure

Mistakes happen. A bug in an ETL script writes corrupted data. With traditional data lakes, recovery was a manual fire drill. Iceberg’s snapshots provide version control for your data: every commit creates a new snapshot, much like a git commit. Time travel becomes as simple as querying the table as it was last week or last year, provided those snapshots have not yet been expired by routine maintenance. If a bad write occurs, rolling back is a one-line command that moves the table back to the last known good snapshot without rewriting any data.
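Snapshots, time travel, and rollback can be sketched with a small Python model. The class and method names are hypothetical and this is not Iceberg’s API; in Iceberg’s Spark integration, for example, the real counterparts are SQL time-travel clauses (`TIMESTAMP AS OF`, `VERSION AS OF`) and the `rollback_to_snapshot` procedure. The model assumes each snapshot records its parent and the full set of live files, and that time travel walks back through the current snapshot’s ancestors.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    parent_id: Optional[int]
    files: frozenset
    committed_at: int  # toy timestamp (e.g. epoch seconds)

class ToyTable:
    def __init__(self):
        self.snapshots = {}     # every retained snapshot, keyed by ID
        self.current_id = None  # the single pointer that defines "now"

    def commit(self, add=(), remove=(), at=0):
        """Each commit produces a new immutable snapshot; nothing is edited in place."""
        if self.current_id is None:
            base = frozenset()
        else:
            base = self.snapshots[self.current_id].files
        snap = Snapshot(len(self.snapshots) + 1, self.current_id,
                        (base - set(remove)) | set(add), at)
        self.snapshots[snap.snapshot_id] = snap
        self.current_id = snap.snapshot_id
        return snap.snapshot_id

    def current_files(self):
        return self.snapshots[self.current_id].files

    def files_as_of(self, ts):
        """Time travel: walk back through the current snapshot's ancestors."""
        sid = self.current_id
        while sid is not None:
            snap = self.snapshots[sid]
            if snap.committed_at <= ts:
                return snap.files
            sid = snap.parent_id
        return frozenset()

    def rollback_to(self, snapshot_id):
        """Rollback only moves the pointer; no data files are copied or rewritten."""
        self.current_id = snapshot_id

t = ToyTable()
s1 = t.commit(add={"day1.parquet"}, at=100)
s2 = t.commit(add={"day2.parquet"}, at=200)
s3 = t.commit(add={"corrupt.parquet"}, at=300)  # the buggy ETL run
print(sorted(t.files_as_of(250)))  # ['day1.parquet', 'day2.parquet']
t.rollback_to(s2)
print(sorted(t.current_files()))   # ['day1.parquet', 'day2.parquet']
```

Note that the bad snapshot still exists after the rollback; it simply is no longer the current one, which is why its files can be cleaned up later rather than during the incident.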

Performance at Petabyte Scale

Recursively listing millions of Parquet files in cloud storage is a notorious performance bottleneck. Iceberg sidesteps it by tracking every data file in manifest files, which act as its own index and record column-level statistics (such as minimum and maximum values) for each Parquet file. When you filter on specific values, the engine reads this metadata, determines which files cannot possibly contain matching rows, and skips them without ever touching the actual data.
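Here is a minimal sketch of min/max pruning over manifest-style entries. It is a simplification under stated assumptions: real Iceberg manifests key their bounds by column ID and store them in serialized form, whereas this toy keys them by a hypothetical `order_id` column name, and the file paths and ranges are invented.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DataFileEntry:
    """One manifest row: a data file path plus per-column min/max statistics."""
    path: str
    record_count: int
    lower_bounds: dict  # column name -> smallest value in the file
    upper_bounds: dict  # column name -> largest value in the file

def files_to_scan(manifest, column, value):
    """Return only files whose [min, max] range could contain `value`.

    Files without stats for the column are kept, because skipping them could drop rows.
    """
    selected = []
    for entry in manifest:
        lo = entry.lower_bounds.get(column)
        hi = entry.upper_bounds.get(column)
        if lo is None or hi is None or lo <= value <= hi:
            selected.append(entry.path)
    return selected

manifest = [
    DataFileEntry("part-0.parquet", 1000, {"order_id": 1}, {"order_id": 1000}),
    DataFileEntry("part-1.parquet", 1000, {"order_id": 1001}, {"order_id": 2000}),
    DataFileEntry("part-2.parquet", 1000, {"order_id": 2001}, {"order_id": 3000}),
    DataFileEntry("part-3.parquet", 500, {}, {}),  # no stats recorded
]

print(files_to_scan(manifest, "order_id", 1500))
# ['part-1.parquet', 'part-3.parquet']
```

The pruning decision is made entirely from metadata; only the surviving paths would ever be opened. Keeping files that lack statistics is the conservative choice: pruning may only skip files that provably cannot match.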

As AWS notes, “Apache Iceberg offers easy integrations with popular data processing frameworks such as Apache Spark, Apache Flink, Apache Hive, Presto, and more”, which means the same tables can be shared by the many engines a large-scale deployment typically runs.

Real-World Impact: Beyond Theoretical Benefits

The difference between raw Parquet and table-formatted data isn’t academic; it fundamentally changes how organizations operate their data platforms.

The Reliable Data Lakehouse

Consider the classic conflict: business analysts running complex queries against critical sales tables for company-wide BI dashboards while ETL jobs append new data every 15 minutes. Without snapshot isolation, BI queries could read a mix of old and new data, producing silently incorrect reports.

Iceberg’s snapshot isolation resolves this conflict. When a BI query begins, the engine pins the current table snapshot and sees a consistent version of the table for the entire query, without taking any locks. Meanwhile, ETL jobs can commit new snapshots independently without affecting reads already in progress.
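Snapshot isolation boils down to “read the pointer once, at the start.” The toy Python model below assumes each snapshot is an immutable tuple of file paths; the class, method, and file names are hypothetical, and a real engine would of course scan the files’ contents rather than just list them.

```python
class ToyTable:
    """Each snapshot is an immutable tuple of data files; commits only add snapshots."""

    def __init__(self):
        self.snapshots = {0: ()}
        self.current_id = 0

    def commit_append(self, new_file):
        new_id = self.current_id + 1
        self.snapshots[new_id] = self.snapshots[self.current_id] + (new_file,)
        self.current_id = new_id

    def begin_query(self):
        """A query reads the current pointer exactly once, when it starts."""
        return self.current_id

    def scan(self, snapshot_id):
        return self.snapshots[snapshot_id]

table = ToyTable()
table.commit_append("sales-0900.parquet")
pinned = table.begin_query()                 # the BI dashboard query starts
table.commit_append("sales-0915.parquet")    # ETL commits while it runs
table.commit_append("sales-0930.parquet")
print(table.scan(pinned))                    # ('sales-0900.parquet',)
print(len(table.scan(table.begin_query())))  # 3
```

Because snapshots are never modified after creation, the reader needs no lock: whatever the writer does next produces new snapshots, never changes to the one being read.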

Compliant Data Operations

Data privacy regulations like GDPR introduced the “right to be forgotten”, a requirement that was prohibitively expensive to meet in traditional data lakes. To delete a single customer’s records scattered across petabyte-scale tables, you’d have to launch massive rewrite jobs that read terabytes of data just to delete a few kilobytes.

Iceberg supports row-level deletes through an efficient, metadata-driven process (merge-on-read deletes, introduced with version 2 of the table format). It uses file statistics to identify only the Parquet files that can contain the targeted records, then writes lightweight delete files that tell query engines to ignore specific rows. The original data files remain untouched, and physical removal happens later during scheduled maintenance such as compaction.
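The merge-on-read flow can be sketched in Python. This toy models only position deletes, which Iceberg records as (data file path, row position) pairs; the file contents, the `customer_id` column, and the stand-in statistics are hypothetical, and the statistics are supplied by hand where Iceberg would take them from manifests.

```python
# Immutable data files: path -> list of rows (row position = list index).
data_files = {
    "f1.parquet": [{"customer_id": 7, "amount": 10}, {"customer_id": 9, "amount": 5}],
    "f2.parquet": [{"customer_id": 9, "amount": 8}],
    "f3.parquet": [{"customer_id": 1, "amount": 2}, {"customer_id": 3, "amount": 4}],
}
# Stand-in for manifest statistics: (min, max) customer_id per file.
stats = {"f1.parquet": (7, 9), "f2.parquet": (9, 9), "f3.parquet": (1, 3)}

def write_position_deletes(customer_id):
    """Open only files whose stats allow a match; record (path, position) pairs."""
    deletes = set()
    for path, (lo, hi) in stats.items():
        if lo <= customer_id <= hi:
            for pos, row in enumerate(data_files[path]):
                if row["customer_id"] == customer_id:
                    deletes.add((path, pos))
    return deletes

def read_table(delete_file):
    """Merge-on-read: skip any row listed in the delete file."""
    return [row for path, rows in data_files.items()
            for pos, row in enumerate(rows) if (path, pos) not in delete_file]

def compact(delete_file):
    """Later maintenance: rewrite files without deleted rows; drop files left empty."""
    rewritten = {}
    for path, rows in data_files.items():
        kept = [row for pos, row in enumerate(rows) if (path, pos) not in delete_file]
        if kept:
            rewritten[path] = kept
    return rewritten

deletes = write_position_deletes(9)
print(sorted(deletes))                                # [('f1.parquet', 1), ('f2.parquet', 0)]
print([r["customer_id"] for r in read_table(deletes)])  # [7, 1, 3]
```

The delete itself never rewrites a data file (`f3.parquet` is not even opened); only the later `compact` step pays the rewrite cost, on a schedule you choose.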

Streaming-Ready Architecture

Getting fresh data into lakes has always conflicted with maintaining query performance. Frequent commits can create millions of tiny Parquet files, forcing query engines to spend more time opening and closing files than actually reading data.

Iceberg handles frequent small commits gracefully, making data available within seconds while providing tools for asynchronous compaction. This decouples ingestion from optimization, allowing low-latency data availability without sacrificing query performance.
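Compaction starts with planning which small files to merge. Iceberg’s own tooling (for example, the Spark `rewrite_data_files` procedure, whose default strategy is bin-packing) is more sophisticated; the sketch below is a simple greedy grouping under assumed thresholds (`target_mb=128`, `small_mb=32`), applied to a hypothetical burst of 1,000 one-megabyte streaming files.

```python
def plan_compaction(file_sizes_mb, target_mb=128, small_mb=32):
    """Group small files into rewrite batches of roughly `target_mb` each.

    Files of at least `small_mb` are left alone. Greedy, largest-first.
    """
    small = sorted(((size, path) for path, size in file_sizes_mb.items()
                    if size < small_mb), reverse=True)
    groups, current, current_size = [], [], 0
    for size, path in small:
        # Close the current batch if this file would push it past the target.
        if current and current_size + size > target_mb:
            groups.append(current)
            current, current_size = [], 0
        current.append(path)
        current_size += size
    if current:
        groups.append(current)
    return groups

# Hypothetical streaming output: 1,000 files of 1 MB each, plus one healthy file.
files = {f"stream-{i:04d}.parquet": 1 for i in range(1000)}
files["batch-0001.parquet"] = 256
groups = plan_compaction(files)
print(len(groups), [len(g) for g in groups][:2])  # 8 [128, 128]
```

Each group would become one rewritten file, turning 1,000 file opens into 8. Because planning and rewriting run as a separate job, the streaming writer keeps committing small files without waiting for compaction.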

The Performance Paradox: More Metadata Means Faster Queries

There’s a common misconception that table formats add overhead. In practice the opposite is usually true: for any non-trivial table, Iceberg makes queries significantly faster.

The perceived “overhead” is a modest amount of extra metadata, small compared with the data it describes. The problem it solves is the primary performance bottleneck in cloud data lakes: recursively listing millions of files. Iceberg avoids this entirely by using manifest files as a pre-built index, transforming slow file-system operations into fast metadata lookups.

When Raw Parquet Still Makes Sense

Despite the advantages of table formats, raw Parquet remains the right choice in specific scenarios:

- Static datasets that never change after initial creation

- Simple append-only workloads with single writers

- Data archiving where transactional updates aren’t needed

- Prototyping and experimentation before establishing full governance

The choice, then, isn’t about replacing Parquet but about understanding when to add the management layer it lacks.

Implementing the Transition

Migrating from raw Parquet to a table format involves two primary strategies:

In-place migration registers existing Parquet files into the new metadata system without rewriting them. It’s faster and lower-cost but inherits any existing layout problems.

Shadow migration creates a new, optimized table by reading all data from the old structure using CREATE TABLE ... AS SELECT ... operations. It’s slower and more expensive, but results in a cleanly laid-out table from day one.
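The trade-off between the two migration strategies can be sketched as a toy Python model. The function names, the legacy layout (5,000 files of 10 rows each), and the 20,000-rows-per-file target are all hypothetical; in practice, Iceberg’s Spark integration, for instance, offers `migrate` and `add_files` procedures for in-place registration, and shadow migration is a regular CTAS query.

```python
def in_place_migrate(existing):
    """Register existing files as-is: no data is read or rewritten."""
    return {"files": dict(existing), "rows_rewritten": 0}

def shadow_migrate(existing, rows_per_file):
    """CTAS-style: read every row and write freshly sized files."""
    total = sum(existing.values())
    n_files = -(-total // rows_per_file)  # ceiling division
    files = {f"new-{i:05d}.parquet": min(rows_per_file, total - i * rows_per_file)
             for i in range(n_files)}
    return {"files": files, "rows_rewritten": total}

# Hypothetical legacy table: path -> row count, 5,000 tiny files of 10 rows each.
legacy = {f"old-{i:05d}.parquet": 10 for i in range(5000)}
a = in_place_migrate(legacy)
b = shadow_migrate(legacy, rows_per_file=20_000)
print(len(a["files"]), a["rows_rewritten"])  # 5000 0
print(len(b["files"]), b["rows_rewritten"])  # 3 50000
```

In-place migration costs nothing up front but keeps all 5,000 tiny files, which is exactly why compaction afterwards matters; shadow migration pays for a full rewrite once and starts with three well-sized files.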

Common pitfalls include forgetting post-migration optimization (especially compaction of small files) and misconfiguring catalog connections, which is among the most frequent sources of errors.

The Future Is Layered

The evolution is clear: we’re moving from thinking about data as files to thinking about data as managed assets. Parquet remains the foundation, the optimal storage container for analytical data. But open table formats provide the essential management layer that transforms fragile collections of files into robust, governable tables.

As organizations demand both the economic benefits of data lakes and the reliability of data warehouses, the combination of Parquet with table formats like Iceberg becomes not just advantageous but essential. You don’t choose between the foundation and the blueprint; you need both to build a sound structure that can scale reliably into the future.

The question is no longer if you’ll adopt a modern table format, but when and how you’ll leverage it to unlock the true potential of your data infrastructure.