Your Home-Brewed LLM Just Became a Regulated AI Provider

How the EU AI Act's computational threshold accidentally drafted hobbyist developers into regulatory compliance - and why your fine-tuned Llama might need a lawyer

EU regulators now officially care whether your hobbyist Llama fine-tuning experiment falls under the AI Act’s rules for general-purpose AI models.

The accidental regulatory draft of independent developers

Your local Llama-2 13B experiment just made you a regulated entity, whether you wanted to be or not. The EU AI Act’s August 2nd compliance deadline swept up independent developers under its computational threshold of 10^23 FLOPs - a line many didn’t realize their weekend projects had crossed. That RTX 3090 collecting dust in your basement? Suddenly it’s not just crunching numbers but potentially crunching your legal compliance obligations.

The law doesn’t care if you’re running models for fun in your garage or building the next billion-dollar AI startup. If your model exceeds that computational threshold, you’re officially a “provider” with documentation requirements, transparency obligations, and liability exposure. The EU basically handed hobbyists a regulatory participation trophy they never asked for.
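
For a rough sense of where a model sits relative to that line, a common rule of thumb estimates training compute as about 6 × parameters × training tokens. The sketch below applies it. The numbers are assumptions for illustration, not legal measurements: roughly 2 trillion tokens is the pretraining figure Meta reported for Llama 2, and the 50-million-token fine-tune is hypothetical.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


THRESHOLD_FLOPS = 1e23  # the threshold figure quoted in this article

# Pretraining a 13B-parameter model on ~2 trillion tokens (assumed token count).
pretrain_flops = training_flops(13e9, 2e12)

# A hypothetical weekend fine-tune of the same model on 50 million tokens.
finetune_flops = training_flops(13e9, 50e6)

for label, flops in [("pretraining", pretrain_flops), ("fine-tune", finetune_flops)]:
    side = "above" if flops > THRESHOLD_FLOPS else "below"
    print(f"{label}: {flops:.2e} FLOPs ({side} the threshold)")
```

Under these assumptions the base model's pretraining lands around 1.6 × 10^23 FLOPs, while the fine-tune comes in near 4 × 10^18. Which of those two figures counts for a downstream developer is a legal question this estimate cannot answer.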

“Provider” status doesn’t care about your GitHub stars

Let’s cut through the regulatory fog: You don’t need to be Meta or OpenAI to trigger compliance requirements. The EU AI Act’s definition of “provider” explicitly includes anyone who “develops an AI system or has an AI system developed with a view to placing it on the market or putting it into service under their own name or trademark.” That fine-tuned Llama model you published on Hugging Face? It puts you in the regulatory crosshairs.

The compliance burden isn’t trivial. Independent developers now need to:

- Maintain detailed documentation of training data sources and methodologies

- Implement risk management systems that track model behavior

- Create transparency documentation for end users

- Establish incident reporting protocols

- Navigate copyright compliance for training data

The cognitive dissonance is palpable - the same community that built open-source AI tools now faces bureaucratic hurdles that feel designed for corporate entities, not garage hackers.

The FLOP threshold debate nobody saw coming

Here’s where it gets messy: There’s genuine confusion about whether the threshold is actually 10^23 or 10^25 FLOPs. As one skeptical developer pointed out in the r/LocalLLaMA thread, “10^25 is MASSIVE. 10 Yotta flops. Full NVL72 has Exaflop of int/fp4 or 1400 Petaflops.” Their calculation suggests you’d need “almost HUNDRED BILLION times more operations” to cross that limit on consumer hardware.

This ambiguity creates regulatory limbo. Are hobbyists actually compliant by default because their hardware can’t reach the threshold? Or does the theoretical capability of the model itself trigger requirements regardless of deployment hardware? The EU hasn’t provided clear guidance, leaving developers to navigate this legal minefield blindfolded.
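
The gap between the two candidate thresholds is easier to feel as wall-clock time. The sketch below is back-of-the-envelope arithmetic, not a benchmark: it assumes a single RTX 3090-class card with a peak FP32 throughput of about 35.6 TFLOP/s running at 100% utilization, which real training never achieves, so the true figures would be longer.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def years_to_reach(total_flops: float, flops_per_second: float,
                   utilization: float = 1.0) -> float:
    """Years of continuous compute needed to accumulate total_flops."""
    return total_flops / (flops_per_second * utilization) / SECONDS_PER_YEAR


GPU_PEAK_FLOPS = 35.6e12  # assumed peak FP32 rate for one consumer card

years_1e23 = years_to_reach(1e23, GPU_PEAK_FLOPS)
years_1e25 = years_to_reach(1e25, GPU_PEAK_FLOPS)

print(f"10^23 FLOPs: about {years_1e23:,.0f} years on one card")
print(f"10^25 FLOPs: about {years_1e25:,.0f} years on one card")
```

On those assumptions a single card needs roughly 89 years of nonstop work to reach 10^23 FLOPs and roughly 8,900 years to reach 10^25, which is why the two-orders-of-magnitude difference between the figures matters so much to anyone working on consumer hardware.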

Open source innovation hits regulatory walls

The real tragedy here isn’t the paperwork - it’s how these requirements strangle the open-source innovation that built today’s AI ecosystem. Independent developers who once freely shared model weights and training methodologies now face potential liability for how others use their creations. The collaborative spirit that powered early AI development is colliding with a regulatory framework designed for closed corporate systems.

Consider the chilling effect on model sharing: If you’re legally responsible for how your fine-tuned model gets used, would you still publish it? The EU AI Act’s risk-based approach makes sense for medical diagnostics or autonomous vehicles, but applying it to hobbyist projects creates what one Reddit commenter called “a prison of their own making” - except you didn’t build the prison, Brussels did.

Compliance theater for the solo developer

The most absurd aspect? The compliance requirements assume organizational structures that don’t exist for independent developers. Risk management systems, quality assurance protocols, and human oversight mechanisms are designed for companies with compliance teams - not solo coders working nights and weekends.

As the ACE + Company analysis shows, proper AI governance requires “shared, ongoing risk assessment” and “dynamic transparency” - resources most hobbyists simply don’t have. The regulatory burden scales with organizational complexity, yet the law treats a garage developer the same as Google DeepMind.

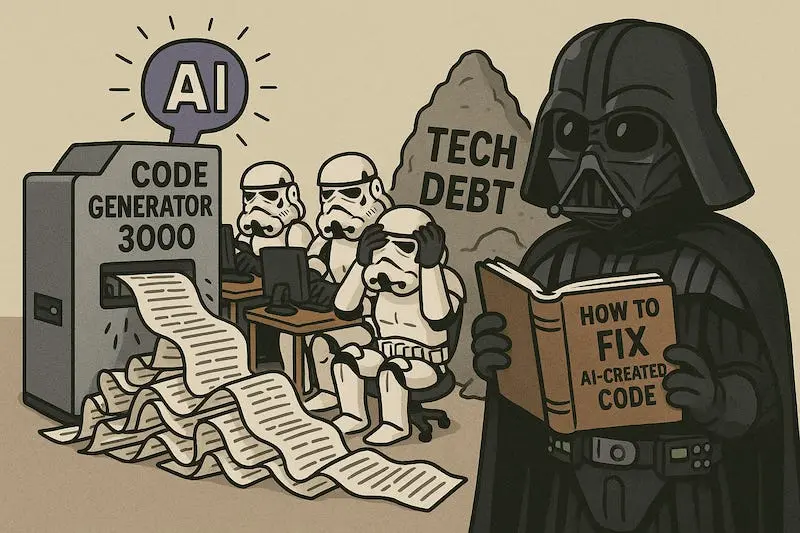

The only thing scaling faster than models is regulatory paperwork

The EU AI Act’s well-intentioned framework for high-risk systems has accidentally drafted hobbyists into a compliance regime they can’t possibly navigate. While established companies can absorb these costs, independent developers face an impossible choice: abandon their projects, operate in regulatory gray areas, or drown in paperwork that has nothing to do with their actual technical work.

The most telling comment from the Reddit thread wasn’t about technical details but existential dread: “Any private individual who actually attempts to comply with this completely untested regulation because of some vague worry about whatever is living in a prison of their own making.” The regulatory framework designed to protect people might end up protecting only those with legal teams - killing the grassroots innovation that built modern AI in the first place.