Your RAG System is Lying to You: The Uncomfortable Truth About Production Metrics

When high-stakes RAG deployments fail silently, teams discover that traditional accuracy metrics miss everything that matters

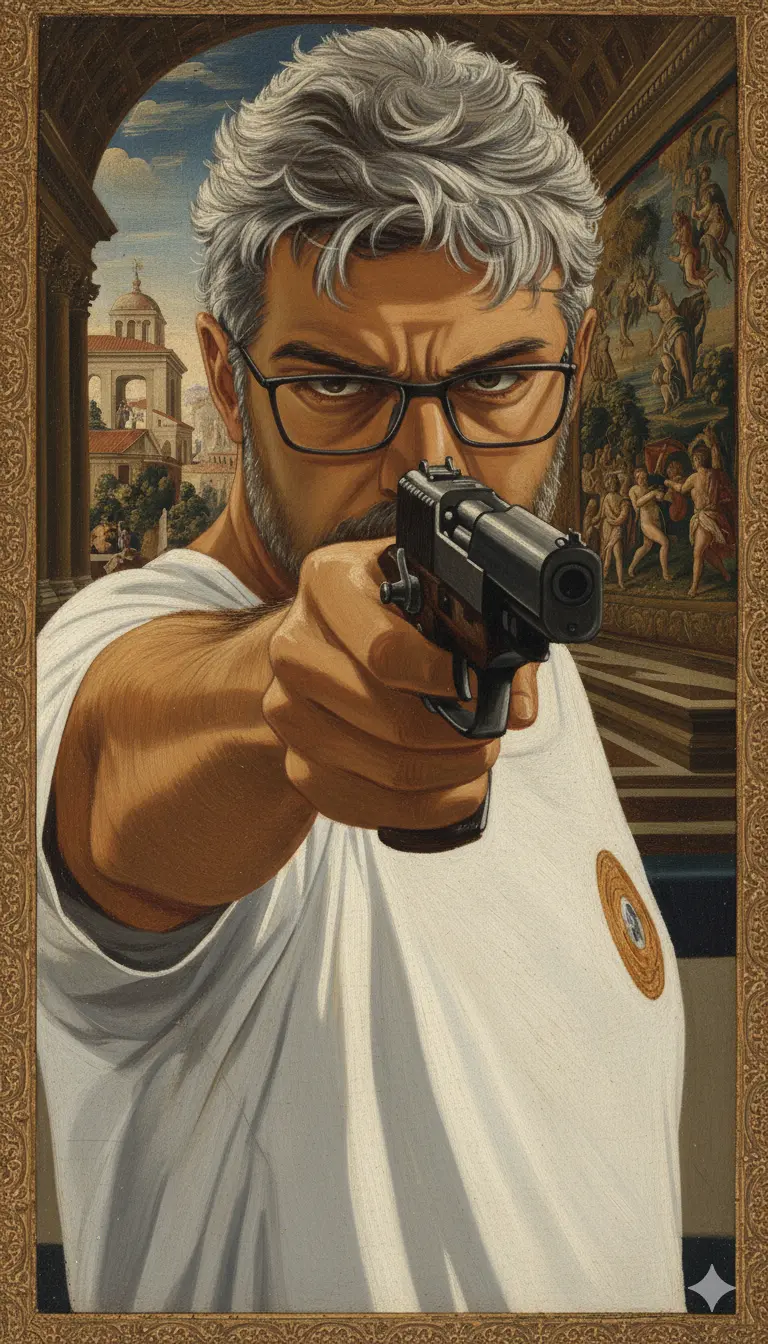

Your RAG system might be confidently delivering wrong answers 23% of the time, and your current metrics probably aren’t telling you. That’s the brutal reality facing production teams today, where the gap between lab performance and real-world reliability has become a chasm.

The False Confidence Trap

Most teams deploy RAG systems with the same evaluation toolkit they used in development: retrieval accuracy, answer relevance scores, and maybe some basic hallucination detection. These metrics look great on dashboards but miss the critical failures that burn users and destroy trust.

Consider the team building a customer support RAG where “the cost of a false positive is very high”: sending incorrect information could mean lost customers or regulatory violations. They discovered the hard way that traditional metrics like cosine similarity and token probabilities failed catastrophically when the model hallucinated answers based on retrieved context. The system would return high confidence scores while delivering completely fabricated information.

The core problem? RAG failures aren’t always about retrieving wrong documents. Sometimes the system retrieves perfectly relevant context but generates answers that contradict it, or worse, blends retrieved facts with parametric knowledge to create plausible-sounding fabrications.

The Metrics That Actually Matter (And Why Nobody Tracks Them)

When you move beyond academic benchmarks into production, you need metrics that reflect real business risk. Developer forums consistently highlight several under-measured failure modes:

Faithfulness scoring isn’t just a nice-to-have; it’s existential for enterprise deployments. The research paper “Probing Latent Knowledge Conflict for Faithful Retrieval-Augmented Generation” reports that LLMs experience internal knowledge conflicts in which they prioritize parametric memory over retrieved evidence, even when the context directly contradicts their training data.

Cross-document contradiction handling becomes critical when your RAG system aggregates information from multiple sources. Production systems regularly encounter conflicting information across documents, and traditional metrics don’t capture whether systems detect and resolve these conflicts appropriately.

Citation hallucination represents one of the most dangerous failure modes. Systems correctly retrieve relevant documents but incorrectly attribute claims to them, creating a veneer of credibility while delivering misinformation. As one production engineer noted, “Presence of citations appears to indicate proper grounding, and human reviewers rarely verify every citation against source documents.”
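One way to spot-check citations automatically is to compare each cited claim against the passage it points to. The sketch below uses plain token overlap as a crude stand-in for a real entailment check. The function names, the `(claim, doc_id)` input shape, and the 0.6 threshold are illustrative assumptions, not part of any tool discussed here.

```python
import re


def _tokens(text):
    """Lowercased alphanumeric tokens of a string, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def citation_support(claim, cited_text):
    """Fraction of the claim's tokens that also appear in the cited passage."""
    claim_tokens = _tokens(claim)
    if not claim_tokens:
        return 0.0
    return len(claim_tokens & _tokens(cited_text)) / len(claim_tokens)


def flag_unsupported_citations(claims, documents, min_support=0.6):
    """Return the (claim, doc_id) pairs whose cited document is missing
    or shares too few tokens with the claim.

    claims: list of (claim_text, doc_id) pairs extracted from an answer.
    documents: dict mapping doc_id to the retrieved passage text.
    min_support: hypothetical threshold; tune it on labeled examples.
    """
    flagged = []
    for claim_text, doc_id in claims:
        source = documents.get(doc_id)
        if source is None or citation_support(claim_text, source) < min_support:
            flagged.append((claim_text, doc_id))
    return flagged
```

Token overlap misses paraphrases and will pass a claim that reuses the source's words while negating them, so the flagged list is better treated as a queue for human review than as a verdict.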

Temporal staleness detection remains almost universally overlooked. Most teams don’t track whether retrieved documents contain information that was accurate when indexed but has since become outdated, leading to confidently delivered obsolete information.
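A staleness check can be as simple as comparing a per-document verification timestamp against a maximum age at retrieval time. The sketch below assumes each retrieved document carries a timezone-aware `last_verified` field in its metadata. That field name and the `stale_documents` helper are hypothetical, and documents without a timestamp are conservatively treated as stale.

```python
from datetime import datetime, timedelta, timezone


def stale_documents(retrieved, max_age, now=None):
    """Return the ids of retrieved documents that are older than max_age.

    retrieved: iterable of dicts with an 'id' key and, ideally, a
               timezone-aware 'last_verified' datetime (assumed field name).
    max_age: timedelta after which content is considered unverified.
    now: injectable clock for testing; defaults to the current UTC time.
    """
    now = now or datetime.now(timezone.utc)
    stale = []
    for doc in retrieved:
        last_verified = doc.get("last_verified")
        # Missing timestamps count as stale: we cannot show they are fresh.
        if last_verified is None or now - last_verified > max_age:
            stale.append(doc["id"])
    return stale
```

Tracking the share of answers that rest on at least one stale document gives a trend line for this failure mode, which most dashboards lack.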

The Tooling Landscape: Promises and Pitfalls

The RAG evaluation ecosystem has exploded with solutions, each claiming to solve different aspects of the reliability problem. Let’s examine the major players:

RAGAS offers LLM-based scoring for retrieval quality and answer faithfulness, but developers report skepticism about the approach itself. As one engineer bluntly put it, stakeholders “do not trust the whole concept of judging the output of an LLM with another LLM.”

Deepchecks takes a more comprehensive approach with “dual-focus evaluation (simultaneously measures both accuracy and safety)” plus automatic annotation and weak-segment detection. Their platform attempts to bridge the gap between retrieval and generation metrics.

Traceloop focuses on traceability across the entire pipeline, capturing “the origin, flow, and quality of information in RAG systems.” This proves essential for debugging timing failures where “retrieval completes after generation timeouts trigger, causing systems to generate responses without retrieved context.”

Open RAG Eval stands out with research-backed metrics like UMBRELA for retrieval quality and AutoNugget for key information capture. It requires no predefined correct answers, a significant advantage for production systems dealing with constantly evolving knowledge bases.

Beyond Aggregate Metrics: The Case for Multi-Layer Monitoring

Production RAG evaluation requires moving beyond single-number scores to layered monitoring that captures different failure modes:

Retrieval-layer monitoring tracks standard metrics like recall@k and precision but adds dependency tracking between embedding models and indexes. Teams often discover “embedding drift without index synchronization” where “document indexes using older embeddings become increasingly misaligned” with query embeddings.
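One way to make that dependency explicit is to store the embedding model's identity alongside the index and compare it with the query encoder before serving. This is a minimal sketch under that assumption. `EmbeddingSpec` and `check_index_sync` are hypothetical names, and a real deployment would read both specs from its own index metadata and model registry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EmbeddingSpec:
    """Identity of the encoder that produced a set of vectors (assumed schema)."""
    model_name: str
    model_version: str
    dimensions: int


def check_index_sync(query_spec, index_spec):
    """Return a list of human-readable mismatches; an empty list means in sync."""
    problems = []
    for field in ("model_name", "model_version", "dimensions"):
        query_value = getattr(query_spec, field)
        index_value = getattr(index_spec, field)
        if query_value != index_value:
            problems.append(f"{field}: query={query_value!r} index={index_value!r}")
    return problems
```

Running a check like this at deploy time and on a schedule turns silent drift into an alert, though it only catches version mismatches, not gradual quality decay within one model version.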

Generation-layer evaluation must measure faithfulness, citation accuracy, and contradiction handling separately from retrieval quality. Production systems need to detect when “models correctly identify that documents were retrieved but incorrectly attribute content to wrong sources.”

Pipeline-level observability captures coordination failures like “retrieval timing attacks” where “timing issues between retrieval completion and generation initialization create silent failures.” Systems might function correctly under test conditions but fail when “infrastructure strain changes timing characteristics.”
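A guard against this failure is to make the generation step refuse to run when retrieval has not returned in time, rather than falling through with empty context. The sketch below assumes `retrieve` and `generate` are plain callables supplied by the caller. Both names, the result dictionary shape, and the timeout value are illustrative.

```python
import concurrent.futures


def answer_with_retrieval_guard(query, retrieve, generate, timeout_s):
    """Generate only if retrieval finished in time and returned context.

    retrieve: callable(query) -> list of passages (assumed interface).
    generate: callable(query, passages) -> answer text (assumed interface).
    """
    executor = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = executor.submit(retrieve, query)
    try:
        passages = future.result(timeout=timeout_s)
    except concurrent.futures.TimeoutError:
        # Record the timeout explicitly instead of generating without context.
        return {"status": "abstained", "reason": "retrieval_timeout", "answer": None}
    finally:
        # Do not block the request on a slow retrieval thread.
        executor.shutdown(wait=False)
    if not passages:
        return {"status": "abstained", "reason": "empty_context", "answer": None}
    return {"status": "answered", "reason": None, "answer": generate(query, passages)}
```

Logging the `reason` field makes timing failures countable, so a rise in `retrieval_timeout` under load shows up in monitoring instead of in user complaints.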

The Human-in-the-Loop Reality Check

Despite advances in automated evaluation, many production teams are returning to human judgment as the ultimate arbiter. One team working with high-stakes applications described their process of “getting samples of questions and answers labeled by humans who answer these questions in practice to see which metric will correlate with the human answer.”

This approach highlights a critical insight: the most reliable metrics are those that correlate with human judgment in your specific domain. General-purpose evaluation scores often miss domain-specific failure patterns that human experts immediately spot.
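Checking which automated metric tracks human judgment can be done with a rank correlation over a labeled sample. The sketch below implements Spearman correlation with the standard library only. The function names and the input shapes (a dict of metric scores and a parallel list of human labels) are assumptions made for illustration.

```python
def _ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def _pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no ranking information
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return _pearson(_ranks(x), _ranks(y))


def rank_metrics_by_human_agreement(metric_scores, human_labels):
    """Sort metric names by how well their scores track the human labels."""
    results = {name: spearman(scores, human_labels)
               for name, scores in metric_scores.items()}
    return sorted(results.items(), key=lambda item: item[1], reverse=True)
```

With a small labeled sample the correlations are noisy, so it is worth repeating the comparison as more human labels accumulate before committing to a single metric.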

The same team developed a pragmatic workflow that routes uncertain answers to human agents, acknowledging that “if I manage to answer 50% of the users’ queries correctly and the other 50% of queries go to an agent, the system reduces the workload of the agent by 50%.” This acceptance of partial automation with human oversight represents a maturity often missing from RAG deployment discussions.

Building Trust Through Conservative Abstention

Perhaps the most counterintuitive insight from production deployments is that the most reliable RAG systems are often the ones that answer fewer questions. Teams building high-stakes applications increasingly prioritize “abstention under uncertainty” over raw answer rate.

One developer summarized this philosophy: “Optimize for abstention under uncertainty. Measure retrieval recall@k, reranker uplift, and cite coverage. Enforce answer-only-if-supported rules.” This conservative approach acknowledges that in many business contexts, “No answer” is infinitely better than “Wrong answer.”

Systems designed this way implement multiple fallback mechanisms:

- Low retrieval similarity scores route the query to human agents

- Confidence scoring triggers review workflows

- Contradiction detection flags uncertain responses

- Freshness checks prevent stale information delivery
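The four fallbacks above can be combined into a single routing decision. The sketch below is one possible arrangement, not a description of any particular team's system. The `AnswerSignals` fields, the action names, and both thresholds are assumptions, and the upstream detectors that would populate the signals are out of scope.

```python
from dataclasses import dataclass


@dataclass
class AnswerSignals:
    top_similarity: float      # best retrieval similarity for the query
    confidence: float          # any calibrated answer-confidence score
    contradiction_found: bool  # output of a contradiction detector
    sources_fresh: bool        # output of a freshness check


def decide(signals, min_similarity=0.75, min_confidence=0.8):
    """Return (action, reasons).

    action is 'answer', 'review' (answer held for review), or
    'route_to_human' (no automated answer is attempted).
    """
    if signals.top_similarity < min_similarity:
        return "route_to_human", ["low_similarity"]
    reasons = []
    if signals.confidence < min_confidence:
        reasons.append("low_confidence")
    if signals.contradiction_found:
        reasons.append("contradiction")
    if not signals.sources_fresh:
        reasons.append("stale_sources")
    if reasons:
        return "review", reasons
    return "answer", []
```

Returning the reasons alongside the action keeps the abstention rate explainable: a team can see whether answers are being withheld mostly for weak retrieval, low confidence, conflicts, or stale sources.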

The Path Forward: Context-Aware Evaluation

The future of RAG evaluation lies in context-aware metrics that understand the domain-specific consequences of failure. A wrong answer in customer support has different business impact than a wrong answer in medical diagnosis or financial advising.

Teams are beginning to implement “cost-weighted accuracy” metrics that weight errors by their business impact rather than treating all mistakes equally. They’re building evaluation suites that test failure modes specific to their domains rather than relying on generic benchmarks.
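One plausible definition of cost-weighted accuracy is one minus the share of total error cost that was actually incurred. The sketch below assumes each evaluated answer has a category and a correctness label, and that the team has assigned an error cost per category. The formula, the function name, and the example costs are illustrative rather than a standard.

```python
def cost_weighted_accuracy(outcomes, error_costs):
    """1 - (cost of the errors made / cost if every answer were wrong).

    outcomes: list of (category, is_correct) pairs.
    error_costs: dict mapping category to the cost of one wrong answer.
    """
    total_cost = sum(error_costs[category] for category, _ in outcomes)
    if total_cost == 0:
        raise ValueError("no outcomes with non-zero cost to evaluate")
    incurred = sum(error_costs[category]
                   for category, is_correct in outcomes if not is_correct)
    return 1 - incurred / total_cost


# Three cheap questions answered correctly, one expensive one answered wrong.
example = [("billing", False), ("smalltalk", True),
           ("smalltalk", True), ("smalltalk", True)]
costs = {"billing": 10, "smalltalk": 1}
score = cost_weighted_accuracy(example, costs)  # 1 - 10/13, about 0.23
```

Plain accuracy on the same sample is 75%, which shows how an unweighted score can look healthy while the single most expensive error dominates the real risk.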

The most sophisticated teams are moving beyond static evaluation to continuous monitoring that detects “negative interference from retrieval overload” where “excessive retrieved content introduces noise that degrades generation by confusing models” or “recursive retrieval loops where systems repeatedly retrieve the same content without making progress.”
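A recursive retrieval loop can be detected by watching whether each retrieval step surfaces any document the pipeline has not already seen. The sketch below is a minimal version of that idea. The `detect_retrieval_loop` name, the list-of-id-lists input, and the `patience` parameter are assumptions for illustration.

```python
def detect_retrieval_loop(rounds, patience=2):
    """Return the index of the step at which a loop is declared, else None.

    rounds: list of retrieval steps, each an iterable of document ids.
    patience: number of consecutive steps with no new documents
              that counts as "not making progress".
    """
    seen = set()
    stalled_steps = 0
    for step, doc_ids in enumerate(rounds):
        new_ids = set(doc_ids) - seen
        seen |= new_ids
        stalled_steps = 0 if new_ids else stalled_steps + 1
        if stalled_steps >= patience:
            return step
    return None
```

In a live pipeline the same counter can run inside the retrieval loop and stop it early, which also caps the amount of repeated context passed to the generator.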

The Uncomfortable Truth

After examining dozens of production deployments and evaluation approaches, the uncomfortable truth emerges: we’re measuring the wrong things. Traditional accuracy metrics optimize for laboratory conditions while missing the subtle failures that destroy user trust in production.

The metrics that matter (faithfulness, contradiction handling, citation accuracy, temporal relevance) require more sophisticated measurement, but they prevent the catastrophic failures that undermine RAG adoption. Teams that invest in these nuanced evaluation approaches discover that their systems become not just more reliable, but more trustworthy.

As one engineer working on high-stakes applications concluded: “Trust matters more than coverage.” In the race to deploy RAG systems, that’s the metric we should all be optimizing for.