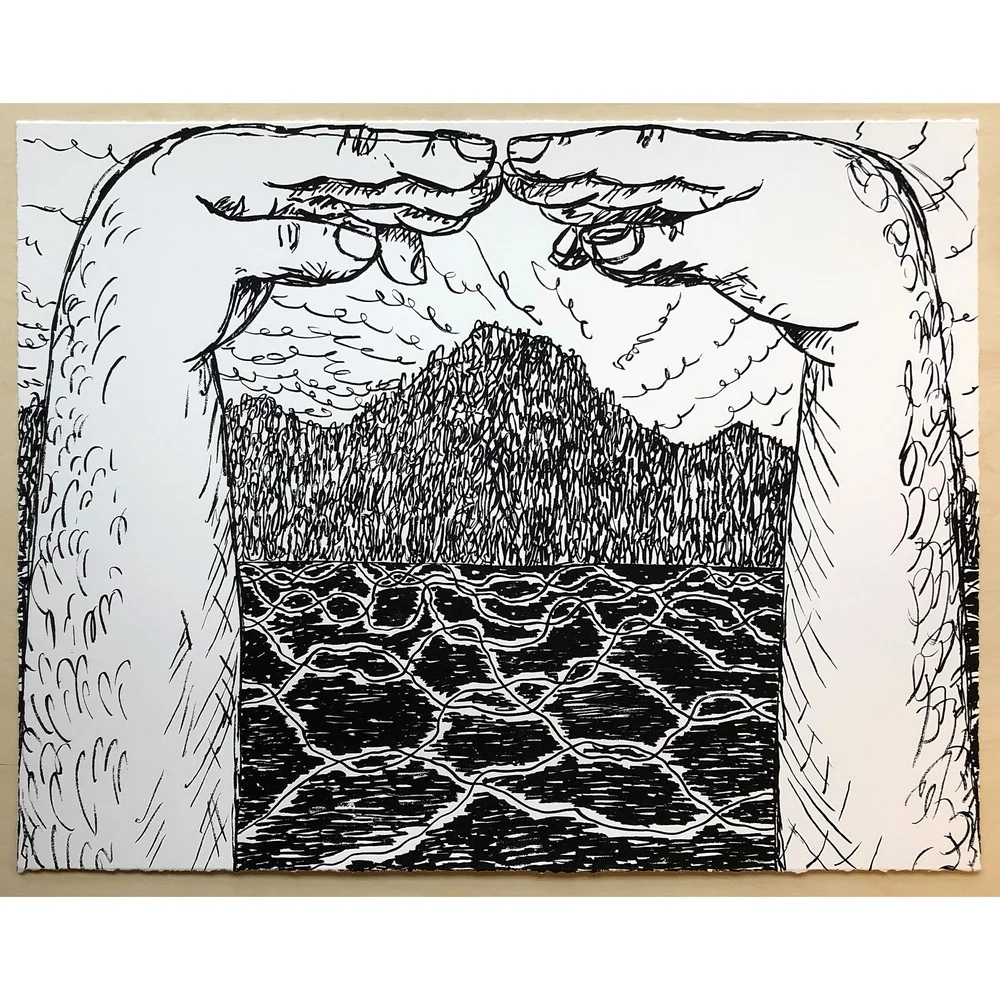

Scaling the AI Mountain: Why GPT-5, Gemini, and Claude 3.5 May Be Hitting the Ceiling

An analysis of AI scaling challenges, economic constraints, and emerging research directions in foundation model development.

The race to build larger foundation models is showing signs of structural strain. What was once a rapid progression of capabilities now resembles a prolonged effort with diminishing progress.

The “Great AI Leap” Is Stalling

“AI-generated lawsuits are flooding courts faster than chatbots can say ‘I’m not a lawyer.’”

This observation highlights a fundamental contradiction in current AI development: while public narratives emphasize breakthroughs, technical metrics suggest performance gains are plateauing. The recent launches of GPT-5, Gemini, and Claude 3.5 have generated significant attention, yet their benchmark improvements over prior versions remain marginal.

OpenAI’s compute demand has increased significantly, but each additional GPU hour yields progressively smaller returns.

Core Problem: Scaling Laws Meet Economic Reality

The “AI scaling law” (more compute + more data = better models) has dominated development for years. Three interrelated factors are now undermining this approach:

- Diminishing returns on model size: GPT-5’s benchmark results show a 0.3% improvement on MMLU over GPT-4 despite an $8 billion training budget. Gemini’s internal documentation (reported by eWeek) acknowledges a performance slowdown consistent with the limits of pre-training that Sutskever warned about at NeurIPS 2024.

- Compute cost escalation: OpenAI’s financial reports reveal $4 billion in quarterly inference costs, exceeding its $3 billion in training expenditures. This roughly 1.3:1 ratio of inference to training spend raises sustainability concerns for current R&D models.

- Data saturation: Ilya Sutskever’s public statements describe the internet as “the fossil fuel of AI,” with recent claims that we’ve reached “peak data.” Without new high-quality data sources, model improvements risk shifting from understanding to pattern memorization.

Taken together, these factors point to a fundamental shift: the traditional scaling model is no longer delivering proportional returns.
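To make “diminishing returns” concrete, here is a minimal sketch of a power-law loss curve. It assumes loss falls as `floor + a * compute^(-b)`; the function names and the constants `a`, `b`, and `floor` are hypothetical values chosen for illustration, not figures fitted to GPT-5, Gemini, or any other real model.

```python
# Illustrative only: the constants are assumptions, not fitted to any real model.

def loss(compute: float, a: float = 10.0, b: float = 0.05, floor: float = 1.7) -> float:
    """Hypothetical power-law loss curve: floor + a * compute^(-b)."""
    return floor + a * compute ** (-b)


def gains_per_doubling(start: float, doublings: int) -> list[float]:
    """Loss reduction obtained from each successive doubling of compute."""
    gains = []
    c = start
    for _ in range(doublings):
        gains.append(loss(c) - loss(2 * c))
        c *= 2
    return gains


if __name__ == "__main__":
    # Starting budget of 1e21 FLOPs is an arbitrary placeholder.
    for i, g in enumerate(gains_per_doubling(1e21, 5), start=1):
        print(f"doubling {i}: loss drops by {g:.4f}")
```

Under this assumed curve, every doubling of compute costs twice as much as the last but buys a smaller loss reduction, shrinking by a constant factor of `2^(-b)` each time. That is the shape of the problem the three factors above describe.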

1. GPT-5’s Marginal Gains

An analysis by The Verge documents GPT-5’s performance on code completion and reasoning benchmarks. Despite a 500B parameter count and a $6 billion training budget, the model’s HumanEval score improved by only 2 points over GPT-4.

2. Gemini’s Performance Adjustments

Google’s engineering blog disclosed a strategic pivot after Gemini failed to meet latency targets despite 30% higher FLOP counts. The technical analysis attributes this to “diminishing returns on scaling” and increased reliance on synthetic data pipelines.

3. Claude 3.5’s Development Pause

Anthropic delayed the release of Claude 3.5 Opus after internal testing revealed minimal safety alignment improvements. The engineering lead cited “the scaling wall” and emphasized the need for architectural innovations over parameter count increases.

4. New Scaling Rules from RL Research

UC Berkeley’s “Compute-Optimal Scaling for Value-Based Deep RL” paper introduces the concept of TD-overfitting, where larger batch sizes negatively impact smaller models. The key finding: optimal batch size depends on model capacity rather than fixed scaling rules. This challenges the assumption that larger models inherently require larger training batches.

The new scaling rules demonstrate that beyond certain model sizes, training methods must be fundamentally adjusted to avoid compute waste.
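One way to act on this finding is to pick the batch size separately for each model size instead of applying a single rule. The sketch below is a hypothetical illustration, not the paper’s method: the helper `best_batch_per_model` and the error values in `placeholder_results` are invented placeholders that only mimic the qualitative pattern described above.

```python
# Hypothetical sketch: choose a batch size per model size from sweep results.
# The numbers are placeholders for illustration, not results from the paper.

def best_batch_per_model(results: dict[tuple[int, int], float]) -> dict[int, int]:
    """For each model width, return the batch size with the lowest validation error."""
    best: dict[int, tuple[int, float]] = {}
    for (width, batch), err in results.items():
        if width not in best or err < best[width][1]:
            best[width] = (batch, err)
    return {width: batch for width, (batch, _) in best.items()}


# Keys are (model width, batch size); values are made-up validation TD errors.
placeholder_results = {
    (256, 64): 0.42, (256, 256): 0.47, (256, 1024): 0.55,
    (2048, 64): 0.40, (2048, 256): 0.33, (2048, 1024): 0.30,
}

if __name__ == "__main__":
    print(best_batch_per_model(placeholder_results))
```

With these placeholder values the small model does best at the smallest batch while the large model does best at the largest, which is the kind of capacity-dependent choice a fixed batch-size rule would miss.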

The assumption that larger models will automatically solve AI challenges is being tested by economic and technical constraints. The next phase of progress requires architectural innovation, data curation, and compute optimization, not just parameter count increases. While major players adjust their scaling strategies, alternative approaches are gaining traction through cost-effective implementations and specialized applications. The industry’s next breakthrough will likely emerge from rethinking training dynamics rather than simply expanding existing models.