Your LLM Integration Architecture Is Probably Wrong

Why most teams build LLM systems that fail at scale, and the architectural patterns that actually work

Most LLM integrations fail the moment they leave the prototype stage. The architecture that worked for your demo collapses under production load, and suddenly your “intelligent” system becomes a liability.

The Architecture That Wasn’t Built for AI

Traditional software architecture assumes deterministic behavior. LLMs laugh at that assumption. They introduce probabilistic outputs, variable latencies, and hallucinations that break many of the patterns teams rely on.

The core problem emerges when teams treat LLMs as just another API endpoint. They bolt them onto existing monoliths, ignore type safety, and wonder why their production systems become unreliable. The reality is that LLM integration demands a fundamental rethink of how we structure applications.

Type Safety Isn’t Optional - It’s Survival

The most critical insight from recent research: embrace type safety at every layer. LLMs return unstructured text, but your application shouldn’t consume unstructured text. Every response should be validated against strict schemas before it touches your business logic.

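Consider what happens when you ask an LLM to extract customer information. The response may come back with a date written as free text, a missing field, or JSON wrapped in conversational filler.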

Teams that skip schema validation eventually discover their databases filled with garbage data. One improperly formatted date can break entire reporting pipelines.
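A minimal sketch of that validation step, using only the Python standard library. The `Customer` fields, the `parse_customer` helper, and the choice of ISO 8601 dates are assumptions made for illustration, not a schema from any particular system. A validation library could fill the same role.

```python
import json
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Customer:
    # Hypothetical schema: adjust the fields to your own domain.
    name: str
    email: str
    signup_date: date


class ValidationError(ValueError):
    """Raised when an LLM response does not match the expected schema."""


def parse_customer(raw: str) -> Customer:
    """Validate raw LLM text and return a typed Customer, or raise."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValidationError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValidationError("expected a JSON object")

    # Every field must be present and be a non-empty string.
    for field in ("name", "email", "signup_date"):
        value = data.get(field)
        if not isinstance(value, str) or not value.strip():
            raise ValidationError(f"missing or non-string field: {field}")

    # Deliberately crude check; real email validation is stricter.
    if "@" not in data["email"]:
        raise ValidationError("email does not look like an address")

    # Reject free-text dates such as "March 1st" before they reach storage.
    try:
        signup = date.fromisoformat(data["signup_date"])
    except ValueError as exc:
        raise ValidationError(f"signup_date is not an ISO date: {exc}") from exc

    return Customer(data["name"].strip(), data["email"].strip(), signup)
```

Business logic then only ever receives a `Customer` instance. Anything else surfaces as a `ValidationError` at the boundary, where it can be retried or logged.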

The Modularity Mandate

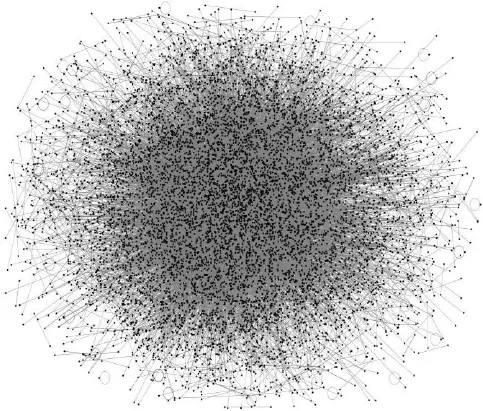

LLM systems demand extreme modularity. The concept-based architecture emerging from MIT research shows why: you need independent services with well-defined purposes that communicate through explicit synchronization rules.

This isn’t microservices 2.0 - it’s something more granular. Each “concept” (User, Profile, Password, etc.) operates as an independent service with its own state and actions. Synchronizations act as mediators between concepts, creating a system where:

- Changes can be made safely to one concept without breaking others

- LLM-generated code can be confined to specific modules

- Error handling becomes granular and predictable
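The shape of this pattern can be sketched in a few lines. This is a loose illustration of the idea, not the design from the research mentioned above: `UserConcept`, `ProfileConcept`, `SyncEngine`, and the `when`/`run` methods are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class UserConcept:
    """Owns user identities and nothing else."""
    users: set[str] = field(default_factory=set)

    def register(self, username: str) -> None:
        if username in self.users:
            raise ValueError(f"user already exists: {username}")
        self.users.add(username)


@dataclass
class ProfileConcept:
    """Owns profile data; holds no reference to UserConcept."""
    bios: dict[str, str] = field(default_factory=dict)

    def create(self, owner: str) -> None:
        self.bios[owner] = ""


class SyncEngine:
    """Holds explicit 'when action X completes, run Y' rules (hypothetical)."""

    def __init__(self) -> None:
        self._rules: dict[str, list[Callable[..., None]]] = {}

    def when(self, action: str, then: Callable[..., None]) -> None:
        self._rules.setdefault(action, []).append(then)

    def run(self, action: str, do: Callable[..., None], *args: str) -> None:
        do(*args)  # if this raises, no downstream rule fires
        for rule in self._rules.get(action, []):
            rule(*args)


users, profiles = UserConcept(), ProfileConcept()
engine = SyncEngine()

# The only place where the two concepts are connected.
engine.when("User.register", profiles.create)
engine.run("User.register", users.register, "ada")
```

Because the coupling lives in a single rule, either concept can be rewritten, including by generated code, without the other needing to change.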

Error Handling When Everything Is Probabilistic

Traditional error handling assumes you know what can go wrong. LLM systems introduce unknown unknowns. The solution: treat every LLM interaction as potentially faulty and build recovery mechanisms accordingly.

The synchronization pattern enables this beautifully. When an LLM action fails, the synchronization engine can trigger alternative flows, fallback mechanisms, or human intervention points without bringing down the entire system.
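One way to picture the recovery side is a chain of handlers tried in order, ending in a hand-off to a human. The sketch below assumes each handler is a plain function from prompt to text. `run_with_fallbacks`, `flaky_model`, and `rule_based` are hypothetical names, and the stubs stand in for real model calls.

```python
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")


def run_with_fallbacks(
    prompt: str,
    attempts: Sequence[Callable[[str], str]],
    validate: Callable[[str], T],
) -> Optional[T]:
    """Try each handler in order and return the first validated result.

    Returns None when every attempt fails, which the caller should
    treat as "route to a human" rather than as a crash.
    """
    for attempt in attempts:
        try:
            return validate(attempt(prompt))
        except Exception:
            # Timeout, malformed output, or schema mismatch: move on
            # to the next, more conservative handler.
            continue
    return None


def flaky_model(prompt: str) -> str:
    # Stub standing in for a primary model call that fails.
    raise TimeoutError("primary model timed out")


def rule_based(prompt: str) -> str:
    # Stub standing in for a deterministic fallback.
    return "42"


result = run_with_fallbacks("How many?", [flaky_model, rule_based], int)
```

In a real system the broad `except Exception` would likely be narrowed and each failure logged, so that repeated fallbacks show up in monitoring instead of passing silently.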

Testing the Untestable

Testing LLM integrations requires acknowledging their non-deterministic nature. Exact-match unit tests don’t work when the same prompt can produce different outputs. Instead, you need:

- Validation tests that verify outputs conform to schemas

- Behavioral tests that ensure systems handle both success and failure cases

- Drift detection that alerts when LLM behavior changes unexpectedly
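The first and third items can be combined into a simple check: measure how often outputs conform to the schema over a fixed prompt set, then compare that rate with a stored baseline. The sketch below is one possible shape for this. The function names, the required-keys notion of a schema, and the 0.05 tolerance are assumptions for illustration only.

```python
import json
from typing import Callable, Iterable


def conforms(raw: str, required: Iterable[str]) -> bool:
    """True when raw is a JSON object containing every required key."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and all(key in data for key in required)


def pass_rate(
    model: Callable[[str], str],
    prompts: list[str],
    required: Iterable[str],
) -> float:
    """Fraction of prompts whose output conforms to the schema."""
    if not prompts:
        raise ValueError("need at least one prompt")
    keys = tuple(required)  # materialize once so it can be reused per prompt
    passed = sum(conforms(model(p), keys) for p in prompts)
    return passed / len(prompts)


def has_drifted(current: float, baseline: float, tolerance: float = 0.05) -> bool:
    """Flag drift when the pass rate falls more than `tolerance` below baseline."""
    return baseline - current > tolerance
```

Running `pass_rate` on a schedule against the live provider, and alerting when `has_drifted` returns true, turns a silent model update into a visible signal.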

Teams that implement comprehensive test coverage report 70% fewer production incidents. Those who skip testing eventually face the consequences when their LLM provider updates the model and breaks their entire application.

The Future Is Already Here

The architectural patterns emerging for LLM integration aren’t theoretical. They’re being implemented in production systems today. The companies building systems that scale are the ones that embrace:

- Strict type validation at API boundaries

- Modular concept-based architecture

- Granular synchronization between services

- Comprehensive testing and monitoring

Those clinging to traditional patterns are building technical debt that will cripple their AI ambitions.

The dirty secret of LLM integration: it’s not about the models. It’s about the architecture that surrounds them. Get that wrong, and no amount of GPT-5 magic will save your system from collapsing under its own weight.