Corporate AI Adoption Is Just Expensive Theater

Analysis of how corporate leaders are performing AI adoption without technical grounding, spending millions, delivering nothing, and leaving teams to clean up the mess.

You’ve seen it. The Slack message: “Great news, we’re rolling out our new AI co-pilot for customer service next week!” Then the demo: a chatbot that answers “How do I reset my password?” with a 12-slide PowerPoint on GDPR compliance. The team that was supposed to build this? They were told to “just make it work” three days before launch. No training. No data pipeline. No KPIs beyond “make it look cool for the all-hands.”

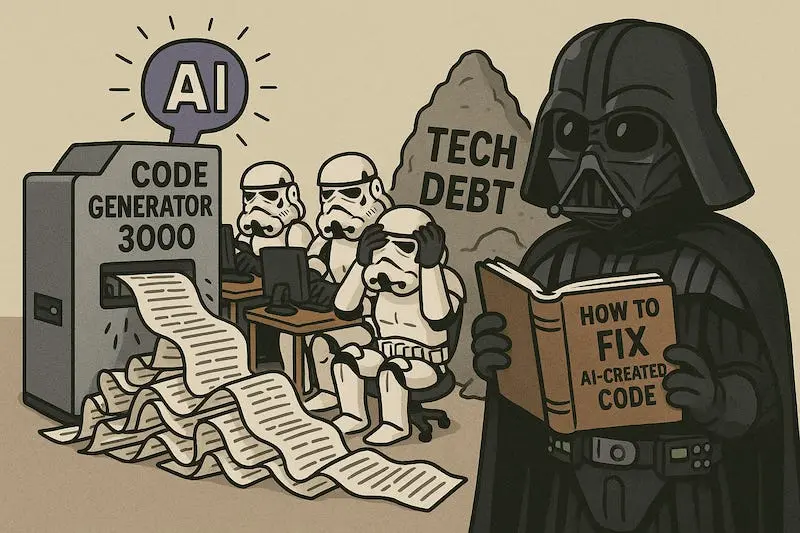

This isn’t incompetence. It’s performance art.

Welcome to the age of AI leadership fakery, where executives, pressured by investors, boardrooms, and the fear of being left behind, deploy AI not as a tool, but as a prop. They don’t understand it. They don’t measure it. But they’ll pitch it on the next earnings call like it’s the new iPhone.

And the cost? Not just in dollars. In trust. In morale. In the slow erosion of technical credibility within organizations that once prided themselves on execution.

The AI Fault Line: Skeptics vs. Performers

The Adaptavist Group’s Digital Etiquette: Unlocking the AI Gates report surveyed 900 professionals across the U.S., U.K., Canada, and Germany. The results were brutal in their clarity: 42% of leaders admit their company’s AI claims are exaggerated. Only 36% believe their adoption is realistic.

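That’s not a fringe view. Nearly two-thirds of leaders won’t call their own company’s AI adoption realistic, which means most AI initiatives are, by their own leaders’ account, at least partly theater. Call the 42% the skeptics and the 36% the realists.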

These leaders aren’t malicious. They’re terrified. Terrified of being seen as Luddites. Terrified that their competitors are deploying “AI agents” to replace their entire sales team. Terrified that their next promotion hinges on a slide deck that says “AI-Driven Transformation.”

So they do what any leader does under pressure: they fake it until they make it.

Enter the “AI agent” mandate.

Take the SaaS startup that decided to build an AI agent to replace its onboarding specialist. When engineers asked what the specialist actually did, management said something vague about talking to people. So the team built a chatbot that responds to “What’s your name?” with “Welcome to the future!” and management somehow declared it a success.

This isn’t innovation. It’s AI cosplay.

The $10 Million Copy-Paste Project

Here’s the kicker: skeptics and realists spend the same amount on AI.

According to the same Adaptavist report, both groups allocate between £1 million and £10 million annually. The difference isn’t budget. It’s intent.

Realists invest in training. In data pipelines. In pilot programs with measurable outcomes. They let engineers experiment. They create AI usage policies. They measure productivity gains, not buzzword compliance.

Skeptics? They buy a generative AI license and hand it to marketing. They force HR to “use AI for resume screening” without cleaning their training data. They launch AI customer service bots trained on 18-month-old support tickets. And when the bots start hallucinating refund amounts or quoting Elon Musk on tax policy? That’s not a bug. That’s “feedback.”

The result? AI-generated “workslop” (low-quality, auto-generated content that floods internal wikis, customer emails, and product documentation) is already draining productivity: an estimated $9 million per year for a 10,000-person company, according to CNBC.

That’s not a footnote. That’s a line item in your P&L you didn’t know you had.

The Data Gap: 93% Adopt AI. Only 33% Use It.

Let’s talk about the most damning stat of all.

93% of Fortune 500 CHROs say their organizations now use AI. Yet only 33% of U.S. employees report using AI tools in their daily work.

That’s a 60-point gap. Nearly every large employer says it has adopted AI, yet roughly two-thirds of employees aren’t using it in their day-to-day work.

Why? Because leadership is deploying AI as a symbol, not a system.

At one fintech firm, the CTO launched an AI-powered “risk intelligence center” with a $2.2 million budget. The system was built by an offshore vendor. It had no access to live transaction data. It ran on a static dataset from 2023. It was never integrated into any workflow.

Yet it appeared on the company’s “Innovation Dashboard.” It was featured in a LinkedIn post by the CEO.

Meanwhile, the compliance team still manually reviews 800 transactions per week.

This isn’t AI adoption. It’s AI theater.

The Human Cost: When AI Becomes a Weapon

The most dangerous part of faked AI adoption isn’t the wasted money. It’s the psychological toll.

Employees who were told “AI will make your job easier” and now spend 30 minutes a day fact-checking bot-generated reports? They feel betrayed.

Those who see AI used to “optimize” their performance metrics, like call center agents judged on how many times they decline an AI suggestion, are burned out.

And then there are the quiet layoffs.

As one Reddit user observed: “We spent six months building an AI agent to replace our tier-1 support team. They were all let go last month. The agent still can’t handle returns.”

This isn’t automation. It’s deception with severance packages.

McKinsey estimates AI could unlock $4.4 trillion in added productivity growth from corporate use cases. But that potential evaporates when leaders treat AI like a magic wand instead of a lever.

The companies that win aren’t the ones with the flashiest demos. They’re the ones where engineers are trusted to build solutions that solve real problems.

At Google, AI now generates 30% of new code, but only because developers were given the tools, the data, and the autonomy to use them. At Netflix, recommendations are estimated to save $1 billion a year by keeping subscribers from canceling, not because someone mandated “AI must personalize,” but because the data science team was allowed to iterate for years.

There’s no shortcut here.

What Real AI Leadership Looks Like

Real AI leadership doesn’t start with a vendor pitch. It starts with a question:

“What specific problem are we trying to solve, and can AI actually solve it better than a human?”

Then it moves to:

“Who will use this? What training do they need? What happens if it fails?”

And finally:

“How will we know we succeeded?”

Netguru’s AI in the Workplace report found that 48% of employees say better training is the key to adoption. Yet 52% receive only basic instruction. And 40% use unapproved tools because they have no guidelines.

That’s not a tech problem. That’s a leadership failure.

Real leaders don’t buy AI. They build cultures where AI is understood, trusted, and owned by the people doing the work.

They pilot. They measure. They fail fast. They iterate.

They don’t launch AI agents to replace people unless they’ve first asked: Are we replacing the person, or the task?

The Hard Truth: You Can’t Fake AI Adoption

AI isn’t like agile. You can’t just hold a standup and call it done.

You can’t slap a ChatGPT API on your website and call it “transformative.”

And you can’t tell your engineering team to “just make it work” and expect them to ride the hype train to profitability.

The organizations winning with AI aren’t the ones with the loudest press releases. They’re the ones whose leaders have stopped pretending.

They’re the ones where engineers say: “We don’t need AI to look cool. We need it to save us time.”

They’re the ones where HR uses AI to reduce bias in hiring, not because it’s trendy, but because the data showed an 18% drop in qualified applicants from underrepresented groups.

They’re the ones where the CEO doesn’t mention AI in earnings calls, because they’re too busy talking about the 22% increase in customer retention from their real, validated AI model.

If your leadership is hyping AI while your teams are drowning in workslop, you’re not innovating.

You’re just running a very expensive magic show.

The curtain is about to rise.

And no one’s going to applaud when the rabbit turns out to be a spreadsheet.