Why treating AI data as transactional tables beats unstructured blob chaos

The AI revolution is being built on a foundation of technical debt that’s costing companies millions in wasted GPU cycles and engineering time. While Big Tech pours $320 billion into AI infrastructure this year alone, most teams are still wrestling with the same fundamental problem: treating AI data like a pile of unstructured blobs rather than transactional assets.

The Unstructured Data Nightmare

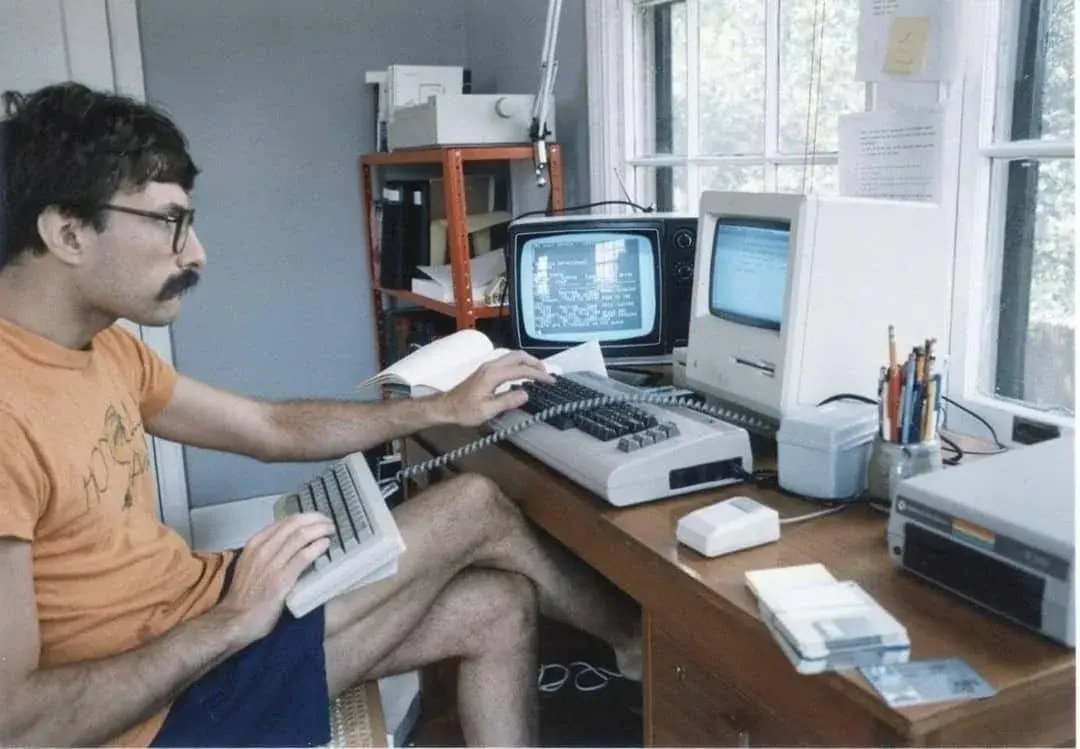

Walk into any AI startup’s data pipeline, and you’ll likely find what one consultant describes as “just a pile of big text files”: files scattered across object storage, metadata living in separate systems, and orchestrators that can’t “see” the data they’re processing. This approach might look simple on paper, but in practice it creates a cascade of inefficiencies.

The core problem? When data exists as unstructured blobs, orchestrators can’t optimize batching based on actual data characteristics. Workers pull random chunks without understanding token lengths, expected latency, or resource requirements. The result: GPUs sit idle while waiting for poorly batched workloads, retries become clumsy affairs, and debugging turns into forensic archaeology.

Tables Over Blobs: The Paradigm Shift

The emerging solution flips traditional AI data management on its head. Instead of treating data as unstructured files, forward-thinking teams are adopting a table-first approach in which everything (samples, runs, evaluations, artifacts) lives in transactional tables with a clear schema and lineage tracking.

This isn’t just theoretical. Developers report using PostgreSQL as a control plane with tables for samples, runs, evals, and jobs. Workers pull rows using SELECT ... FOR UPDATE SKIP LOCKED ordered by priority and token estimates, batching by token bins and committing per row. This approach keeps GPUs consistently busy and makes retries clean and predictable.
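PostgreSQL’s `FOR UPDATE SKIP LOCKED` lets a worker lock the rows it claims while other workers skip past those rows instead of blocking on them. The sketch below is an in-memory simulation of that claim pattern, not PostgreSQL code: a `threading.Lock` per row stands in for the row lock, and the `Job` fields (`priority`, `est_tokens`) are hypothetical names chosen for illustration.

```python
import threading
from dataclasses import dataclass, field


@dataclass
class Job:
    # Hypothetical row shape, mirroring a "jobs" table with priority and token estimate.
    job_id: int
    priority: int
    est_tokens: int
    status: str = "pending"
    lock: threading.Lock = field(default_factory=threading.Lock, repr=False)


def claim_batch(jobs, batch_size):
    """Claim up to batch_size pending rows, skipping rows another worker has locked.

    Rows are visited in (priority desc, est_tokens asc) order, playing the role
    of the ORDER BY clause in a SELECT ... FOR UPDATE SKIP LOCKED query.
    """
    claimed = []
    for job in sorted(jobs, key=lambda j: (-j.priority, j.est_tokens)):
        if job.status != "pending":
            continue
        # Non-blocking acquire: if the row is already locked, skip it instead of waiting.
        if job.lock.acquire(blocking=False):
            if job.status == "pending":  # re-check now that we hold the lock
                claimed.append(job)
                if len(claimed) == batch_size:
                    break
            else:
                job.lock.release()
    return claimed


def commit_row(job, ok):
    # Per-row commit: record this row's outcome on its own, then release its lock.
    job.status = "done" if ok else "pending"
    job.lock.release()


jobs = [Job(1, 0, 900), Job(2, 5, 300), Job(3, 5, 100), Job(4, 1, 50)]
worker_a = claim_batch(jobs, 2)    # jobs 3 and 2: priority 5, fewer tokens first
worker_b = claim_batch(jobs, 2)    # skips the locked jobs 3 and 2, gets 4 and 1
commit_row(worker_a[0], ok=True)   # job 3 is done
commit_row(worker_a[1], ok=False)  # job 2 returns to "pending" for a clean retry
```

Because a failed row simply returns to `pending`, a retry is just the next claim; no other row is touched.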

The key insight: treating datasets as tables provides a foundation for working with multimodal data while maintaining transactional integrity. As TractoAI explains, “When a data blob is tightly coupled with the metadata, you cannot efficiently operate on a metadata level without touching the data itself. This leads to unnecessary IO overhead.”
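Decoupling metadata from blobs means keeping metadata in rows that point at the data rather than embedding it in the data. A minimal sketch using Python’s built-in `sqlite3`, assuming a hypothetical `samples` table whose `blob_uri` column references objects in external storage (the URIs are made up): selecting work by modality and token count reads only metadata rows, and no blob is opened.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE samples (
        sample_id  INTEGER PRIMARY KEY,
        modality   TEXT NOT NULL,
        num_tokens INTEGER NOT NULL,
        blob_uri   TEXT NOT NULL  -- hypothetical pointer into object storage
    )
    """
)
conn.executemany(
    "INSERT INTO samples (sample_id, modality, num_tokens, blob_uri) VALUES (?, ?, ?, ?)",
    [
        (1, "video", 4096, "s3://bucket/videos/1.mp4"),
        (2, "text", 512, "s3://bucket/text/2.jsonl"),
        (3, "video", 1024, "s3://bucket/videos/3.mp4"),
    ],
)

# Select work purely on metadata: only the URIs of matching blobs come back,
# so the (possibly huge) objects themselves are never read here.
rows = conn.execute(
    "SELECT sample_id, blob_uri FROM samples "
    "WHERE modality = ? AND num_tokens <= ? ORDER BY sample_id",
    ("video", 2048),
).fetchall()
print(rows)  # [(3, 's3://bucket/videos/3.mp4')]
```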

Why Traditional Object Storage Falls Short

S3-compatible and POSIX-like storage systems lack an interface for atomic operations across multiple objects. This limitation becomes critical when processing interconnected datasets that can’t be merged, such as “cooking videos” plus “recipes” or “coding agent prompts” plus “agent trajectories.”

In the world of OLTP systems, the main way to change interconnected data is through transactions. But in AI workflows, teams accept client-side workarounds as normal, even though they wouldn’t tolerate similar limitations in traditional database systems.
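To make the contrast concrete, here is a sketch of what a transaction buys in the “cooking videos” plus “recipes” case: both rows are written inside one transaction, so a crash between the two writes leaves neither behind. It uses `sqlite3` purely for illustration; the table names, URIs, and the simulated failure are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE videos  (video_id INTEGER PRIMARY KEY, uri TEXT NOT NULL);
    CREATE TABLE recipes (recipe_id INTEGER PRIMARY KEY,
                          video_id  INTEGER NOT NULL REFERENCES videos(video_id),
                          text      TEXT NOT NULL);
    """
)


def add_video_with_recipe(conn, video_id, uri, recipe_text, fail_midway=False):
    # `with conn` commits on success and rolls back on any exception,
    # so the two inserts land together or not at all.
    with conn:
        conn.execute("INSERT INTO videos VALUES (?, ?)", (video_id, uri))
        if fail_midway:
            raise RuntimeError("simulated crash between the two writes")
        conn.execute(
            "INSERT INTO recipes (video_id, text) VALUES (?, ?)", (video_id, recipe_text)
        )


add_video_with_recipe(conn, 1, "s3://bucket/videos/1.mp4", "Boil pasta for 9 minutes.")
try:
    add_video_with_recipe(conn, 2, "s3://bucket/videos/2.mp4", "Whisk eggs.", fail_midway=True)
except RuntimeError:
    pass  # the half-written video 2 was rolled back

print(conn.execute("SELECT video_id FROM videos").fetchall())   # [(1,)]
print(conn.execute("SELECT COUNT(*) FROM recipes").fetchone())  # (1,)
```

With plain object storage, the equivalent guarantee has to be rebuilt on the client side, which is exactly the workaround the paragraph above describes.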

The contrast is striking: while Meta builds 129k-GPU clusters spanning multiple data center buildings, most teams struggle with basic data consistency because their infrastructure can’t handle atomic updates across related datasets.

Data-Aware Orchestration Changes Everything

The real power of table-first infrastructure emerges when orchestrators understand the data they’re processing. Instead of treating batch inference as a black box, data-aware schedulers can:

- Group workloads by token length to optimize GPU memory usage

- Retry failed processing at the row level rather than restarting entire jobs

- Implement snapshot isolation so readers see consistent dataset versions

- Track lineage through simple foreign key relationships
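The first capability, grouping by token length, can be sketched in a few lines. The bin edges and per-batch token budget here are hypothetical parameters, not values from any particular system. Rows of similar length share a batch, so little GPU memory is spent on padding.

```python
from bisect import bisect_left
from collections import defaultdict


def token_bin_batches(rows, bin_edges, max_batch_tokens):
    """Group (row_id, num_tokens) pairs into batches of similar length.

    bin_edges: sorted upper bounds for each bin, e.g. [128, 512, 2048] (illustrative).
    max_batch_tokens: budget per batch, counted as longest row * batch size,
    since every row in a batch is padded to the longest one (illustrative).
    """
    bins = defaultdict(list)
    for row_id, num_tokens in rows:
        # bisect_left returns the index of the first edge >= num_tokens;
        # rows longer than the last edge land in an overflow bin.
        bins[bisect_left(bin_edges, num_tokens)].append((row_id, num_tokens))

    batches = []
    for bin_index in sorted(bins):
        batch, longest = [], 0
        for row_id, num_tokens in sorted(bins[bin_index], key=lambda r: r[1]):
            new_longest = max(longest, num_tokens)
            # Close the current batch if adding this row would exceed the padded budget.
            if batch and new_longest * (len(batch) + 1) > max_batch_tokens:
                batches.append(batch)
                batch, new_longest = [], num_tokens
            batch.append(row_id)  # a single over-budget row still gets its own batch
            longest = new_longest
        if batch:
            batches.append(batch)
    return batches


rows = [(1, 100), (2, 120), (3, 500), (4, 90), (5, 400), (6, 3000)]
print(token_bin_batches(rows, [128, 512, 2048], 900))  # [[4, 1, 2], [5], [3], [6]]
```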

This approach makes GPU utilization far more predictable. One developer summed up the effect of switching to table-first storage as “fewer mystery bugs, better GPU use, cleaner comparisons.”

The MapReduce Renaissance for AI Workloads

Interestingly, the solution to modern AI data challenges might lie in revisiting Big Data principles. The data-aware approach essentially brings MapReduce semantics to AI workloads, where the scheduling framework understands the inner logic of processing.

As TractoAI demonstrates, treating GPU batch inference as a straightforward map operation allows schedulers to subdivide processing chunks based on actual performance. This paradigm enables automatic scaling and efficient failure recovery, something raw compute orchestration frameworks struggle with.

When a data-aware scheduler encounters a failed worker, it can redistribute that worker’s specific input rows across the remaining workers. Data-agnostic schedulers, by contrast, typically have to re-run entire jobs, wasting compute on rows that had already been processed successfully.
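A minimal sketch of that recovery step, assuming the scheduler already tracks which row IDs each worker was assigned and which rows have committed results (the `assignments` shape and worker names are hypothetical). Only the failed worker’s unfinished rows move, round-robin, to the survivors.

```python
from itertools import cycle


def redistribute(assignments, completed, failed_worker):
    """Reassign a failed worker's unfinished rows across the remaining workers.

    assignments: dict of worker name -> list of row ids (hypothetical shape).
    completed: set of row ids whose results are already committed.
    Returns a new dict without the failed worker; the input is not modified.
    """
    survivors = [w for w in assignments if w != failed_worker]
    if not survivors:
        raise RuntimeError("no workers left to take over")
    new_assignments = {w: list(assignments[w]) for w in survivors}
    # Rows the failed worker already committed are never recomputed.
    orphaned = [r for r in assignments[failed_worker] if r not in completed]
    for row_id, worker in zip(orphaned, cycle(survivors)):
        new_assignments[worker].append(row_id)
    return new_assignments


assignments = {"w1": [1, 2, 3], "w2": [4, 5, 6], "w3": [7, 8, 9]}
print(redistribute(assignments, completed={4}, failed_worker="w2"))
# {'w1': [1, 2, 3, 5], 'w3': [7, 8, 9, 6]}
```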

Implementation Patterns That Actually Work

So what does table-first infrastructure look like in practice? Successful implementations share several characteristics:

Unified Namespace: All data artifacts (raw datasets, refined data, synthetic data, evaluation results) live in the same transactional namespace. This eliminates the friction of copying data between different storage systems.

Schema Enforcement: Even for multimodal data, enforcing schema at the outer table level provides guardrails that prevent data drift and make exploration easier.

Transactionality: Dataset-level transactions provide snapshot isolation for readers and prevent dirty state exposure during distributed writing.

Versioning as a First-Class Citizen: New dataset versions become new tables, often reusing previous data chunks, with lineage tracking built into the metadata.
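The transactionality and versioning patterns above fit together if every dataset version is an immutable list of chunk IDs and a commit only swaps a single “current version” pointer. This in-memory sketch assumes that design; `DatasetCatalog` and its methods are hypothetical names, not any product’s API. A reader holding a snapshot keeps seeing it while a writer commits, and each new version records its parent for lineage.

```python
import threading
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class DatasetVersion:
    version: int
    chunk_ids: Tuple[str, ...]  # immutable: chunks are shared between versions, never edited
    parent: Optional[int]       # lineage: the version this one was derived from


class DatasetCatalog:
    """Hypothetical catalog: versions are immutable and a commit swaps one pointer."""

    def __init__(self):
        self._lock = threading.Lock()
        self._versions = {0: DatasetVersion(0, (), None)}
        self._current = 0

    def snapshot(self) -> DatasetVersion:
        # Readers receive a frozen version; later commits cannot change it.
        with self._lock:
            return self._versions[self._current]

    def commit(self, added_chunks, removed_chunks=()) -> DatasetVersion:
        # Derive the new version from the current one, reusing unchanged chunks,
        # and publish it under the lock so readers never see a partial state.
        with self._lock:
            base = self._versions[self._current]
            removed = set(removed_chunks)
            kept = tuple(c for c in base.chunk_ids if c not in removed)
            new = DatasetVersion(base.version + 1, kept + tuple(added_chunks), base.version)
            self._versions[new.version] = new
            self._current = new.version
            return new


catalog = DatasetCatalog()
v1 = catalog.commit(["chunk-a", "chunk-b"])
reader_view = catalog.snapshot()  # a reader pins version 1
v2 = catalog.commit(["chunk-c"], removed_chunks=["chunk-a"])
print(reader_view.chunk_ids)      # ('chunk-a', 'chunk-b')
print(v2.chunk_ids, v2.parent)    # ('chunk-b', 'chunk-c') 1
```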

Teams using this approach typically combine transactional databases for metadata control with scalable storage for actual data objects, using foreign keys to maintain relationships. The orchestrator becomes data-aware, making scheduling decisions based on actual data characteristics rather than treating everything as opaque blobs.
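A sketch of foreign-key lineage, again using `sqlite3` only because it ships with Python. The `samples`/`runs`/`evals` schema is a hypothetical minimal version of such tables, and the model name and URIs are made up. Tracing a score back to the run and the stored sample behind it becomes a join rather than a search through file names.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(
    """
    CREATE TABLE samples (sample_id INTEGER PRIMARY KEY, blob_uri TEXT NOT NULL);
    CREATE TABLE runs    (run_id INTEGER PRIMARY KEY, model_name TEXT NOT NULL);
    CREATE TABLE evals   (
        eval_id   INTEGER PRIMARY KEY,
        run_id    INTEGER NOT NULL REFERENCES runs(run_id),
        sample_id INTEGER NOT NULL REFERENCES samples(sample_id),
        score     REAL NOT NULL
    );
    INSERT INTO samples VALUES (1, 's3://bucket/s/1.json'), (2, 's3://bucket/s/2.json');
    INSERT INTO runs    VALUES (10, 'model-a');
    INSERT INTO evals   VALUES (100, 10, 1, 0.9), (101, 10, 2, 0.4);
    """
)

# Lineage query: which model and which stored sample produced each low score?
low = conn.execute(
    """
    SELECT e.eval_id, r.model_name, s.blob_uri
    FROM evals e
    JOIN runs r    ON r.run_id = e.run_id
    JOIN samples s ON s.sample_id = e.sample_id
    WHERE e.score < 0.5
    """
).fetchall()
print(low)  # [(101, 'model-a', 's3://bucket/s/2.json')]

# With foreign keys enforced, an eval that points at a nonexistent run is rejected.
try:
    conn.execute("INSERT INTO evals VALUES (102, 99, 1, 0.7)")
except sqlite3.IntegrityError as exc:
    print("rejected:", exc)
```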

The Business Case Beyond Technical Elegance

With AI infrastructure spending projected to reach $500 billion annually by 2030, efficiency isn’t just a technical concern; it’s a business imperative. Poor GPU utilization translates directly into wasted capital, while inefficient data pipelines slow time-to-market for AI products.

The table-first approach offers tangible ROI through:

- Better GPU utilization through intelligent batching

- Faster iteration cycles with clean retries and versioning

- Reduced debugging time with clear lineage and schema

- Improved collaboration through standardized data interfaces

The Infrastructure Divide

We’re witnessing a growing divide in AI infrastructure sophistication. On one side, companies like Meta are building gigawatt-scale clusters with advanced liquid cooling and custom silicon. On the other, most teams struggle with basic data management problems that table-first approaches could solve.

This divide matters because infrastructure limitations directly constrain AI capabilities. Teams wrestling with blob storage and basic orchestration struggle to implement reinforcement learning, supervised fine-tuning, or complex reasoning workflows effectively, all areas where Meta and other leaders are making significant investments.

Getting Started Without Rewriting Everything

The good news: adopting table-first principles doesn’t require abandoning existing infrastructure. Many successful implementations use a hybrid approach where:

- Raw data remains in object storage

- Metadata and control planes move to transactional databases

- Orchestrators become data-aware through simple schema integration

- Existing processing frameworks gain transactional capabilities through wrapper layers
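A wrapper layer can be as thin as a decorator around an existing per-row function. This sketch uses hypothetical names (`transactional_rows`, a `results` table) with `sqlite3` standing in for the metadata database: the user’s function is unchanged, each row’s result is committed in its own transaction, and a failure marks only that row so a later pass can retry it.

```python
import functools
import json
import sqlite3


def transactional_rows(conn):
    """Hypothetical wrapper: run an existing per-row function with per-row commits."""

    def decorate(process_row):
        @functools.wraps(process_row)
        def run(rows):
            for row_id, payload in rows:
                try:
                    output = process_row(payload)  # unchanged user code
                except Exception as exc:
                    # Record the failure for this row only, then keep going.
                    with conn:
                        conn.execute(
                            "INSERT OR REPLACE INTO results VALUES (?, 'failed', ?)",
                            (row_id, str(exc)),
                        )
                    continue
                # Each success is committed on its own, so a crash loses at most
                # the row currently in flight.
                with conn:
                    conn.execute(
                        "INSERT OR REPLACE INTO results VALUES (?, 'done', ?)",
                        (row_id, json.dumps(output)),
                    )
        return run

    return decorate


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE results (row_id INTEGER PRIMARY KEY, status TEXT, body TEXT)")


@transactional_rows(conn)
def count_words(text):
    if not text:
        raise ValueError("empty input")
    return {"words": len(text.split())}


count_words([(1, "hello table world"), (2, ""), (3, "retry me")])
print(conn.execute("SELECT row_id, status FROM results ORDER BY row_id").fetchall())
# [(1, 'done'), (2, 'failed'), (3, 'done')]
```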

Start by identifying the biggest pain points in your current workflow (likely batching, retries, or debugging) and implement table-first solutions for those specific areas. The incremental approach often reveals benefits quickly, building momentum for broader adoption.

The Future Is Structured

As AI workloads grow more complex and resource-intensive, the case for table-first infrastructure becomes overwhelming. The companies that treat AI data as first-class transactional assets will build more reliable, efficient, and scalable systems. Those clinging to unstructured blob approaches will waste increasing amounts of time and money on preventable problems.

The infrastructure revolution isn’t just about bigger GPUs or faster networks; it’s about smarter data management. And right now, the smartest approach is treating your AI data as what it actually is: structured information that deserves the same transactional guarantees we’ve demanded from databases for decades.

The question isn’t whether you’ll adopt table-first infrastructure, but how much money you’ll waste before you do.