Scaling to 10 Million Users on Azure: The Microservices Mistake Nobody Admits

Why premature microservices and over-engineering doom Azure scaling efforts, with real lessons from hitting 10 million users

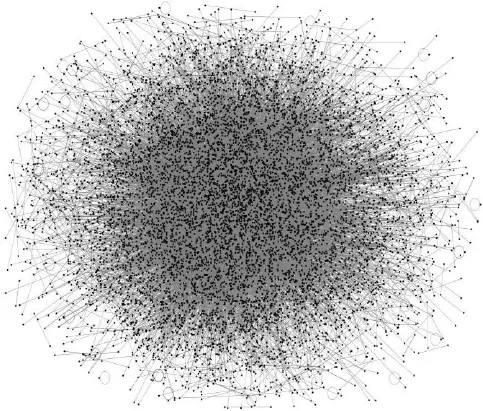

Scaling applications to 10 million users on Azure isn’t about flashy new tools; it’s about resisting the urge to over-engineer early. Most teams dive into microservices and complex architectures before they even hit 1,000 users, only to find themselves drowning in technical debt. The truth? Scaling to millions starts with doing absolutely nothing. Stack Overflow ran on a single database server until it had 10 million monthly visitors, a fact that still shocks developers convinced they need Kubernetes from day one. Read more about Stack Overflow’s architecture.

The Single-User Myth: Why Simplicity Wins

Let’s be brutally honest: scaling your Azure app to 10 million users starts with doing nothing. For the first 1,000 users, the best move is to deploy a modular monolith on Azure App Service with a single SQL database. Yes, really. Microsoft’s own guidance points the same way, echoing the old line (usually attributed to Donald Knuth) that premature optimization is the root of all evil. A modular monolith gives you natural boundaries while keeping related code together, which makes it far easier to split later when pain points emerge.

Avoid the trap of building for imagined scale. When your app has one user, adding Kubernetes or microservices is like installing a rocket engine on a toy car: it looks cool but serves no purpose. At this stage, focus on statelessness: ensure your web servers don’t store session data locally, and keep it in a shared store instead. This way, when you scale out later, user sessions won’t break. Microsoft’s Well-Architected Framework isn’t just theory; it’s a practical checklist you can use daily. And yes, add Application Insights from day one. Monitoring isn’t optional; it’s your early warning system.
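To make the statelessness point concrete, here is a minimal sketch. The `SessionStore` interface, the `InMemorySharedStore` stand-in, and the `WebInstance` class are all hypothetical names invented for illustration; in a real deployment the shared store would be an external service such as Redis rather than a Python dictionary.

```python
from typing import Dict, Optional, Protocol


class SessionStore(Protocol):
    """Hypothetical interface for a session store shared by all web instances."""

    def get(self, session_id: str) -> Optional[dict]: ...

    def put(self, session_id: str, data: dict) -> None: ...


class InMemorySharedStore:
    """Stand-in for an external store such as Redis; for illustration only."""

    def __init__(self) -> None:
        self._data: Dict[str, dict] = {}

    def get(self, session_id: str) -> Optional[dict]:
        return self._data.get(session_id)

    def put(self, session_id: str, data: dict) -> None:
        self._data[session_id] = dict(data)


class WebInstance:
    """A web server that keeps no session state of its own."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def login(self, session_id: str, user: str) -> None:
        self.store.put(session_id, {"user": user})

    def whoami(self, session_id: str) -> Optional[str]:
        session = self.store.get(session_id)
        return session["user"] if session else None


shared = InMemorySharedStore()
instance_a = WebInstance("a", shared)
instance_b = WebInstance("b", shared)

instance_a.login("s1", "alice")
# A different instance can serve the next request for the same session.
print(instance_b.whoami("s1"))
```

Because neither instance holds the session itself, a load balancer can send each request to any instance, which is what makes adding instances later painless.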

Vertical Scaling: The Quick Fix That Fails You

When traffic hits 1,000 users, the knee-jerk reaction is to throw more power at the problem. Vertical scaling (upgrading to a bigger VM or a higher App Service tier) is easy. Azure makes it effortless: click a button, wait a few minutes, and your database runs faster. But this is a trap. There’s always a maximum size for a single machine, and the price climbs steeply as instances get bigger.

Vertical scaling carries you through moderate traffic increases, but it’s a dead end. Every Azure App Service plan tier tops out at a fixed amount of CPU and memory per instance, and for many apps that ceiling shows up somewhere around 10,000 users. At that stage, your only option is horizontal scaling, which requires rewiring your architecture. The real win here is recognizing when vertical scaling fails. If database CPU consistently hits 70% or response times exceed 500ms, it’s time to move beyond single-server thinking. But don’t rush it. Many companies waste weeks upgrading tiers when a simple Redis cache would solve the problem.
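The two warning signs above can be written down as a simple check. This is a rough sketch, not an Azure API: the 70% and 500ms thresholds come from the text, while the function name, the `sustained_fraction` parameter, and the reading of "consistently" as "most samples in the window" are assumptions made for illustration.

```python
from statistics import mean
from typing import Sequence

CPU_THRESHOLD_PCT = 70.0      # threshold quoted in the text
LATENCY_THRESHOLD_MS = 500.0  # threshold quoted in the text


def should_scale_out(
    cpu_samples: Sequence[float],
    latency_samples_ms: Sequence[float],
    sustained_fraction: float = 0.8,  # assumption: "consistently" = 80% of samples
) -> bool:
    """Return True when the window of samples suggests a single server is no longer enough."""
    if not cpu_samples or not latency_samples_ms:
        return False  # no data, no decision

    hot_samples = sum(1 for cpu in cpu_samples if cpu >= CPU_THRESHOLD_PCT)
    cpu_is_sustained = hot_samples / len(cpu_samples) >= sustained_fraction
    latency_is_high = mean(latency_samples_ms) > LATENCY_THRESHOLD_MS
    return cpu_is_sustained or latency_is_high


# A single spike does not trigger the check; sustained pressure does.
print(should_scale_out([30, 95, 40, 35, 20], [200, 220]))
print(should_scale_out([75, 80, 72, 71, 90], [200, 220]))
```

Requiring sustained pressure rather than a single spike is what keeps a check like this from triggering a re-architecture over one bad minute.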

The 10,000-User Cliff: Why Your Load Balancer Isn’t Enough

At 10,000 users, vertical scaling stops working. Now you need to scale out, not up. This is where horizontal scaling kicks in, spreading traffic across multiple app instances behind a load balancer. Azure’s Application Gateway or Front Door handles this well, but the real magic is in what you don’t do.

Many teams panic at this stage and start extracting microservices. Don’t. Instead, focus on statelessness and caching. Azure Cache for Redis can cut database load sharply, by as much as 60% in some workloads, but only if you cache the right data. For example, caching reference data like product catalogs or user profiles reduces repetitive database queries. The key is avoiding cache invalidation nightmares: set expiration times or use data change notifications to keep caches fresh.
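The expiration-based approach is usually implemented as a cache-aside pattern: check the cache, fall back to the database on a miss, and store the result with a time to live. The sketch below uses an in-process dictionary as a stand-in for Redis; `TTLCache`, `load_product`, and the injectable clock are hypothetical names chosen so the example runs without any external service.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache-aside helper with per-entry expiration (in-process stand-in for Redis)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_load(self, key: str, loader: Callable[[str], Any]) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: no database call
        value = loader(key)  # cache miss or expired entry: go to the database
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        """Call this from a data-change notification to drop a stale entry early."""
        self._entries.pop(key, None)


calls = {"db": 0}


def load_product(product_id: str) -> dict:
    """Pretend database query; counts how often the database is hit."""
    calls["db"] += 1
    return {"id": product_id, "name": f"Product {product_id}"}


fake_now = [0.0]  # controllable clock so the example does not need to sleep
cache = TTLCache(ttl_seconds=60, clock=lambda: fake_now[0])

cache.get_or_load("42", load_product)  # miss: loads from the database
cache.get_or_load("42", load_product)  # hit: served from the cache
fake_now[0] = 61.0
cache.get_or_load("42", load_product)  # expired: loads again
print(calls["db"])
```

Three reads cost two database calls here. The `invalidate` method covers the second option mentioned above, where a change notification evicts an entry before its expiration time.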

Lyft reportedly reduced overages by 37% with telemetry-informed throttling: not by adding servers, but by managing resource use intelligently. Their approach? Monitor query patterns and throttle expensive requests before they crash the system. This is the boring truth: scaling to 10,000 users is about disciplined caching and smart monitoring, not architectural gymnastics.
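One common way to throttle expensive requests is a token bucket in which each request spends tokens in proportion to its estimated cost. The sketch below illustrates that general technique; it is not Lyft’s implementation, and the class name, the cost units, and the idea of deriving costs from telemetry are assumptions made for the example.

```python
import time
from typing import Callable


class CostAwareThrottle:
    """Token bucket in which each request spends tokens equal to its estimated cost."""

    def __init__(self, capacity: float, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock
        self._tokens = capacity
        self._last = clock()

    def allow(self, cost: float) -> bool:
        """Return True and spend tokens if the request fits the current budget."""
        now = self._clock()
        elapsed = now - self._last
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        self._last = now
        if cost <= self._tokens:
            self._tokens -= cost
            return True
        return False  # caller should reject or delay the request


fake_now = [0.0]  # controllable clock so the example is deterministic
throttle = CostAwareThrottle(capacity=10.0, refill_per_second=1.0,
                             clock=lambda: fake_now[0])

cheap_ok = throttle.allow(cost=1.0)          # cheap lookup passes
report_ok = throttle.allow(cost=8.0)         # expensive report passes, budget nearly spent
second_report_ok = throttle.allow(cost=5.0)  # rejected: only 1 token left
fake_now[0] = 4.0                            # four seconds later, 4 tokens have refilled
retry_ok = throttle.allow(cost=5.0)
print(cheap_ok, report_ok, second_report_ok, retry_ok)  # True True False True
```

In practice the cost passed to `allow` would come from observed query durations or resource usage, so cheap requests keep flowing while the heavy ones are the first to be held back.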

Beyond 100k: The Real Scaling Game Starts

Somewhere past 100,000 users, often around half a million, is where most applications die. At this scale, you’re not just adding servers; you’re re-architecting for resilience. Multi-region deployments and database sharding become mandatory. But the real game-changer is decoupling. Instead of synchronous API calls between services, use Azure Service Bus for asynchronous messaging.

When a user uploads a photo, don’t process it inline. Drop a message onto a queue and let background workers handle it. This keeps your front end snappy during traffic spikes. Service Bus queues ensure reliable delivery, even when parts of your system fail. Queueing concepts aren’t just technical details; they’re survival tools.
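The upload flow can be sketched with Python’s standard library. Here `queue.Queue` and a thread stand in for a Service Bus queue and a background worker, and `handle_upload` and `worker` are hypothetical names; a real system would use the Service Bus SDK and a separate worker process, which this example does not attempt to show.

```python
import queue
import threading

photo_queue: "queue.Queue" = queue.Queue()  # stand-in for a Service Bus queue
processed = []


def handle_upload(photo_id: str) -> str:
    """Request handler: enqueue the work and return immediately."""
    photo_queue.put({"photo_id": photo_id})
    return "accepted"


def worker() -> None:
    """Background worker: drain the queue until a None sentinel arrives."""
    while True:
        message = photo_queue.get()
        if message is None:
            photo_queue.task_done()
            break
        # Placeholder for the slow part (resizing, virus scanning, and so on).
        processed.append(f"thumbnail-{message['photo_id']}")
        photo_queue.task_done()


thread = threading.Thread(target=worker, daemon=True)
thread.start()

for photo_id in ("p1", "p2", "p3"):
    handle_upload(photo_id)  # returns right away, regardless of processing time

photo_queue.join()     # wait until every queued photo has been processed
photo_queue.put(None)  # tell the worker to stop
thread.join()
print(processed)
```

The handler’s response time no longer depends on how long processing takes, so a spike in uploads lengthens the queue instead of slowing down the front end.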

Segment, a leading analytics platform, reportedly reduced major outages by 45% by carefully extracting microservices only after identifying clear bottlenecks. They started with user management, then billing, and never extracted a service for the sake of “modularity.” The lesson? Extract services to solve specific problems, not because they’re trendy. A modular monolith stays simpler, cheaper, and more reliable until you have concrete pain points.

The 1 Million User Gauntlet: Data Consistency and Multi-Region Chaos

Reaching 1 million users means global scale, and with it the CAP theorem becomes your new reality: when a network partition happens, you have to choose between consistency and availability. Azure gives you tools to choose your poison. Cosmos DB supports multi-region writes with tunable consistency, ideal for read-heavy workloads where eventual consistency is acceptable. But if you need strong consistency for transactions, you’ll route all writes to a single region and use geo-replication for reads.

Multi-region deployments with Azure Front Door route users to the nearest location, which can cut latency by hundreds of milliseconds. Still, data replication introduces lag. A European user writing to a US database will feel every extra round trip, with delays that can reach 500ms or more. That’s why many teams shard data by region: US users’ data lives in the US, EU users’ in Europe. This avoids cross-region writes entirely but adds complexity when regions fail.

Sharding relational databases is messy. You’ll need to implement a shard map or hashing function to route queries correctly. For example, user IDs ending in 0-3 go to Shard 0, 4-7 to Shard 1, and 8-9 to Shard 2. It’s simple, but brittle if not automated. Alternatively, offload specific data to NoSQL stores like Cosmos DB for high-scale needs. A hybrid approach (SQL for core transactions, NoSQL for user profiles) often works best.
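Both routing styles fit in a few lines. The sketch below shows a last-digit shard map like the one in the example and a hash-based alternative. Sending digits 8-9 to a third shard is an assumption made so that every ID has a home, and the function names are hypothetical.

```python
import hashlib

# Shard map keyed on the last digit of a numeric user ID.
# Digits 0-3 and 4-7 follow the example in the text; 8-9 -> shard 2 is an assumption.
LAST_DIGIT_SHARDS = [
    (range(0, 4), 0),
    (range(4, 8), 1),
    (range(8, 10), 2),
]


def shard_by_last_digit(user_id: int) -> int:
    """Look up the shard for a numeric user ID using the explicit shard map."""
    digit = abs(user_id) % 10
    for digits, shard in LAST_DIGIT_SHARDS:
        if digit in digits:
            return shard
    raise ValueError(f"no shard configured for digit {digit}")


def shard_by_hash(user_id: str, shard_count: int) -> int:
    """Hash-based routing: stable across processes, works for non-numeric IDs."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


print(shard_by_last_digit(1203), shard_by_last_digit(47), shard_by_last_digit(9))
print(shard_by_hash("user-1203", shard_count=4))
```

The explicit map is easy to reason about and lets you move a range by editing one entry, while plain modulo hashing spreads load evenly but reshuffles most keys whenever `shard_count` changes. That reshuffling is one reason the text calls hand-rolled sharding brittle.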

Observability: The Unsung Hero of Scaling

At 1 million users, your biggest risk isn’t technical debt; it’s blind spots. When every second of downtime costs thousands, you need real-time visibility. Azure Application Insights is non-negotiable. It tracks request rates, error rates, and latency across all services. Set alerts for P99 latency above 500ms or error rates above 1%, and automate responses. For example, if a microservice fails, trigger a failover to a backup instance.
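To show what those two alert rules actually measure, here is a small sketch that evaluates them over a window of request data. It is not how Application Insights computes its metrics: the nearest-rank percentile method and the function names are assumptions, and only the 500ms and 1% thresholds come from the text.

```python
import math
from typing import List, Sequence

P99_LATENCY_LIMIT_MS = 500.0  # threshold quoted in the text
ERROR_RATE_LIMIT = 0.01       # 1%, threshold quoted in the text


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("percentile of an empty sequence is undefined")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def evaluate_alerts(latencies_ms: Sequence[float],
                    error_count: int, request_count: int) -> List[str]:
    """Return the names of the alert rules that fire for this window."""
    alerts = []
    if latencies_ms and percentile(latencies_ms, 99) > P99_LATENCY_LIMIT_MS:
        alerts.append("p99_latency")
    if request_count > 0 and error_count / request_count > ERROR_RATE_LIMIT:
        alerts.append("error_rate")
    return alerts


# 98 fast requests and 2 slow ones: the average looks fine, the P99 does not.
window = [100.0] * 98 + [900.0, 950.0]
print(sum(window) / len(window))  # 116.5
print(evaluate_alerts(window, error_count=5, request_count=100))
```

The average latency in this window is about 117ms, which would pass any sensible threshold, while the P99 is 900ms. That gap is why the alert is defined on a tail percentile rather than the mean.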

Cost optimization is equally critical. Azure Advisor reviews your resources and flags idle VMs or over-provisioned databases. One client reduced their monthly bill by $23,000 by shifting to reserved instances and auto-scaling policies. The real win? Not just saving money, but knowing exactly where your resources are being wasted.

Scaling Is a Marathon, Not a Sprint

Scaling to 10 million users isn’t about one grand architecture; it’s a series of small, intentional steps. Start simple. Monitor everything. Scale only when metrics prove you must. The most successful systems, like Instagram, which handled 30 million users with 13 engineers, rely on boring fundamentals: stateless services, disciplined caching, and observability.

Premature microservices add complexity without solving problems. Vertical scaling buys time but isn’t sustainable. And global scale demands trade-offs: consistency over availability, or vice versa. The real secret? Resist the urge to over-engineer. Let your actual pain points dictate your architecture. As one engineer put it: “Keep it boring: stateless services, cache-first, and queues for backpressure.”

That’s how you build for millions on Azure.