Sidecar Patterns: The Silent Performance Tax in Distributed Systems

How the sidecar pattern, often hailed as a microservice best practice, can silently drain system performance, and when it's truly worth the trade-offs.

The sidecar pattern is often presented as a universal solution for microservices. Dev teams hear “decouple concerns”, “separate responsibilities”, and “simplify your code”, and rush to implement it without question. But behind the clean architecture diagrams and glowing case studies, there’s a dirty little secret: sidecars aren’t free. They chew through CPU, add latency, and create hidden failure points that can cripple performance-critical systems. Let’s cut through the hype and talk about the real costs.

What Exactly Is a Sidecar Pattern? (And Why People Love It)

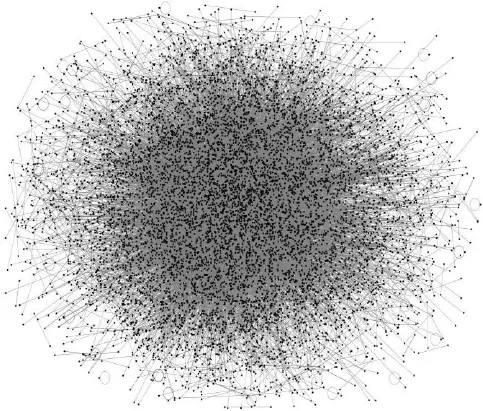

At its core, a sidecar is a secondary container or process that runs alongside your main application in the same pod or host. It handles cross-cutting concerns like logging, security, or traffic management, freeing the primary app to focus on business logic. Think of it like a motorcycle sidecar: the main vehicle handles driving, while the sidecar carries the map, radio, or extra baggage.

This pattern gained traction because it solves real problems. Want to add TLS termination to a legacy app without rewriting it? A sidecar like Envoy can handle it. Need to pull secrets from HashiCorp Vault? Vault Agent sits alongside your app, injecting credentials securely. Logging? Fluentd or Filebeat runs as a sidecar to ship logs to central systems. These are legitimate use cases, and that’s why teams adopt them without scrutiny.

But here’s the thing: every sidecar adds overhead. And when you multiply that across hundreds of microservices, the costs become non-trivial.
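To make the multiplication concrete, here is a rough back-of-the-envelope sketch. Every number in it is a hypothetical assumption chosen for illustration (100 millicores and 128 MiB per sidecar, 200 services, 3 replicas each), not a measurement of any particular proxy, and the names `SidecarFootprint` and `fleet_overhead` are invented for this example.

```python
from dataclasses import dataclass


@dataclass
class SidecarFootprint:
    """Resources reserved by ONE sidecar instance (hypothetical figures)."""
    cpu_millicores: int
    memory_mib: int


def fleet_overhead(footprint: SidecarFootprint,
                   services: int,
                   replicas_per_service: int) -> tuple[float, float]:
    """Return (CPU cores, memory in GiB) consumed by sidecars across the fleet.

    Assumes one sidecar per pod and one pod per replica.
    """
    pods = services * replicas_per_service
    cores = pods * footprint.cpu_millicores / 1000
    gib = pods * footprint.memory_mib / 1024
    return cores, gib


# Assumed inputs for illustration only.
cores, gib = fleet_overhead(
    SidecarFootprint(cpu_millicores=100, memory_mib=128),
    services=200,
    replicas_per_service=3,
)
print(f"{cores:.1f} cores, {gib:.1f} GiB")  # prints "60.0 cores, 75.0 GiB"
```

Plug in your own measured per-sidecar footprint and pod counts; the point is only that a small per-pod cost scales linearly with the size of the fleet.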

When Sidecars Shine (And When They’re Just Noise)

Let’s be clear: sidecars aren’t evil. They’re useful tools in specific scenarios. Here’s where they actually make sense:

- Legacy app modernization: Adding security or observability to monolithic codebases without touching the original code.

- Third-party service integration: When using tools like Vault, a sidecar such as Vault Agent can manage secrets securely without changes to the app.

- Regulated environments: In industries like finance or healthcare, offloading security logic to a hardened sidecar simplifies compliance audits.

But here’s where things go sideways:

“We added Envoy for retries and TLS termination, but under peak load, our latency increased by 12% across all services. We didn’t realize the proxy was adding that overhead until we started hitting SLA breaches.”

This isn’t hypothetical. Teams running high-throughput systems (like real-time trading platforms or gaming backends) often discover too late that sidecars are throttling their performance. Every hop through a sidecar adds milliseconds, and in systems where 1ms matters, that’s catastrophic.

The Hidden Costs Nobody Talks About

The System Design Newsletter breaks it down cleanly. Sidecars introduce four critical trade-offs that teams overlook:

- Resource overhead: Each sidecar consumes CPU and memory independently. In constrained environments (like edge deployments or serverless), this can force you to over-provision nodes.

- Latency inflation: Even efficient proxies like Envoy add 1 to 5ms per request. Multiply that by 10 service calls in a workflow? You’ve just added 10 to 50ms of “free” overhead.

- Failure surface area: A single sidecar failure can make your entire app appear broken, even if the main process is healthy. Your logs might say “200 OK”, but the sidecar’s reconnection logic failed silently.

- Operational complexity: Now you’re managing two processes per pod instead of one. Scaling, debugging, and updating all become harder, because each has to account for both.
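The latency arithmetic in the list above can be checked with a short calculation. This is a minimal sketch, assuming the calls in a workflow run sequentially and each one passes through a single proxy hop; the function name `added_latency_ms` is hypothetical, and the 1 to 5ms range is the per-request figure quoted above.

```python
def added_latency_ms(hops: int,
                     per_hop_ms: tuple[float, float]) -> tuple[float, float]:
    """Return the (low, high) latency added by proxy hops on a sequential path.

    hops:        number of service calls that each traverse a sidecar
    per_hop_ms:  (low, high) overhead a single proxy hop adds, in milliseconds
    """
    low, high = per_hop_ms
    return hops * low, hops * high


# 10 sequential service calls, each adding 1 to 5 ms of proxy overhead.
low, high = added_latency_ms(hops=10, per_hop_ms=(1.0, 5.0))
print(f"Added latency: {low:.0f}-{high:.0f} ms")  # prints "Added latency: 10-50 ms"
```

Calls that fan out in parallel would add less than this to end-to-end latency, so treat the result as an upper bound for a strictly sequential workflow.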

One engineering lead at a Fortune 500 company told me: “We had 15 microservices with Envoy sidecars. When we removed them for a critical path, our P99 latency dropped by 30% with no code changes. Turns out, we didn’t actually need the proxy for most requests.”

When to Avoid Sidecars (And What to Do Instead)

If your system is latency-sensitive, resource-constrained, or has simple needs, skip the sidecar. Here’s when to reach for alternatives:

- For logging: Use a lightweight client library inside your app, or ship logs directly to your observability pipeline. Fluentd as a sidecar is overkill for simple JSON logging.

- For security: If your team has security expertise, build TLS termination or auth directly into your app. Libraries like OpenSSL are mature and efficient.

- For retries/circuit breakers: Implement them in your service client (e.g., using Resilience4j or Polly). You’ll get better control and lower overhead than a sidecar.

- For service discovery: In Kubernetes, rely on built-in DNS-based discovery instead of adding a sidecar mesh layer.
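As an illustration of the retries alternative in the list above, here is a minimal in-process sketch using only the Python standard library. It is not how Resilience4j or Polly work internally; the helper name `call_with_retries`, its parameters, and the choice of exponential backoff with full jitter are all assumptions made for this example.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(operation: Callable[[], T],
                      max_attempts: int = 3,
                      base_delay_s: float = 0.1,
                      max_delay_s: float = 2.0,
                      retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `operation`, retrying on transient errors with exponential backoff.

    The delay cap doubles on each failed attempt (bounded by max_delay_s) and
    the actual sleep is drawn uniformly from [0, cap] ("full jitter").
    The last failure is re-raised once max_attempts is exhausted.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the original error
            cap = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")


# Usage: a hypothetical flaky call that fails twice, then succeeds.
attempts = {"count": 0}


def flaky_call() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("upstream unavailable")
    return "ok"


result = call_with_retries(flaky_call, base_delay_s=0.01)
print(result, attempts["count"])  # prints "ok 3"
```

Keeping this logic in the client means the service decides which errors are retryable and how long to wait, at the cost of maintaining it in every language your services are written in.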

As one engineer put it: “We removed all sidecars for internal service-to-service calls. The cost savings were massive, and the complexity of debugging ‘why is my sidecar failing?’ disappeared overnight.”

The Reality Check

The sidecar pattern isn’t inherently bad. It’s a tool, and like any tool, it’s only valuable when used appropriately. Treating it as a universal best practice is like using a sledgehammer for a thumbtack: you’ll get the job done, but you’ll wreck the wall in the process.

Before adding a sidecar, ask yourself:

- Is this concern truly cross-cutting (i.e., can’t be handled by the app itself)?

- Do the benefits outweigh the performance and operational costs?

- Would a simpler solution (a library, a built-in feature, or even just better code) work better?

The most elegant distributed systems don’t rely on sidecars for everything. They use them strategically, and avoid them where possible. Because in the end, the best architecture isn’t about following patterns. It’s about solving problems without creating new ones.