Your Million-User Dream is a Database Nightmare in Waiting

Why scaling from zero to millions breaks most systems and how to design for the inevitable collapse.

Every startup founder dreams of hockey-stick growth. Every engineer quietly dreads it. That moment when your scrappy little app, cobbled together over weekends, suddenly gets a mention on a popular forum and your traffic graph goes vertical. It’s the dream, right? Wrong. It’s the beginning of a nightmare that starts and ends with your database.

The core problem isn’t your code, your framework, or your slick UI. It’s the fundamental assumption that the system you build for a hundred users will somehow gracefully handle a million. It won’t. This disconnect between ambition and architectural reality is where good startups go to die, often before they even realize what hit them.

The “Just Make It Work” Trap

It starts innocently. You’re a small team. You need to ship an MVP. The guiding principle is “just make it work.” You spin up a single server instance, install PostgreSQL or MongoDB, and all of your application’s data (users, posts, likes, comments, settings) lives in one monolithic database. It’s simple, it’s fast to develop, and for your first thousand users, it’s perfectly fine.

This is precisely the trap. The ease of the initial setup masks the technical debt you’re accumulating. You’re not building a system, you’re building a time bomb. The prevailing sentiment on developer forums is that being assigned to design a system for “millions of users” with zero experience is a massive red flag, a recipe for disaster born from management’s ignorance of the complexity involved. They want a sales pitch, not a system, but the technical reality is unforgiving.

The First Fracture: When Read Becomes the New Write

As your user base grows, you’ll notice the first signs of trouble. The database isn’t just storing data, it’s being relentlessly queried. Every page load, every API call, every background job hits the same database. The read-to-write ratio, once manageable, has skyrocketed. Your application is now read-heavy, and your single database is choking on the demand.

This is where caching enters the conversation. It’s no longer a “nice-to-have” optimization, it’s a survival mechanism. You’ll throw a Redis or Memcached layer in front of your database to temporarily store frequently accessed data. User profiles, popular posts, configuration settings, anything that doesn’t change in real time gets cached.

But caching is a double-edged sword. It introduces complexity. Now you have to worry about cache invalidation. When a user updates their profile, how do you ensure the old version is purged from the cache? This is one of computer science’s famously hard problems, and there’s no perfect solution. As Phil Karlton quipped, “There are only two hard things in Computer Science: cache invalidation and naming things.” Your simple system now has a stateful, volatile component you must manage.
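To make the invalidation problem concrete, here is a minimal cache-aside sketch. It uses an in-memory dict as a stand-in for Redis or Memcached, and the names `CacheAside`, `load_profile`, and `update_profile` are hypothetical, chosen only for illustration. The pattern it assumes is "write to the database first, then purge the cached copy", which is one common approach, not the only one.

```python
import time
from typing import Callable, Dict, Tuple


class CacheAside:
    """Cache-aside: load from the database on a miss, purge on writes."""

    def __init__(self, load: Callable[[str], dict], ttl_seconds: float = 60.0):
        self._load = load  # hypothetical loader that reads the database
        self._ttl = ttl_seconds
        self._entries: Dict[str, Tuple[float, dict]] = {}

    def get(self, key: str) -> dict:
        entry = self._entries.get(key)
        if entry is not None and entry[0] > time.monotonic():
            return entry[1]  # cache hit, still within its TTL
        value = self._load(key)  # cache miss: fall back to the database
        self._entries[key] = (time.monotonic() + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        self._entries.pop(key, None)


# Stand-ins for a real database table and a read counter.
db = {"user:1": {"name": "Ada"}}
stats = {"db_reads": 0}


def load_profile(key: str) -> dict:
    stats["db_reads"] += 1
    return dict(db[key])  # copy, so cached values are snapshots


def update_profile(cache: CacheAside, key: str, **fields) -> None:
    db[key].update(fields)  # write to the source of truth first
    cache.invalidate(key)   # then purge the stale cached copy


cache = CacheAside(load_profile)
first = cache.get("user:1")    # miss: reads the database
second = cache.get("user:1")   # hit: served from memory
update_profile(cache, "user:1", name="Grace")
fresh = cache.get("user:1")    # miss again, sees the new name
```

Even in this toy version the weak spot is visible: any code path that writes to `db` without calling `invalidate` leaves a stale profile in the cache until the TTL expires.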

The Horizontal Wall: Why Bigger Servers Aren’t the Answer

Your cached system buys you time, but growth continues. The next logical step, and the most common mistake, is vertical scaling. You throw more RAM, a faster CPU, and bigger SSDs at your database server. It works, for a while. But vertical scaling is a short ladder. Hardware has physical and financial limits. You can’t keep buying bigger machines forever.

The real solution is horizontal scaling, or “scaling out.” Instead of one beefy server, you use multiple, less powerful servers. This immediately introduces a host of new problems:

- How do you distribute incoming traffic? You need a load balancer to act as a traffic cop.

- How do your stateless application servers share session data? Your user’s login session on Server A needs to be recognized by Server B.

- And most importantly, how does your database scale horizontally?
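The first two questions can be sketched in a few lines. This is a toy model, not a production design: `RoundRobinBalancer`, `AppServer`, and `SessionStore` are hypothetical names, and the session store is a plain dict standing in for a shared service (such as a Redis instance) that every application server can reach.

```python
import itertools
import secrets


class SessionStore:
    """Stand-in for a shared session store reachable by every app server."""

    def __init__(self):
        self._sessions = {}

    def create(self, user_id: int) -> str:
        token = secrets.token_hex(16)
        self._sessions[token] = user_id
        return token

    def lookup(self, token: str):
        return self._sessions.get(token)


class AppServer:
    """Stateless server: it keeps no session data of its own."""

    def __init__(self, name: str, sessions: SessionStore):
        self.name = name
        self._sessions = sessions

    def login(self, user_id: int) -> str:
        return self._sessions.create(user_id)

    def whoami(self, token: str):
        return self.name, self._sessions.lookup(token)


class RoundRobinBalancer:
    """The traffic cop: hands each request to the next server in turn."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(servers)

    def route(self) -> AppServer:
        return next(self._cycle)


sessions = SessionStore()
balancer = RoundRobinBalancer([AppServer("A", sessions), AppServer("B", sessions)])

token = balancer.route().login(user_id=42)             # handled by server A
server_name, user_id = balancer.route().whoami(token)  # handled by server B
```

Because the session lives in the shared store rather than in server A’s memory, server B recognizes the login. The third question, scaling the database itself, has no equally small answer.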

Sharding: The Only Way Out, and the Road to Hell

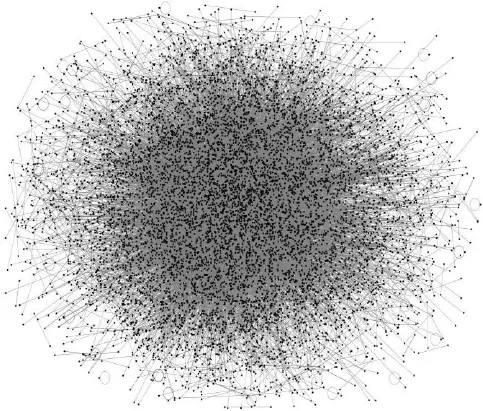

This is the moment of truth. You can’t scale a single, monolithic database horizontally. You have to break it apart. This process is called sharding. You take your massive database and split it into smaller, independent pieces, or “shards”, each living on its own server. On the surface, it sounds simple. In practice, it’s one of the most challenging architectural maneuvers you can perform.

AWS defines database sharding as “the process of storing a large database across multiple machines… splitting data into smaller chunks, called shards, and storing them across several database servers.” This “shared-nothing architecture” means each shard operates independently, which is great for parallel processing and fault tolerance. If one shard’s server fails, the others can continue operating.

But how do you decide how to split the data? This is where you earn your salary as a systems architect. The choice of a “shard key” is critical and will haunt you for years. There are several methods, each with brutal trade-offs:

- Range-Based Sharding: Splitting data by value ranges (e.g., users A-F on one shard, G-L on another). It’s simple to implement but almost guaranteed to create “hotspots.” Your shard for users with last names starting with ‘S’ will be overloaded while the ‘Q’ shard sits idle.

- Hashed Sharding: Running a value through a hash function to determine its shard. This distributes data evenly but makes querying by range nearly impossible. Want to find all users who signed up last month? Good luck. You’ll have to query every single shard and aggregate the results, a slow and expensive operation.

- Directory or Geo-Sharding: Using a lookup table or geographic location to map data to a shard. This can be flexible and improve latency for geo-specific apps, but it adds another layer of infrastructure to manage and can still lead to uneven distribution.
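The three strategies can be compared side by side in a short sketch. Everything specific here is an assumption made for illustration: four shards, a range split of A-F, G-L, M-R, S-Z (extending the example above), a three-region lookup table, and SHA-256 as the hash function. SHA-256 is used instead of Python’s built-in `hash()` because the latter is randomized per process for strings and would route the same key differently after a restart.

```python
import bisect
import hashlib
from collections import Counter

NUM_SHARDS = 4
RANGE_STARTS = ["A", "G", "M", "S"]  # shard 0: A-F, 1: G-L, 2: M-R, 3: S-Z
REGION_DIRECTORY = {"eu": 0, "us": 1, "apac": 2}  # hypothetical lookup table


def range_shard(last_name: str) -> int:
    """Range-based: pick the shard whose letter range contains the name."""
    index = bisect.bisect_right(RANGE_STARTS, last_name[:1].upper()) - 1
    return max(index, 0)


def hash_shard(key: str) -> int:
    """Hashed: a stable hash of the key, reduced modulo the shard count."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


def directory_shard(region: str) -> int:
    """Directory: an explicit mapping that must itself be stored and managed."""
    return REGION_DIRECTORY[region]


names = ["Smith", "Stone", "Sanchez", "Singh", "Quinn", "Adams"]
range_load = Counter(range_shard(name) for name in names)
hash_load = Counter(hash_shard(name) for name in names)
# range_load puts 4 of the 6 names on shard 3: a hotspot in miniature.
```

With real name distributions the range-based skew tends to look much like this toy sample, while the hashed placement spreads keys without regard to their order, which is exactly why range queries then have to visit every shard.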

Choosing the wrong shard key is a fatal error. It can cripple performance, make simple queries impossible, and lead to a painful re-sharding process down the line, which is the open-heart surgery of the database world.
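A quick calculation shows why re-sharding hurts. The sketch below assumes the simplest form of hashed sharding, where a numeric user ID is taken modulo the number of shards; `shard_for` and `fraction_moved` are hypothetical helper names. It counts how many users land on a different shard when a fifth shard is added to a four-shard cluster.

```python
def shard_for(user_id: int, num_shards: int) -> int:
    """Simplest hashed placement: user ID modulo the shard count."""
    return user_id % num_shards


def fraction_moved(user_ids, old_shards: int, new_shards: int) -> float:
    """Share of users whose shard changes when the shard count changes."""
    moved = sum(
        1 for uid in user_ids
        if shard_for(uid, old_shards) != shard_for(uid, new_shards)
    )
    return moved / len(user_ids)


moved = fraction_moved(range(100_000), old_shards=4, new_shards=5)
# moved is 0.8: four out of five users must be migrated to a new shard
```

Under this scheme, adding one server means copying roughly 80% of the data between machines while the system stays online. Techniques such as consistent hashing exist to shrink that fraction, but they add their own complexity.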

Beyond the Database: The System-Wide Ripples

Sharding your database is the centerpiece of scaling, but the ripples spread everywhere. Your application logic must now be “shard-aware.” To fetch a user’s data, your app first calculates which shard that user lives on before it can even run the query.

This complexity forces you to rethink your entire stack:

- Data Consistency: How do you maintain consistency across shards? The CAP theorem becomes your daily reality. You’ll likely have to embrace “eventual consistency”, where different parts of your system might have slightly different views of the data for a short period.

- Cross-Shard Joins: The simple SQL JOIN you used to love is now your enemy. Joining data that lives on two different physical shards is a nightmare. You either have to denormalize your data (store copies of data in multiple places) or fetch the data from both shards and join it in your application layer, both of which have significant downsides.

- Operational Overhead: You’ve gone from managing one database to managing dozens. Backups, monitoring, upgrades, and security are now exponentially more complex.
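Here is roughly what an application-layer join looks like, as a sketch under stated assumptions: shards are modeled as in-memory dicts and lists, users are placed by `user_id % 2`, and posts are sharded independently of their authors. The names `user_shards`, `post_shards`, and `posts_with_authors` are hypothetical.

```python
from collections import defaultdict

# Hypothetical shards. Users are placed by user_id % 2; posts are
# sharded separately, so a post and its author may live apart.
user_shards = [
    {2: {"name": "Bo"}, 4: {"name": "Di"}},  # shard 0: even user IDs
    {1: {"name": "Al"}, 3: {"name": "Cy"}},  # shard 1: odd user IDs
]
post_shards = [
    [{"post_id": 10, "author_id": 1, "title": "Hello"}],
    [
        {"post_id": 11, "author_id": 2, "title": "Sharding"},
        {"post_id": 12, "author_id": 1, "title": "Again"},
    ],
]


def user_shard_for(user_id: int) -> int:
    return user_id % len(user_shards)


def posts_with_authors():
    # Scatter: read posts from every post shard.
    posts = [post for shard in post_shards for post in shard]

    # Group the author IDs by owning shard, so each user shard is
    # queried once instead of once per post.
    wanted = defaultdict(set)
    for post in posts:
        wanted[user_shard_for(post["author_id"])].add(post["author_id"])

    authors = {}
    for shard_index, user_ids in wanted.items():
        for user_id in user_ids:
            authors[user_id] = user_shards[shard_index][user_id]

    # Gather: do in application code what JOIN used to do in SQL.
    return [
        {"title": post["title"], "author": authors[post["author_id"]]["name"]}
        for post in sorted(posts, key=lambda p: p["post_id"])
    ]


joined = posts_with_authors()
```

What was one SQL statement is now several network round trips plus in-memory bookkeeping, with no transaction spanning the reads. That is the downside the application-layer option carries.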

The Human Factor: You Need an Architect

This is why startups eventually need a dedicated Systems Architect. This isn’t a role you can just add to a senior developer’s plate. It requires a specific blend of deep technical knowledge, experience with failure, and the ability to think in terms of trade-offs, not just features. An architect’s job is to see this cliff coming from a mile away and design a system that can evolve gracefully, not one that needs to be rebuilt at 50,000 feet.

The role involves analyzing stakeholder requirements, choosing the right database technology for the job (SQL vs. NoSQL is a critical early decision), and designing a “North Star” architecture that can handle the transition from a single server to a fully distributed, sharded system. They balance technical requirements with business needs, defining the system vision and translating it into reality before the first line of code is even written.

So, what’s the takeaway? Don’t wait for the crisis. Design for scale from day one, even if you don’t implement it all immediately. This means understanding your data access patterns, choosing a shard key before you need to shard, and building your application with the assumption that your database will one day be distributed.

The journey from zero to millions of users is less about a single revolutionary architecture and more about a series of deliberate, planned evolutions. Your million-user dream doesn’t have to be a database nightmare. It just needs a blueprint that acknowledges the inevitable collision between simple beginnings and massive scale. Start with the end in mind, or you’ll end up as another cautionary tale on a developer forum.