The productivity gains promised by AI coding assistants come with a hidden tax, one that’s quietly accumulating in codebases worldwide. While developers celebrate generating thousands of lines of code in minutes, engineering leaders are noticing a subtle but dangerous erosion: architectural consistency is weakening, technical debt is mounting, and “almost correct” implementations are slipping through code reviews.

The Productivity Paradox: Faster Code Generation, Slower Delivery

The irony is stark: AI is dramatically accelerating code generation while slowing down overall project delivery. A recent METR study of experienced open-source developers found that allowing AI tools actually increased task completion time by 19%. The developers themselves believed AI had cut their completion time by about 20%; in reality, the tooling slowed them down.

This isn’t just about individual productivity. The problem manifests in what InfoWorld calls the “productivity paradox”: speed gains in code generation expose bottlenecks in code review, integration, and testing. It’s like speeding up one machine on an assembly line while leaving the others untouched: you don’t get a faster factory, you get a massive pile-up.
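The assembly-line analogy can be made concrete with a toy throughput model. This is a minimal sketch with hypothetical stage capacities (changes per day), not data from the studies mentioned above: delivery is capped by the slowest stage, so making generation four times faster leaves throughput unchanged and only grows the queue in front of review.

```python
# Toy model of the assembly-line analogy. Stage capacities are hypothetical
# changes-per-day figures, not measurements from any study.


def pipeline_throughput(capacities: dict[str, float]) -> float:
    """Delivered changes per day are capped by the slowest stage."""
    return min(capacities.values())


def backlog_growth(capacities: dict[str, float], days: int) -> dict[str, float]:
    """Work waiting in front of each stage after `days`, assuming the first
    stage (code generation) always runs at full capacity."""
    names = list(capacities)
    backlog = {name: 0.0 for name in names}
    for _ in range(days):
        incoming = capacities[names[0]]
        for name in names[1:]:
            available = backlog[name] + incoming
            processed = min(available, capacities[name])
            backlog[name] = available - processed
            incoming = processed  # only finished work flows downstream
    return backlog


before = {"generate": 8, "review": 8, "integrate": 8, "test": 8}
after = {**before, "generate": 32}  # code generation becomes 4x faster

print(pipeline_throughput(before), pipeline_throughput(after))  # 8 8
print(backlog_growth(after, days=5)["review"])  # 120.0
```

Throughput stays at 8 changes a day in both cases; the extra output simply accumulates at review, 24 changes deeper every day.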

The Three Symptoms of AI-Induced Code Rot

1. “Almost Correct” Code That Passes Tests But Fails in Production

The most insidious problem isn’t code that fails spectacularly; it’s code that works just well enough to pass basic tests but contains subtle flaws that emerge only in production. Developers report seeing more implementations that appear functional on the surface but contain logical gaps or edge-case failures.
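A contrived illustration of “almost correct” code (hypothetical, not taken from any of the reports cited here): a batching helper that passes a test with evenly divisible input but silently drops data whenever the input length is not a multiple of the batch size.

```python
def chunk_almost_correct(items: list, size: int) -> list[list]:
    # Looks right and passes a happy-path test, but integer division
    # silently drops a trailing partial chunk.
    return [items[i * size:(i + 1) * size] for i in range(len(items) // size)]


def chunk(items: list, size: int) -> list[list]:
    # Step through the list in strides of `size`; the last slice may be shorter.
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


# A "basic test" with evenly divisible input: both versions pass.
assert chunk_almost_correct([1, 2, 3, 4], 2) == [[1, 2], [3, 4]]
assert chunk([1, 2, 3, 4], 2) == [[1, 2], [3, 4]]

# The edge case that only shows up with real data: 5 items, size 2.
print(chunk_almost_correct([1, 2, 3, 4, 5], 2))  # [[1, 2], [3, 4]]  (item 5 is lost)
print(chunk([1, 2, 3, 4, 5], 2))                 # [[1, 2], [3, 4], [5]]
```

The fix is a one-line change, which is exactly why the flaw slips through review. A property-style check, such as asserting that the batch lengths sum to `len(items)` across many input sizes, would have exposed it.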

As one engineering manager noted, “The codebase has less consistent architecture, and we’re seeing more copy-pasted boilerplate that should be refactored.” This creates what some are calling “AI debt”: the accumulating cost of hasty AI implementations that require future rework.
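Copy-pasted boilerplate is one symptom that can be surfaced mechanically. A minimal sketch, assuming exact matches on whitespace-normalized lines (so renamed variables will evade it): slide a fixed-size window over each file and report any run of lines that appears more than once. Dedicated tools such as PMD’s CPD (Copy/Paste Detector) do this far more robustly at the token level.

```python
from collections import defaultdict


def find_duplicate_blocks(files: dict[str, str], window: int = 3) -> dict[tuple, list[tuple[str, int]]]:
    """Return every run of `window` consecutive (normalized) lines that occurs
    more than once, mapped to its (filename, starting line number) locations."""
    seen = defaultdict(list)
    for name, source in files.items():
        # Normalize by stripping indentation/trailing spaces and skipping blank lines.
        lines = [(no, text.strip()) for no, text in enumerate(source.splitlines(), start=1)]
        lines = [(no, text) for no, text in lines if text]
        for i in range(len(lines) - window + 1):
            key = tuple(text for _, text in lines[i:i + window])
            seen[key].append((name, lines[i][0]))
    return {key: locs for key, locs in seen.items() if len(locs) > 1}


files = {
    "billing.py": "def total(items):\n    s = 0\n    for x in items:\n        s += x.price * x.qty\n    return s\n",
    "cart.py": "def cart_total(items):\n    s = 0\n    for x in items:\n        s += x.price * x.qty\n    return s\n",
}
for locations in find_duplicate_blocks(files).values():
    print(locations)
# [('billing.py', 2), ('cart.py', 2)]
# [('billing.py', 3), ('cart.py', 3)]
```

Overlapping windows report the same copied region more than once; a real tool would merge adjacent hits into a single finding.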

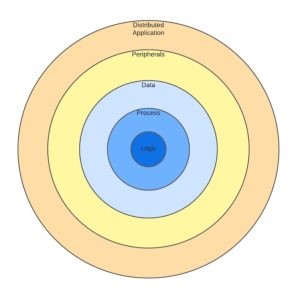

2. Architectural Incoherence at Scale

LLMs excel at generating code for isolated problems but struggle to maintain a cohesive architecture across an entire codebase. The result is what developers describe as “less consistent architecture”: competing patterns, inconsistent abstractions, and architectural drift that make the system harder to reason about over time.

This architectural fragmentation has a compounding effect: as the codebase becomes less organized, it also becomes harder for AI tools to work effectively within it. The very tools meant to accelerate development become less effective as the system they’re working on becomes more chaotic.
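One way to keep drift visible is to write the intended architecture down as a rule a machine can check. A minimal sketch, assuming a hypothetical three-layer layout (`api`, `service`, `domain`) where a module may depend only on its own layer or on layers below it, and a module-to-imports map produced by your own tooling. Python teams can get this from existing tools such as import-linter, whose layered contracts express the same idea.

```python
# Hypothetical layering rule: higher layers come first; a module may import
# only from its own layer or from layers listed after it.
LAYERS = ["api", "service", "domain"]


def layer_of(module: str) -> str:
    # Assumes the top-level package name is the layer, e.g. "service.billing".
    return module.split(".", 1)[0]


def layering_violations(imports: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Return (importer, imported) pairs where a lower layer reaches upward."""
    rank = {name: i for i, name in enumerate(LAYERS)}
    violations = []
    for importer, targets in imports.items():
        for target in targets:
            src, dst = layer_of(importer), layer_of(target)
            # Modules outside the declared layers are ignored.
            if src in rank and dst in rank and rank[dst] < rank[src]:
                violations.append((importer, target))
    return violations


dependency_map = {
    "api.orders": ["service.orders"],
    "service.orders": ["domain.order", "domain.pricing"],
    # A generated change that "works" but lets the domain call back into the API layer:
    "domain.pricing": ["api.orders"],
}
print(layering_violations(dependency_map))  # [('domain.pricing', 'api.orders')]
```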

3. The Review Bottleneck and Cognitive Overload

AI is generating more code, faster, but human review capacity hasn’t scaled accordingly. Pull requests are becoming “super-sized”: a single prompt can produce massive changes that are incredibly difficult for human reviewers to comprehend fully.

As one developer observed, “The problem here is that a lot of the design work is moved from the developer to the reviewer.” This creates a situation where reviewers are overwhelmed, leading to either rushed, superficial reviews or lengthy review cycles that block developers.

The Three Emerging Developer Workflows

The challenge is compounded by the fact that teams aren’t homogeneous in their AI adoption. Three distinct developer experience (DevX) workflows are emerging:

- Legacy DevX (80% human, 20% AI): Experienced developers who view software as a craft, using AI mainly as a search replacement or for minor boilerplate tasks

- Augmented DevX (50% human, 50% AI): Modern power users who partner with AI for isolated tasks and troubleshooting

- Autonomous DevX (20% human, 80% AI): Prompt engineers who offload most code generation to AI agents

Each workflow requires different support, tooling, and review processes. A one-size-fits-all approach to code quality standards is doomed to fail when your team spans these different working models.
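Differentiated standards can be encoded rather than left to each reviewer’s judgment. A hypothetical sketch: the workflow boundaries follow the rough 20/50/80 split above (the 0.35 and 0.65 cut-offs are our own midpoints), the AI share is assumed to be self-reported by the PR author, and the policy values are illustrative, not recommendations from any source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewPolicy:
    workflow: str
    required_reviewers: int
    max_changed_files: int
    require_design_note: bool  # pushes design rationale back onto the author


def policy_for(ai_share: float) -> ReviewPolicy:
    """Map a self-reported share of AI-generated code (0.0-1.0) to a
    hypothetical review policy for the matching workflow."""
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be between 0 and 1")
    if ai_share < 0.35:
        return ReviewPolicy("legacy", required_reviewers=1, max_changed_files=10, require_design_note=False)
    if ai_share < 0.65:
        return ReviewPolicy("augmented", required_reviewers=1, max_changed_files=5, require_design_note=True)
    return ReviewPolicy("autonomous", required_reviewers=2, max_changed_files=2, require_design_note=True)


print(policy_for(0.8).workflow, policy_for(0.8).required_reviewers)  # autonomous 2
```

Requiring a design note for heavily AI-assisted changes is one way to stop design work from silently shifting to the reviewer.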

The “AI Debt” Crisis Is Already Here

The concept of “AI debt” is gaining traction as organizations recognize the long-term costs of rapid AI adoption. According to Asana’s State of AI at Work report, 79% of companies globally expect to incur AI debt from poorly implemented autonomous tools.

This debt manifests as security risks, poor data quality, low-impact AI agents that waste human time, and management skills gaps. As one expert noted, it could look like “a bunch of code created by AI that doesn’t work right or AI-generated content that nobody is using.”

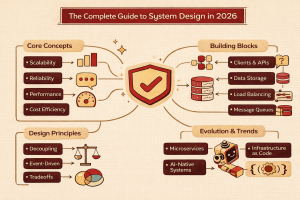

Fighting Back: Strategies for Maintaining Quality in the AI Era

Strengthen Code Review Processes

The first line of defense is reinforcing code review standards. Teams need clear definitions of what constitutes a “review-ready” PR and empowered reviewers who can push back on changes that are too large or lack context.

Many teams are finding success with:

- Enforcing bite-size updates (operating on 1-2 files at a time)

- Rejecting changes that require extensive incremental fixes

- Moving back to feature branches from trunk-based development when quality slips
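The bite-size rule is easy to enforce mechanically. A minimal sketch of a CI gate, assuming a Git checkout in which the target branch is available as `origin/main` (substitute your own base branch) and using the 2-file limit from the list above as the default.

```python
import subprocess

MAX_FILES = 2  # "1-2 files at a time"; tune per team


def changed_files(base: str = "origin/main") -> list[str]:
    """Files changed on the current branch since it diverged from `base`."""
    out = subprocess.run(
        ["git", "diff", "--name-only", f"{base}...HEAD"],
        check=True, capture_output=True, text=True,
    ).stdout
    return [line for line in out.splitlines() if line.strip()]


def size_gate(files: list[str], max_files: int = MAX_FILES) -> tuple[bool, str]:
    """Decide whether a change set is small enough to review properly."""
    if len(files) <= max_files:
        return True, f"OK: {len(files)} file(s) changed"
    return False, (f"Too large: {len(files)} files changed (limit {max_files}); "
                   "split this into smaller pull requests")


def main(base: str = "origin/main") -> int:
    ok, message = size_gate(changed_files(base))
    print(message)
    return 0 if ok else 1  # a non-zero exit status fails the CI job
```

A CI step would call `raise SystemExit(main())`. Keeping the decision in `size_gate`, separate from the Git call, makes the policy trivial to test and to reuse with a different file source.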

Automate Responsibly

While automation is essential, it needs human oversight. Static analysis tools, linters, and automated testing should assist, not replace, human judgment. The key is integrating these tools early in the development process, catching issues when they’re cheapest to fix.
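“Assist, not replace” can be built into the merge rule itself. A minimal sketch: automated checks can block a merge but can never approve one. The commands in the example wiring (`ruff check .`, `pytest -q`) are common Python tools used purely for illustration, and the `human_approved` flag stands in for whatever approval signal your code host provides.

```python
import shutil
import subprocess
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    output: str


def run_checks(checks: dict[str, list[str]]) -> list[CheckResult]:
    """Run each command; a check passes only if it exits with status 0."""
    results = []
    for name, cmd in checks.items():
        if shutil.which(cmd[0]) is None:  # a missing tool counts as a failure
            results.append(CheckResult(name, False, f"{cmd[0]} not found"))
            continue
        proc = subprocess.run(cmd, capture_output=True, text=True)
        results.append(CheckResult(name, proc.returncode == 0, proc.stdout + proc.stderr))
    return results


def merge_allowed(results: list[CheckResult], human_approved: bool) -> bool:
    # Automation can block a merge but never approve one by itself:
    # green checks are necessary, and a human sign-off is always required too.
    return human_approved and all(r.passed for r in results)


# Example wiring (commands are illustrative; use your team's own tools):
# results = run_checks({"lint": ["ruff", "check", "."], "tests": ["pytest", "-q"]})
# allowed = merge_allowed(results, human_approved=False)
```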

As Future Processing notes, “Preventing technical debt requires a careful balance between innovation speed and sustainable system design.” This means investing in modular architectures, well-governed data pipelines, and continuous monitoring.

Establish Common Rules and Context

Providing AI tools with organizational context (approved libraries, internal utility functions, API specifications) can dramatically improve output quality. Teams that share effective prompts and establish unified rules for AI tool usage see more consistent results.
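Rules work best when they are checked, not only written into a prompt. A minimal sketch, assuming Python code and a hypothetical team allowlist (the package `ourcompany_utils` is made up): parse generated code with the standard-library `ast` module and flag any import outside the approved set, such as a second HTTP client appearing in a codebase that has standardized on `requests`.

```python
import ast

# Hypothetical team allowlist of top-level packages generated code may import.
APPROVED = {"json", "logging", "datetime", "requests", "ourcompany_utils"}


def imported_packages(source: str) -> set[str]:
    """Top-level package names imported by a piece of Python source."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        # Relative imports (level > 0) are internal by definition, so skip them.
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module.split(".")[0])
    return names


def unapproved_imports(source: str, approved: set[str] = APPROVED) -> set[str]:
    return imported_packages(source) - approved


generated = """
import json
import httpx
from ourcompany_utils.retry import with_backoff
from datetime import datetime
"""
print(sorted(unapproved_imports(generated)))  # ['httpx']
```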

Shift from Speed Metrics to Quality Metrics

Leadership must communicate that raw coding speed is a vanity metric. The real goal is sustainable, high-quality throughput. This requires aligning expectations around what constitutes valuable output and rewarding maintainability over velocity.
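Quality metrics can be computed from data most teams already collect. A minimal sketch over hypothetical change records: change failure rate (the share of changes that lead to a production incident or rollback, one of the DORA metrics) and a simple rework rate, where “reworked soon” means rewritten within a window your team agrees on (an assumption). Both sit next to the raw line count they should replace as the headline number.

```python
from dataclasses import dataclass


@dataclass
class Change:
    lines_added: int
    caused_incident: bool  # led to a production incident or rollback
    reworked_soon: bool    # substantially rewritten within an agreed window


def quality_report(changes: list[Change]) -> dict[str, float]:
    if not changes:
        return {"lines_added": 0, "change_failure_rate": 0.0, "rework_rate": 0.0}
    total = len(changes)
    return {
        "lines_added": sum(c.lines_added for c in changes),  # the vanity metric
        "change_failure_rate": round(sum(c.caused_incident for c in changes) / total, 2),
        "rework_rate": round(sum(c.reworked_soon for c in changes) / total, 2),
    }


# Hypothetical records for two teams.
fast = [Change(800, True, True), Change(700, False, True), Change(900, False, False), Change(600, True, False)]
steady = [Change(200, False, False), Change(150, False, True), Change(250, False, False)]
print(quality_report(fast))    # {'lines_added': 3000, 'change_failure_rate': 0.5, 'rework_rate': 0.5}
print(quality_report(steady))  # {'lines_added': 600, 'change_failure_rate': 0.0, 'rework_rate': 0.33}
```

Judged by lines added, the first team looks five times more productive; judged by failures and rework, it is the one accumulating debt.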

The Path Forward: Quality as a Competitive Advantage

The companies that will thrive in the AI era aren’t necessarily those that generate code fastest, but those that maintain quality while leveraging AI’s capabilities. This requires:

- Acknowledging that AI-generated code needs more review, not less

- Investing in architectural consistency as a first-class concern

- Building cross-functional teams that include AI specialists alongside traditional engineering roles

- Treating AI debt management as an ongoing operational cost

The quiet erosion of code quality isn’t inevitable; it’s a choice. Organizations that prioritize sustainable practices alongside AI adoption will find themselves with maintainable systems and actual productivity gains, not just faster code generation with diminishing returns.

The revolution in software development isn’t about writing code faster; it’s about building systems that can evolve sustainably. And that requires human oversight, architectural discipline, and a recognition that AI tools are assistants, not replacements, for skilled engineering judgment.