Database Design: Integrity Clashes With Performance

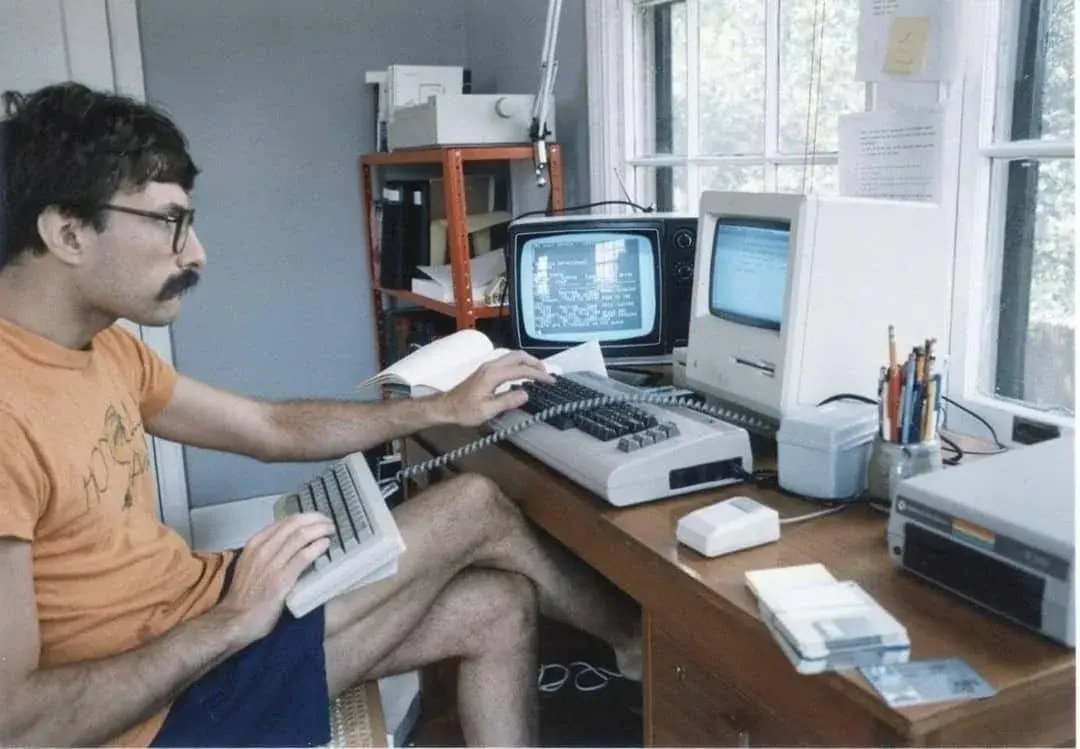

The eternal database battle, seen through a librarian's weary eyes, how to choose between clean data and fast queries

Every library system designer eventually faces the same philosophical dilemma: do you organize your books perfectly, or do you make them easy to find? This same tension plays out daily in database design, the endless tug-of-war between normalization and denormalization. It’s not just academic theory, it’s the difference between a system that hums with efficiency and one that groans under user complaints.

The Library’s Data Nightmare: A Normalization Saga

Picture our library project: 20,000 books, patrons borrowing and reserving titles, staff managing operations, and fines accruing for overdue returns. The database designer’s instinct screams “normalize!”, separate everything into pristine, focused tables.

But then reality hits. A simple query to find “all books by Stephen King” suddenly requires joining through books, authors, book_authors, publishers, and who knows what else. The developer’s coffee gets cold waiting for the result set.

This specific problem surfaced recently when a developer seeking advice on data engineering forums asked about partitioning addresses across their library system. Should publisher and user both have city, street, and state fields, or should they create a separate address table?

The consensus from experienced engineers was clear: start normalized. As one contributor noted, for Online Transaction Processing (OLTP) systems like library operations, normalized design minimizes update anomalies ↗ and ensures data consistency, critical when tracking who has which book checked out.

The Wisdom of “Normalize Until It Hurts”

There’s an old engineer’s maxim that circulated in the discussion: “Normalize until it hurts, then denormalize until it works.” This perfectly captures the iterative nature of database design.

The hurt typically manifests in three specific ways:

Join Fever: Reports requiring patron history, book availability, and overdue status become multi-table nightmares. Each additional join adds computational overhead that scales exponentially with data volume.

Query Complexity: Even simple questions like “Which books are most popular this month?” require navigating relationships between patrons, loans, reservations, and inventory.

Performance Walls: As the system scales beyond initial prototypes, query performance degrades to the point where users start complaining about sluggish responses.

When Denormalization Becomes Your Best Friend

The turning point often comes when someone requests an analytics dashboard. Suddenly, your beautifully normalized schema becomes a performance liability. Library directors want to know book circulation rates, patron engagement metrics, and revenue from fines, all in real-time.

This is where the modern data engineering wisdom kicks in: build separate reporting tables. As multiple engineers pointed out, you often end up with both normalized and denormalized schemas coexisting. The application database uses a normalized model for efficient CRUD operations, your so-called “bronze layer”, while you create star schema models in your “silver layer” for efficient reporting and analytics.

The trade-offs become starkly clear ↗: denormalization boosts read performance by reducing joins and simplifying queries, but at the cost of increased storage and potential data inconsistencies.

The Address Conundrum: A Microcosm of the Trade-off

Let’s return to that address question that sparked the original dilemma. In a perfectly normalized world, you’d have an addresses table with address_id, street, city, state, and zip_code, then reference it from both publishers and users.

But consider the practical implications:

- Normalized Approach: Any address change updates one record, ensuring consistency across the system

- Denormalized Approach: Faster queries since you avoid the join, but risk inconsistencies if addresses change

For a library system where publisher addresses rarely change but user addresses might, the hybrid approach starts looking attractive: normalize publisher addresses, but denormalize user addresses if performance demands it.

OLTP vs OLAP: The Great Database Divide

The fundamental insight that emerged from the discussion centers on purpose. Transactional systems (OLTP) thrive on normalization, they’re optimized for writes, consistency, and avoiding data anomalies. Analytical systems (OLAP) prefer denormalization, they’re optimized for reads, aggregation, and query speed.

This distinction explains why modern data architectures often employ both approaches. Your library’s core operating system, handling checkouts, returns, and reservations, should be normalized. But your reporting system tracking book popularity trends should be denormalized for performance.

The traditional ETL (Extract, Transform, Load) pattern reinforces this separation. Data engineers follow normalization for scalable writes by developers, then denormalization for scalable reads by data analysts.

The Modern Twist: ELT and Dynamic Schemas

Today’s data landscape introduces another wrinkle: the rise of ELT over ETL. Instead of transforming data before loading, modern systems load raw data first, then transform it. This approach recognizes that storage is cheap, while processing time is expensive.

In our library context, this means you might maintain your normalized transactional database while simultaneously streaming data to a data warehouse where it’s transformed into denormalized reporting tables. The best design, as one contributor wisely noted, serves your current consumers well without compromising future flexibility.

Practical Implementation: Striking the Balance

So how do you navigate these trade-offs in practice? Here’s a battle-tested approach:

Start Normalized: Design your transactional system following 3rd Normal Form (3NF) principles. This prevents data anomalies and ensures integrity during operations.

Monitor Performance: As your system grows, identify the pain points. Which queries are slow? Which reports take forever to generate?

Selective Denormalization: Create denormalized views or tables specifically for problematic queries. Don’t denormalize everything, just what hurts.

Establish Refresh Patterns: Decide whether your denormalized structures refresh in real-time (via triggers), near-real-time (via change data capture), or batch (nightly ETL).

Document the Trade-offs: Make deliberate choices about which inconsistencies you’re willing to tolerate for performance gains.

The Library System’s Optimal Architecture

For our 20,000-book library, the optimal architecture might look like this:

- Transactional Database: Fully normalized, handling checkouts, returns, reservations

- Operational Reports: Materialized views or indexed views providing quick access to common operational data

- Analytical Warehouse: Denormalized star schema optimized for trend analysis and business intelligence

This hybrid approach acknowledges that different parts of your system serve different masters. The checkout desk needs immediate, consistent data. The library director needs historical trends. Trying to make one schema serve both perfectly is a recipe for compromise.

Both Approaches Are “Right”

The most insightful takeaway from these discussions isn’t about choosing one approach over the other, it’s about recognizing that most successful systems use both. Normalization ensures your data remains trustworthy, denormalization ensures your users remain happy.

The wisdom lies in knowing when to apply each principle. Your transactional systems demand normalization’s rigor. Your analytical systems crave denormalization’s speed. Trying to force one approach across all use cases is like trying to use a library’s card catalog system for data analytics, it might work, but nobody will enjoy the experience.

Ultimately, database design isn’t about finding one perfect answer. It’s about making thoughtful trade-offs between competing priorities, and being willing to adjust as your system evolves and your users’ needs change. The library that started with 20,000 books might one day serve millions of digital patrons, and its database architecture had better be ready to grow with them.