Coinbase's Engineer Firings Over AI Tool Resistance Reveal Architectural Risks

Analyzing the consequences of mandatory AI adoption in software engineering teams and its impact on technical quality and culture

Coinbase’s decision to terminate engineers who refused to use AI tools signals a shift in how organizations measure engineering value. When performance evaluations prioritize “AI usage effectiveness” over architectural quality, the long-term health of software systems is put at risk.

Mandatory AI Integration Challenges

The Coinbase case highlights a trend in tech leadership where AI tool adoption is enforced as a productivity metric rather than an optional enhancement. Internal reports from engineering teams show requirements like “all PRs must originate from AI tools” and performance reviews tied to AI usage metrics.

This approach misaligns with how AI tools function in development. The 2025 Stack Overflow Developer Survey of 49,000 developers found 84% use AI tools, but favorable perceptions dropped from 70% in 2023 to 60% in 2025. The primary complaint: AI-generated code that requires significant debugging, with 66% of developers citing this as their largest time sink.

Architectural Quality Implications

Forced AI adoption creates a productivity paradox. The 2025 DORA/Faros “AI Productivity Paradox” study of 10,000 developers across 1,255 teams found AI-enabled teams completed 21% more tasks and merged 98% more pull requests, but review times increased by 91%.

This affects system quality: AI adoption correlated with 9% more bugs per developer and 154% larger average PR sizes. Larger PRs introduce review complexity and reduce architectural oversight. One developer in the Stack Overflow survey noted, “AI solutions that are almost correct but incomplete require more time to fix than they save.”
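To make the review-side cost concrete, the following is a rough back-of-the-envelope sketch, not part of the cited study. It assumes that the 98% increase in merged pull requests and the 91% increase in review time compound multiplicatively, and that "review time" can be read as reviewer effort per PR. Both are simplifying assumptions. The function names and the baseline of 10 PRs per week at 2 hours each are hypothetical.

```python
def review_load_multiplier(pr_increase: float, review_time_increase: float) -> float:
    """Relative total review time, ASSUMING the two increases multiply.

    pr_increase and review_time_increase are fractions (0.98 means +98%).
    """
    return (1.0 + pr_increase) * (1.0 + review_time_increase)


def weekly_review_hours(
    baseline_prs: float,
    baseline_hours_per_pr: float,
    pr_increase: float,
    review_time_increase: float,
) -> float:
    """Total weekly review hours after adoption, for a hypothetical baseline team."""
    baseline_total = baseline_prs * baseline_hours_per_pr
    return baseline_total * review_load_multiplier(pr_increase, review_time_increase)


if __name__ == "__main__":
    # Figures quoted in the article: +98% merged PRs, +91% review time.
    multiplier = review_load_multiplier(0.98, 0.91)
    print(f"review load multiplier: {multiplier:.2f}x")

    # Hypothetical baseline: 10 PRs per week, 2 review hours each.
    hours = weekly_review_hours(10, 2.0, 0.98, 0.91)
    print(f"weekly review hours: {hours:.1f} (baseline 20.0)")
```

Under these assumptions, total review effort grows far faster than the 21% gain in completed tasks, which is one way to read the paradox: the bottleneck moves from writing code to reviewing it.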

Experience-Based Productivity Gaps

A METR randomized trial found that senior developers using AI tools on complex projects took 19% longer to complete tasks than when working without AI. Beforehand, these developers expected a 24% time reduction, and afterward they still believed AI had saved them about 20%, highlighting a gap between perceived and measured outcomes.
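The size of that gap is easier to see when all three figures are expressed against the same baseline. The sketch below reads the numbers as a forecast of 24% less time, a perceived 20% less time, and a measured 19% more time. The 10-hour baseline task and the function names are hypothetical, chosen only for illustration.

```python
def hours_with_change(baseline_hours: float, fractional_change: float) -> float:
    """Task duration after applying a fractional change (-0.20 means 20% less time)."""
    return baseline_hours * (1.0 + fractional_change)


def perception_gap_points(perceived_change: float, measured_change: float) -> float:
    """Gap between measured and perceived change, in percentage points of baseline time."""
    return (measured_change - perceived_change) * 100.0


if __name__ == "__main__":
    baseline = 10.0  # hypothetical task that takes 10 hours without AI

    forecast = hours_with_change(baseline, -0.24)   # what developers expected
    perceived = hours_with_change(baseline, -0.20)  # what they believed afterward
    measured = hours_with_change(baseline, 0.19)    # what the trial measured

    print(f"forecast:  {forecast:.1f} h")
    print(f"perceived: {perceived:.1f} h")
    print(f"measured:  {measured:.1f} h")
    print(f"perception gap: {perception_gap_points(-0.20, 0.19):.0f} points")
```

On a 10-hour task, the difference between what developers believed and what was measured is close to four hours, which is why self-reported productivity makes a poor basis for performance reviews.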

This creates skewed incentives where junior developers appear more productive while senior engineers face penalties for maintaining code quality. The time spent verifying AI outputs negates potential gains, particularly in complex systems requiring deep architectural understanding.

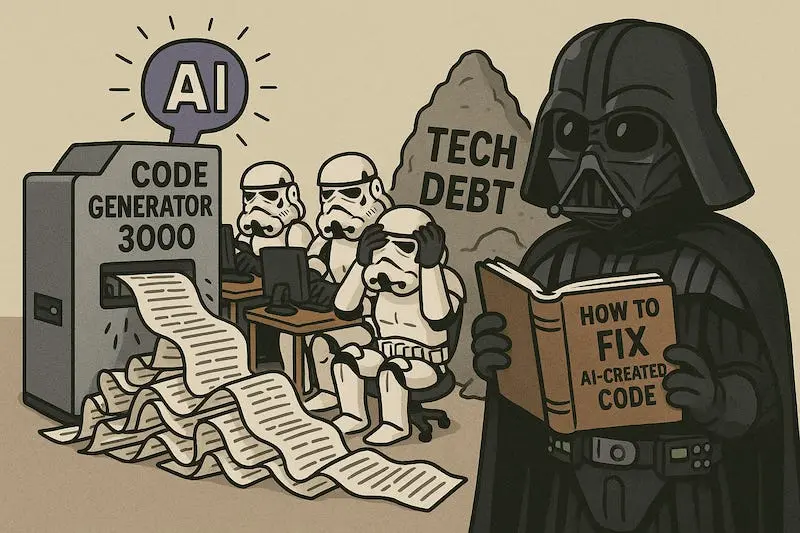

Technical Debt and Toolchain Risks

GitLab 18.3’s AI agent integration and Azure’s Agent Factory demonstrate expanding AI tooling capabilities. However, this expansion introduces technical risks:

- Architecture drift: AI lacks awareness of system constraints and patterns

- Knowledge fragmentation: Developers understand less of the code they maintain

- Debugging challenges: Diagnosing AI-generated code requires analyzing patterns that the nominal author never wrote or fully understood

David Cramer from Sentry observed in a two-month agent experiment: “AI agents can’t replace hands-on development. They don’t substitute for engineering judgment in complex systems.”

Leadership and Cultural Misalignment

The New York Times reports 77% of CEOs view AI as transformative, but fewer than half believe their technology leaders can navigate current digital challenges. This disconnect shows up in initiatives that mandate AI training for executives while enforcing AI tool usage in engineering teams.

One engineering team member described the situation: “Our PR process now requires AI-generated code proposals. Performance reviews focus on AI usage efficiency rather than code quality.”

Engineering Judgment Tradeoffs

The core risk of mandatory AI adoption is the erosion of engineering judgment. Teams that treat AI as a strategic initiative with workflow design, governance, and infrastructure planning see better outcomes than those using AI as a productivity hack.

The Faros research identified five critical enablers for successful AI integration: workflow design, governance, infrastructure, training, and cross-functional alignment. Without these foundations, AI adoption resembles giving construction workers power tools without structural engineering training.

The data shows AI can improve productivity by 20-30% in controlled environments. The critical question remains whether organizations will prioritize long-term architectural integrity over short-term velocity gains. Coinbase’s approach indicates some leaders have already chosen speed over quality, with consequences for engineering culture and system reliability.