Alibaba’s Qwen 3 Max just dropped with over one trillion parameters and benchmark numbers that should make OpenAI nervous. But the developer community’s excitement is tempered by a familiar pattern: another “open” model that’s anything but.

The Benchmark Warrior That Outperforms Everyone

Qwen 3 Max isn’t just another incremental update. According to OpenRouter’s performance data, the model achieves staggering results: 85.2% on SuperGLUE, 80.6% on AIME25 math benchmarks, and 57.6% on LiveCodeBench v6. These numbers don’t just beat previous Qwen models; they surpass Claude Opus 4 and DeepSeek-V3.1 across multiple categories.

The technical specs read like a fantasy wishlist: a 256,000-token context window, support for over 100 languages, and optimizations for retrieval-augmented generation that make previous models look primitive. Early testers report the model completes complex code refactoring tasks that stumped earlier versions, with one developer noting it “gave the best results so far” when converting a Java applet to a modern web application.

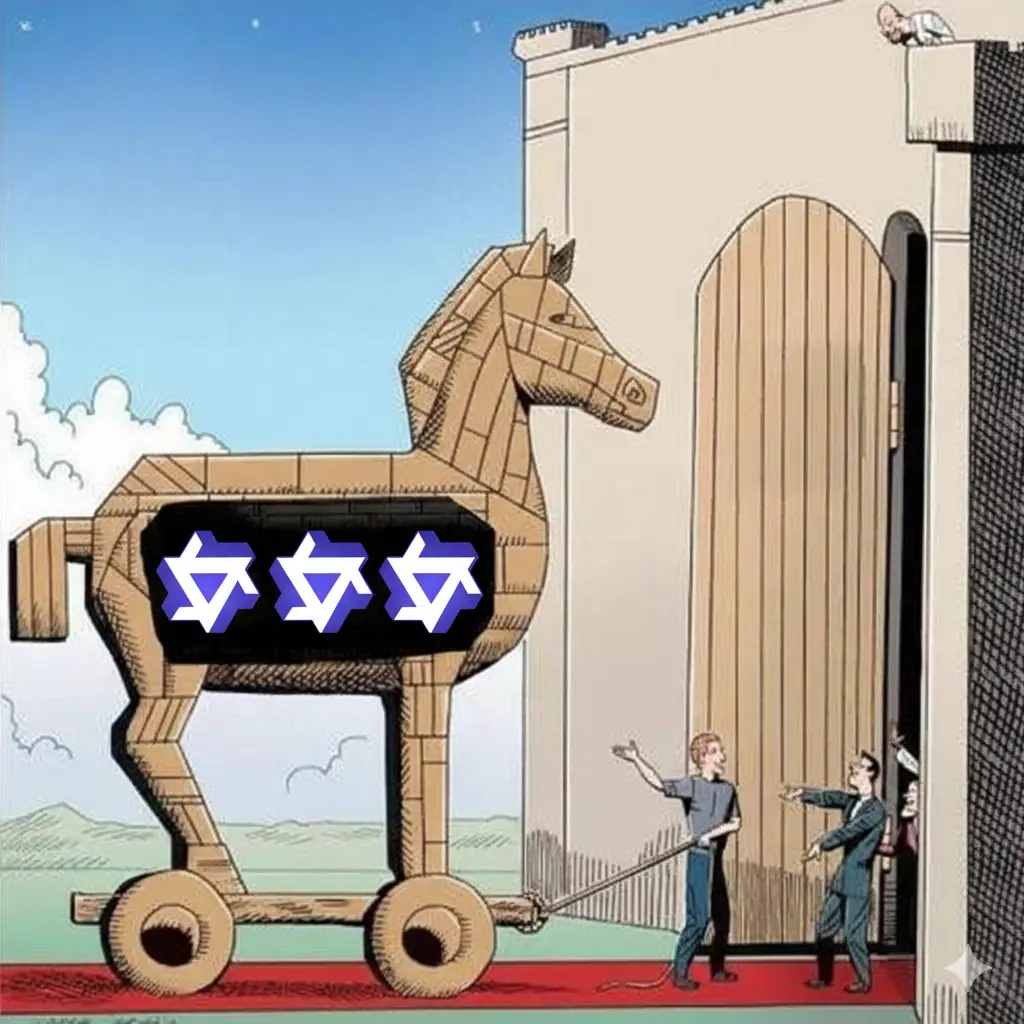

The Open-Source Charade

Here’s where the celebration stops. Despite Alibaba’s history of releasing open-source models, Qwen 3 Max follows the same pattern as its predecessor: closed-source with API access only. The community reaction on Reddit says it all: “They never open sourced their max versions. Their open source models are essentially advertising and probably some distils of max models.”

This isn’t just disappointing; it’s strategically brilliant. Alibaba gets the developer goodwill from its open-source releases while keeping the crown jewels under lock and key. The Qwen3-Max-Preview is available through Alibaba’s API at $1.20 per million input tokens, positioning it as a cost-effective alternative to Claude and GPT-4. But you can’t run it on your own hardware, fine-tune it for specific tasks, or examine how it achieves those impressive benchmark results.
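To put that price in concrete terms, here is a rough back-of-the-envelope sketch of what input tokens would cost at the quoted rate. It assumes a flat $1.20 per million input tokens with no tiering or discounts, and it leaves out output tokens entirely because no output price is quoted here. The function name and the example workload are hypothetical, chosen only for illustration.

```python
# Rough input-cost estimate at the rate quoted in the article.
# Assumption: a flat per-token rate; real pricing may be tiered.
INPUT_PRICE_PER_MILLION_USD = 1.20   # quoted input price
CONTEXT_WINDOW_TOKENS = 256_000      # quoted context window


def input_cost_usd(input_tokens: int,
                   price_per_million: float = INPUT_PRICE_PER_MILLION_USD) -> float:
    """Return the input-token cost in USD (output tokens are not included)."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    return input_tokens / 1_000_000 * price_per_million


# Cost of one request that fills the entire context window.
full_context_cost = input_cost_usd(CONTEXT_WINDOW_TOKENS)
print(f"One full-context request: ${full_context_cost:.4f}")

# Hypothetical workload: 1,000 requests per day at 8,000 input tokens each.
daily_cost = input_cost_usd(1_000 * 8_000)
print(f"Hypothetical daily input cost: ${daily_cost:.2f}")
```

Under these assumptions, a single request that fills the whole context window costs about 31 cents in input tokens, and the hypothetical workload comes to just under ten dollars a day before any output charges.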

The Developer Ecosystem’s Calculated Bet

Alibaba’s playbook is becoming clear: release capable but not cutting-edge models as open-source to build developer momentum, then monetize the real capability through cloud APIs. It’s working: more than 130,000 derivative models built on Qwen are now hosted on platforms like Hugging Face and ModelScope.

The company’s $53 billion investment in cloud and AI infrastructure ensures that even if developers build on open-source Qwen models, they’ll likely end up on Alibaba Cloud for deployment. It’s a classic embrace-extend-extinguish strategy dressed in open-source clothing. As one analysis noted, this creates a “virtuous cycle: open-source adoption → ecosystem growth → cloud revenue → R&D reinvestment.”

The Benchmark Question Everyone’s Avoiding

While the benchmark numbers look impressive, seasoned developers are asking harder questions. How much of this performance comes from careful benchmark optimization rather than general capability? The model’s strong showing against Claude Opus 4 doesn’t match many developers’ practical experience with these models.

There’s also the uncomfortable truth that these benchmarks primarily compare non-reasoning models. When you look at reasoning-enabled models like GPT-5 with thinking mode (94.6% on AIME25) or Gemini 2.5 Pro (69% on coding benchmarks), the picture changes dramatically. Qwen 3 Max might be winning the sprint while everyone else is training for the marathon.

The Open-Source Community’s Dilemma

Developers face a familiar choice: embrace the convenience and performance of closed APIs while sacrificing control and transparency, or stick with truly open models that might not match the benchmark numbers. For many enterprise applications, the choice is easy: just pay the API bill.

But for researchers, startups building proprietary technology, or anyone concerned about vendor lock-in, the decision is more complicated. The promise of open-source AI feels increasingly like bait: get developers hooked on capable but limited models, then upsell them to the real capability through expensive APIs.

Alibaba’s trillion-parameter marvel demonstrates how far Chinese AI companies have come technically. Their strategic approach to open-source shows they’ve mastered the business side too. But the real benchmark that matters may be how many developers are left wondering when, or if, they’ll ever get access to truly open models capable of competing with the best closed offerings.