Event-Driven Architecture: The Distributed Disaster Waiting to Happen

Why your “loosely coupled” event system is probably a tightly coupled mess in disguise

Your event-driven architecture isn’t elegant; it’s a distributed big ball of mud with better marketing. After a decade of watching companies turn “loosely coupled” systems into tightly coupled nightmares, I find the patterns depressingly predictable. The promise of infinite scalability and flexibility dies the moment you need to figure out why your order service is silently failing because someone changed a field in an event payload three services away.

The Three Horsemen of Event-Driven Apocalypse

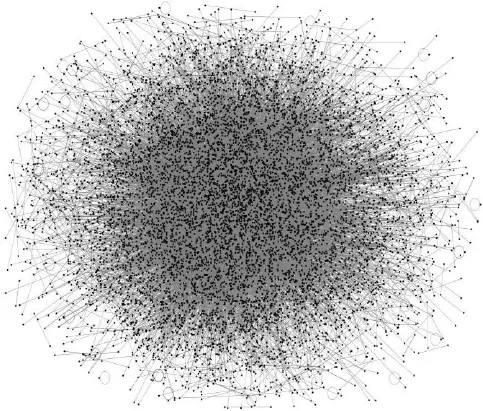

Problem 1: The Distributed Big Ball of Mud

The “big ball of mud” architectural pattern isn’t just for monoliths anymore; it’s been distributed. When Brian Foote and Joseph Yoder first described this anti-pattern, they probably didn’t imagine we’d scale it across cloud regions.

The symptoms are universal: unregulated growth where consumers multiply like rabbits, undefined structure because event boundaries were never properly modeled, and freely shared information that couples everything to everything else. Your architecturally pure microservices become a distributed monolith held together by string and hope.

The kicker? This happens because event-driven architecture has become too accessible. Cloud providers have removed the friction so completely that teams skip the hard thinking part. Why bother with domain modeling when you can just fire events into Pub/Sub and figure it out later? Spoiler alert: later never comes; it just becomes technical debt.

Problem 2: Event Design Anarchy

Here’s what separates APIs from events: we’ve collectively decided API design matters, but event design is apparently optional. While REST APIs get OpenAPI specs, versioning strategies, and review processes, events get… whatever the developer felt like throwing into a JSON payload that morning.

The result is architectural coupling through payload design. When your events expose internal database schemas (user_id, created_at, is_deleted_flag_v2), you’re not doing event-driven architecture; you’re doing database replication through events. Consumers start depending on implementation details because that’s all you’ve given them.
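To make the difference concrete, here is a minimal sketch of translating an internal row into a published event instead of shipping the row itself. Everything in it is hypothetical: the row contents, the `UserRegistered` event, the `user_ref` and `registered_at` fields, and the assumption that the database stores naive UTC timestamps. Treat it as one possible shape, not a prescription.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

# Hypothetical internal row, as it might sit in the producer's database.
user_row = {
    "user_id": 42,
    "created_at": "2024-01-15 09:30:00",  # assumed to be naive UTC
    "is_deleted_flag_v2": 0,
}


@dataclass(frozen=True)
class UserRegistered:
    """Hypothetical domain event: says what happened, not how it is stored."""
    user_ref: str        # public identifier rather than the raw primary key
    registered_at: str   # ISO 8601 timestamp in UTC


def to_domain_event(row: dict) -> UserRegistered:
    """Translate the internal row into the published contract."""
    created = datetime.strptime(row["created_at"], "%Y-%m-%d %H:%M:%S")
    return UserRegistered(
        user_ref=f"user-{row['user_id']}",
        registered_at=created.replace(tzinfo=timezone.utc).isoformat(),
    )


event = to_domain_event(user_row)
print(asdict(event))
# {'user_ref': 'user-42', 'registered_at': '2024-01-15T09:30:00+00:00'}
```

The point of the translation step is that the producer can rename is_deleted_flag_v2 or change its timestamp storage without any consumer noticing, because neither ever left the service.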

David Boyne’s observation hits hard: “It becomes harder and harder to manage events, and you end up in a complete mess.” The “free for all” approach to event design means your architecture’s contract is whatever the last developer to touch the producer decided to ship.

Problem 3: Discoverability Hell

“Producers don’t know about consumers” started as a design principle and became a religion. The result? Nobody knows anything about anything. Your events become a distributed-systems version of Schrödinger’s cat: they exist in superposition, both consumed and not consumed, until someone needs to make a breaking change.

Without discoverability, you’re flying blind. Teams start asking the questions that should have answers: Who consumes this event? What version are they on? What happens if we change this field? The silence is deafening because your architecture has no memory; it just has a bunch of services firing events into the void and hoping for the best.

Back pressure from slow consumers becomes your new reality, and because you can’t see those consumers, you can’t optimize for them either.

The Path Out of Architectural Chaos

The solutions aren’t revolutionary; they’re just ignored:

Stop implementing, start modeling. Use EventStorming or EventModeling to actually understand your domain before you start throwing events around. Your future self will thank you when you’re not trying to reverse-engineer business logic from a pile of Kafka topics.

Treat events like APIs. Adopt CloudEvents standards, version your events, and establish review processes. The “free for all” approach to event design is how you end up with payloads containing seventeen different timestamp formats and a prayer.
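As a rough illustration, here is what a versioned event wrapped in a CloudEvents-style envelope might look like, built with plain dictionaries rather than any SDK. The four attributes checked below (specversion, id, source, type) are the required context attributes in CloudEvents 1.0. The rest is assumption: the `make_cloudevent` and `missing_attributes` helpers, the `/services/orders` source, the payload fields, and the convention of putting the version in the type name.

```python
import json
import uuid
from datetime import datetime, timezone

# Required context attributes in the CloudEvents 1.0 specification.
REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def make_cloudevent(event_type: str, source: str, data: dict) -> dict:
    """Wrap a payload in a CloudEvents-style envelope (hypothetical helper)."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        "time": datetime.now(timezone.utc).isoformat(),  # one timestamp format
        "datacontenttype": "application/json",
        "data": data,
    }


def missing_attributes(event: dict) -> list[str]:
    """Return the required attributes that are absent or empty."""
    return [name for name in REQUIRED_ATTRIBUTES if not event.get(name)]


# Assumed convention: the version is part of the type name, so a breaking
# change ships as ".v2" alongside ".v1" instead of silently replacing it.
order_placed = make_cloudevent(
    event_type="com.example.order.placed.v1",
    source="/services/orders",
    data={"order_ref": "order-1001", "total_cents": 4599},
)

assert missing_attributes(order_placed) == []
print(json.dumps(order_placed, indent=2))
```

A check like `missing_attributes` is the kind of thing a review process or CI step could run against every new event before it reaches a topic.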

Embrace discoverability or embrace failure. Start with a basic README if you must, but aim for proper documentation with tools like AsyncAPI or EventCatalog. Your architecture needs memory, not amnesia.
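Even before adopting a dedicated tool, the questions "who consumes this event?" and "what breaks if we change this field?" can be answered by something very small. The sketch below is a toy in-memory registry. It is not how AsyncAPI or EventCatalog work, and every name in it (`ConsumerRegistry`, `Subscription`, the services and fields) is made up for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Subscription:
    """One consumer's declared dependency on an event (hypothetical model)."""
    consumer: str
    event_type: str
    version: int
    fields_used: frozenset


class ConsumerRegistry:
    """Toy registry that remembers who reads which event, and which fields."""

    def __init__(self) -> None:
        self._by_event = defaultdict(list)

    def register(self, consumer, event_type, version, fields_used) -> None:
        self._by_event[event_type].append(
            Subscription(consumer, event_type, version, frozenset(fields_used))
        )

    def consumers_of(self, event_type) -> list:
        """Who consumes this event, and on which version?"""
        return list(self._by_event.get(event_type, []))

    def impacted_by(self, event_type, field_name) -> list:
        """Which consumers would notice a change to this field?"""
        return sorted(
            sub.consumer
            for sub in self.consumers_of(event_type)
            if field_name in sub.fields_used
        )


registry = ConsumerRegistry()
registry.register("billing", "order.placed", 1, ["order_ref", "total_cents"])
registry.register("shipping", "order.placed", 2, ["order_ref", "address"])
registry.register("analytics", "order.placed", 1, ["total_cents"])

print(registry.impacted_by("order.placed", "total_cents"))
# ['analytics', 'billing']
```

Producers still don’t call consumers directly, so the decoupling survives. What changes is that a breaking change now starts with a lookup instead of a guess.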

The Uncomfortable Truth

Event-driven architecture isn’t failing us; we’re failing it. We’ve taken a powerful architectural pattern and turned it into a distributed version of the same ball of mud we’ve always built, just with more network calls and better monitoring dashboards.

The companies succeeding with EDA aren’t the ones with the newest streaming platforms or the fanciest event meshes. They’re the ones who stopped treating events like throwaway messages and started treating them like the architectural contracts they actually are.

Your event-driven architecture doesn’t need another message broker or streaming platform. It needs you to stop, think, and actually design something for once. The “implementation first” mindset is how you get distributed chaos instead of distributed systems.

The question isn’t whether event-driven architecture works; it’s whether you’re willing to do the hard work to make it work. Most teams aren’t, which is why we’ll keep seeing the same three problems doom project after project. The tools are there, the patterns are documented, the lessons have been learned. The only thing missing is the discipline to apply them.

So go ahead, fire up that new Kafka cluster. Just don’t be surprised when you’re debugging a distributed big ball of mud instead of scaling infinitely into the cloud.