The Architect's Calculator: Debunking Multi-Cloud Mythology

Using Probability x Impact math to call out over-engineering excuses and to justify architectural complexity only where it is warranted

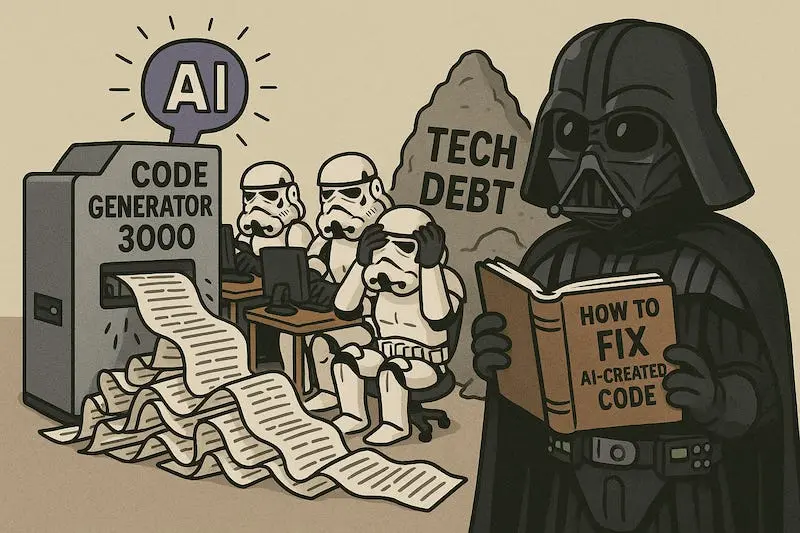

Every architecture meeting features the same pattern: Someone proposes an elegant, complex solution for a problem that exists mostly in PowerPoint slides. The “what if” scenarios sound convincing, until you apply basic math. The dirty secret of software architecture is that most complexity is justified by fear, not data.

The Probability x Impact Framework That Kills Bad Ideas

The core concept is brutally simple: Risk Value = Probability of Occurrence x Potential Impact. It’s basic risk management, but when applied to architectural decisions, it reveals how much complexity we build for statistically insignificant edge cases.

The framework assigns values from 0-100% to both probability and impact, then multiplies them to get a weighted risk score. This score can be compared against an organizational threshold (perhaps 75% or lower, depending on risk tolerance) to determine whether architectural investment is justified.
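As a minimal sketch, the calculation fits in a few lines of Python. The function names `risk_value` and `justifies_investment` are illustrative and not part of any library, and the default threshold of 75 simply mirrors the example figure above. Scores are kept as percentages so the arithmetic matches the way the framework is stated.

```python
def risk_value(probability_pct: float, impact_pct: float) -> float:
    """Weighted risk score in percent: probability x impact, both given as 0-100."""
    for name, value in (("probability", probability_pct), ("impact", impact_pct)):
        if not 0 <= value <= 100:
            raise ValueError(f"{name} must be between 0 and 100, got {value}")
    # Dividing by 100 keeps the product on the same 0-100 scale as its inputs.
    return probability_pct * impact_pct / 100


def justifies_investment(score_pct: float, threshold_pct: float = 75) -> bool:
    """True when the score meets the threshold (75 is an assumed default)."""
    return score_pct >= threshold_pct
```

For instance, a scenario rated at 50% probability and 40% impact scores 20%, well under a 75% threshold.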

Take the classic multi-cloud debate:

- Probability: ~5% (major provider completely disappearing)

- Impact: ~90% (catastrophic if unprepared)

- Risk Value: ~4.5%

That 4.5% score screams “stop over-engineering.” Instead of immediately implementing multi-cloud complexity, you’re better off maintaining clean infrastructure-as-code and documented migration processes.

The Real Cost of Premature Complexity

The bias toward adding complexity has a name: subtraction neglect. Research from Leidy Klotz, highlighted in Practical Engineering Management, shows that people systematically overlook subtractive solutions even when they’re more effective. We’ve been conditioned to demonstrate value through addition: new features, services, processes, layers of abstraction.

But in product engineering, what you subtract often matters far more than what you build. Microservice sprawl often starts with good intentions but decays into nanoservices: services so small and isolated that they deliver negative ROI. In one example, Segment moved from 100+ microservices back to a monolith, reducing deployment time from hours to minutes.

Three Deadly Sins and Their Calculated Reality

1. Building for Massive Scale From Day One

- Probability: 20% (context-dependent)

- Impact: 70% (significant disruption)

- Risk Value: 14%

This suggests designing for moderate growth with clear extension points rather than premature microservices. Many teams build distributed systems capable of handling millions of users while serving thousands, and they pay the complexity tax for capacity they’ll never use.

2. Database Failure Scenarios

- Probability: 80% (eventually all systems experience issues)

- Impact: 95% (potentially catastrophic)

- Risk Value: 76%

This high score justifies early investment in robust backup and recovery systems. Notice the difference: database resiliency has both high probability AND high impact, making it a genuinely worthwhile architectural investment.

3. The Security Over-Preparedness Trap

Many security architectures are built around statistically improbable scenarios while ignoring basic hygiene. The framework forces teams to distinguish between “possible” and “probable” threats, allocating resources accordingly.
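To make the contrast concrete, the scenarios scored so far can be ranked side by side. This is a sketch that uses the article's own estimates; the `Scenario` class and its field names are hypothetical, and the 75% threshold is the example value mentioned earlier, not a recommendation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    probability_pct: float  # estimated likelihood, 0-100
    impact_pct: float       # estimated severity, 0-100

    @property
    def risk_pct(self) -> float:
        # Probability x impact, kept on a 0-100 scale.
        return self.probability_pct * self.impact_pct / 100


THRESHOLD_PCT = 75  # assumed organizational threshold

scenarios = [
    Scenario("Cloud provider disappears", 5, 90),
    Scenario("Massive scale from day one", 20, 70),
    Scenario("Database failure", 80, 95),
]

# Highest risk first, so the most defensible investments appear at the top.
ranked = sorted(scenarios, key=lambda s: s.risk_pct, reverse=True)

for s in ranked:
    verdict = "invest now" if s.risk_pct >= THRESHOLD_PCT else "defer"
    print(f"{s.name:<28} {s.risk_pct:5.1f}%  {verdict}")
```

With these inputs only the database scenario clears the threshold; the other two stay in the "possible but not probable" bucket.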

When Complexity Is Actually Justified

The framework isn’t anti-complexity; it’s anti-unjustified-complexity. There are legitimate cases where complex architectures pay dividends:

Production Agents require sophisticated orchestration, as O’Reilly’s Architect’s Dilemma explains. When you’re building concierge-style services that handle ambiguity and require stateful conversations, the complexity serves a real user need rather than hypothetical future requirements.

Regulatory Requirements often force higher-risk architectures because the impact of non-compliance outweighs the implementation costs.

Business-Critical Dependencies, where third-party failures could literally kill your business, justify more complex contingency planning.

Implementing The Calculator in Real Architectures

Translating this framework into practice requires discipline:

Make Reasoning Explicit: Document architectural decisions with clear probability and impact estimates. Track these assumptions over time: were your probability estimates accurate?
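One lightweight way to keep those estimates honest is to store them alongside the decision itself. The sketch below assumes Python 3.9+; `DecisionRecord`, `RiskEstimate`, and `probability_drift` are hypothetical names, and the dates in the usage lines are purely illustrative.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class RiskEstimate:
    recorded_on: date
    probability_pct: float
    impact_pct: float
    rationale: str


@dataclass
class DecisionRecord:
    title: str
    estimates: list[RiskEstimate] = field(default_factory=list)

    def record(self, estimate: RiskEstimate) -> None:
        """Append a new estimate; earlier ones are kept for later comparison."""
        self.estimates.append(estimate)

    def probability_drift(self) -> float:
        """Change in estimated probability (percentage points), first to latest."""
        if len(self.estimates) < 2:
            return 0.0
        return self.estimates[-1].probability_pct - self.estimates[0].probability_pct


# Illustrative usage with made-up dates.
decision = DecisionRecord("Defer multi-cloud, keep infrastructure-as-code clean")
decision.record(RiskEstimate(date(2024, 1, 15), 5, 90, "Initial estimate"))
decision.record(RiskEstimate(date(2025, 1, 15), 2, 90, "A year of stable operations"))
print(decision.probability_drift())
```

A negative drift means the original estimate was too pessimistic, which is itself useful evidence the next time a similar "what if" comes up.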

Set Organizational Thresholds: Define risk tolerance levels. Maybe 75%+ requires immediate action, 50-75% warrants monitoring, and below 50% gets deferred until evidence emerges.
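These bands translate directly into a small triage function. The sketch below uses the example cut-offs from the paragraph above as defaults; the `Action` enum and the `triage` name are assumptions made for illustration, and real thresholds would come from your own risk tolerance.

```python
from enum import Enum


class Action(Enum):
    ACT_NOW = "immediate action"
    MONITOR = "monitor"
    DEFER = "defer until evidence emerges"


def triage(risk_pct: float, act_at: float = 75, monitor_at: float = 50) -> Action:
    """Map a 0-100 risk score to an action band (default cut-offs are examples)."""
    if not 0 <= risk_pct <= 100:
        raise ValueError(f"risk_pct must be between 0 and 100, got {risk_pct}")
    if risk_pct >= act_at:
        return Action.ACT_NOW
    if risk_pct >= monitor_at:
        return Action.MONITOR
    return Action.DEFER
```

Under these defaults a 76% score lands in the immediate-action band, while a 14% or 4.5% score is deferred.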

Review Quarterly: Recalculate probabilities based on actual operational data. That 5% cloud provider failure probability might drop to 2% after a year of stable operations, making that multi-cloud prep even less justified.
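The article does not prescribe how to recalculate. One common option, shown here purely as an assumption, is a Beta-Binomial style update that blends the original estimate with observed history. The function name and the `prior_strength` parameter (how many observation periods the original guess is worth) are hypothetical; the value of 8 below was picked only so that the illustration reproduces the 5% to 2% drop mentioned above.

```python
def updated_probability_pct(
    prior_pct: float,
    prior_strength: float,
    periods_observed: int,
    failures_observed: int,
) -> float:
    """Posterior mean failure probability (percent) after observing real periods.

    The prior estimate is treated as `prior_strength` pseudo-periods of evidence.
    """
    if prior_strength <= 0:
        raise ValueError("prior_strength must be positive")
    if not 0 <= failures_observed <= periods_observed:
        raise ValueError("failures_observed must be between 0 and periods_observed")
    prior_failures = prior_pct / 100 * prior_strength
    posterior = (prior_failures + failures_observed) / (prior_strength + periods_observed)
    return posterior * 100


# Twelve months with no provider failure, starting from a 5% estimate.
revised = updated_probability_pct(prior_pct=5, prior_strength=8,
                                  periods_observed=12, failures_observed=0)
print(f"{revised:.1f}%")
```

The same function pushes the estimate up when incidents do occur, so the review can strengthen the case for investment as well as weaken it.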

Balance Across the System: Don’t optimize one component while neglecting others. A brilliant microservice architecture means nothing if your database has single points of failure.

The Most Controversial Insight: Simplicity as Strategy

The calculator’s most provocative finding is that simplicity isn’t just easier; it’s often strategically superior. As one Medium post exploring this framework notes, clean infrastructure-as-code with migration processes often beats full multi-cloud implementations for 95% of use cases.

Teams that embrace subtractive engineering:

- Remove unnecessary steps in user flows

- Kill underused settings and toggles

- Consolidate microservices into cohesive domains

- Automate inputs where possible

- Ask “Can this feature be absorbed into another?”

This approach isn’t about cutting corners; it’s about focusing engineering effort where it actually matters. Every minute spent on low-probability scenarios is a minute not spent improving the core user experience.

The Takeaway: Quantify Before You Complexify

Architects love elegant solutions, but elegance divorced from business reality becomes technical debt in disguise. The probability x impact framework provides the mathematical backbone to push back against complexity for complexity’s sake.

Next time someone proposes multi-cloud “just in case” or premature microservices “for scale”, ask for the numbers. If they can’t quantify the probability and impact, they’re building castles in the air, and you’re the one who’ll eventually have to maintain them.

The best architectural decisions aren’t the most sophisticated; they’re the ones that solve real problems with appropriate complexity. Everything else is just resume-driven development with extra steps.