Apple Just Made Browser AI Ridiculously Fast

Apple's FastVLM and MobileCLIP2 models running on WebGPU prove on-device AI doesn't need cloud servers anymore

Apple dropped a browser-based AI bomb: video captioning that returns results faster than most APIs can return “processing.” Their new FastVLM and MobileCLIP2 models, released on Hugging Face alongside a real-time video captioning demo, are among the most significant advances in on-device AI we’ve seen this year.

When Your Browser Outperforms Cloud Services

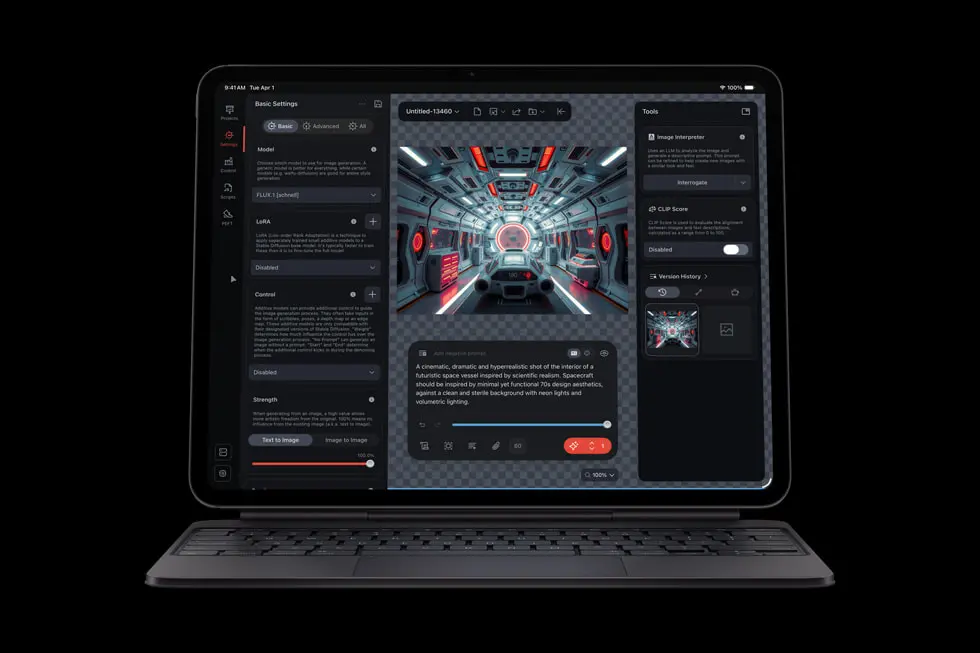

The demo on Hugging Face Spaces shows video captioning running directly in the browser using WebGPU acceleration. No server round-trips, no API calls, no waiting on the network. It’s the kind of performance that makes you question why we’ve been paying for cloud vision APIs that can take seconds to respond when local inference skips the network entirely.
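To make "no waiting" concrete, here is a minimal sketch of one way a page could keep captions close to real time: drop any frame that arrives while the previous one is still being captioned, rather than queueing it. This is not taken from the demo's source. The names `latestOnly` and `Captioner` are hypothetical, and the captioner is just any async function that turns a frame into text.

```typescript
// Hypothetical captioner signature; a real one would wrap a model call.
type Captioner<F> = (frame: F) => Promise<string>;

// Wraps a captioner so that frames arriving while inference is in flight
// are dropped instead of queued. Captions never lag behind the video by
// more than one inference.
function latestOnly<F>(caption: Captioner<F>, onCaption: (text: string) => void) {
  let busy = false;
  let dropped = 0;
  return {
    // Resolves to true if the frame was captioned, false if it was dropped.
    async push(frame: F): Promise<boolean> {
      if (busy) {
        dropped++;
        return false;
      }
      busy = true;
      try {
        onCaption(await caption(frame));
      } finally {
        busy = false; // release the gate even if the captioner throws
      }
      return true;
    },
    // Number of frames skipped so far.
    get dropped(): number {
      return dropped;
    },
  };
}
```

In a page, `push` would typically be called once per video frame. The faster local inference gets, the fewer frames are dropped, with no backlog to drain either way.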

Apple’s approach here is characteristically pragmatic: take their mobile-optimized models and make them run everywhere. The FastVLM models range from 0.5B to 7B parameters, with quantized versions (int8, int4) that scream on consumer hardware. MobileCLIP2 offers multiple size variants (S0 through S4) specifically designed for different latency targets.
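A quick back-of-the-envelope calculation shows why the quantized variants matter. The sketch below estimates weight storage only, as parameters times bits per weight. It ignores activations, caches, and quantization metadata, and the fp16 baseline is an assumption added for comparison, so treat the numbers as rough lower bounds rather than measured footprints.

```typescript
// Rough weight-only memory estimate: parameters × bits per weight / 8 bytes.
// Ignores activations, caches, and quantization metadata (scales, zero points).
type Precision = "fp16" | "int8" | "int4";

const BITS_PER_WEIGHT: Record<Precision, number> = { fp16: 16, int8: 8, int4: 4 };

function weightGiB(paramsBillions: number, precision: Precision): number {
  const bytes = paramsBillions * 1e9 * (BITS_PER_WEIGHT[precision] / 8);
  return bytes / 1024 ** 3;
}

// The two ends of the FastVLM size range mentioned above.
for (const params of [0.5, 7]) {
  for (const precision of ["fp16", "int8", "int4"] as const) {
    console.log(`${params}B @ ${precision}: ${weightGiB(params, precision).toFixed(2)} GiB`);
  }
}
```

By this estimate the 7B model drops from roughly 13 GiB of weights at fp16 to about 3.3 GiB at int4, and the 0.5B model fits in about a quarter of a GiB.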

The Architecture That Makes This Possible

What makes this release particularly interesting isn’t just the models; it’s how they’re deployed. WebGPU support means these models use your GPU directly through a modern compute API, bypassing the WebGL limitations that have long constrained browser-based ML. The demo includes full source code, showing exactly how the WebGPU pipeline is put together.
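WebGPU is not available everywhere yet, so any app built this way needs a detection step. The sketch below is an illustration rather than the demo's actual code. It relies on the standard `navigator.gpu.requestAdapter()` call, which resolves to `null` when no suitable adapter exists. The `pickBackend` helper, the `"cpu-fallback"` label, and the small structural interfaces are hypothetical, defined here so the snippet compiles without extra WebGPU type packages.

```typescript
// Minimal structural types so the sketch compiles without WebGPU typings.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}
interface NavigatorLike {
  gpu?: GpuLike;
}

type Backend = "webgpu" | "cpu-fallback";

// Returns "webgpu" only if the API exists AND an adapter is actually granted.
// Browsers can expose navigator.gpu and still resolve requestAdapter() to null.
async function pickBackend(nav: NavigatorLike | undefined): Promise<Backend> {
  if (!nav || !nav.gpu) return "cpu-fallback";
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter ? "webgpu" : "cpu-fallback";
  } catch {
    return "cpu-fallback";
  }
}
```

In a browser the argument would be the global `navigator` object. Passing it in explicitly keeps the function easy to test outside a browser.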

The models themselves build on Apple’s FastViT architecture, a hybrid design that pairs convolutional efficiency with transformer accuracy. The 5-stage design in their larger models (MCi3 and MCi4) demonstrates how architectural choices can dramatically reduce latency at higher resolutions. At 1024×1024 resolution, their MCi3 architecture runs 7.1× faster than models with a comparable parameter count.
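Some rough arithmetic helps explain why an extra stage pays off at high resolution. The sketch below assumes a FastViT-style layout with a stride-4 stem and a 2× spatial downsample between consecutive stages. That layout is an assumption for illustration, not a spec taken from the model cards, and the calculation does not reproduce the 7.1× figure. It only shows how quickly the number of output tokens shrinks.

```typescript
// Assumption: stride-4 stem, then a 2× spatial downsample between stages,
// so total stride = stemStride × 2^(stages − 1).
function outputTokens(resolution: number, stages: number, stemStride = 4): number {
  const totalStride = stemStride * 2 ** (stages - 1);
  const side = Math.floor(resolution / totalStride);
  return side * side;
}

// Plain ViT for comparison: one token per non-overlapping patch.
function vitTokens(resolution: number, patch: number): number {
  const side = Math.floor(resolution / patch);
  return side * side;
}

console.log(outputTokens(1024, 4)); // 1024 tokens (32 × 32 grid)
console.log(outputTokens(1024, 5)); // 256 tokens (16 × 16 grid)
console.log(vitTokens(1024, 14));   // 5329 tokens (73 × 73 grid)
```

Under these assumptions the fifth stage cuts the token count by 4× at 1024×1024. Since self-attention cost grows roughly with the square of the token count, fewer tokens in the late stages translate directly into lower latency.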

Why This Changes Everything for Developers

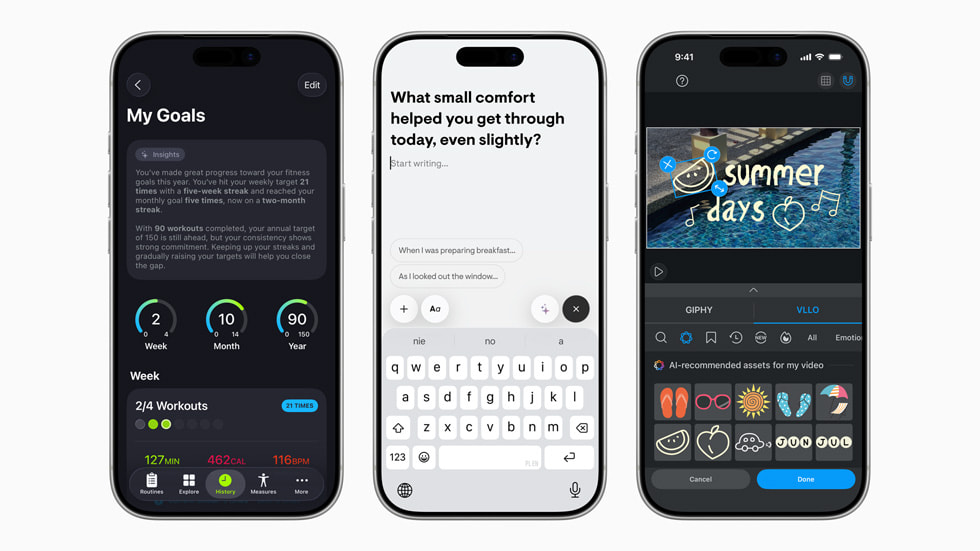

The implications here are massive. Real-time video analysis without server costs? Browser-based content moderation? Local image recognition that doesn’t phone home to Google or AWS? Apple just opened the door to entirely new categories of applications that don’t depend on cloud infrastructure.

Their performance claims are backed by concrete numbers: MobileCLIP2-S4 matches the accuracy of SigLIP-SO400M/14 while being 2× smaller, and it outperforms DFN ViT-L/14 at 2.5× lower latency. These aren’t incremental improvements; they’re generational leaps.

The Quiet Revolution in Model Distribution

Perhaps the most Apple move here is how they released this. Not through a flashy keynote, but through Hugging Face repositories with open access. The FastVLM collection and MobileCLIP2 models are available right now, with permissive licensing that suggests Apple is serious about developer adoption.

This release demonstrates that the future of AI isn’t just about bigger models; it’s about smarter deployment. While everyone else was trying to serve 100B-parameter models from expensive data centers, Apple figured out how to run state-of-the-art vision models on your phone and in your browser.

The browser demo isn’t just a tech showcase; it’s a statement. The same models running in your browser today could be in Apple’s devices tomorrow, and they’ll work whether you have internet or not. That’s not just convenient; it’s revolutionary for applications where latency, privacy, or connectivity matter.

The only question now is how long until every other company follows Apple’s lead and realizes that sometimes the best cloud is no cloud at all.