Semantic Layers Are Back, And AI Is Driving the Revival

Semantic layers, once considered legacy, are experiencing renewed interest due to the need for standardized, AI-readable data definitions across BI and analytics platforms.

The semantic layer is staging a comeback that nobody saw coming. Just when you thought this 90s-era data architecture concept was headed for the IT graveyard, AI has dragged it back into the spotlight, and it’s not just rebranded hype. The dirty secret of modern AI systems is that they’re terrible at understanding your data without proper context and standardization.

Why AI Agents Need Semantic Consistency

Semantic layers standardize data definitions and governance policies so AI agents can understand, retrieve, and reason over all kinds of data, as well as AI tools, memories, and other agents. This isn’t just theoretical: it’s the infrastructure that keeps AI systems from delivering contradictory answers based on inconsistent data definitions.

The community sentiment reveals this isn’t just vendor hype. As one experienced practitioner notes, “A good semantic model really bridges the BI adoption gap by providing assets that people can do drag and drop report development. In larger orgs it’s also a great way to enforce standardization of calculations like KPIs: without a semantic layer Sue from finance might have a retention rate calculation different from Bob from HR’s version.”

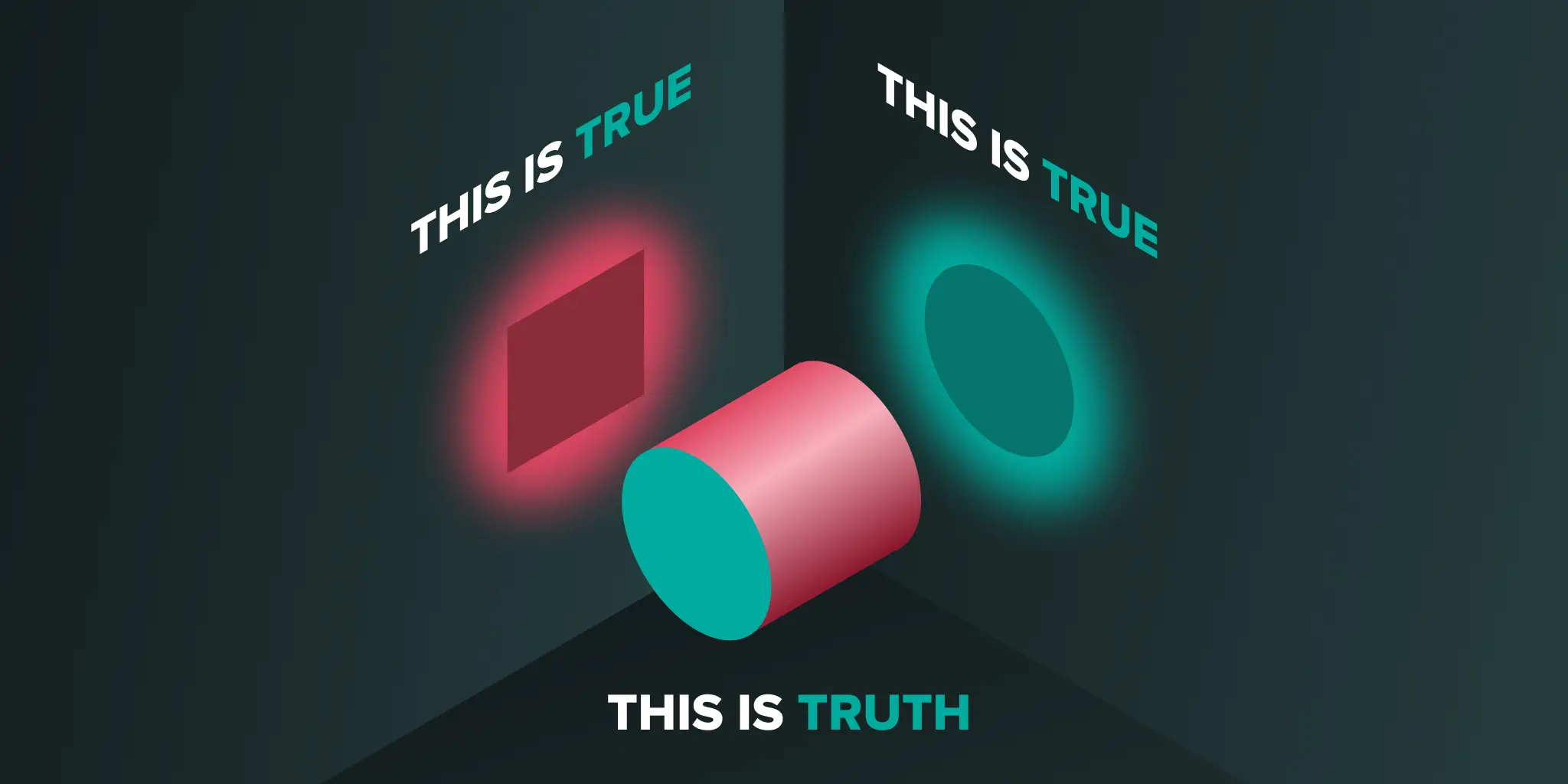

This standardization problem gets worse with AI. When you ask an AI agent “what’s our customer retention rate”, it has to pick a definition, and if several exist it will pick one silently. Ambiguous definitions lead to unreliable outputs. The semantic layer becomes the single source of truth that both humans and machines can interpret consistently.
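To make the ambiguity concrete, here is a minimal Python sketch. The two formulas, the sample counts, and every name in it (`PeriodCounts`, `retention_excluding_new`, `retention_including_new`, `GOVERNED_METRICS`) are illustrative assumptions, not definitions taken from any particular tool or team.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeriodCounts:
    customers_at_start: int
    customers_at_end: int
    new_customers: int  # customers acquired during the period


def retention_excluding_new(c: PeriodCounts) -> float:
    # Hypothetical definition A: only customers who were already present count.
    return (c.customers_at_end - c.new_customers) / c.customers_at_start


def retention_including_new(c: PeriodCounts) -> float:
    # Hypothetical definition B: a naive end-over-start ratio.
    return c.customers_at_end / c.customers_at_start


# A semantic layer registers exactly one governed definition per metric name.
GOVERNED_METRICS = {"customer_retention_rate": retention_excluding_new}

counts = PeriodCounts(customers_at_start=200, customers_at_end=190, new_customers=30)

print(retention_excluding_new(counts))  # definition A
print(retention_including_new(counts))  # definition B, same data, different answer
print(GOVERNED_METRICS["customer_retention_rate"](counts))  # the governed answer
```

Both functions are defensible; the point is that a human or an agent asking for “retention rate” should be routed to one of them by name rather than reinventing the formula.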

From Legacy Architecture to AI Foundation

The modern semantic layer isn’t your grandfather’s Business Objects universe. Today’s implementations sit at the intersection of data governance, BI tooling, and AI infrastructure. They’ve evolved from simple query abstraction layers to sophisticated metadata management systems that serve both human analysts and AI agents.

Microsoft’s Power BI team understands this shift intimately. Their optimization guide for Copilot lays out exactly why semantic modeling matters for AI: “Full names improve clarity and help Copilot understand the semantic meaning of each field.” They recommend replacing abbreviations like “CustID” with “CustomerID” and “Rev” with “Revenue”. These are simple changes that make field names far easier for an AI to interpret.
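A renaming pass like this is easy to prototype. The sketch below is plain Python, not a Power BI API; the glossary contents and the helper name `expand_field_name` are assumptions for illustration, and a real glossary would come from your own data dictionary.

```python
import re

# Hypothetical abbreviation glossary; extend it from your own data dictionary.
ABBREVIATIONS = {
    "Cust": "Customer",
    "Rev": "Revenue",
    "Qty": "Quantity",
    "Amt": "Amount",
}

# Matches acronym runs ("ID"), capitalized words ("Cust"), and lowercase words.
TOKEN = re.compile(r"[A-Z]+(?![a-z])|[A-Z][a-z]*|[a-z]+")


def expand_field_name(name: str) -> str:
    """Replace known abbreviations inside a PascalCase field name."""
    return TOKEN.sub(lambda m: ABBREVIATIONS.get(m.group(0), m.group(0)), name)


for field in ["CustID", "Rev", "OrderQty", "Revenue"]:
    print(field, "->", expand_field_name(field))
```

Names that are already spelled out, such as “Revenue”, pass through unchanged, so the pass is safe to run repeatedly.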

The core architectural principle remains unchanged: semantic layers create a business-friendly abstraction over complex data infrastructure. But where they once served only human analysts, they now provide machine-readable context for AI systems.

The RAG Evolution: From Simple Retrieval to Context Engineering

The evolution of retrieval-augmented generation (RAG) has exposed the limitations of dumping raw documents into AI context windows. As Steve Hedden explains in his comprehensive analysis, “RAG was never the end goal, just the starting point. As we move into the agentic era, retrieval is evolving into a part of a full discipline: context engineering.”

The problem with naive RAG implementations is what Hedden calls “context poisoning” or “context clash”, where misleading or contradictory information contaminates the reasoning process. Even when retrieving relevant context, you can overwhelm the model with sheer volume, leading to “context confusion” or “context distraction.”

This is where semantic layers become essential. They provide the structured metadata and standardized definitions that enable AI agents to navigate complex enterprise data landscapes without getting lost in noise. Semantic layers long predate RAG, but they address its limitations directly, which is why they are becoming a foundation for what’s now being called “context engineering”: the art and science of filling the context window with just the right information at each step of an agent’s trajectory.
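As a rough illustration of “just the right information”, the sketch below picks only the metric definitions that match a question instead of passing the whole model to the agent. The `SEMANTIC_MODEL` contents, the keyword-overlap scoring, and both function names are assumptions; production systems typically use embeddings or a metadata API rather than word matching.

```python
import re

# Hypothetical semantic model: metric name -> description and synonyms.
SEMANTIC_MODEL = {
    "customer_retention_rate": {
        "description": "Share of customers at period start who are still active at period end.",
        "synonyms": ["churn"],
    },
    "net_revenue": {
        "description": "Gross sales minus refunds and discounts.",
        "synonyms": ["sales", "income"],
    },
    "active_customers": {
        "description": "Customers with at least one order in the last 90 days.",
        "synonyms": ["users"],
    },
}


def select_definitions(question: str, model: dict, max_items: int = 2) -> list[str]:
    """Rank metrics by keyword overlap with the question; keep the top few."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    scored = []
    for name, meta in model.items():
        terms = set(name.split("_")) | set(meta["synonyms"])
        score = len(words & terms)
        if score:
            scored.append((score, name))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:max_items]]


def build_context(question: str, model: dict) -> str:
    """Render only the selected definitions as context lines for a prompt."""
    names = select_definitions(question, model)
    return "\n".join(f"{n}: {model[n]['description']}" for n in names)


print(build_context("What's our customer retention rate?", SEMANTIC_MODEL))
```

The `max_items` cap is the crude part of the design: it bounds how much context is injected, trading recall for a smaller, less distracting prompt.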

Knowledge Graphs: The Secret Sauce for Semantic AI

The rise of GraphRAG (retrieval-augmented generation using knowledge graphs) has reignited interest in semantic technology. Knowledge graphs provide structure and meaning to enterprise data, linking entities and relationships across documents and databases to make retrieval more accurate and explainable for both humans and machines.
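The core idea can be sketched with nothing more than subject-predicate-object triples. The entities, predicates, and the `facts_about` helper below are invented for illustration; real GraphRAG systems sit on a graph database or an RDF store and use far richer retrieval.

```python
# Hypothetical triples linking entities found across documents and tables.
TRIPLES = [
    ("Acme Corp", "is_a", "Customer"),
    ("Acme Corp", "placed", "Order 1001"),
    ("Order 1001", "contains", "Widget"),
    ("Widget", "is_a", "Product"),
]


def facts_about(entity: str, triples: list, max_hops: int = 2) -> list[str]:
    """Collect facts reachable from an entity by following outgoing edges."""
    facts = []
    frontier = [entity]
    seen = {entity}
    for _ in range(max_hops):
        next_frontier = []
        for subject in frontier:
            for s, p, o in triples:
                if s == subject:
                    facts.append(f"{s} {p} {o}")
                    if o not in seen:
                        seen.add(o)
                        next_frontier.append(o)
        frontier = next_frontier
    return facts


for fact in facts_about("Acme Corp", TRIPLES):
    print(fact)
```

Because every retrieved fact is an explicit edge, the agent’s context can be traced back to the relationships that produced it, which is where the explainability comes from.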

The market has taken notice of this trend. Between 2023 and 2025, we’ve seen significant consolidation in the semantic technology space:

- January 2023: Digital Science acquired metaphacts

- February 2023: Progress acquired MarkLogic

- July 2024: Samsung acquired Oxford Semantic Technologies

- October 2024: Ontotext and Semantic Web Company merged to form Graphwise

- May 2025: ServiceNow announced its acquisition of data.world

These moves mark a clear shift: knowledge graphs are no longer just metadata management tools. They’ve become the semantic backbone for AI, which brings them closer to their origins in expert systems.

Enterprise Data Governance Meets AI Governance

The most compelling case for semantic layers in the AI era might be governance. Traditional data governance focused on human consumption, making sure finance and marketing departments calculated revenue the same way. AI governance extends this to machine consumption.

As one practitioner bluntly stated, “Semantic layers are great from a governance perspective and the AI use case is kind of pointless (other than convincing your bosses that you should be allowed to spend time on semantic models).” While cynical, this highlights how pragmatic data professionals are using AI as the justification for investments they know they need anyway.

Consider the alternative: without standardized definitions, you’re essentially feeding your AI systems conflicting definitions of the same metric. When finance calculates “revenue” differently than sales, your AI will produce contradictory insights. The semantic layer becomes your single source of truth that ensures consistency across all human and machine consumers.

Practical Implementation: From Theory to Production

Modern semantic layer implementations span the entire data stack. Snowflake’s new Open Semantic Interchange initiative attempts to standardize how companies document their data to make it AI-ready. Microsoft’s Power BI team emphasizes that semantic optimization isn’t just about performance; it’s about enabling AI to deliver meaningful insights.

The key architectural pattern that emerges is the separation between core data layers and semantic layers. As one engineer explains, “I always have a semantic layer. I rarely have the end users hit the core layer. The core layer is where all clean and coordinated data goes. It should be modeled against the entire company and will change about as fast as the company does (not very). The semantic layer is used for your data products.”

This separation becomes critical when serving AI systems. The core layer handles data engineering concerns: consistency, coordination, and cleanliness. The semantic layer handles the business context: the definitions, relationships, and domain-specific logic that both humans and AI need to understand the data.
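One way to picture the split is a semantic definition that compiles down to SQL against a core table. Everything in this sketch is an assumption made for illustration: the `Metric` class, the `core.orders` table and its columns, and the `compile_query` helper. Real semantic layers add joins, filters, and access policies on top.

```python
from collections.abc import Sequence
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """Semantic-layer definition: business meaning plus how to compute it."""
    name: str
    description: str
    expression: str  # SQL aggregate over the core table
    table: str       # core-layer table the metric reads from


# Hypothetical governed metric over a hypothetical core table.
NET_REVENUE = Metric(
    name="net_revenue",
    description="Order amount minus refunds.",
    expression="SUM(amount) - SUM(refund_amount)",
    table="core.orders",
)


def compile_query(metric: Metric, group_by: Sequence[str] = ()) -> str:
    """Turn a metric request into SQL so consumers never hand-write the formula."""
    dims = ", ".join(group_by)
    select = f"{dims}, " if dims else ""
    sql = f"SELECT {select}{metric.expression} AS {metric.name} FROM {metric.table}"
    if dims:
        sql += f" GROUP BY {dims}"
    return sql


print(compile_query(NET_REVENUE, ["region"]))
```

An analyst’s dashboard and an AI agent would both call `compile_query` with the metric name and dimensions they want, so the revenue formula lives in exactly one place while the core table can be remodeled underneath it.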

The Future: Beyond Human-Centric Data Models

The semantic layer revival represents a fundamental shift in how we think about data infrastructure. For decades, we’ve optimized data systems for human consumption. We’re now entering an era where our primary data consumers might be machines.

This changes how we approach data modeling, governance, and tooling. AI agents don’t care about pretty visualizations; they care about consistent, well-defined data structures they can reason over. They need standardized metadata that describes relationships, hierarchies, and business rules in machine-readable formats.

The open question is whether semantic layers will remain separate infrastructure or become embedded in AI platforms. Companies like Omni are betting on semantic layers as core differentiators; their recent acquisition of Explo signals continued investment in semantic infrastructure as a competitive advantage.

As AI systems become more sophisticated, the semantic layer becomes less of an optional abstraction and more of a mandatory foundation. Without it, you’re essentially asking AI to make sense of chaos, and getting exactly the unreliable results you’d expect.

The semantic layer isn’t just back; it’s evolving into the critical translation layer between raw data and intelligent systems. Any organization serious about AI adoption should be thinking about how to implement semantic consistency before its AI initiatives drown in data ambiguity.