The NBA's Secret Ref: How AI Is Calling Fouls on Traditional Sports Analytics

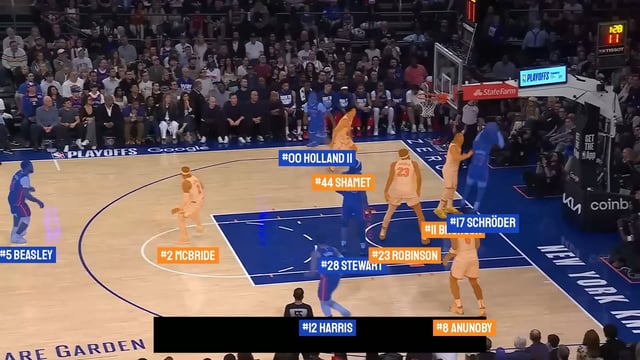

A revolutionary basketball AI stack combining RF-DETR, SAM2, and SigLIP achieves 93% player recognition accuracy without manual labeling, outperforming human scorers in messy game conditions.

Basketball has always been one of computer vision’s toughest opponents. Players move at blur-inducing speeds, bodies crash into each other creating chaotic occlusions, and identical uniforms make traditional tracking algorithms throw in the towel. For years, sports analytics relied on expensive manual tagging, limited camera setups, and educated guesses about who’s who on the court.

Enter a pipeline that’s changing the game: RF-DETR for detection, SAM2 for tracking through contact, SigLIP+UMAP for team clustering, and ResNet for jersey number classification. The results aren’t just impressive, they’re challenging fundamental assumptions about what AI can do in sports analytics today.

The Detection Foundation: RF-DETR’s Real-Time Advantage

Most sports AI systems start with detection, and RF-DETR-S offers a crucial edge over traditional YOLO architectures. As benchmarks show ↗, RF-DETR-S actually outperforms larger models like YOLO11-L while running faster, a critical advantage when processing real-time sports footage.

The system was fine-tuned on a custom dataset ↗ with 10 basketball-specific classes: players, jersey numbers, referees, the ball, and various shot types. This specialized training allows the model to handle basketball’s unique challenges, motion blur from fast breaks, partial occlusions during screens, and the notorious difficulty of reading jersey numbers when players are in motion.

The detection pipeline doesn’t just identify players, it classifies basketball-specific actions like “player-jump-shot” and “player-layup-dunk”, providing coaches and analysts with rich contextual data beyond simple player tracking. This multi-class detection forms the foundation for the entire recognition system.

Tracking Through Chaos: SAM2’s Occlusion Handling

Traditional tracking systems fall apart when players collide, set screens, or get momentarily blocked from camera view. SAM2 solves this with its internal “temporal memory bank”, a mechanism that stores each player’s appearance features over time, allowing re-identification even after complete occlusion.

The system prompts SAM2 with RF-DETR’s initial bounding boxes, then lets the segmentation model take over. But basketball’s high-action nature creates interesting failure modes:

“After a shot or pass, SAM2 might incorrectly classify the ball or a background element as part of the player’s mask”, the research notes. The solution? A cleanup function that identifies disconnected mask segments and removes outliers beyond a distance threshold from the main player body.

This approach proved remarkably robust across various game scenarios, maintaining player IDs through the kinds of chaotic plays that typically break tracking algorithms.

The Unsupervised Revolution: Team Clustering Without Labels

Here’s where things get particularly clever. Instead of manually labeling teams or using predefined uniform colors, the system employs SigLIP vision-language embeddings combined with UMAP dimensionality reduction and K-means clustering.

The process extracts one frame per second from training videos, crops player regions using RF-DETR detections (using central crops to avoid opponent contamination), and generates embeddings via SigLIP. The system then reduces these high-dimensional embeddings to three dimensions using UMAP before applying K-means clustering to automatically separate players into teams based on uniform features.

This zero-shot team identification works across different games, courts, and lighting conditions, something traditional color-based methods struggle with. SigLIP’s embeddings capture both low-level visual cues (colors, textures) and higher-level semantic information about uniform patterns and designs.

The OCR Showdown: SmolVLM2 vs ResNet-32

Jersey number recognition presents a fascinating case study in model specialization. The team initially tried SmolVLM2, a compact vision-language model pre-trained on document OCR. Out-of-the-box performance wasn’t terrible, 56% accuracy, but it struggled with basketball-specific challenges.

Fine-tuning SmolVLM2 on a custom dataset of 3.6k NBA jersey crops ↗ boosted accuracy to 86%, but the model still produced implausible predictions like “011” or “3000”, numbers that don’t exist in professional basketball.

Then came the surprise contender: ResNet-32. This classic CNN architecture, fine-tuned on the same dataset reformatted for classification, achieved 93% test accuracy, outperforming the specialized vision-language model.

The takeaway? Sometimes specialized, traditional architectures still beat general-purpose foundation models at domain-specific tasks. The dataset’s natural imbalance reflected real court distributions: number “8” appeared 315 times (8.7%) while “6” appeared only 8 times (0.2%), mimicking actual player number preferences.

Identity Resolution: Matching Numbers to Players

Getting jersey numbers right is only half the battle. The system must correctly assign each number to the tracked player using Intersection over Smaller Area (IoS), a variation of IoU that normalizes by the smaller region. Since jersey numbers are always smaller than player masks, IoS measures how much of the number lies within the player segmentation.

The system keeps only matches with IoS ≥ 0.9, converting RF-DETR’s number bounding boxes into masks before computing the metric. To combat recognition errors, particularly when players are positioned far from cameras, the pipeline samples numbers every 5 frames and confirms identities only after three consecutive identical predictions.

This temporal consistency mechanism stabilizes recognition without adding significant complexity, demonstrating how simple heuristics can dramatically improve reliability in production systems.

Performance Realities: What Works and What Doesn’t

The developers achieved remarkable accuracy but faced hard performance constraints. On an NVIDIA T4, the system runs at 1-2 FPS, far from real-time broadcast requirements. The bottleneck? SAM2’s linear performance drop as tracked objects increase. With 10 players on court, tracking becomes computationally expensive.

The model’s performance varies dramatically by player position. Recognition accuracy plummets for “spot-up shooters waiting in the corner” where jersey numbers are barely visible. This reveals an important truth: even 93% overall accuracy doesn’t mean equal performance across all game situations.

Interestingly, the pipeline demonstrated that classic CNNs like ResNet can still outperform modern vision-language models for specialized classification tasks. While research shows SigLIP and similar vision-language models ↗ excel at general visual understanding, domain-specific tasks often benefit from targeted, simpler architectures.

The Bigger Picture: What This Means for Sports Analytics

This basketball AI pipeline represents more than just another computer vision project, it demonstrates how modern AI tools can be composed to solve complex, multi-step recognition problems. The combination of specialized detection (RF-DETR), robust tracking (SAM2), unsupervised clustering (SigLIP+UMAP), and targeted classification (ResNet) creates a system greater than the sum of its parts.

The implications extend beyond basketball. Similar architectures could revolutionize player tracking in football, hockey, soccer, and other sports where player identification and movement analysis drive strategic insights. The unsupervised team clustering alone could save thousands of hours in manual labeling across sports leagues worldwide.

As one developer noted, they’ve already experimented with rule enforcement, specifically detecting 3-second violations ↗, suggesting automated officiating might not be far behind automated analytics.

The technology isn’t ready for real-time broadcast yet, but it’s closer than most realize. When pipeline optimization catches up with accuracy improvements, we might see AI systems not just analyzing games, but actively participating in officiating them. The question isn’t whether AI will change sports analytics, it’s how soon we’ll trust it to call the close ones.