The Slack message hits different when you’ve been watching it happen in real time: “Has anyone else noticed their coworkers getting dumber by the day?” It’s not polite, but it’s not wrong either. Across engineering teams and product departments, a quiet epidemic is spreading: the smartest tools are producing the shallowest thinking.

Welcome to the age of AI-induced cognitive atrophy, where your colleagues aren’t actually getting dumber, but their critical thinking muscles are quietly wasting away from disuse. And the data is far more alarming than any snarky team chat suggests.

The 17% Intelligence Tax You Didn’t Know You Were Paying

Let’s start with the hard numbers. A recent study published on arXiv (Shen & Tamkin, 2026) put this theory to the test with 52 professional programmers. The setup was straightforward: one group learned a new coding skill using AI assistance, another went old-school and wrestled with the problem using their own brainpower. Both completed the same tasks. Both took roughly the same amount of time.

But when tested on their actual understanding of what they’d built, the AI-assisted group scored 17% lower on average. This wasn’t a minor gap; it was a statistically significant one that appeared across all experience levels, from junior developers to senior architects. The programmers who relied most heavily on AI-generated code without demanding explanations performed the worst.

This is cognitive offloading in action. As Psychology Today explains, when you outsource mental labor to AI, you’re not just saving time; you’re bypassing the very cognitive processes that build expertise. Your brain doesn’t encode the patterns, doesn’t struggle through the problem-solving loops, and doesn’t form the deep neural connections that transform information into intuition.

The researchers discovered something crucial: participants who asked AI to explain its generated code performed significantly better. The difference wasn’t AI use itself but mindless AI use versus mindful AI use. One builds competence; the other builds only the illusion of it.

The “Let Me Just Ask Claude” Syndrome

If you’ve been in a planning session recently, you’ve heard the phrase. It’s become the workplace equivalent of “let me Google that”: a reflexive reach for external cognition that short-circuits internal reasoning. The sentiment from developer forums is clear: teams are having fewer productive technical conversations because everyone defaults to asking AI instead of thinking through problems collectively.

One particularly telling observation from the trenches: colleagues are “rushing and shitting out random things using AI without any critical thinking.” The phrase is crude but captures the core problem: speed without substance, output without understanding.

This manifests in subtle but dangerous ways. A product manager generates a PRD without deeply understanding the user problem. An engineer merges LLM-suggested code without tracing the logic. A designer creates mockups that look polished but solve the wrong problem. The work looks professional, but scratch the surface and you’ll find a hollow understanding underneath.

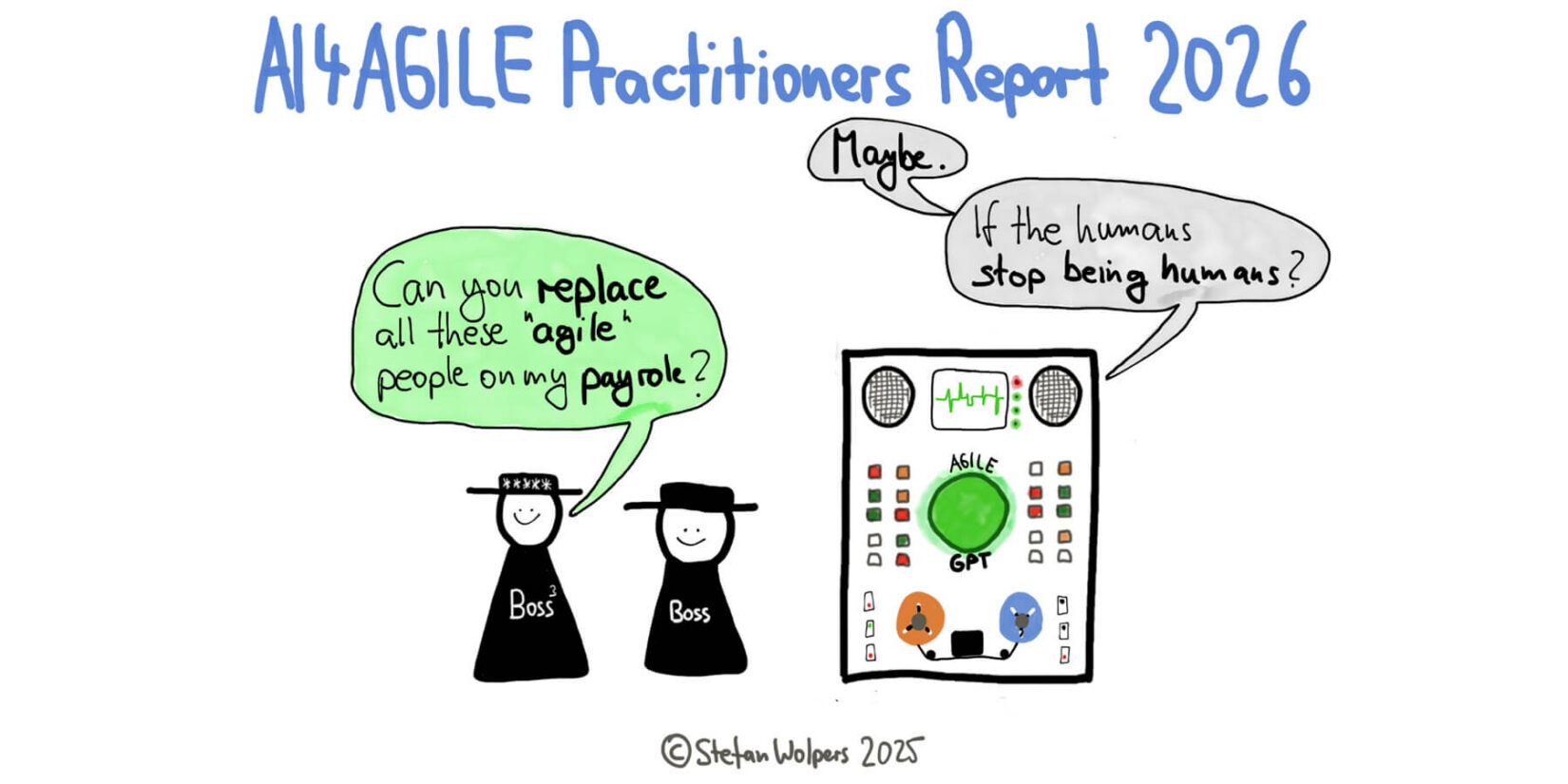

The AI4Agile Practitioners Report 2026 surveyed 289 Agile practitioners and found this concern is widespread. While 83% use AI tools, most spend 10% or less of their time with them, not because of resistance, but because of integration uncertainty. They don’t know where AI fits into meaningful work.

More tellingly, practitioners aren’t worried about AI replacing their jobs. They’re worried about it replacing their thinking. The report highlights that the most frequently mentioned concerns are “the erosion of Agile values and principles, the loss of human-centered collaboration, and reduced critical thinking.” One respondent captured the fear perfectly: AI might optimize delivery while weakening the spirit of agility, efficiency at the cost of reflection, convenience at the expense of competence.

When Generations Collide Over Cognitive Capacity

The cognitive atrophy problem isn’t uniform across age groups. Research from TheCable reveals a startling reversal: academic achievement records spanning nearly 200 years show a clear decline moving from Millennials to Generation Z, despite Gen Z spending more years in formal education than any previous generation.

In Nigeria, where Gen Z represents roughly 50 million people, this isn’t treated as just a skills gap; it’s framed as an “intelligence crisis.” The core issue is that technology, promised as a bridge to deeper knowledge, has become a crutch that bypasses the brain’s innate capacity for critical exploration.

Older workers who remember life before AI assistance learned to wrestle with difficult questions, research without instant answers, and build mental models through sustained effort. As one veteran researcher described, locating a single article in a physical library required individual initiative that “inevitably opened up unexpected and critically valuable avenues of reading, thereby cultivating curiosity, judgment, and creative thought.”

Today’s workers, by contrast, are efficient at finding answers but deficient at understanding them. They’ve become masters of prompt engineering while losing the muscle for problem decomposition. They can generate 10 solutions in seconds but struggle to evaluate which one is actually correct.

The Creativity Crisis Nobody Saw Coming

Here’s the paradox: AI doesn’t threaten creativity because it thinks too much, but because it lets us think too little. As The Hindu’s analysis argues, we’ve fallen for the fallacy that writing (or coding, or designing) is a product rather than “an existential act of human understanding and interpretative imagination.”

The cult of speed and metric-driven performance has supplanted genuine thought. Students, professionals, and even scholars now outsource cognitive labor not from lack of intelligence, but because slowing down to think feels inefficient when AI can produce something adequate in seconds.

This shows up in scientific research too. Over the last two years, journal submissions have exploded, not from breakthroughs, but because AI can rapidly generate texts that mimic scientific discourse. Reviewers increasingly report “phantom citations” that don’t exist or are misattributed. These papers slip through peer review, enter training data, and pollute the knowledge base for future research.

The result is what researchers call “model collapse”, when AI systems trained on AI-generated content produce outputs that become “unintelligible and meaningless.” It’s cognitive pollution accumulating in our digital ecosystem, just like plastic in the ocean.
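
The feedback loop behind model collapse can be illustrated with a toy simulation. This is only a sketch under simplifying assumptions, not the experiment the researchers ran: the "model" here is a one-dimensional Gaussian, each generation is fitted solely to samples drawn from the previous generation's fit, and the function name and parameters (`simulate_collapse`, `sample_size`, `generations`) are hypothetical.

```python
import random
import statistics


def simulate_collapse(generations=500, sample_size=10, seed=0):
    """Refit a Gaussian to its own samples, generation after generation.

    Returns the fitted standard deviation at every generation,
    starting with the "real data" distribution (mean 0, std 1).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: stands in for human-written data
    spreads = [sigma]
    for _ in range(generations):
        # The next "model" only ever sees output from the previous one.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.stdev(samples)
        spreads.append(sigma)
    return spreads


if __name__ == "__main__":
    spreads = simulate_collapse()
    print(f"initial spread: {spreads[0]:.3f}")
    print(f"final spread:   {spreads[-1]:.3g}")
```

With small samples, estimation error compounds across generations and the fitted spread tends to shrink toward zero, so the distribution gradually forgets the variety in the original data. Real language models are vastly more complex, but the sketch shows why training on your own output is a losing loop.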

The Human-AI Division of Labor That Actually Works

So what’s the solution? Ban AI tools? Good luck with that, and honestly, it’s the wrong approach. AI isn’t the villain; mindless AI use is. The key is designing workflows where AI handles data gathering while humans handle interpretation.

The AI productivity gains we’re celebrating aren’t free. They’re financed at predatory interest rates against a collateral most organizations haven’t bothered to appraise: human cognitive resilience. While teams celebrate shipping code 3x faster, they’re quietly accumulating a cognitive debt that will come due.

Here’s what actually works:

- AI-free zones for strategic thinking: Establish dedicated time and space for original problem-solving without digital assistance. This is where deep mental models are built.

- The “explain it back” rule: If you use AI to generate code or content, you must be able to explain the logic to a colleague without referencing the AI. If you can’t, you haven’t learned it.

- Critical AI literacy as a core skill: The framework from Med Kharbach defines Critical AI Literacy as “the ability to critically analyse and engage with AI systems by understanding their technical foundations, societal implications, and embedded power structures.” This goes way beyond prompt engineering, it’s about understanding whose data trained the model, what perspectives get marginalized, and what environmental costs are hidden in those API calls.

- The THINK-REFINE-DISCLOSE framework: Before touching AI, THINK first, outline your own ideas, struggle with the problem. Then REFINE with AI for clarity and gaps, not generation. Finally, DISCLOSE when required, building transparency and accountability.

Why Your Codebase Is the Canary in the Coal Mine

The cognitive atrophy problem shows up first in your code. AI pair programming is quietly degrading code quality through architectural inconsistency and design drift. When developers accept LLM suggestions without deep understanding, they create what I call “silent code rot”, code that works but doesn’t cohere.

LLM-generated code is architecture’s silent killer. Your PR reviews are failing because the code passes all checks in isolation, but six months later, your architecture looks like a house where every room was built by a different contractor who never saw the blueprint. The mental model of the system becomes fragmented, living partly in the AI’s weights and partly in developers’ heads, but fully in neither.

This creates a cognitive rent crisis where developers spend up to 70% of their time just trying to understand code rather than writing it. The cognitive load of comprehension reduces capacity for deep thinking about design and strategy.

The Burnout Connection Nobody’s Talking About

Here’s the cruel irony: AI was supposed to give us our time back, but it’s eating it from the inside out. The productivity trap is engineering a burnout crisis by intensifying workloads. When AI makes individual tasks faster, organizations don’t reduce scope, they increase it. You’re not working less, you’re producing more, and the cognitive overhead of context-switching between AI suggestions and human judgment is exhausting.

The real threat isn’t AI replacing you. It’s AI making you replaceable by reducing your unique value. When everyone uses the same models, everyone’s output starts to look the same. The differentiator has always been the brains in the room, but if Claude is turning those brains into compost, your company is flushing its competitive advantage down the toilet.

Building Cognitive Immunity in an AI-Drenched World

The solution isn’t less AI, it’s more intentional AI. Here’s your practical playbook:

For Individuals:

– Write your first draft alone, always. Whether code, docs, or designs, start with your own thinking. AI can refine, but it shouldn’t originate.

– Master the art of the good question. The value isn’t in getting answers, it’s in framing problems correctly. This skill actually improves with AI use, if you’re deliberate about it.

– Practice “cognitive cross-training”. Regularly solve problems without AI assistance to maintain your mental muscles. Think of it as going to the gym for your brain.

For Teams:

– Establish AI transparency norms. When AI contributed to work, flag it. Not for shame, but for shared understanding.

– Create “deep work” sprints. Dedicate portions of sprints to solving complex problems without AI assistance. The goal isn’t purity, it’s preserving capacity.

– Designate AI interpreters. Have team members rotate through the role of explaining AI-generated solutions to the group. If they can’t, the solution isn’t ready.
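
One lightweight way to try out the transparency norm above is a commit-message convention. The sketch below assumes a hypothetical `AI-Assisted: yes|no` trailer; that name is invented for illustration and is not a Git or industry standard, and the helper functions are equally hypothetical. It simply reports which commits carry no disclosure at all.

```python
import re
from typing import List, Optional

# Hypothetical trailer convention, e.g. a final line reading "AI-Assisted: yes".
TRAILER = re.compile(r"^AI-Assisted:\s*(yes|no)\s*$", re.IGNORECASE | re.MULTILINE)


def ai_disclosure(commit_message: str) -> Optional[str]:
    """Return 'yes' or 'no' if the message declares AI involvement, else None."""
    match = TRAILER.search(commit_message)
    return match.group(1).lower() if match else None


def undisclosed(messages: List[str]) -> List[str]:
    """Return the subject lines of commits that carry no disclosure trailer."""
    return [
        message.strip().splitlines()[0]
        for message in messages
        if message.strip() and ai_disclosure(message) is None
    ]


if __name__ == "__main__":
    history = [
        "Add retry logic to uploader\n\nAI-Assisted: yes",
        "Refactor config parser",
    ]
    print(undisclosed(history))
```

The point of a check like this is shared understanding rather than policing: a reviewer who knows a change was AI-assisted knows to ask the author to walk through the logic.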

For Organizations:

– Measure what matters. Track not just velocity but also understanding. Do post-mortems on decisions: could the team explain their reasoning without AI?

– Invest in critical AI literacy. Training shouldn’t just cover “how to use the tool” but “how to think about the tool’s thinking.”

– Protect human judgment zones. Strategic planning, architecture decisions, and creative direction need spaces where AI suggestions are intentionally excluded to force original thought.

The cognitive architect role is being reshaped by LLMs, but that doesn’t mean humans are obsolete. It means the human role shifts from generating solutions to evaluating, contextualizing, and transcending them. The architects who thrive will be those who use AI to explore the solution space faster, but rely on their own judgment to select and refine the right path.

The Bottom Line: Use It or Lose It

Your brain is subject to the same principle as your muscles: use it or lose it. AI is the most powerful cognitive tool humanity has created, but it’s also the most powerful cognitive crutch. The difference between enhancement and atrophy lies not in the tool, but in the intentionality of its use.

The organizations that will win in the AI era won’t be those with the most advanced models; they’ll be those that preserve the most robust human thinking alongside them. They’ll understand that AI productivity gains are high-interest loans against expertise, and they’ll pay down that debt through deliberate practice of critical thinking.

Your creativity isn’t fragile. But it does need exercise. So think first, use AI second, and reflect always. Because the goal isn’t perfect output, it’s becoming someone who can think deeply without a machine. And trust me, that skill will outlast every algorithm update.

The question isn’t whether AI is making us dumber. The question is whether we have the discipline to use AI without losing ourselves. Right now, the evidence suggests many of us don’t. The good news? Awareness is the first step toward building the cognitive immunity we need.

References:

Shen, J. H., & Tamkin, A. (2026). How AI Impacts Skill Formation. arXiv preprint arXiv:2601.20245.

Roe, J., Furze, L., & Perkins, M. (2025). Digital plastic: A metaphorical framework for Critical AI Literacy in the multiliteracies era. Pedagogies: An International Journal. Advance online publication.