For years, open-source image generation has been the budget option, the thing you used when you couldn’t afford Midjourney or DALL-E 3. Sure, Stable Diffusion gave us control and customization, but let’s be honest: everyone could spot the “AI look” from a mile away. Plastic skin, melted fingers, text that looked like alphabet soup, and that weird over-smoothness that made everything feel like a wax museum.

Alibaba’s Qwen team just dropped Qwen-Image-2512, and it’s not playing that game anymore. This isn’t an incremental update; it’s a wrecking ball through the quality barrier that has separated open-source from proprietary models.

The “AI Look” Problem Was Real

The August 2025 release of Qwen-Image was solid. It worked. But it had the same tells as every other open-source model: faces lacked pore-level detail, hair merged into helmet-like blobs, and elderly subjects looked like they’d been run through an aggressive beauty filter that eliminated every wrinkle and life line. The model could generate images, but it couldn’t generate believable images.

The gap wasn’t just aesthetic; it was practical. Marketing teams couldn’t use these images for real campaigns. Game developers couldn’t generate convincing concept art. And anyone trying to create educational materials with text in the images? Forget it. The text rendering was about as reliable as a weather forecast from a magic 8-ball.

What Actually Changed in 2512

Qwen-Image-2512 addresses three core failure modes that have plagued open-source models, and the improvements are measurable, not just marketing fluff.

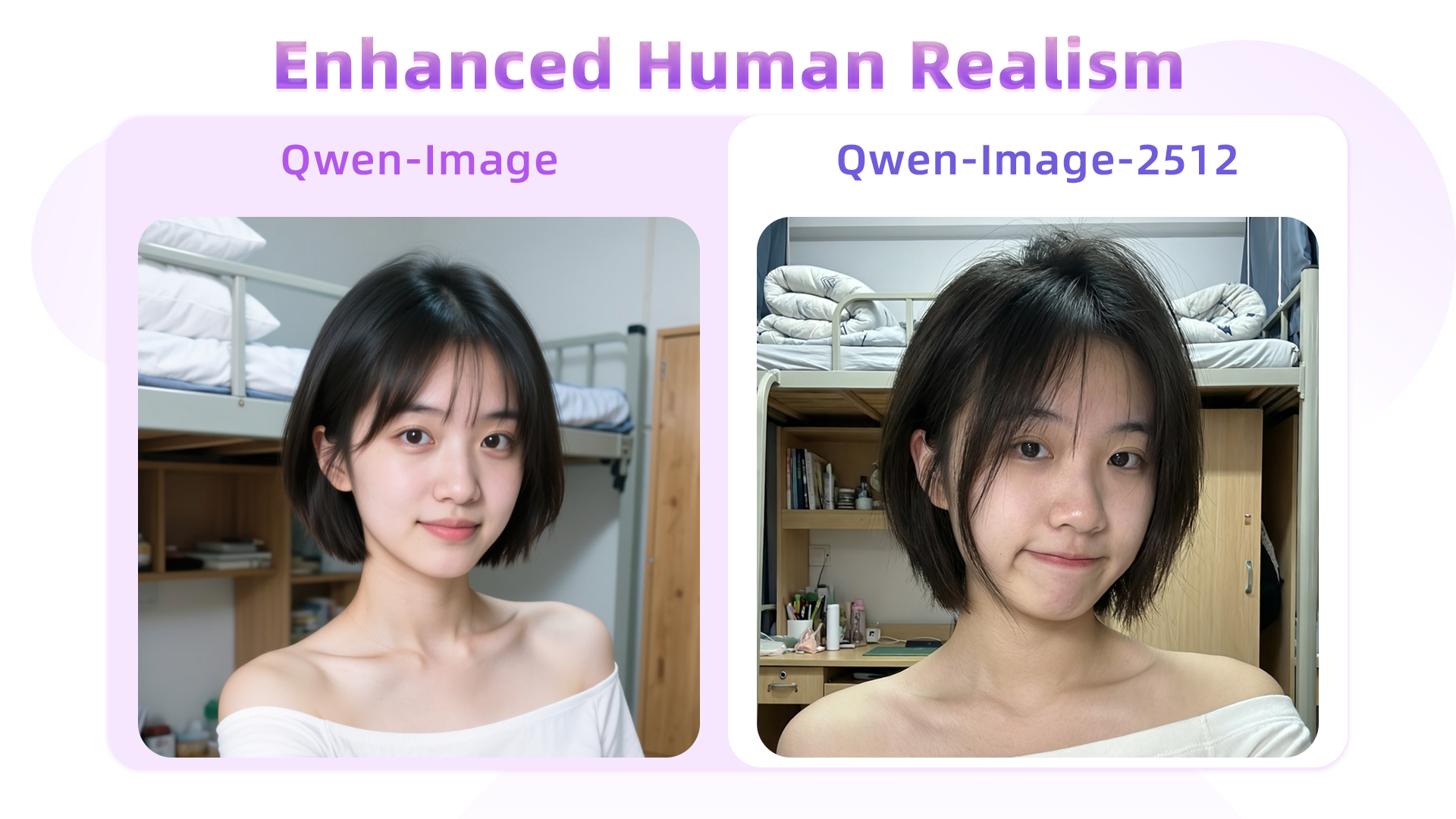

Human Realism That Doesn’t Look Airbrushed Into Oblivion

The model now renders individual hair strands instead of blurry clumps. Skin has actual texture: pores and subtle imperfections, the kind of detail that makes a face look human instead of digital. In side-by-side comparisons using the same prompts, Qwen-Image-2512 produces elderly subjects with properly defined wrinkles and age markers, while the August version generated what can only be described as “uncanny valley grandparents.”

One particularly telling example from the model card shows a Chinese college student in a dorm room. The original model blurred background objects (the desk, stationery, bedding) into mush. The 2512 version keeps every pencil sharpener and notebook line crisp. This isn’t just about faces; it’s about environmental coherence.

Natural Textures That Don’t Look Like Paintings

Landscapes, water, fur, and anything else with fine-grained detail got a massive upgrade. The model card shows a canyon river scene where water flow, foliage gradients, and waterfall mist are rendered with fidelity that rivals commercial models. A golden retriever portrait demonstrates distinct hair strands with natural color transitions from warm gold to cream, complete with specular highlights on a slightly damp nose.

These aren’t subtle tweaks. When you’re generating wildlife for a nature documentary pitch or product visuals for a fur coat, this level of detail moves the image from “obviously AI” to “could be a photograph.”

Text Rendering That Actually Works

Here’s where things get spicy. Qwen-Image-2512 can generate full PowerPoint slides with accurate text layout, timelines with proper alignment, and infographics with readable annotations. One example shows a complex industrial process diagram with Chinese text labels, green checkmarks, and red X marks, all correctly positioned and legible.

This isn’t just “better than before.” This is “actually usable for business materials” territory. The model handles multilingual text, maintains consistent typography, and respects layout constraints. For developers building automated report generators or educational content tools, this is a game-changer.

The Benchmarks: 10,000 Blind Tests Don’t Lie

Qwen’s team ran over 10,000 blind evaluation rounds on AI Arena, pitting Qwen-Image-2512 against both open-source and closed-source competitors. The results, by Qwen’s account: it’s currently the strongest open-source model and remains “highly competitive” with closed systems.

Let’s parse what “highly competitive” actually means. The evaluators were real humans, not automated metrics, comparing anonymized outputs without knowing which model produced which. In practical terms, Qwen-Image-2512’s images held their own against those from models that cost money and run on locked-down APIs. When your open-source model holds up in a blind taste test against paid, closed systems, you’ve achieved something significant.

Running It: From Data Center to Ancient Desktop

Here’s where the open-source ethos really shines. Qwen-Image-2512 is available in multiple forms:

- Full precision: 40.9 GB BF16/F16 versions

- Quantized: GGUF versions ranging from 7.22 GB (Q2_K) to 21.8 GB (Q8_0)

- Turbo variant: 4-step LoRA for faster generation
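The file sizes above also show how aggressively each variant is compressed. A rough sketch, assuming the 40.9 GB BF16 file is essentially all 2-byte weights (about 20.45 billion parameters) and that the sizes are decimal gigabytes; the helper name is illustrative:

```bash
#!/usr/bin/env bash
# Approximate bits per weight for each published variant, from file size alone.
# Assumptions (not from the model card): the BF16 file is ~all 2-byte weights,
# and sizes are decimal GB (1 GB = 1e9 bytes). File metadata is ignored.

bits_per_weight() {
  # $1 = variant size in GB, $2 = BF16 size in GB
  awk -v size="$1" -v bf16="$2" 'BEGIN {
    params = bf16 * 1e9 / 2              # 2 bytes per BF16 weight
    printf "%.2f\n", size * 1e9 * 8 / params
  }'
}

for entry in BF16:40.9 Q2_K:7.22 Q4_K_M:13.1 Q8_0:21.8; do
  name=${entry%%:*}   # text before the colon
  size=${entry##*:}   # text after the colon
  printf '%-7s %6s GB  ~%s bits/weight\n' "$name" "$size" "$(bits_per_weight "$size" 40.9)"
done
```

For the four listed sizes this works out to roughly 16, 2.8, 5.1, and 8.5 bits per weight. Q8_0 landing near 8.5 is a useful sanity check, since that format stores each block of 32 weights as 8-bit integers plus one 16-bit scale. Q2_K and Q4_K_M come out above their nominal bit widths, which is consistent with some tensors being kept at higher precision, though that is an inference from the numbers rather than something the listing states.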

The model is on Hugging Face, ModelScope, and Qwen Chat for immediate testing. But the real story is local deployment.

One developer ran the Q4_K_M quantized version (13.1 GB) on a $100 Dell desktop with an i5-8500, 32GB RAM, and no GPU. It took 55 minutes to generate a 512px image with 20 inference steps. That’s absurdly slow for production use, but it proves a critical point: you don’t need a data center to run this model. The barrier to entry is now “do you have a decent CPU and enough RAM?” rather than “do you have a rack of A100s?”

For those with actual GPUs, ComfyUI integration is ready. The setup process is straightforward:

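```bash
# Create a working directory and an isolated Python environment
mkdir comfy_ggufs
cd comfy_ggufs
python -m venv .venv
source .venv/bin/activate   # Linux/macOS; on Windows use .venv\Scripts\activate

# Install ComfyUI itself
git clone https://github.com/comfyanonymous/ComfyUI.git
cd ComfyUI
pip install -r requirements.txt

# Install the ComfyUI-GGUF custom node, which loads .gguf model files
cd custom_nodes
git clone https://github.com/city96/ComfyUI-GGUF
cd ComfyUI-GGUF
pip install -r requirements.txt
```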

Then download the models:

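```bash
# From ComfyUI/custom_nodes/ComfyUI-GGUF, move back up to ComfyUI/models
cd ../../models

# -L follows Hugging Face's redirects; -C - resumes an interrupted download

# Diffusion model (Q4_K_M quantization)
curl -L -C - -o unet/qwen-image-2512-Q4_K_M.gguf \
  https://huggingface.co/unsloth/Qwen-Image-2512-GGUF/resolve/main/qwen-image-2512-Q4_K_M.gguf

# Text encoder (quantized Qwen2.5-VL-7B-Instruct)
curl -L -C - -o text_encoders/Qwen2.5-VL-7B-Instruct-UD-Q4_K_XL.gguf \
  https://huggingface.co/unsloth/Qwen2.5-VL-7B-Instruct-GGUF/resolve/main/Qwen2.5-VL-7B-Instruct-UD-Q4_K_XL.gguf

# VAE
curl -L -C - -o vae/qwen_image_vae.safetensors \
  https://huggingface.co/Comfy-Org/Qwen-Image_ComfyUI/resolve/main/split_files/vae/qwen_image_vae.safetensors
```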
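A mistyped URL or a failed request can leave an error page saved under the expected filename, and ComfyUI only complains when it tries to load it. A minimal check, assuming the file layout from the curl commands above, looks at each file’s leading bytes: GGUF files begin with the ASCII magic `GGUF`, and safetensors files begin with an 8-byte header length followed by a JSON header starting with `{`. The script and function names are illustrative, not part of ComfyUI or ComfyUI-GGUF.

```bash
#!/usr/bin/env bash
# check_models.sh (illustrative name): verify file signatures of downloaded models.
# Usage: bash check_models.sh path/to/ComfyUI/models

check_gguf() {
  # GGUF files start with the 4-byte ASCII magic "GGUF".
  [ "$(head -c 4 "$1")" = "GGUF" ]
}

check_safetensors() {
  # safetensors: 8-byte little-endian header length, then a JSON header
  # that begins with "{", so byte 9 should be "{".
  [ "$(head -c 9 "$1" | tail -c 1)" = "{" ]
}

verify_models() {
  # Paths are relative to ComfyUI/models and match the curl commands.
  local status=0 f
  for f in unet/qwen-image-2512-Q4_K_M.gguf \
           text_encoders/Qwen2.5-VL-7B-Instruct-UD-Q4_K_XL.gguf; do
    if [ -f "$f" ] && check_gguf "$f"; then
      echo "ok       $f"
    else
      echo "PROBLEM  $f"; status=1
    fi
  done
  f=vae/qwen_image_vae.safetensors
  if [ -f "$f" ] && check_safetensors "$f"; then
    echo "ok       $f"
  else
    echo "PROBLEM  $f"; status=1
  fi
  return "$status"
}

# Only run the check when a models directory is passed on the command line.
if [ "$#" -gt 0 ]; then
  cd "$1" && verify_models
fi
```

This catches files that are not model files at all; it cannot detect a truncated download, for which comparing sizes or SHA-256 hashes against the Hugging Face file listing is the more thorough check.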

Load the workflow JSON in ComfyUI, click Run, and on a modest GPU you’re generating images locally in about a minute.

The Economics: Cloud vs. Living Room

That 55-minute CPU generation time raises an interesting question: is local inference actually economical? Cloud inference is typically billed per image or per second of GPU time, while running a quantized model on a spare desktop costs electricity plus amortized hardware.

For hobbyists and researchers, local deployment means no API rate limits, no data leaving your premises, and no subscription fees. For startups, it means predictable costs instead of surprise bills when a marketing campaign goes viral. The math shifts dramatically when you’re generating thousands of images daily.
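A back-of-the-envelope comparison makes the trade-off concrete. The sketch below uses the $100 price and 55-minute generation time from the CPU experiment; the 65 W wall draw, $0.15/kWh electricity rate, and $0.03-per-image cloud price are placeholder assumptions, not measured or quoted figures, so substitute your own. The function names are illustrative.

```bash
#!/usr/bin/env bash
# Back-of-the-envelope local vs. cloud cost (illustrative helper names).

energy_cost_per_image() {  # $1 = wall draw (W), $2 = minutes per image, $3 = USD per kWh
  awk -v w="$1" -v m="$2" -v p="$3" 'BEGIN { printf "%.4f\n", w * (m / 60) / 1000 * p }'
}

max_images_per_day() {  # $1 = minutes per image, assuming the box generates 24/7
  awk -v m="$1" 'BEGIN { printf "%d\n", int(24 * 60 / m) }'
}

break_even_images() {  # $1 = hardware USD, $2 = cloud USD/image, $3 = local USD/image
  awk -v hw="$1" -v c="$2" -v l="$3" 'BEGIN {
    margin = c - l
    if (margin <= 0) { print "never"; exit }   # local electricity alone costs more
    n = hw / margin
    r = int(n); if (r < n) r++                 # round up to whole images
    print r
  }'
}

# Article figures: $100 desktop, 55 min/image.
# Placeholder assumptions: 65 W draw, $0.15/kWh, $0.03 per cloud image.
local_cost=$(energy_cost_per_image 65 55 0.15)
echo "electricity per image: \$$local_cost"
echo "max images per day:    $(max_images_per_day 55)"
echo "break-even point:      $(break_even_images 100 0.03 "$local_cost") images"
```

With those placeholder inputs, electricity comes to under a cent per image and the desktop pays for itself after roughly 4,700 images, but at no more than 26 images a day that takes about six months. On the CPU box, throughput rather than cost is the binding constraint, which suggests the “thousands of images daily” scenario assumes GPU hardware.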

Text Rendering: The Feature Nobody Talks About

Most image generation demos focus on fantasy art or photorealistic portraits. Qwen-Image-2512’s technical report includes something unusual: complex text layouts. A slide showing Qwen-Image’s development timeline, with Chinese text labels, gradient axes, and connected nodes, all perfectly rendered. An industrial process diagram with chemical equations and status indicators. A 12-panel educational poster about “A Healthy Day” with time stamps and descriptions in Chinese.

This isn’t accidental. The model uses Qwen2.5-VL as its text encoder, which understands language structure better than the CLIP variants most diffusion models rely on. The result is text that respects line breaks, maintains consistent kerning, and doesn’t dissolve into gibberish when you exceed five words.

For developers building:

- Automated report generators

- Educational content platforms

- Marketing collateral tools

- UI/UX mockup generators

…this is the difference between “cool demo” and “ship to production.”

The Fine Print: It’s Not Magic

Before you cancel your Midjourney subscription, some realities:

Speed: Even on a decent GPU, you’re looking at 30-60 seconds per image at 50 steps. The 4-step turbo LoRA exists but trades quality for speed.
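Because diffusion sampling repeats the same denoising step, a rough linear model (fixed overhead plus time per step) is enough to see what the turbo LoRA buys. This sketch assumes the LoRA leaves per-step cost unchanged and treats overhead (text encoding, VAE decoding) as a number you measure yourself; the 5-second overhead below is hypothetical, and the function name is illustrative.

```bash
#!/usr/bin/env bash
# Linear step-count model: total ~ overhead + steps * per-step time.

scale_steps() {  # $1 = measured seconds, $2 = measured steps, $3 = target steps, $4 = fixed overhead seconds (default 0)
  awk -v t="$1" -v s="$2" -v n="$3" -v o="${4:-0}" 'BEGIN {
    per_step = (t - o) / s
    printf "%.1f\n", o + per_step * n
  }'
}

echo "GPU, 30 s @ 50 steps -> 4 steps: $(scale_steps 30 50 4) s"
echo "GPU, 60 s @ 50 steps -> 4 steps: $(scale_steps 60 50 4) s"
echo "GPU, 30 s incl. 5 s overhead -> 4 steps: $(scale_steps 30 50 4 5) s"
echo "CPU, 55 min @ 20 steps -> 4 steps: $(scale_steps 3300 20 4) s"
```

Without overhead, 4 steps lands around 2.4 to 4.8 seconds on the GPU; with the hypothetical 5-second overhead the same run takes about 7 seconds, so fixed costs start to dominate. For the CPU box the estimate is about 11 minutes, assuming the 55-minute figure is almost entirely sampling.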

Memory: The Q4_K_M diffusion weights alone are 13.1 GB, and the text encoder and VAE need memory on top of that. If you’re on an 8GB GPU, you’ll be offloading to system RAM, which hurts performance.
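To see how much has to spill, you can add up the sizes of the files you actually downloaded and compare them with your card’s VRAM. A minimal sketch; it counts weights only, so activations and framework overhead make the real requirement higher, and the function names are illustrative.

```bash
#!/usr/bin/env bash
# Rough VRAM shortfall from on-disk weight sizes (weights only; activations not included).

spill_gb() {  # $1 = total weight bytes, $2 = VRAM in GB
  awk -v b="$1" -v v="$2" 'BEGIN { s = b / 1e9 - v; if (s < 0) s = 0; printf "%.1f\n", s }'
}

total_bytes() {  # sum of the given files' sizes in bytes
  local sum=0 f
  for f in "$@"; do sum=$(( sum + $(wc -c < "$f") )); done
  echo "$sum"
}

# Example, run from ComfyUI/models with an 8 GB card:
#   spill_gb "$(total_bytes unet/*.gguf text_encoders/*.gguf vae/*.safetensors)" 8
```

Counting only the 13.1 GB Q4_K_M diffusion weights, an 8 GB card is already at least 5.1 GB short before the text encoder and VAE are loaded.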

Training Data: Like all models, it reflects biases in its training data. The showcase heavily features East Asian subjects, which likely reflects the training corpus and is worth noting for global applications.

License: Apache 2.0, which is commercially friendly. No corporate approval committee needed.

The Bottom Line: The Barrier Is Gone

Qwen-Image-2512 doesn’t just narrow the gap between open-source and proprietary image generation; it eliminates the quality barrier entirely. The “AI look” that has justified premium pricing for closed models is now a solved problem in the open-source world.

For developers, this means you can build production features with locally hosted models. For artists, you get fine-grained control without quality sacrifices. For researchers, you have a state-of-the-art baseline to build on. And for the broader AI community, it’s proof that open collaboration can match, and sometimes exceed, well-funded corporate labs.

The model is available now. The code is on GitHub. The benchmarks are public. And that $100 Dell desktop is probably collecting dust in someone’s closet, ready to generate its first photorealistic image sometime around lunch.

The only question left: what are you going to build with it?