Alibaba’s Qwen team just dropped Qwen-Image-2.0, and the timing isn’t accidental. While Western AI labs were still digesting their holiday break, this release delivers a unified 7B model that generates and edits images at native 2K resolution with professional-grade text rendering. The kicker? It does this while being small enough to run on consumer hardware, eventually. Right now, it’s API-only, but if history repeats itself, open weights are coming.

The model’s architecture merges an 8B Qwen3-VL encoder with a 7B diffusion decoder, processing prompts up to 1,000 tokens to produce 2048×2048 pixel outputs. This isn’t upscaled resolution, it’s natively generated at 2K, capturing microscopic details like skin pores, fabric weaves, and architectural textures without the artifacts that plague competing systems. For developers who’ve been wrestling with fragmented pipelines, one model for generation, another for editing, a third for upscaling, this unified approach eliminates context switching entirely.

The Text Rendering Problem That Finally Got Solved

Let’s be blunt: most image generators treat text like decorative graffiti. You get warped characters, inconsistent fonts, and layout disasters that would make any designer weep. Qwen-Image-2.0 approaches this differently by integrating textual understanding directly into the generation process rather than slapping letters onto finished images.

The model demonstrates five distinct text rendering capabilities that matter for real-world use:

- Precision (“准”): It handles complex “picture-in-picture” compositions while maintaining visual consistency. In one example, Qwen-Image-2.0 generates a slide showing a puppy with and without a hat, keeping the same canine subject perfectly aligned across both images. This isn’t just cute, it’s the foundation for professional infographic work where visual consistency determines credibility.

- Complexity (“多”): The 1K-token context window allows for absurdly detailed prompts. The Qwen team fed it a 500+ token description for an A/B testing results report, complete with revenue figures, statistical significance markers, and bilingual labels. The output organizes this into a clean three-column layout that would pass muster in most boardrooms.

- Aesthetics (“美”): When generating mixed compositions, the model automatically places text in blank areas to avoid obscuring main subjects. It supports multiple calligraphic styles, from Emperor Huizong’s distinctive “Slender Gold” script to Wang Xizhi’s small regular script, rendering entire classical poems with stroke-level accuracy.

- Realism (“真”): Text appears on diverse surfaces with appropriate material properties. In one test case, the model renders handwritten notes on a glass whiteboard, complete with realistic reflections, perspective distortion, and even the photographer’s faint reflection in the glass surface. The text includes technical specifications like “[8B Qwen3-VL Encoder] → [7B Diffusion Decoder] → pixels (2048×2048)” with proper technical typography.

- Alignment (“齐”): Multi-panel comics with consistent characters, calendars with perfectly gridded dates, and infographics with geometrically precise text blocks all demonstrate the model’s organizational intelligence. A 4×6 comic grid shows dialogue neatly centered in speech bubbles across 24 panels, while maintaining character consistency throughout.

Key Features

- 7B parameter model

- Native 2K resolution

- Unified generation/editing

- Professional text rendering

The “Horse Riding Human” Benchmark and What It Actually Reveals

The Qwen team included a peculiar showcase image: a horse literally riding a human. On Reddit, this sparked immediate debate. Some saw it as a brilliant benchmark, testing whether the model understands abstract concepts like “above” and “below” when trained on a world where humans ride horses, not the reverse. Others noted that AI skeptics like Gary Marcus have long used similar examples to challenge claims of AI “understanding.”

The generated image shows a muscular brown horse standing on a prone man’s back, hooves pressing into his shoulders. The man wears a distressed expression, medieval clothing, and struggles in a push-up position. The model accurately renders the physics: dust particles, shadow casting, and the horse’s weight distribution.

This matters because it exposes a real tension in AI evaluation. Can a model that has never seen “horse rides human” in training data still compose the concept from linguistic understanding? The Qwen team’s decision to highlight this suggests confidence in their model’s compositional abilities, and perhaps a subtle jab at benchmarks that measure memorization rather than reasoning.

Technical Highlights

- 8B Qwen3-VL encoder

- 7B diffusion decoder

- 1,000 token context window

- 2048×2048 pixel outputs

Use Cases

- Infographic creation

- Photorealistic image generation

- Text-heavy visual design

- Multi-panel comic creation

From 20B to 7B: The Efficiency Play That Changes Everything

Previous Qwen-Image iterations required 20B parameters. Version 2.0 slashes this to 7B while improving capabilities. This isn’t just a technical achievement, it’s a strategic one. A 7B model becomes viable for local deployment on high-end consumer GPUs, something the community has been desperate for.

The Reddit discussion reveals palpable excitement about this size reduction. One commenter notes: “If/when weights drop, this should be very runnable on consumer hardware. V1 at 20B was already popular in ComfyUI, a 7B version doing more with less is exactly what local community needs.”

This efficiency gain stems from architectural improvements rather than brute-force scaling. The unified generation-and-editing pipeline means the model learns shared representations, reducing redundant parameters. Faster inference times, generating 2K images in seconds, make it practical for iterative design workflows where speed determines whether you keep experimenting or give up and open Canva.

Performance Metrics

- 2048×2048 resolution

- 1,000 token context

- 7B parameter count

- 2-3 second generation time

Community Reactions

- “Great for local deployment”

- “More capable than expected”

- “Ready for ComfyUI integration”

- “Excited for open weights”

API-Only Now, Open Weights Soon: The Waiting Game

Here’s where the community’s patience gets tested. Qwen-Image-2.0 is currently available only through Alibaba Cloud’s API and a free demo on Qwen Chat. No open weights yet. But precedent matters: the original Qwen-Image dropped its weights about a month after launch under Apache 2.0 license.

The expectation of open release creates a unique dynamic. Developers are already planning integrations, with one noting the model should be “very runnable on consumer hardware” once weights arrive. This anticipation-building strategy, release API first, let the community test and build hype, then open-source, contrasts sharply with Western labs that either go fully closed or release immediately.

Release Strategy

- API-first launch

- Community testing phase

- Open weights expected in 1 month

- Apache 2.0 licensing

Development Philosophy

- Open-source commitment

- Community-driven development

- Strategic API rollout

- Focus on accessibility

Benchmark Reality Check: Does It Actually Deliver?

On Alibaba’s AI Arena platform, Qwen-Image-2.0 topped the ELO leaderboard for text-to-image generation in blind human evaluations. Judges compared images head-to-head without knowing which model produced what, and the 7B unified model outperformed larger, specialized competitors.

The editing benchmarks show similar strength. As a unified model handling both generation and manipulation, it maintains performance across tasks where fragmented pipelines typically falter. This validates the architectural bet that merging understanding and generation paths creates a more robust system.

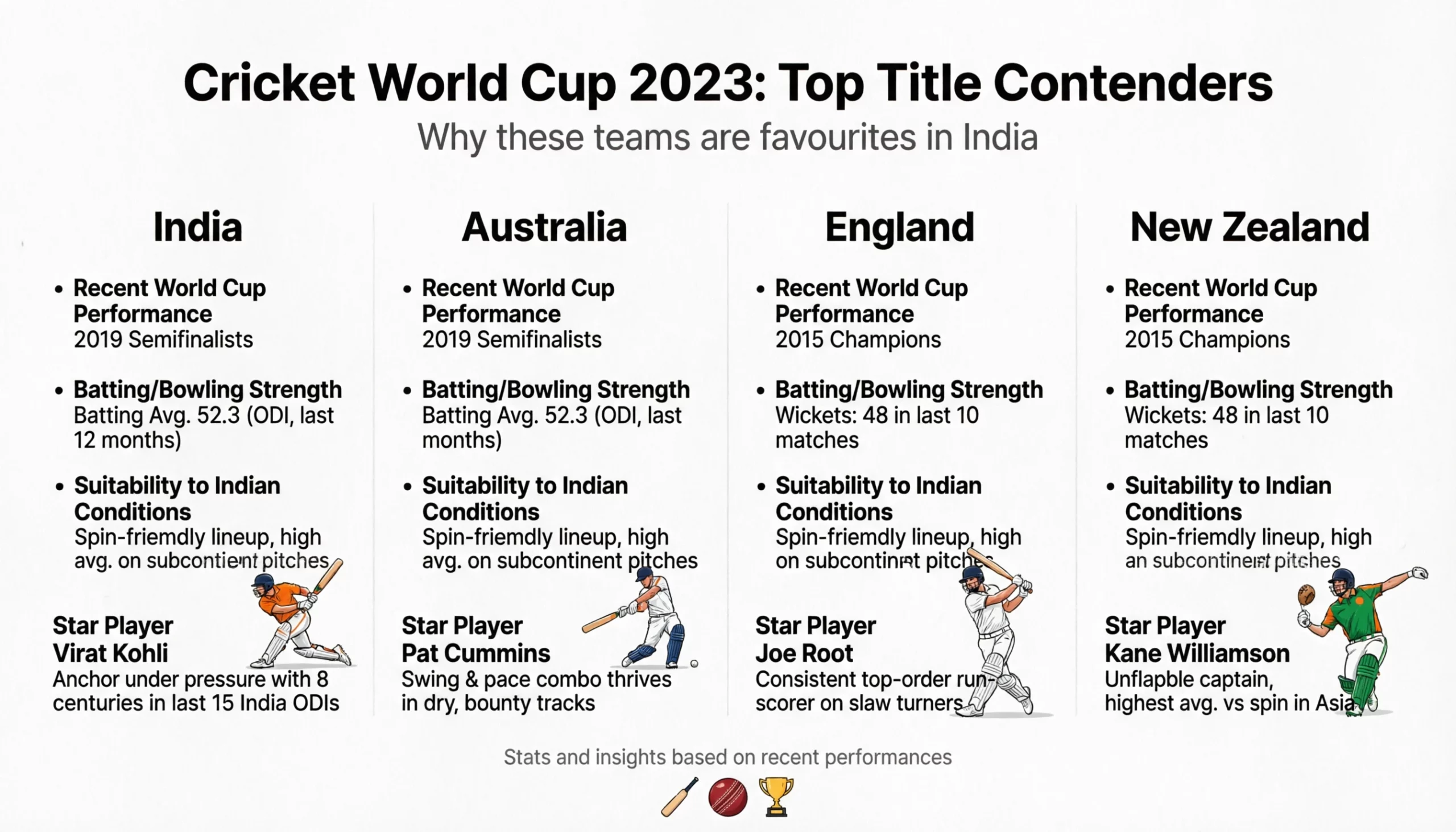

Hands-on testing from third-party sources confirms these claims. One evaluation generated a cricket World Cup infographic, macro skin photography, and an oil painting landscape. The results showed professional composition and photorealistic detail, though with occasional quirks (one cricketer inexplicably held a tennis racket). The reviewer concluded: “These are some of the best images I have ever seen an AI model produce.”

Performance Benchmarks

- Top ELO ranking

- Outperforms larger models

- Strong editing capabilities

- High-quality outputs

User Feedback

- “Best AI images I’ve seen”

- “Professional-level quality”

- “Occasional quirks”

- “Highly recommend”

Why This Matters for the AI Landscape

Qwen-Image-2.0 arrives during what Chinese media calls the “AI red packet battle”, a Spring Festival competition where tech giants deploy AI products to capture mainstream users. While ByteDance’s DouBao and Tencent’s Yuanbao focus on chatbots and red packet gimmicks, Qwen targets professional creators who need actual utility.

The release also highlights a growing divergence in AI development philosophy. Western labs increasingly gate their best models behind APIs and premium tiers. Alibaba, despite starting with API-only, has consistently followed through with open weights that democratize access. This creates a two-tier ecosystem: immediate access for cloud customers, eventual local deployment for the community.

For developers building multimodal applications, this unified approach simplifies architecture dramatically. Instead of chaining separate models for generation, editing, and upscaling, a single API call handles the entire workflow. The Qwen3-VL’s multimodal capabilities and architectural innovations that power the encoder demonstrate how vision-language understanding can directly enhance generation quality.

Industry Impact

- Competes with Western models

- Targets professional creators

- Democratizes AI access

- Encourages open-source development

Development Philosophy

- Open weights commitment

- API-first strategy

- Focus on accessibility

- Community engagement

The Path Forward: Integration and Deployment

If you’re planning to build with Qwen-Image-2.0, several practical considerations emerge. First, prompt engineering requires specificity. Concrete nouns, spatial prepositions, and quantitative descriptors (“four-panel comic grid”) produce better results than vague adjectives. For photorealism, include camera specifications and lighting details. For infographics, specify layout structures and color coding.

Second, the editing capabilities shine when you maintain session context. Upload reference images and chain modifications conversationally: “inscribe this poem in the sky”, then “make the text larger”, then “adjust kerning.” The unified architecture preserves consistency across these steps.

Third, plan for the eventual open release. The Qwen-Image-2512’s breakthrough in realistic open-source image generation set a high bar, but the 7B size of v2.0 makes it even more accessible. Start prototyping with the API now, and you’ll be ready to deploy locally when weights drop.

The model’s efficiency also raises questions about the future of AI scaling laws. If a 7B model can outperform 20B+ alternatives through better architecture, the race shifts from parameter count to design ingenuity. This could accelerate a trend toward smaller, more deployable models that respect user privacy and reduce computational costs.

Integration Tips

- Use specific prompts

- Include technical details

- Chain editing steps

- Plan for open weights

Future Implications

- Smaller models with better architecture

- Shift in AI development focus

- Increased privacy and efficiency

- More accessible AI tools

Conclusion: A Model That Earns Its Hype

Qwen-Image-2.0 delivers on its core promises: professional typography, native 2K resolution, unified generation and editing, and practical efficiency. The “horse riding human” benchmark, while quirky, demonstrates genuine compositional understanding rather than dataset memorization. The shift from 20B to 7B parameters makes advanced AI image generation accessible beyond cloud giants.

For developers, designers, and researchers, the key question isn’t whether Qwen-Image-2.0 is good, it’s whether you build around the API now or wait for open weights. Given Alibaba’s track record, waiting seems low-risk. But the API’s current capabilities are strong enough that early integration offers immediate competitive advantage.

The model’s success also suggests that multimodal AI’s future lies in unified architectures that merge understanding and generation. As Qwen3-VL-32B’s performance leap in local vision-language modeling has shown, smaller models can exceed expectations when architecture prioritizes efficiency over brute scale. Qwen-Image-2.0 extends this principle to the creative domain, where speed, consistency, and local deployment matter as much as raw capability.

In a landscape flooded with incremental updates, this release stands out. It challenges assumptions about model size, benchmarks real understanding, and maintains Alibaba’s commitment to eventual open-sourcing. For anyone building with AI-generated visuals, Qwen-Image-2.0 isn’t just another option, it’s a glimpse at where the entire field is heading.