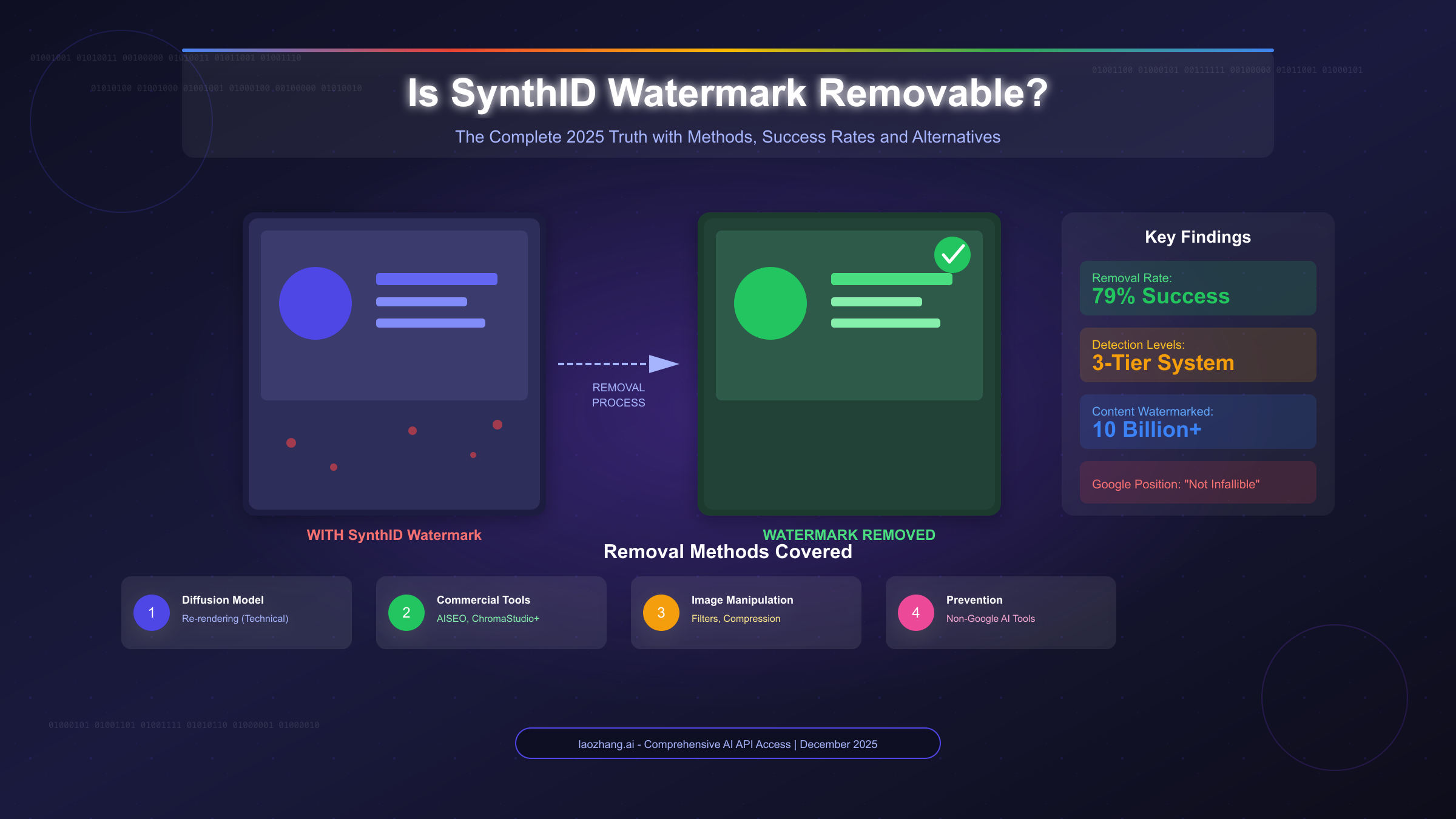

The premise was elegant: embed an invisible fingerprint into every AI-generated image, creating an unbreakable chain of custody from model to masterpiece. Google DeepMind’s SynthID promised exactly this, watermarking over 10 billion pieces of content across images, video, audio, and text. The technology was supposed to be the foundation of trust in an era of synthetic media, robust enough to survive cropping, compression, and format conversion. Instead, it took researchers less than a year to reduce this digital fortress to rubble.

A recently published proof-of-concept demonstrates that reprocessing SynthID-watermarked images through diffusion models removes the watermark with alarming efficiency, achieving a 79% success rate in controlled tests. The technique doesn’t require nation-state resources or insider knowledge, just a moderately powerful GPU, open-source software, and a technical understanding of how diffusion models actually work.

The Tournament Sampling House of Cards

To understand why SynthID collapses so easily, you need to grasp how it works in the first all. Unlike visible watermarks that sit on top of an image, SynthID embeds its signal during generation through a mechanism called tournament sampling. At each diffusion step, the model subtly biases pixel selections based on a private key, weaving an invisible pattern into the image’s statistical DNA. The watermark and visual content aren’t separate layers, they’re fused at the molecular level.

This approach was supposed to make removal mathematically infeasible. The problem? Diffusion models are designed to be robust to noise. It’s their entire reason for existence. They learn to separate signal from corruption, structure from chaos. When you feed a SynthID-watermarked image back into a diffusion pipeline, the model doesn’t see a sacred fingerprint, it sees just another pattern of noise to be cleaned.

The attack leverages this fundamental tension. By using the original image as a structural guide with ControlNets while applying low-denoise regeneration (denoise values around 0.2), the process preserves semantic content while replacing the low-level pixel noise that carries the watermark. Multiple sequential passes through KSamplers iteratively scrub the signal, like polishing a surface until the original scratches disappear.

The ComfyUI Workflow That Breaks a Billion-Dollar System

The practical implementation is almost insultingly straightforward. The Synthid-Bypass repository provides ready-to-use ComfyUI workflows that turn this theoretical vulnerability into a point-and-click operation. The general-purpose workflow chains together:

- Canny Edge Detection to create a structural outline

- ControlNet guidance to preserve composition

- Three sequential KSamplers with denoise values of 0.2

- FaceDetailer with YOLO-based face detection for portrait preservation

For images with human subjects, a specialized portrait workflow adds face-aware masking and targeted inpainting, isolating facial regions for higher-resolution processing before stitching them back into the main image. The result? Detection confidence drops from 90%+ ("Detected") to below 50% ("Not Detected") while visual quality remains largely intact.

Detection tools confirm the watermark’s presence before processing…

…and its complete absence after diffusion-based removal.

The entire pipeline requires a GPU with 16GB+ VRAM and moderate familiarity with ComfyUI, hardly a barrier for determined actors. The repository has already accumulated 162 stars and 27 forks, suggesting rapid community adoption.

The 79% Success Rate Reality Check

IEEE Spectrum research using the UnMarker tool found that diffusion re-rendering defeats 79% of SynthID watermarks, though Google disputes this figure. The company’s own documentation acknowledges that SynthID is "designed to be robust but not tamper-proof", which reads as corporate-speak for "we knew it was breakable."

The 21% failure rate isn’t necessarily a testament to SynthID’s strength. It reflects the practical limitations of current removal techniques: very high-resolution images require downscaling, aggressive processing can introduce visual artifacts, and fine details sometimes get lost in the denoising process. The watermark isn’t surviving because it’s strong, it’s surviving because the removal process is being careful not to destroy the image itself.

This distinction matters. In cybersecurity terms, SynthID isn’t a hardened encryption scheme, it’s security through obscurity. The moment someone bothered to look closely enough, the illusion shattered.

Visualizing the Invisible

Perhaps most damning is how easily researchers can see the watermark once they know what to look for. By asking Nano Banana Pro to regenerate a blank black image and then cranking exposure while crushing contrast, the supposedly invisible pattern emerges as clear as day. The watermark appears as subtle noise, different each time, confirming its non-deterministic nature.

This visualization reveals the core problem: SynthID is just sophisticated noise. And diffusion models are noise-removal machines. It’s like trying to waterproof a boat with paper towels, the fundamental materials are wrong for the job.

The Legal and Ethical Minefield

The timing of this research coincides with new legal frameworks attempting to criminalize watermark removal. The US COPIED Act of 2024 makes it illegal to remove AI watermarks with intent to deceive, targeting electoral manipulation and fraud. The EU AI Act imposes transparency requirements, while platform terms of service from Meta, Google, and X already prohibit identifier removal.

But the law runs into a practical wall: you can’t regulate mathematics. Diffusion models will continue to separate signal from noise, regardless of what Congress writes into the Federal Register. The research community is already treating SynthID bypass as legitimate AI safety work, with repositories explicitly framing their work as "responsible disclosure" to improve detection systems.

The ethical framework becomes murky. Security researchers studying vulnerabilities? Legitimate. Artists maintaining creative control over their AI-assisted work? Defensible. Bad actors creating deceptive content? Clearly harmful. But the tool doesn’t know the difference, it’s just math.

The Prevention vs. Removal Calculus

The most reliable way to get watermark-free AI images isn’t removal, it’s prevention. Using non-Google models like FLUX, Stable Diffusion, or Midjourney provides a 100% "success rate" because there’s no watermark to remove. This creates a perverse incentive: the only users who need removal tools are those locked into Google’s ecosystem, while everyone else generates clean images from the start.

For developers building AI applications, integrating removal workflows is complexity for complexity’s sake. Accessing watermark-free models through APIs like laozhang.ai or running models locally eliminates the entire problem. The effort spent on bypassing SynthID is better spent on choosing the right tool initially.

The Future of an Arms Race

This isn’t the end of the story, it’s the opening salvo. Google will iterate on SynthID, making future versions more resistant to diffusion-based attacks. Industry standardization efforts like C2PA may create compatible watermarking systems across providers. Regulatory pressure will likely mandate AI content identification, narrowing exceptions for watermark-free generation.

But the fundamental dynamic favors attackers. Each new watermarking scheme must survive in a world where diffusion models grow more powerful and removal techniques more refined. The equilibrium point isn’t watermarking victory, it’s a continuous cat-and-mouse game where detection confidence gradually erodes over time.

For now, the practical implication is clear: SynthID cannot be trusted as a standalone provenance solution. It works against casual modification and provides a baseline signal for content moderation, but determined actors can bypass it with publicly available tools and modest technical skill. Any system built on the assumption of SynthID’s inviolability is built on sand.

The mirage of invisible, unbreakable watermarking has been dispelled. What remains is the hard work of building robust, multi-layered provenance systems that acknowledge their own limitations, a task that requires honesty about technical capabilities, not marketing promises.

The bottom line for practitioners: If you’re building workflows that depend on watermark-free AI content, choose models that don’t apply SynthID from the start. If you’re relying on SynthID for security, implement additional verification layers. And if you’re a researcher, the tools are now available to test these boundaries yourself, because the best way to build robust defenses is to understand exactly how they fail.