For six years, the AI community has operated under a single ironclad assumption: large language models generate text token by token, left to right, period. Autoregressive generation wasn’t just the default; it was the only game in town. Now Tencent has dropped a model that treats that assumption like a suggestion. WeDLM-8B-Instruct uses diffusion-based generation to achieve 3-6× faster inference than vLLM-optimized Qwen3-8B on math reasoning tasks, and it’s forcing a long-overdue conversation about whether we’ve been optimizing the wrong architecture all along.

The Model That Shouldn’t Work (But Does)

WeDLM-8B-Instruct is Tencent’s instruction-tuned diffusion language model, and the numbers are borderline implausible. On GSM8K math reasoning, it runs 3-6× faster than Qwen3-8B-Instruct even when Qwen3 is running under vLLM, the gold standard for autoregressive inference optimization. That’s not a marginal improvement; that’s the kind of speedup that makes engineering managers recalculate their GPU budgets.

The model maintains a 32,768-token context length and stays compatible with existing infrastructure through native KV cache support, FlashAttention, PagedAttention, and CUDA Graphs. In other words, it’s not asking you to burn your inference stack and start over. It’s offering a drop-in architectural alternative that happens to be dramatically faster.

Here’s what makes researchers uncomfortable: WeDLM didn’t require training from scratch. Tencent converted Qwen3-8B-Base into a diffusion model, which means the technique could theoretically apply to any existing autoregressive model. The developer community has already noted that both 7B and 8B versions exist, converted from Qwen2.5-7B and Qwen3-8B respectively, which shows that the conversion process works across model families.

How Diffusion for Language Actually Works

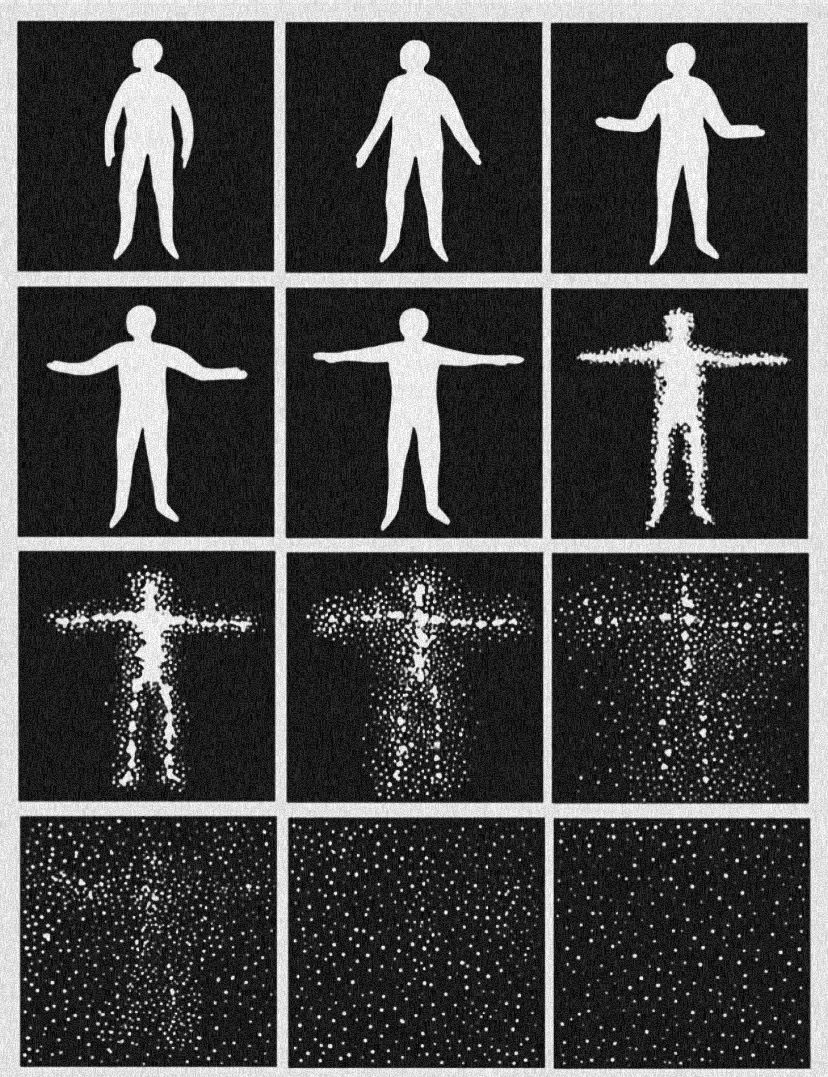

The term “diffusion language model” triggers skepticism for good reason. Diffusion models dominate image generation because they can refine an entire image in parallel denoising steps. Language is sequential by nature: words depend on previous words in ways that pixels don’t. So how does WeDLM pull this off?

Instead of generating token by token in strict left-to-right order, WeDLM refines entire sequences (or chunks) over multiple denoising steps. It starts from a noisy sequence (in discrete diffusion language models, typically one with masked-out positions) and iteratively denoises it into coherent text. The key insight is that while language has sequential dependencies, many of them can be resolved in parallel during early refinement stages; the final polishing steps handle the remaining sequential constraints.
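To make the refinement loop concrete, here is a toy sketch of confidence-based parallel unmasking, the decoding style used by masked diffusion language models in general. It is not WeDLM’s actual algorithm: the `toy_predict` function, its per-token priors, and the 0.85 commit threshold are invented for illustration and stand in for one parallel forward pass of a real model.

```python
from typing import Dict, List, Optional, Tuple

MASK = None  # marks a position that has not been decoded yet

# Hypothetical target output and per-token "predictability" priors.
# Operators and punctuation are easy to guess; numbers are hard.
TARGET = "2 + 2 = 4 , so the answer is 4".split()
PRIOR = {"+": 0.9, "=": 0.9, ",": 0.9, "2": 0.5, "4": 0.5}
WORD_PRIOR = 0.7


def toy_predict(seq: List[Optional[str]]) -> Dict[int, Tuple[str, float]]:
    """Stand-in for one parallel forward pass: guess every masked position at
    once. Confidence rises by 0.2 for each already-decoded neighbor."""
    guesses = {}
    for i, tok in enumerate(seq):
        if tok is MASK:
            known = sum(1 for j in (i - 1, i + 1)
                        if 0 <= j < len(seq) and seq[j] is not MASK)
            conf = PRIOR.get(TARGET[i], WORD_PRIOR) + 0.2 * known
            guesses[i] = (TARGET[i], conf)
    return guesses


def diffusion_decode(length: int, threshold: float = 0.85) -> Tuple[List[str], int]:
    """Start fully masked; each step commits every guess whose confidence clears
    `threshold` (or, failing that, the single most confident guess)."""
    seq: List[Optional[str]] = [MASK] * length
    steps = 0
    while any(tok is MASK for tok in seq):
        steps += 1
        guesses = toy_predict(seq)
        commit = {i: tok for i, (tok, conf) in guesses.items() if conf >= threshold}
        if not commit:  # guarantee progress so the loop always terminates
            best = max(guesses, key=lambda i: guesses[i][1])
            commit = {best: guesses[best][0]}
        for i, tok in commit.items():
            seq[i] = tok
    return [tok for tok in seq if tok is not MASK], steps


tokens, steps = diffusion_decode(len(TARGET))
print(" ".join(tokens), "|", steps, "refinement steps for", len(TARGET), "tokens")
```

A left-to-right decoder needs 11 sequential passes for these 11 tokens; the toy loop needs 7, because the easy structural tokens are committed together in the first pass and the harder positions resolve once their neighbors are known. The threshold is the knob: lowering it commits more tokens per pass at the risk of locking in wrong guesses.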

This approach trades sequential steps for parallel computation, which is exactly what modern GPUs excel at. On structured, predictable outputs like math reasoning or code generation, the model can aggressively parallelize the generation process. For open-ended creative writing, where entropy is higher, the speedup narrows to 1.5-2×, but it never disappears entirely.
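The trade can be put in back-of-envelope form. In the sketch below, which is a simplified model rather than a description of WeDLM’s scheduler, an autoregressive decoder pays one forward pass per token, while a parallel refiner that commits `tokens_per_step` tokens per pass needs fewer passes, each of which may cost somewhat more (`step_cost_ratio`). The inputs in the usage lines are illustrative assumptions, not measured figures.

```python
import math


def estimated_speedup(num_tokens: int, tokens_per_step: float,
                      step_cost_ratio: float = 1.0) -> float:
    """Rough speedup of parallel refinement over token-by-token decoding.

    num_tokens:      length of the generated output
    tokens_per_step: average tokens committed per refinement pass
    step_cost_ratio: cost of one refinement pass relative to one AR pass
    """
    if tokens_per_step <= 0 or step_cost_ratio <= 0:
        raise ValueError("tokens_per_step and step_cost_ratio must be positive")
    ar_passes = num_tokens  # one forward pass per generated token
    refine_passes = math.ceil(num_tokens / tokens_per_step)
    return ar_passes / (refine_passes * step_cost_ratio)


# Illustrative inputs only: low-entropy output lets more tokens commit per pass.
print(f"structured: {estimated_speedup(512, 4.0, 1.2):.2f}x")
print(f"open-ended: {estimated_speedup(512, 2.0, 1.2):.2f}x")
```

The model makes the task dependence explicit: the speedup is governed by how many tokens the refiner can safely commit per pass, which is higher for predictable output such as math and code than for open-ended prose.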

The Benchmark Data That Matters

Let’s cut through the marketing and look at the actual numbers from Tencent’s Hugging Face page:

| Benchmark | Qwen3-8B-Instruct | WeDLM-8B-Instruct |
|---|---|---|
| ARC-C (0-shot) | 91.47 | 92.92 |
| GSM8K (3-shot) | 89.91 | 92.27 |
| MATH (4-shot) | 69.60 | 64.80 |
| HumanEval (4-shot) | 71.95 | 80.49 |
| MMLU (5-shot) | 71.52 | 75.14 |
| GPQA-Diamond (5-shot) | 41.41 | 44.95 |
| Average | 75.12 | 77.53 |

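The model beats Qwen3 on five of the six benchmarks, with a particularly strong jump on HumanEval (code generation, +8.54 points). MATH is the sole exception: WeDLM trails by 4.8 points, which is interesting because math reasoning is where the speedup is most dramatic (the 3-6× figure is measured on GSM8K). This suggests a tradeoff: the diffusion process accelerates structured reasoning but may sacrifice some precision on the hardest competition-style problems. Note also that the Average row does not equal the mean of the six rows shown, so it presumably covers additional benchmarks from Tencent’s full evaluation.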
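The per-benchmark deltas, the win count, and the plain mean of the six listed rows can be recomputed straight from the table; the scores below are copied from it and nothing else is assumed.

```python
# (Qwen3-8B-Instruct, WeDLM-8B-Instruct) scores, copied from the table.
scores = {
    "ARC-C": (91.47, 92.92),
    "GSM8K": (89.91, 92.27),
    "MATH": (69.60, 64.80),
    "HumanEval": (71.95, 80.49),
    "MMLU": (71.52, 75.14),
    "GPQA-Diamond": (41.41, 44.95),
}

wins = [name for name, (qwen, wedlm) in scores.items() if wedlm > qwen]
qwen_mean = sum(q for q, _ in scores.values()) / len(scores)
wedlm_mean = sum(w for _, w in scores.values()) / len(scores)

for name, (q, w) in scores.items():
    print(f"{name:<13} {w - q:+6.2f}")  # positive means WeDLM is ahead
print(f"wins: {len(wins)}/{len(scores)}")
print(f"mean of listed rows: {qwen_mean:.2f} vs {wedlm_mean:.2f}")
```

The listed rows average about 72.6 (Qwen3) and 75.1 (WeDLM), a gap of roughly 2.5 points, close to the 2.4-point gap in the reported Average row.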

The inference speed table tells the real story:

| Scenario | Speedup | Notes |
|---|---|---|
| Math Reasoning (GSM8K) | 3-6× | Structured, predictable output |
| Code Generation | 2-3× | Deterministic syntax |
| Open-ended QA | 1.5-2× | Higher entropy limits parallelism |

The speedup is task-dependent, not constant. For math (and, to a lesser degree, code), where outputs follow strict structural patterns, the model can run up to six times faster. For creative or analytical writing it’s still faster, but the advantage shrinks. This isn’t a bug; it’s a fundamental characteristic of diffusion-based generation.

Why This Breaks the Optimization Narrative

For years, the industry has poured resources into optimizing autoregressive inference. We’ve developed vLLM, TensorRT-LLM, custom CUDA kernels, speculative decoding, and quantization schemes, all to squeeze more tokens per second from fundamentally sequential generation. We’ve been polishing a bicycle when we could have been building a motorcycle.

WeDLM suggests that architectural innovation might deliver larger gains than incremental optimization. The model achieves its speedup not through better kernels or smarter scheduling, but by rethinking the generation process itself. It parallelizes what was previously serial.

This has immediate practical implications. A 3-6× speedup means:

– Cost reduction: Serve the same traffic with roughly one-third to one-sixth of the GPU infrastructure, provided the speedup holds at production batch sizes

– Latency improvement: Interactive applications feel dramatically more responsive

– Batch efficiency: Process more requests concurrently without increasing hardware
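For the cost line, the arithmetic is simple division, shown here for a hypothetical fleet of 24 GPUs. It assumes throughput scales linearly with the measured speedup and that the speedup survives at production batch sizes; both are assumptions to verify, since per-request speedups measured at low batch sizes do not always carry over to saturated servers.

```python
import math


def gpus_needed(current_gpus: int, speedup: float) -> int:
    """GPUs required to serve unchanged traffic if per-GPU throughput rises by
    `speedup`, rounded up to whole devices."""
    return math.ceil(current_gpus / speedup)


fleet = 24  # hypothetical current deployment
for s in (1.5, 2.0, 3.0, 6.0):
    print(f"{s:.1f}x speedup -> {gpus_needed(fleet, s)} GPUs (down from {fleet})")
```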

The Apache 2.0 license makes this accessible to everyone, not just Tencent’s internal teams. The developer community has already recognized the potential, with discussions focusing on the 7-8B parameter space as particularly promising for practical deployment.

The Catch (There’s Always a Catch)

Before you throw out your Llama 3 deployment, consider the limitations. The MATH benchmark underperformance is real: diffusion models might struggle with problems requiring extremely precise, multi-step logical deduction where each step depends critically on the exact previous step. The parallel refinement process could introduce subtle reasoning errors that sequential generation avoids.

The 1.5-2× speedup on open-ended QA, while still impressive, shows diminishing returns as tasks become less structured. If your workload is primarily creative writing or open-ended analysis, the benefits shrink. And while KV cache compatibility is maintained, the diffusion process itself requires multiple denoising steps, which could increase memory pressure in certain scenarios.

The model also represents a paradigm shift in how we think about generation quality. With autoregressive models, we can inspect partial generations as they appear. With diffusion models, the output emerges from iterative refinement, making intermediate states less interpretable. This could complicate debugging and monitoring in production systems.

The Broader Context: LLaDA2.0 and the 100B Question

Tencent isn’t operating in a vacuum. The recent LLaDA2.0 paper demonstrates scaling diffusion language models to 100B parameters through systematic conversion from autoregressive models. Its three-phase training scheme (progressive block diffusion, then full-sequence diffusion, then compact block diffusion) provides a roadmap for converting frontier models.
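The three phases can be pictured as a schedule over block size, the number of tokens denoised together. The sketch below is a hypothetical illustration of that progression, not LLaDA2.0’s published configuration: the doubling warmup, the 4,096-token sequence length, the final block of 32, and the step counts are all assumed values. A block size of 1 corresponds to ordinary left-to-right decoding, which is the intuition for starting there when converting an autoregressive checkpoint.

```python
import math


def block_size_at(step: int, warmup_steps: int, stable_steps: int,
                  seq_len: int = 4096, start_block: int = 1,
                  final_block: int = 32) -> int:
    """Hypothetical block-size schedule for AR-to-diffusion conversion.

    Phase 1, progressive block diffusion: the block size doubles from
        start_block up to seq_len over warmup_steps.
    Phase 2, full-sequence diffusion: the whole sequence is one block.
    Phase 3, compact block diffusion: fall back to a small final_block.
    Assumes seq_len / start_block is a power of two.
    """
    if step < warmup_steps:
        doublings = int(math.log2(seq_len // start_block))
        # Split the warmup evenly across the (doublings + 1) block sizes.
        stage = step * (doublings + 1) // warmup_steps
        return min(start_block * 2 ** stage, seq_len)
    if step < warmup_steps + stable_steps:
        return seq_len
    return final_block


for step in (0, 500, 1299, 1500, 2400):
    print(step, block_size_at(step, warmup_steps=1300, stable_steps=1000))
```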

This suggests WeDLM-8B is a proof-of-concept, not a one-off experiment. If the technique scales to 100B+ parameter models, we could see diffusion-based versions of GPT-4, Claude, and Gemini. The implications for inference economics would be staggering.

The paper’s approach of “knowledge inheritance” (preserving the original model’s capabilities during conversion) is crucial. It means we don’t need to retrain from scratch, avoiding the massive compute costs that have made previous architecture experiments prohibitively expensive.

What Happens Next

The AI community now faces a choice. Do we double down on optimizing autoregressive models, squeezing out another 10-20% improvement with increasingly complex engineering? Or do we fundamentally rethink the generation paradigm?

WeDLM makes a compelling case for the latter, but adoption won’t be immediate. The ecosystem around autoregressive models is mature: tooling, best practices, debugging techniques, and mental models are all built around token-by-token generation. Diffusion models require new thinking about prompt engineering, sampling parameters, and quality control.

For math-heavy and structured workloads (code generation, scientific computing, structured data extraction), the case for diffusion models is already strong. Speedups of 2-6× with maintained or improved quality on most benchmarks are too large to ignore. For general chat and creative applications, the decision is less clear-cut.

The real test will be whether other labs replicate and extend Tencent’s results. If Google, OpenAI, or Anthropic release diffusion-based models in 2025, we’ll know the paradigm shift is underway. If WeDLM remains an isolated data point, it may be remembered as a clever but ultimately niche experiment.

The Bottom Line

Tencent’s WeDLM-8B-Instruct isn’t just another model release; it’s a direct challenge to six years of architectural orthodoxy. By demonstrating that diffusion-based generation can outrun a highly optimized autoregressive model while matching or beating it on most quality benchmarks, it opens a door that many thought was locked.

The 3-6× speedup on math reasoning tasks is the headline, but the deeper story is about architectural innovation versus incremental optimization. We’ve spent years making bicycles faster. WeDLM suggests it might be time to build motorcycles.

For practitioners, the immediate action is clear: benchmark WeDLM on your specific workload. The speedup varies dramatically by task type, and the MATH benchmark underperformance is a reminder that no architecture is universally superior. But if your use case involves structured generation (code, math, data extraction), the performance gains are too significant to dismiss.
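A minimal harness for that comparison might look like the sketch below. It assumes you wrap each model’s inference call in a function with the hypothetical signature `generate(prompt) -> list of token IDs`; it does not use any WeDLM- or vLLM-specific API, and the `fake_*` functions are placeholders for real model calls. It measures one-request-at-a-time throughput, so rerun it at your real concurrency, and score the outputs with your own quality checks, since speed alone says nothing about the MATH-style regressions discussed above.

```python
import statistics
import time
from typing import Callable, List, Sequence

# Hypothetical wrapper type: prompt in, generated token IDs out.
GenerateFn = Callable[[str], List[int]]


def tokens_per_second(generate: GenerateFn, prompts: Sequence[str],
                      warmup: int = 1) -> float:
    """Median per-request decode throughput over `prompts`."""
    for prompt in prompts[:warmup]:
        generate(prompt)  # discarded: lets caches and compiled graphs warm up
    rates = []
    for prompt in prompts:
        start = time.perf_counter()
        tokens = generate(prompt)
        elapsed = max(time.perf_counter() - start, 1e-9)  # avoid divide-by-zero
        rates.append(len(tokens) / elapsed)
    return statistics.median(rates)


def speedup(baseline: GenerateFn, candidate: GenerateFn,
            prompts: Sequence[str]) -> float:
    """Throughput of `candidate` relative to `baseline` on the same prompts."""
    return tokens_per_second(candidate, prompts) / tokens_per_second(baseline, prompts)


# Placeholder models: replace with calls into your real inference stacks.
def fake_baseline(prompt: str) -> List[int]:
    time.sleep(0.05)  # stands in for a slower model
    return list(range(50))


def fake_candidate(prompt: str) -> List[int]:
    time.sleep(0.01)  # stands in for a faster model
    return list(range(50))


prompts = ["What is 17 * 23?", "Write a haiku about GPUs.", "Sort [3, 1, 2]."]
print(f"speedup: {speedup(fake_baseline, fake_candidate, prompts):.1f}x")
```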

The token-by-token generation monopoly is officially over. What comes next depends on whether the community embraces diffusion or retreats to the familiar comfort of autoregressive models. Either way, the conversation has changed, and that’s progress.