While Silicon Valley labs burn cash chasing trillion-parameter models, Tencent-backed Qwen just dropped a pair of tools that expose the emperor’s lack of clothes. Qwen3-Coder-Next packs 80 billion parameters into a sparse MoE architecture that only activates 3 billion per token, yet scores 70.6 on SWE-Bench Verified, nose-to-nose with DeepSeek-V3.2’s 671B-parameter monster. Even more telling: the community-built Qwen3-TTS Studio proves this isn’t a one-off model release, but a deliberate ecosystem play designed to run entirely on your hardware, no API credits required.

The 3B Parameter Model That Punches at 671B

The numbers from Qwen’s technical report read like a typo. On SWE-Bench Verified using the SWE-Agent scaffold, Qwen3-Coder-Next scores 70.6. DeepSeek-V3.2, with 671B total parameters, manages 70.2. GLM-4.7, another Chinese lab’s heavyweight at 358B parameters, edges ahead at 74.2. The gap is negligible, but the resource requirements are anything but.

Qwen3-Coder-Next’s secret sauce is its hybrid architecture that reads like a greatest-hits album of recent AI research:

# Model Overview from Qwen's technical specs

{

"total_parameters": "80B",

"active_parameters_per_token": "3B",

"architecture": "Hybrid MoE",

"layers": 48,

"hidden_dimension": 2048,

"context_length": 262144,

"layout": "12 × (3 × (Gated DeltaNet → MoE) → 1 × (Gated Attention → MoE))"

}

The Gated DeltaNet layers use linear attention with 32 heads for values and 16 for queries/keys, while Gated Attention layers deploy 16 query heads and 2 KV heads. The MoE component spreads knowledge across 512 experts, activating only 10 plus 1 shared expert per token. This design gives the model the capacity of a dense 80B model while keeping inference costs pegged to a 3B model’s footprint.

For developers who’ve watched their cloud bills spiral as agentic coding tools chain-call models, this math matters. As one Hacker News commenter calculated: "With Claude Sonnet at $3/$15 per 1M tokens, a typical agent loop with ~2K input tokens and ~500 output per call, 5 LLM calls per task, and 20% retry overhead… you’re looking at roughly $0.05-0.10 per agent task. At 1K tasks/day that’s ~$1.5K-3K/month." Local inference at 30-40 tokens per second on consumer hardware flips that equation to $0 marginal cost.

From Model to Ecosystem: Qwen3-TTS Studio Shows the Real Strategy

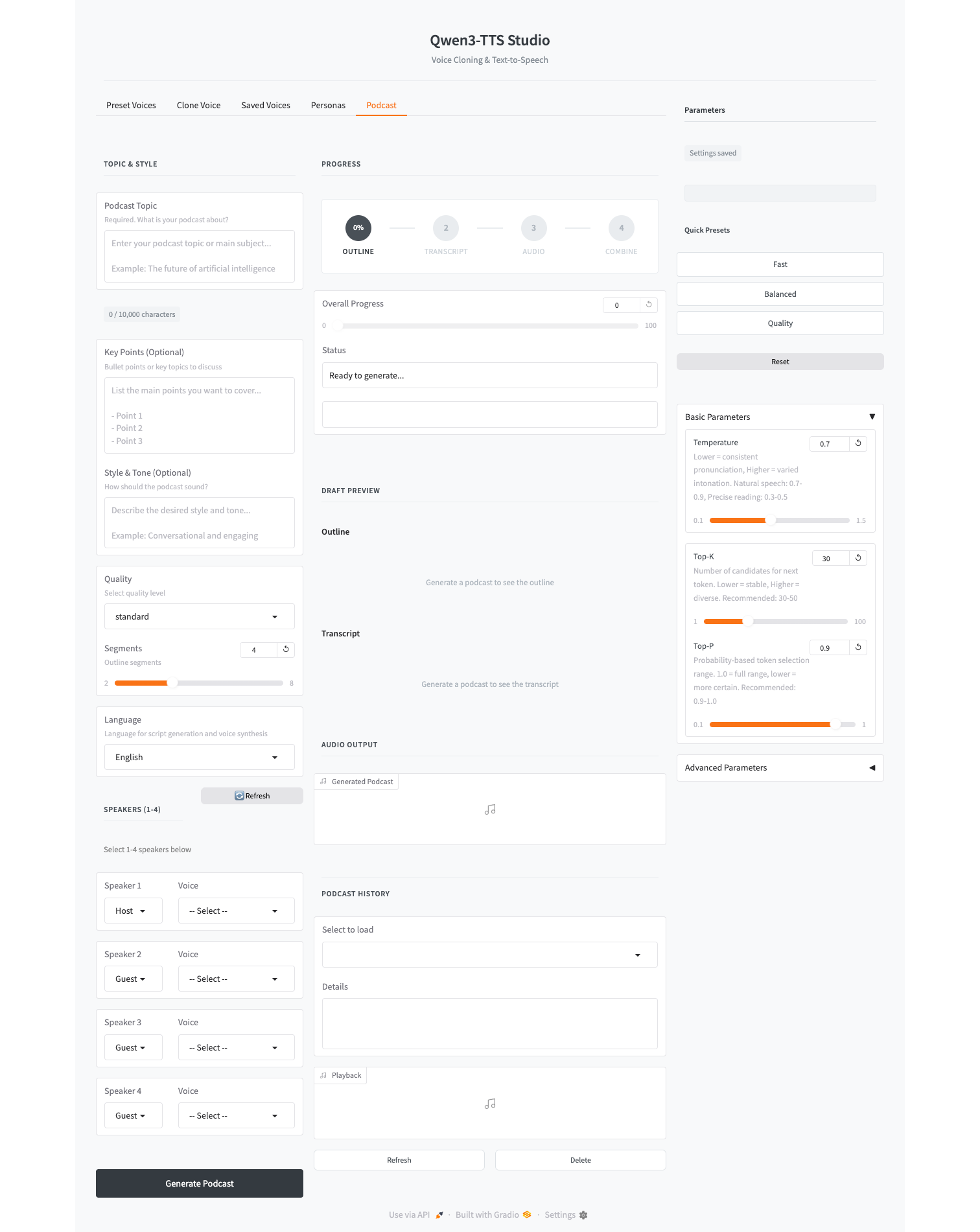

If Qwen3-Coder-Next were just another Hugging Face release, it would be impressive but not revolutionary. What signals Tencent’s true intentions is Qwen3-TTS Studio, a community-built interface that wraps Qwen3-TTS into a polished, ElevenLabs-competing tool that runs entirely local.

The tool’s capabilities read like a wishlist for content creators and developers:

- Voice cloning from 3-second samples in 10 languages (Korean, English, Chinese, Japanese, German, French, Russian, Portuguese, Spanish, Italian)

- Fine-grained parameter control (temperature, top-k, top-p) with quality presets

- Automated podcast generation from a single topic prompt

- Modular LLM integration, swap in any local model for script generation

The architecture is deliberately modular. While the default uses GPT-5.2 for script generation, the system accepts any OpenAI-compatible API endpoint, including local models running via Ollama or LM Studio. This isn’t accidental, it’s a direct challenge to the integrated, locked-down approach of commercial voice AI services.

As the project’s GitHub documentation notes: "The TTS runs entirely local on your machine (macOS MPS / Linux CUDA). No API calls for voice synthesis = unlimited generations, zero cost." The required models total under 15GB: 4.2GB for the base 1.7B model, 4.2GB for CustomVoice, and smaller tokenizer files.

The Deployment Reality: From RTX 3090 to MacBook Air

Benchmarks are meaningless if you need a data center to run the model. Qwen3-Coder-Next’s ecosystem includes aggressive quantization efforts that make it accessible to mere mortals.

Unsloth’s Daniel Han released GGUF quantizations within hours of the model dropping, offering variants from Q4_K_M (48GB RAM needed) down to IQ4_XS that can run on a 64GB MacBook with memory to spare. His benchmarks show the model maintaining reasoning capabilities even at 4-bit precision:

# Running the UD-Q4_K_XL variant on llama.cpp

./llama-server \

--model Qwen3-Coder-Next-UD-Q4_K_XL.gguf \

--ctx-size 32768 \

--n-gpu-layers 999 \

--temp 1.0 --top-p 0.95 --top-k 40 \

--jinja

Performance varies by hardware, but the community reports are telling:

– RTX 4090 + 128GB RAM: 35-40 tokens/sec at Q4_K_XL

– M3 Max MacBook (64GB): 20-30 tokens/sec with MLX, though KV cache issues can cause slowdowns

– Strix Halo (AMD 9955HX3D, 64GB): ~21 tokens/sec on Q4 quant

The quantization story gets technical fast. Unsloth’s "UD" (Unsloth-Dynamic) variants upcast critical layers to higher bit depths, preserving accuracy where it matters while compressing less-sensitive weights. As Daniel Han explains: "UD-Q4_K_XL (Unsloth Dynamic 4bits Extra Large) is what I generally recommend for most hardware – MXFP4_MOE is also ok." The approach yields perplexity scores close to BF16 while slashing memory requirements.

The Community Effect: Why This Isn’t Just Another Model Drop

What makes this release cycle different is the immediate ecosystem response. Within 9 hours of Qwen3-Coder-Next hitting Hugging Face, the community had:

- Working GGUF conversions for multiple quant levels

- Integration guides for Claude Code and Codex CLI

- Benchmark comparisons against established models

- Bug reports and fixes for llama.cpp compatibility

- Voice cloning UIs with podcast automation features

This velocity matters. As one Reddit commenter noted: "Goddamn that was fast" when Unsloth’s quantizations appeared. Another pointed out: "The community is what keeps us going!"

The open-source nature enables customization that commercial APIs can’t match. Developers are already experimenting with hybrid workflows: using Qwen3-Coder-Next for code generation while routing planning and architecture tasks to cloud models, or chaining multiple local models for specialized tasks.

The Elephant in the Room: China’s AI Strategy

Let’s address what everyone’s thinking. Qwen is backed by Alibaba Cloud and ultimately beholden to Chinese regulators. The models carry the same content restrictions as other Chinese AI systems. But the technical contributions are undeniable, and the Apache 2.0 license means the weights are free for commercial use, fine-tuning, and redistribution.

This creates a fascinating dynamic. While US firms build moats around their best models, Chinese labs are giving away state-of-the-art architectures that run locally. The geopolitical implications are secondary to the immediate practical value: developers worldwide gain access to powerful AI that doesn’t phone home to San Francisco or require a corporate credit card.

As one Hacker News commenter observed: "Chinese labs are acting as a disruption against Altman etc’s attempt to create big tech monopolies, and that’s why some of us cheer for them." Another noted: "They can only raise prices as long as people buy their subscriptions / pay for their api. The Chinese labs are closing in on the SOTA models (I would say they are already there) and offer insane cheap prices for their subscriptions."

Practical Implementation: Getting Started Today

For developers ready to test these claims, here’s the minimal viable setup:

For Qwen3-Coder-Next:

# Install latest llama.cpp

git clone https://github.com/ggerganov/llama.cpp

cd llama.cpp && make -j

# Download UD-Q4_K_XL quantization

wget https://huggingface.co/unsloth/Qwen3-Coder-Next-GGUF/resolve/main/Qwen3-Coder-Next-UD-Q4_K_XL.gguf

# Start server with OpenAI-compatible API

./llama-server \

--model Qwen3-Coder-Next-UD-Q4_K_XL.gguf \

--ctx-size 32768 \

--host 0.0.0.0 --port 8000 \

--jinja --temp 1.0 --top-p 0.95 --top-k 40

For Qwen3-TTS Studio:

# Clone and setup

git clone https://github.com/bc-dunia/qwen3-TTS-studio

cd qwen3-TTS-studio

conda create -n qwen3-tts python=3.12

conda activate qwen3-tts

# Install dependencies

pip install -U qwen-tts gradio soundfile numpy moviepy openai

# Download models

huggingface-cli download Qwen/Qwen3-TTS-12Hz-1.7B-Base --local-dir ./Qwen3-TTS-12Hz-1.7B-Base

# Run

python qwen_tts_ui.py

The TTS Studio’s podcast feature is particularly impressive. Feed it a topic like "the history of quantum computing" and it generates a complete script, assigns distinct voices to multiple speakers, synthesizes the audio, and outputs a ready-to-publish file. The quality won’t replace NPR, but for content marketing, educational materials, or prototyping, it’s shockingly capable.

The Road Ahead: Ecosystem vs. Monolith

Qwen’s strategy becomes clear when you connect the dots. Release powerful base models under permissive licenses. Support immediate community quantization and tooling. Enable modular integration where users can swap components. The goal isn’t to sell API access, it’s to make the entire AI stack accessible, customizable, and independent of any single vendor.

This contrasts sharply with the integrated approach of OpenAI’s GPTs or Anthropic’s Claude. Those systems optimize for convenience and capability at the cost of lock-in. Qwen’s ecosystem trades some polish for freedom: freedom from usage limits, from vendor dependence, from surveillance pricing models that charge based on how much they think you’re willing to pay.

The llama.cpp integration enabling local deployment of Qwen3 models exemplifies this philosophy. Rather than forcing developers into a managed service, Qwen meets them where they are: on their own hardware, with their own tools, under their own control.

Performance Reality Check: Where the Rubber Meets the Road

Benchmarks lie, but production workloads don’t. Early community testing reveals the practical tradeoffs:

Strengths:

– Excellent at code completion and generation within its context window

– Strong tool-use capabilities for agentic workflows

– Competitive with Sonnet 4.5 on isolated coding tasks

– Maintains coherence across 64K+ token contexts

Limitations:

– Quantization below Q4_K_M noticeably degrades reasoning

– MLX implementation on Apple Silicon has KV cache issues that hurt performance

– Not a drop-in replacement for frontier models on complex multi-step tasks

– Requires careful prompt engineering to avoid thinking loops

One developer reported: "I’m getting similar numbers on NVIDIA Spark around 25-30 tokens/sec output, 251 token/sec prompt processing… but I’m running with the Q4_K_XL quant. I’ll try the Q8 next, but that would leave less room for context."

The consensus seems to be that Qwen3-Coder-Next lands somewhere between Sonnet 3.7 and Sonnet 4.0 in capability, "good enough" for many tasks, especially when the alternative is a cloud API bill that scales with usage.

The Bottom Line: A Tipping Point for Local AI

Qwen3-Coder-Next and Qwen3-TTS Studio aren’t just new models. They’re proof that the AI development paradigm is shifting. The question is no longer "which API key gives me the best model?" but "can I run a model good enough on my hardware?"

For startups, this changes the math. A one-time $3,000 GPU investment versus recurring API costs that scale with success. For enterprises, it offers data sovereignty and predictable costs. For individual developers, it means unlimited experimentation without usage anxiety.

The Qwen3-TTS low-latency claims that sparked community debates show the ecosystem is still maturing. But the trajectory is clear: open weights, local deployment, and community innovation are creating a viable alternative to the cloud AI oligopoly.

Whether this challenges the dominance of US tech giants or simply creates a parallel track depends on execution. But one thing is certain: developers now have a real choice. And choice, more than any benchmark, is what drives innovation.

The AI race isn’t over. But for the first time, it feels like we’re running on our own hardware.