NVIDIA’s RTX PRO 6000 Blackwell Server Edition isn’t just another GPU launch, it’s a quiet revolution that rips up the multi-GPU playbook. For years, NVLink has been the sacred cow of GPU-to-GPU communication, the proprietary interconnect that let NVIDIA build tightly-coupled AI training monsters. Now, the RTX PRO 6000 line axes NVLink entirely and replaces it with garden-variety 400G networking baked directly onto each card. This isn’t evolution, it’s architectural heresy that could either democratize AI infrastructure or introduce latency landmines.

The End of an Era: NVLink Gets the Axe

NVLink has been NVIDIA’s not-so-secret weapon since 2016. It delivered 900GB/s of bidirectional bandwidth between GPUs, an order of magnitude faster than PCIe and purpose-built for all-to-all communication that AI training craves. The GB200 Grace Blackwell Superchip doubled down on this, pairing two B200 GPUs with a Grace CPU via NVLink to create a coherent memory monster.

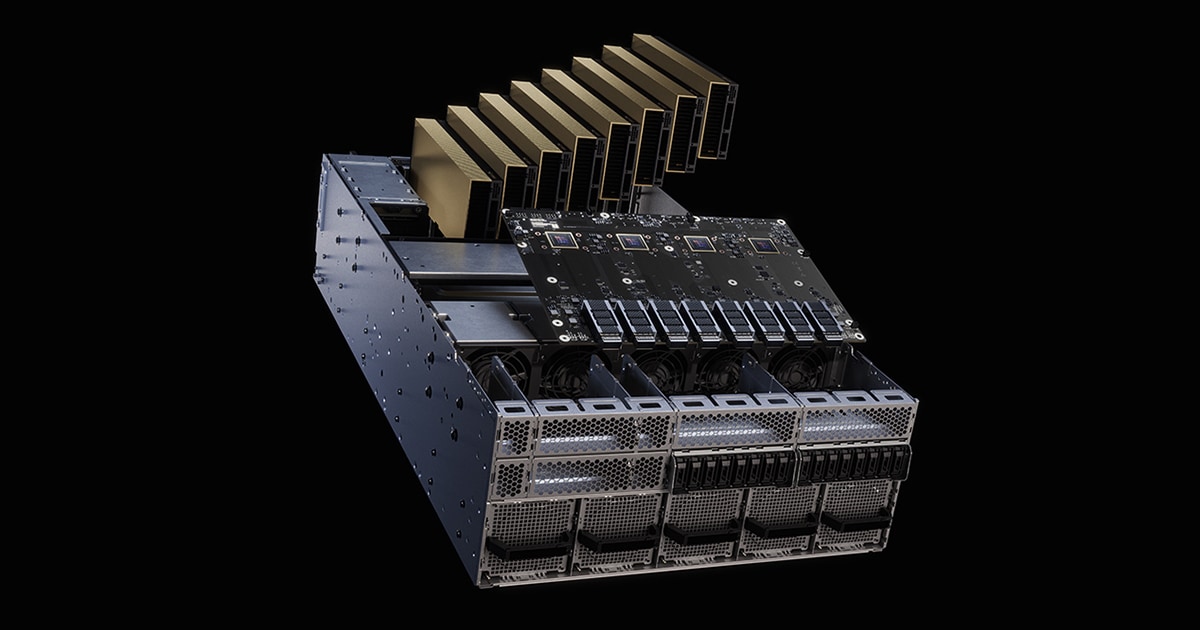

But the RTX PRO 6000 Server Edition takes a different path. Instead of proprietary interconnects, each GPU card integrates a NVIDIA ConnectX-8 1-port 400G QSFP112 network adapter directly on the board. No NVLink. No NVSwitch trays. Just high-speed Ethernet or InfiniBand, same as your storage and compute traffic.

This is a philosophical pivot. NVIDIA is essentially saying: “Your network fabric is your interconnect.” For data centers already building leaf-spine architectures with Quantum-X800 InfiniBand or Spectrum-4 Ethernet, this removes a layer of complexity. But it also means GPU-to-GPU traffic now competes with everything else on the wire.

What the RTX PRO 6000 Actually Does

The practical implementation looks like this: a single 4U server chassis packs eight RTX PRO 6000 Blackwell Server Edition GPUs, each with its own 400G port. The Reddit-sourced spec sheet reads like a power engineer’s fever dream:

- 8x Nvidia RTX PRO 6000 Blackwell Server Edition GPU

- 8x Nvidia ConnectX-8 1-port 400G QSFP112 (one per GPU)

- 1x Nvidia Bluefield-3 2-port 200G (optional management/offload)

- 2x Intel Xeon 6500/6700 CPUs

- 32x 6400 RDIMM or 8000 MRDIMM

- 6000W TDP total

- 4x High-efficiency 3200W PSU

- 70 kg (150 lbs) of copper, silicon, and hubris

The math is stark: eight GPUs × 400Gbps = 3.2Tbps of raw networking capacity per server. For comparison, a DGX B300 node with NVLink delivers 900GB/s (yes, bytes) of GPU-to-GPU bandwidth, roughly 7.2Tbps. The 400G approach provides less than half the theoretical bandwidth, and that’s before protocol overhead and contention.

The Tradeoff Game: Bandwidth vs. Latency

Here’s where it gets spicy. NVLink’s dominance wasn’t just about bandwidth, it was about latency and coherence. NVLink allows GPUs to address each other’s memory directly, enabling efficient model parallelism where layers are split across devices. A 400G network, even with RDMA and GPUDirect, introduces microsecond-scale latency where NVLink operates in nanoseconds.

But, and this is crucial, modern AI workloads are changing. Foundation model training relies on massive tensor parallelism that benefits from NVLink’s all-to-all nature. However, inference serving, fine-tuning, and emerging mixture-of-experts (MoE) architectures are more tolerant of network latency. They favor bandwidth flexibility over strict coherence.

The B300 GPU itself acknowledges this shift. Compared to the B200, it packs 288GB of HBM3e (up from 192GB) and 800Gbps networking via ConnectX-8 (doubling the B200’s 400Gbps). NVIDIA’s own specs show the B300 is “best suited for training large language models” with “higher throughput”, suggesting they’re targeting the networking approach for specific scalability advantages, not just cost-cutting.

Server Design Gets Rewired

Building an RTX PRO 6000 server isn’t just about slapping GPUs in PCIe slots. The entire system topology changes:

- No NVSwitch complexity: Gone are the NVSwitch trays that consumed 500W+ and added cost. Each GPU is an independent network endpoint.

- Switch becomes the backplane: Your leaf switches aren’t just for data, they’re the interconnect. A 64-port 400G switch can theoretically connect 64 GPUs in a single hop.

- Cabling explosion: Eight GPUs per server means eight DAC/AOC cables per node. A 16-node rack needs 128 cables just for GPU interconnect. Cable management becomes critical infrastructure.

- Power and cooling: That 6000W TDP is real. The Supermicro approach uses DLC-2 direct liquid cooling to capture up to 98% of heat, enabling 45°C warm water operation and eliminating chillers. Without this, you’re melting racks.

The Switch is the Thing

The RTX PRO 6000’s success hinges entirely on your network fabric. NVIDIA isn’t subtle about this, the recommended topology uses Quantum-X800 InfiniBand or Spectrum-4 Ethernet switches with specific multi-plane designs.

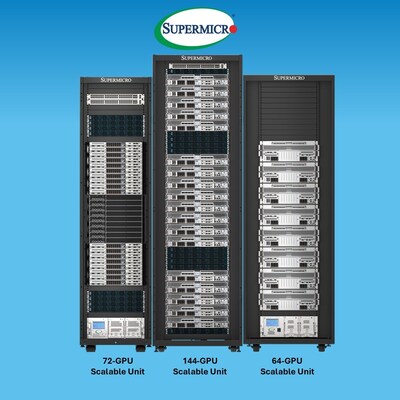

For large clusters, the B300 SuperPod architecture reveals the strategy: each 800G ConnectX-8 is split into two independent 400G network planes. This creates redundancy and doubles effective bandwidth for all-to-all communication. A single SU (scalable unit) contains 64 nodes with 512 GPUs, connected via 128 leaf switches, 64 per plane.

This dual-plane approach provides:

– Fault tolerance: One plane fails, training continues at half bandwidth

– Lower effective latency: Traffic can route across less-congested paths

– Massive scale: 16 SUs support 8,192 GPUs without network bottlenecks

But it also means your network budget just became your interconnect budget. A single 64-port 400G switch runs $30K-$50K. For a 512-GPU deployment, you’re looking at 128 leaf switches plus spine layers, easily a multi-million dollar networking bill that traditionally was baked into NVLink’s premium pricing.

When This Makes Sense (And When It Doesn’t)

This architecture shines when:

– You’re already building a high-speed fabric for storage and compute. The incremental cost of adding GPU traffic is marginal.

– Inference serving at scale: Independent GPUs with network isolation provide better QoS for multi-tenant serving.

– Heterogeneous workloads: Mixing training, fine-tuning, and inference on the same cluster becomes easier with standard networking.

– Avoiding vendor lock-in: 400G Ethernet is multi-vendor, NVLink is NVIDIA-only.

It struggles when:

– Pure training performance matters: GB300 NVL72 with NVLink still delivers 30P FP4 compute vs 13.5P on B300, and the memory coherence is unmatched.

– Latency-sensitive applications: Recommendation systems, real-time RL, and certain scientific computing workloads suffer from network jitter.

– Small clusters: The overhead of high-end switches doesn’t pay off until you reach dozens of nodes.

The Hidden Costs of “Just Add Networking”

The Reddit post’s “not much can go wrong” optimism masks serious practical challenges:

Cabling complexity: Eight cables per server × 16 servers per rack = 128 cables. At 3 meters each, that’s 384 meters of twinax or fiber per rack. DAC cables are thick and heavy, AOCs are expensive. Cable management becomes a full-time job.

Power distribution: 6000W per node means 96kW per rack. At 208V, that’s 462 amps, beyond most data center power feeds. You need specialized power infrastructure or lower density.

Switch port consumption: Each GPU consumes a switch port. A traditional 8-GPU NVLink server uses zero switch ports for GPU traffic. The RTX PRO 6000 approach consumes eight ports immediately. Your switch port count just became your scaling limiter.

Software stack: You’ll need robust NCCL (NVIDIA Collective Communications Library) configurations that understand the network topology, plus potentially custom routing to avoid congestion. The “it just works” factor is lower than NVLink’s transparent memory model.

Is This Really the Future?

NVIDIA’s move isn’t random, it’s a calculated bet on three trends:

-

Network technology maturity: 400G/800G switches from NVIDIA (and competitors like Arista, Cisco) now deliver the bandwidth and latency that previously required proprietary silicon.

-

Workload diversification: The AI market is shifting from pure training to inference-heavy, fine-tuning, and serving workloads where network flexibility trumps raw GPU-to-GPU bandwidth.

-

Economic pressure: NVLink adds significant cost and complexity. For enterprises not building exascale supercomputers, standard networking is cheaper and more familiar.

The RTX PRO 6000 isn’t replacing NVLink, it’s segmenting the market. GB300 Grace Blackwell with NVL72 remains the pinnacle for training massive models. The RTX PRO 6000 with 400G networking is the pragmatic choice for organizations building AI infrastructure that must serve multiple use cases and integrate with existing data center fabrics.

The real innovation isn’t the GPU, it’s the admission that sometimes, the best interconnect is the one you already have. For AI architects, this means your network design skills are now as critical as your GPU selection. The switch isn’t just a peripheral, it’s the heart of the system.

Bottom line: If you’re building a greenfield AI cluster for diverse workloads and already investing in high-speed networking, the RTX PRO 6000’s 400G approach could save millions in proprietary interconnect costs. But if pure training performance is your north star, NVLink remains king. The future isn’t one or the other, it’s a bifurcated world where you choose your interconnect based on workload, not dogma.