Lyft processes hundreds of millions of machine learning predictions daily, optimizing dispatch routes, setting dynamic pricing, and flagging fraud in real time. Behind the scenes, thousands of training jobs run every day, serving hundreds of data scientists and ML engineers. For years, all of this ran on Kubernetes. Then Lyft deliberately tore its platform in half.

The company’s ML infrastructure team moved offline training workloads to AWS SageMaker while keeping real-time inference on Kubernetes. This wasn’t a migration story. It was an architectural admission that one size doesn’t fit all, and sometimes the best way to fix a platform is to stop pretending it should be unified.

The Kubernetes Breaking Point

Lyft’s original platform, LyftLearn, was a Kubernetes-native system. In theory, this provided consistency: same orchestration layer, same tooling, same operational model for both training and serving. In practice, scale revealed a different reality.

Every new ML capability required custom orchestration logic. Synchronizing Kubernetes container states with the platform’s metadata database demanded multiple background watcher services just to handle out-of-order events and status transitions. The team spent engineering cycles managing cluster autoscaling quirks and wrestling with eventually-consistent state management. What started as a streamlined architecture became a maintenance burden that consumed capacity better spent on actual ML features.

The operational complexity grew linearly with scale, until it didn’t. The overhead of maintaining a unified system began to drag down the team’s ability to deliver platform improvements.

The Split Decision: SageMaker for Training, Kubernetes for Serving

Lyft’s new architecture makes a clean separation: LyftLearn Compute (training and batch processing) runs on SageMaker, LyftLearn Serving (real-time inference) stays on Kubernetes. The decision follows a simple heuristic: use managed services where operational complexity is highest, keep custom infrastructure where control matters most.

For offline workloads, SageMaker’s managed infrastructure eliminated the watcher services entirely. AWS EventBridge and SQS replaced the custom event-handling architecture with robust, managed event-driven state management. On-demand provisioning killed idle cluster capacity costs.

For online inference, Kubernetes remained the right tool. Lyft’s existing serving architecture already delivered required performance and integrated tightly with internal tooling. The cost efficiency and control were already proven. As Yaroslav Yatsiuk, the engineer behind the migration, put it: “We adopted SageMaker for training because managing custom batch compute infrastructure was consuming engineering capacity better spent on ML platform capabilities. We kept our serving infrastructure custom-built because it delivered the cost efficiency and control we needed.”

The Technical Meat: Making Hybrid Actually Work

The split architecture introduced a critical challenge: preventing the “train-serve skew” problem where models train in one environment but serve in another, leading to subtle inconsistencies. Lyft’s solution was radical simplicity, use the exact same Docker image for both.

Cross-Platform Image Compatibility

Lyft built a compatibility layer ensuring the same container that trains on SageMaker serves on Kubernetes. This eliminated an entire class of environment-drift bugs. The images handle credential injection, metrics collection, and configuration management transparently across both platforms.

For latency-sensitive workloads retraining every 15 minutes, the team couldn’t afford SageMaker’s cold-start penalty. They adopted Seekable OCI (SOCI) indexes for notebooks and SageMaker warm pools for training jobs, achieving Kubernetes-comparable startup times. SOCI indexes allow containers to launch without downloading entire images, while warm pools maintain pre-initialized compute environments.

The Spark Networking Nightmare

The most complex technical hurdle involved Spark’s bidirectional communication requirements. Spark executors need inbound connections to reach notebook drivers, but default SageMaker networking blocks these paths. This wasn’t a configuration issue, it was a fundamental architecture limitation.

Lyft partnered with AWS to enable custom networking configurations in their SageMaker Studio Domains, allowing the necessary cross-environment communication without performance degradation. This kind of deep platform customization highlights that “managed” doesn’t mean “inflexible”, but it does require vendor partnership at the infrastructure level.

The Migration: Parallel Operation, Repository by Repository

Rather than a risky big-bang cutover, Lyft ran both infrastructures in parallel during migration. Teams moved repository by repository, requiring only minimal configuration changes. The compatibility layer meant existing ML code needed almost no modification.

This incremental approach de-risked the transition while validating the hybrid model in production. When incidents occurred, teams could compare behavior between the old and new systems, creating a natural feedback loop that accelerated stabilization.

The Results: What “Better” Actually Looks Like

Post-migration, Lyft reports reduced infrastructure incidents and, more importantly, freed engineering capacity redirected toward actual platform capabilities. The operational burden of managing batch infrastructure vanished overnight.

The hybrid architecture also created natural cost optimization. Training workloads now scale to zero when idle, while serving workloads maintain predictable baseline capacity. No more over-provisioning Kubernetes clusters to handle sporadic training spikes.

But the real win is architectural clarity. Each platform component has a clear purpose and optimization target. Kubernetes excels at low-latency, high-availability serving with deep integration into Lyft’s service mesh. SageMaker excels at bursty, computationally intensive training with managed scale and robust experiment tracking.

The Broader Context: Elastic Training and Dynamic Scale

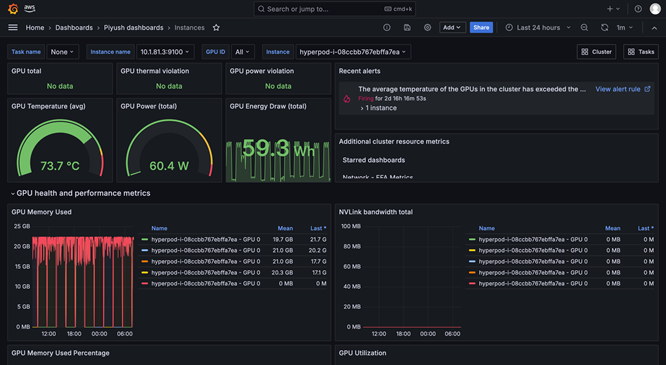

Lyft’s approach aligns with a broader industry shift toward dynamic infrastructure. AWS’s recent elastic training features for SageMaker HyperPod demonstrate the same principle: training jobs should automatically scale based on resource availability, adding replicas when capacity is idle and shedding them when higher-priority workloads need resources.

The HyperPod training operator integrates directly with the Kubernetes control plane, monitoring pod lifecycle events and scheduler signals to make scaling decisions. This blurs the line between managed service and container orchestration, not by hiding the complexity, but by making it programmable.

For organizations running mixed workloads, this pattern is becoming essential. Static resource allocation wastes capacity, manual reconfiguration wastes engineering time. The only viable path is infrastructure that understands workload priority and adapts automatically.

The Takeaway: Pragmatism Over Purity

Lyft’s hybrid architecture rejects the dogma that platforms must be unified to be manageable. Instead, it embraces a pragmatic truth: different workloads have different requirements, and the best infrastructure is the one that admits this.

For teams facing similar decisions, the key lessons are:

-

Split at the natural seam: Training and serving have fundamentally different operational profiles. The split should preserve data flow while allowing independent optimization.

-

Compatibility is non-negotiable: The same artifact must run in both environments. Any drift between training and serving environments creates subtle, hard-to-debug failures.

-

Migration is a product decision: The goal isn’t technical purity, it’s freeing engineering capacity. Measure success by what the team ships, not by what they manage.

-

Partnership matters: Deep customization of managed services requires vendor engagement. Don’t assume you can configure your way out of architectural mismatches.

The best platform engineering isn’t about the technology stack you run, it’s about the complexity you hide and the velocity you unlock. Sometimes the fastest way to unlock velocity is to stop trying to make one infrastructure be all things to all workloads.

Yatsiuk’s final assessment cuts through the hype: “The best platform engineering isn’t about the technology stack you run, it’s about the complexity you hide and the velocity you unlock.” For Lyft, hiding complexity meant admitting that two systems, properly integrated, create less complexity than one system stretched beyond its design center.

The hybrid approach isn’t a compromise, it’s an optimization.

Lyft’s ML Platform Architecture

/filters:no_upscale()/news/2025/12/lyft-ml-platform/en/resources/1Lyft-LyftLearn-Architecture-1765711434001.png

Lyft’s hybrid architecture separates training (SageMaker) from serving (Kubernetes), using cross-platform Docker images to maintain consistency. Source

For more on elastic training patterns, see the SageMaker HyperPod documentation and Lyft’s original architecture deep-dive.