The training cutoff date on your local LLM used to be a brick wall. Ask Qwen-3 about anything after 2024 and you’d hit that familiar “I cannot provide information beyond my knowledge cutoff”, a polite way of saying you’re stuck in the past. But that wall is crumbling, and fast. Users are plugging DuckDuckGo directly into LM Studio, spinning up web-search MCP servers, and watching their offline models transform into real-time agents that rival ChatGPT’s browsing capabilities. The kicker? It’s all happening on hardware that fits your desk, without a single API key or privacy policy to sign.

This shift isn’t just a neat trick for hobbyists. It’s a fundamental reimagining of what “agentic AI” means, one that doesn’t require enterprise contracts or CS degrees. The Reddit thread that lit this fire captures the moment perfectly: a user discovers the LM Studio DuckDuckGo plugin, installs it in 15 minutes, and suddenly has the same interface they saw in ChatGPT. Their reaction? “Am I having ‘agentic-AI’ now? haha.” The answer is yes, but that innocent question masks a deeper disruption.

The 15-Minute Agent

The technical barrier to entry has collapsed. What used to require orchestrating complex tool-calling pipelines is now a matter of editing a JSON config. The mrkrsl-web-search-mcp server demonstrates this beautifully: a TypeScript implementation that tries Google first, falls back to DuckDuckGo when bot detection triggers, and extracts full page content automatically. Configuration is a single short entry in your mcp.json:

```json
{
  "mcpServers": {
    "web-search": {
      "command": "npx",
      "args": ["web-search-mcp-server"]
    }
  }
}
```

That’s it. No Docker containers, no Kubernetes manifests, no cloud accounts. The server exposes a `full-web-search` tool that your local model can invoke. Suddenly your 16GB VRAM setup, which one Redditor called the “acceptable and generic userbase spec”, is grounding its responses in live data. Qwen-3 and Gemma 3 handle this particularly well, while users report that Llama 3.1 and 3.2 stumble on tool calling. That is a capability gap general-purpose leaderboard benchmarks tend to miss.
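To make the Google-then-DuckDuckGo behavior concrete, here is a minimal Python sketch of what a server might do when the model invokes `full-web-search`. It is not the mrkrsl server's code, which is TypeScript. The engine adapters, the `BotDetected` exception, and the `query` argument name are assumptions for illustration. The JSON-RPC framing follows MCP's `tools/call` request and its text-content result shape. Page fetching and content extraction are left out.

```python
import json
from typing import Callable


class BotDetected(Exception):
    """Raised by a (hypothetical) engine adapter when it hits a CAPTCHA or block page."""


# Hypothetical adapter type: query in, list of result URLs out.
Engine = Callable[[str], list[str]]


def search_with_fallback(query: str, engines: list[tuple[str, Engine]]) -> tuple[str, list[str]]:
    """Try engines in order, moving on only when one reports bot detection."""
    for name, engine in engines:
        try:
            return name, engine(query)
        except BotDetected:
            continue  # e.g. Google blocked the request; try DuckDuckGo next
    raise RuntimeError("every engine blocked the request")


def handle_tools_call(raw: str, engines: list[tuple[str, Engine]]) -> str:
    """Answer one JSON-RPC 2.0 `tools/call` request for `full-web-search`."""
    req = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": req.get("id")}
    params = req.get("params", {})
    if req.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
    elif params.get("name") != "full-web-search":
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
    else:
        query = params.get("arguments", {}).get("query", "")  # assumed argument name
        engine, urls = search_with_fallback(query, engines)
        text = f"engine: {engine}\n" + "\n".join(urls)
        # MCP tool results carry a list of typed content items.
        reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps(reply)
```

Because the engines are an ordered list, adding a third fallback is a one-line change. If every engine blocks, this sketch simply raises instead of returning a structured MCP error.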

Privacy Is the Feature, Not the Footnote

But here’s where the narrative splits from the cloud AI story. Routing through DuckDuckGo still leaks your IP address and query patterns to DuckDuckGo. The privacy-conscious crowd on Reddit didn’t mince words: “if you want real privacy, route the searches using searxng and Tor.” SearXNG, a self-hostable metasearch engine, forwards queries to upstream engines without passing along your identifying information. Tor hides your IP from whoever sits at the other end. Together they create a search pipeline whose contents even your ISP or VPN provider can’t read.
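On the client side, a self-hosted SearXNG instance is just an HTTP endpoint. This sketch assumes an instance at a hypothetical local address with the `json` output format enabled, which may need to be switched on in its settings.yml. Tor belongs on SearXNG's outbound side, in its outgoing proxy configuration (see the SearXNG docs for the exact keys). It does not belong in this client, which only talks to localhost.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Hypothetical address of a local SearXNG instance with the "json" format enabled.
SEARXNG_URL = "http://127.0.0.1:8888"


def build_search_url(base: str, query: str) -> str:
    """Build a SearXNG search URL that asks for JSON instead of HTML."""
    return f"{base.rstrip('/')}/search?" + urlencode({"q": query, "format": "json"})


def parse_results(payload: dict, limit: int = 5) -> list[dict]:
    """Keep only the fields a local model needs for grounding."""
    return [
        {"title": r.get("title", ""), "url": r.get("url", ""), "snippet": r.get("content", "")}
        for r in payload.get("results", [])[:limit]
    ]


def search(query: str) -> list[dict]:
    """Query the local instance; if SearXNG is configured to use Tor, upstream engines see a Tor exit, not you."""
    with urlopen(build_search_url(SEARXNG_URL, query), timeout=30) as resp:
        return parse_results(json.load(resp))
```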

This isn’t theoretical. The Harbor framework packages the whole stack into a single command: `harbor up searxng` spins up Open WebUI pre-configured for web RAG with SearXNG, letting you choose Ollama, llama.cpp, or llama-swap as your inference backend. One user notes it pairs well with TTS/STT, turning your private agent into a voice assistant that never phones home. Another, a newcomer, admits they’d been told to “avoid ollama”. Harbor sidesteps that debate by making it trivial to switch backends without rewriting your workflow.
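Backend switching is cheap partly because Ollama, llama.cpp's `llama-server`, and llama-swap all expose OpenAI-compatible `/v1/chat/completions` endpoints. Client code therefore needs only a different base URL. The sketch assumes the standalone default ports for Ollama (11434) and `llama-server` (8080) and uses a placeholder for llama-swap. Harbor assigns its own ports, so check its service mappings before reusing these addresses.

```python
import json
from urllib.request import Request, urlopen

# Assumed base URLs; Harbor's own port mappings will differ.
BACKENDS = {
    "ollama": "http://localhost:11434/v1",
    "llama.cpp": "http://localhost:8080/v1",
    "llama-swap": "http://localhost:9999/v1",  # placeholder: use your llama-swap listen address
}


def build_chat_request(backend: str, model: str, prompt: str) -> Request:
    """Same payload for every backend; only the base URL changes."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return Request(
        BACKENDS[backend] + "/chat/completions",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json"},
    )


def chat(backend: str, model: str, prompt: str) -> str:
    """Send the request and return the first choice's text."""
    with urlopen(build_chat_request(backend, model, prompt), timeout=300) as resp:
        return json.load(resp)["choices"][0]["message"]["content"]
```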

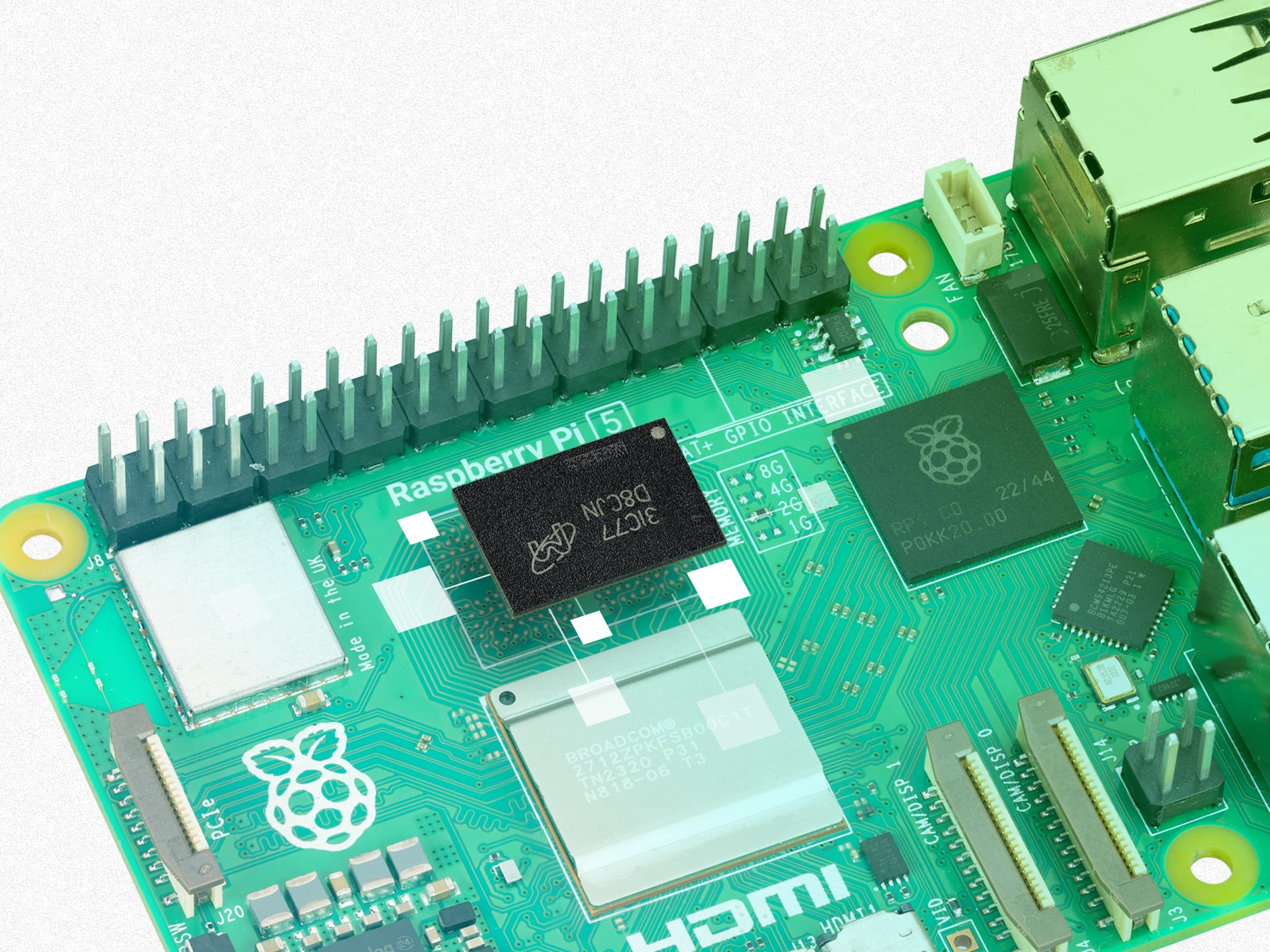

The Raspberry Pi Reality Check

If 16GB VRAM is the “generic spec”, what about the hardware most people actually own? That’s where the Raspberry Pi experiment becomes impossible to ignore. Utkarsh Mehta built a 24/7 personal news curator on a 4GB Pi 4, using Playwright to scrape whitelisted sites and Qwen2.5:0.5b to judge article relevance. The pipeline is brutally efficient: scrape → categorize → store in SQLite → push top 5 to phone via Pushover. No cloud inference costs. No hosting fees. Just a $50 computer that “transformed from a simple hobbyist board into a 24/7 personal assistant that filters the noise of the internet.”
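The store-and-push half of that pipeline fits in a few lines of standard-library Python. This is a sketch, not Mehta's code. The table schema and the `score` column, which stands in for the model's relevance judgment, are hypothetical, and the Playwright scraping step is omitted. The Pushover call uses its `messages.json` endpoint with `token`, `user`, and `message` fields.

```python
import sqlite3
from urllib.parse import urlencode
from urllib.request import urlopen

PUSHOVER_URL = "https://api.pushover.net/1/messages.json"


def store(conn: sqlite3.Connection, articles: list[tuple[str, str, str, float]]) -> None:
    """Insert (url, title, category, score) rows; re-scraped URLs are ignored."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS articles "
        "(url TEXT PRIMARY KEY, title TEXT, category TEXT, score REAL)"
    )
    conn.executemany("INSERT OR IGNORE INTO articles VALUES (?, ?, ?, ?)", articles)
    conn.commit()


def top_n(conn: sqlite3.Connection, n: int = 5) -> list[tuple[str, str]]:
    """Return (title, url) for the n highest-scoring articles."""
    return conn.execute(
        "SELECT title, url FROM articles ORDER BY score DESC LIMIT ?", (n,)
    ).fetchall()


def pushover_body(token: str, user: str, items: list[tuple[str, str]]) -> bytes:
    """Form-encode the digest; Pushover limits the message field to 1024 characters."""
    message = "\n".join(f"{title}\n{url}" for title, url in items)
    return urlencode({"token": token, "user": user, "message": message[:1024]}).encode()


def push(token: str, user: str, items: list[tuple[str, str]]) -> None:
    """POST the digest to Pushover (urlopen sends a form-encoded POST when data is given)."""
    with urlopen(PUSHOVER_URL, data=pushover_body(token, user, items), timeout=30):
        pass
```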

The architecture reveals the secret sauce. When FunctionGemma, with its 270M parameters, proved too picky, switching to Qwen2.5:0.5b delivered better judgment calls. The model runs inference locally, but the real magic is in the orchestration: PM2 for process management, ngrok for remote access, cron for scheduled discovery. It’s a full agentic system, just not the kind you’d see in a press release. And yes, Qwen3:1.7b pushed the Pi’s limits, but it worked for focused tasks with fewer tokens. The message is clear: modest hardware can deliver genuinely useful AI when you optimize the pipeline instead of throwing compute at the problem.
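The relevance check itself can be a single HTTP call. The source doesn't say which runtime served the model, so this sketch assumes Ollama's `/api/generate` endpoint and its `qwen2.5:0.5b` tag. The prompt wording and the YES/NO protocol are hypothetical. The tolerant parser reflects a practical problem with sub-1B models: they don't always answer in the requested format.

```python
import json
import re
from urllib.request import Request, urlopen

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default port


def build_prompt(title: str, interests: list[str]) -> str:
    """Hypothetical prompt: ask for a one-word verdict on a headline."""
    return (
        f"Interests: {', '.join(interests)}\n"
        f"Headline: {title}\n"
        "Is this headline relevant to the interests? Answer YES or NO."
    )


def parse_verdict(text: str) -> bool:
    """Take the first standalone YES/NO; treat anything else as not relevant."""
    match = re.search(r"\b(YES|NO)\b", text.upper())
    return match is not None and match.group(1) == "YES"


def is_relevant(title: str, interests: list[str], model: str = "qwen2.5:0.5b") -> bool:
    """Ask the local model for a verdict (non-streaming) and parse it."""
    body = {"model": model, "prompt": build_prompt(title, interests), "stream": False}
    req = Request(OLLAMA_URL, data=json.dumps(body).encode(),
                  headers={"Content-Type": "application/json"})
    with urlopen(req, timeout=120) as resp:
        return parse_verdict(json.load(resp)["response"])
```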

When “Agentic” Becomes a Marketing Term

Yahoo DSP’s announcement of “agentic AI capabilities” highlights the tension. Their framework, “Yours, Mine, and Ours”, lets advertisers bring custom models, use Yahoo’s agents, or connect both via MCP. It’s enterprise-grade, with always-on agents for troubleshooting, audience discovery, and campaign activation. The press release quotes Adam Roodman: “Agentic AI changes how media buying actually gets done.”

But the Reddit thread exposes a semantic drift. Tool calling in LM Studio feels agentic because, for many users, it’s the first time they’ve watched a local model decide to act. Yahoo’s definition involves multi-agent orchestration, API governance, and enterprise scale. Both uses are legitimate, yet they describe fundamentally different things. The controversy isn’t whether local setups are “real” agentic AI. It’s that the term has become so diluted that a Raspberry Pi script and a DSP platform share the same label.

The Cloud AI Monopoly’s Blind Spot

This is what should worry cloud providers. Their moat has always been threefold: model quality, real-time data access, and managed infrastructure. Local LLMs with internet plugins are attacking all three at once. The model quality gap is narrowing: Qwen-3 and Gemma 3 show that open models can handle tool calling. Real-time data is now a plugin, not a platform feature. And managed infrastructure? Harbor and similar frameworks are abstracting it away, turning deployment into a single command.

The enterprise response is to embrace interoperability. Yahoo’s MCP integration acknowledges that agents will live everywhere, not just inside one vendor’s platform. But the pricing model breaks down when users realize they can replicate roughly 80% of the functionality on hardware they already own. The remaining 20% (enterprise governance, advanced measurement, cross-platform optimization) matters for Fortune 500 companies. For everyone else, the math is shifting.

The Workflow Design Philosophy

The most telling question from the Reddit thread is philosophical rather than technical: “what other things you design in your workflow to make locally run LLM so potent and, most importantly, private?” This is the design pattern emerging from the trenches. It’s not about building a better ChatGPT. It’s about creating systems that reflect personal priorities.

One user calls Letta, a framework for stateful agents with memory, their “best kept secret”. Another suggests Brave Leo with BYOM (Bring Your Own Model) and custom storage and retrieval pipelines. The pattern is consistent: modular, composable, privacy-first. You’re not just running a model. You’re designing a personal information architecture in which every component (search, storage, inference, UI) can be swapped based on your threat model and hardware constraints.
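That swap-anything design maps naturally onto small interfaces. In this sketch, search, storage, and inference are structural `Protocol`s. That lets a SearXNG client replace a DuckDuckGo one, or SQLite replace a dict, without touching the orchestration. All class and method names are hypothetical. Letta and Brave Leo have their own APIs, which this sketch does not model.

```python
from dataclasses import dataclass
from typing import Optional, Protocol


class Search(Protocol):
    def search(self, query: str) -> list[str]: ...


class Store(Protocol):
    def save(self, key: str, value: str) -> None: ...
    def load(self, key: str) -> Optional[str]: ...


class Model(Protocol):
    def complete(self, prompt: str) -> str: ...


@dataclass
class Assistant:
    """Orchestration only; any object with the right methods can fill each slot."""
    search: Search
    store: Store
    model: Model

    def answer(self, question: str) -> str:
        cached = self.store.load(question)
        if cached is not None:
            return cached  # reuse a stored answer instead of searching again
        context = "\n".join(self.search.search(question))
        reply = self.model.complete(f"Context:\n{context}\n\nQuestion: {question}")
        self.store.save(question, reply)
        return reply
```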

The Counterargument Nobody Wants to Hear

Before declaring the cloud obsolete, it’s worth admitting that the limitations are real. Local models still hallucinate. Web search plugins can be brittle: Google’s bot detection triggers unpredictably, DuckDuckGo results vary in quality, and SearXNG requires maintenance. The 16GB VRAM “sweet spot” excludes most laptops. And orchestrating these tools remains a command-line exercise that sends non-technical users running back to ChatGPT’s comforting UI.

More critically, the agentic behavior is narrow. Out of the box, your local system can search and summarize, but it won’t book flights, modify your calendar, or execute trades. Those actions require authenticated API access, which introduces identity and security problems that local-first systems haven’t solved. MCP itself is young, and standards for agent-to-agent communication are still coalescing.

The Real Revolution Is Boring

The most disruptive aspect might be how undisruptive it looks. A Raspberry Pi quietly scraping whitelisted news sites. A JSON config pointing to a DuckDuckGo plugin. A cron job running twice daily. This isn’t the AI revolution of sci-fi movies. It’s the incremental replacement of cloud services with self-hosted equivalents that are good enough, private enough, and cheap enough to matter.

The spiciness lies not in the technology but in the economics. Every local agent is a potential lost subscription to a cloud AI service. Every Harbor deployment is one less customer for managed RAG platforms. The “wow moment” isn’t about capability; it’s about ownership. You control the model, the data, the search pipeline, and the memory. In an era where AI companies train on user interactions, that ownership feels radical.

The cloud AI monopoly isn’t collapsing overnight. But it’s facing death by a thousand self-hosted cuts, each one a weekend project that proves the barrier to entry was artificial all along. The question isn’t whether local LLMs can match cloud capabilities. It’s whether cloud providers can justify their premium when the alternative is a Raspberry Pi that gets smarter every time you correct it.