The AI code assistant in your IDE can generate a slick React component from a JSDoc comment, but ask it whether changing Service A’s database schema will break Service B’s API contract, and you’ll get confident nonsense. The problem isn’t the model; it’s the architectural bankruptcy of vector-only retrieval.

For the past two years, we’ve watched the RAG hype cycle unfold like a predictable sitcom. Vector embeddings were pitched as the universal solvent for AI context problems: chunk your code, embed it, and let similarity search work its magic. The reality? That approach collapses the moment you need to understand anything more complex than “this function looks like that function.” It treats your codebase as a bag of text rather than a graph of decisions, dependencies, and contracts.

The Structural Failure of “Similar Text”

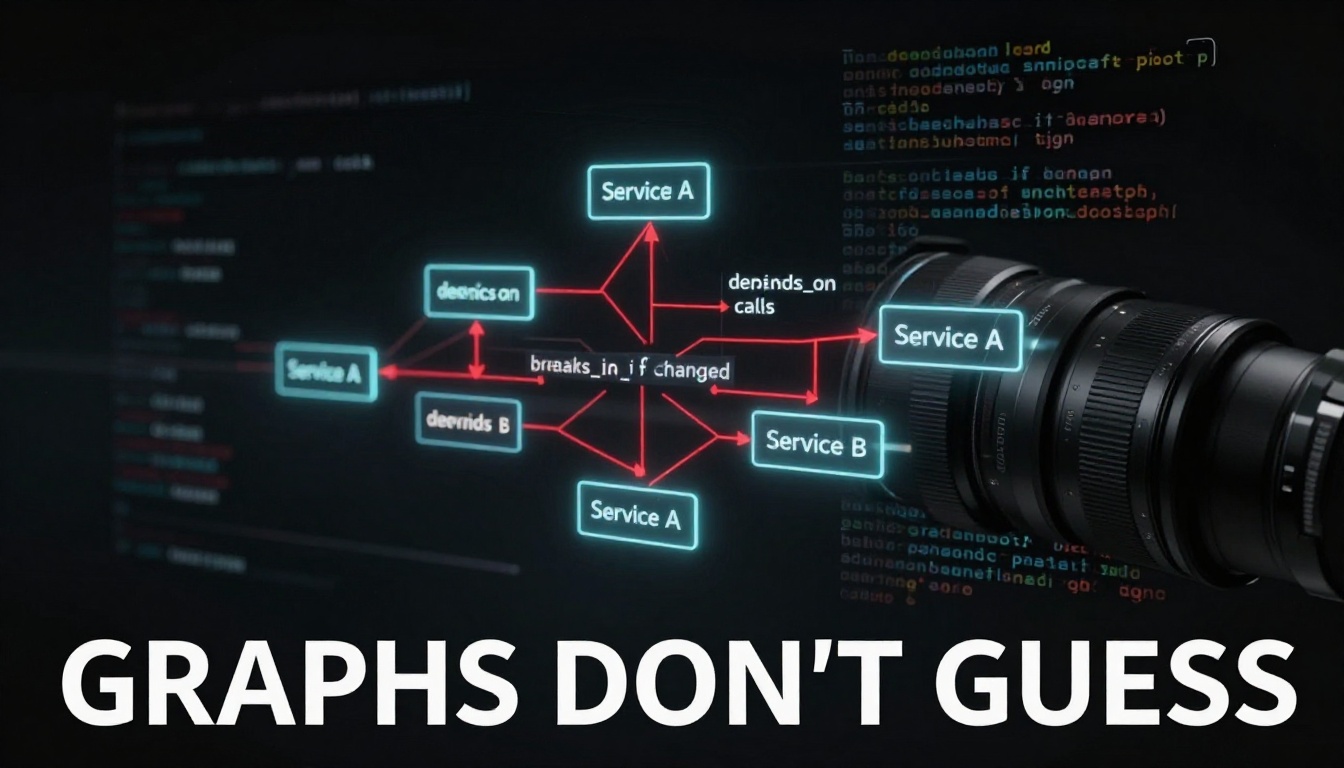

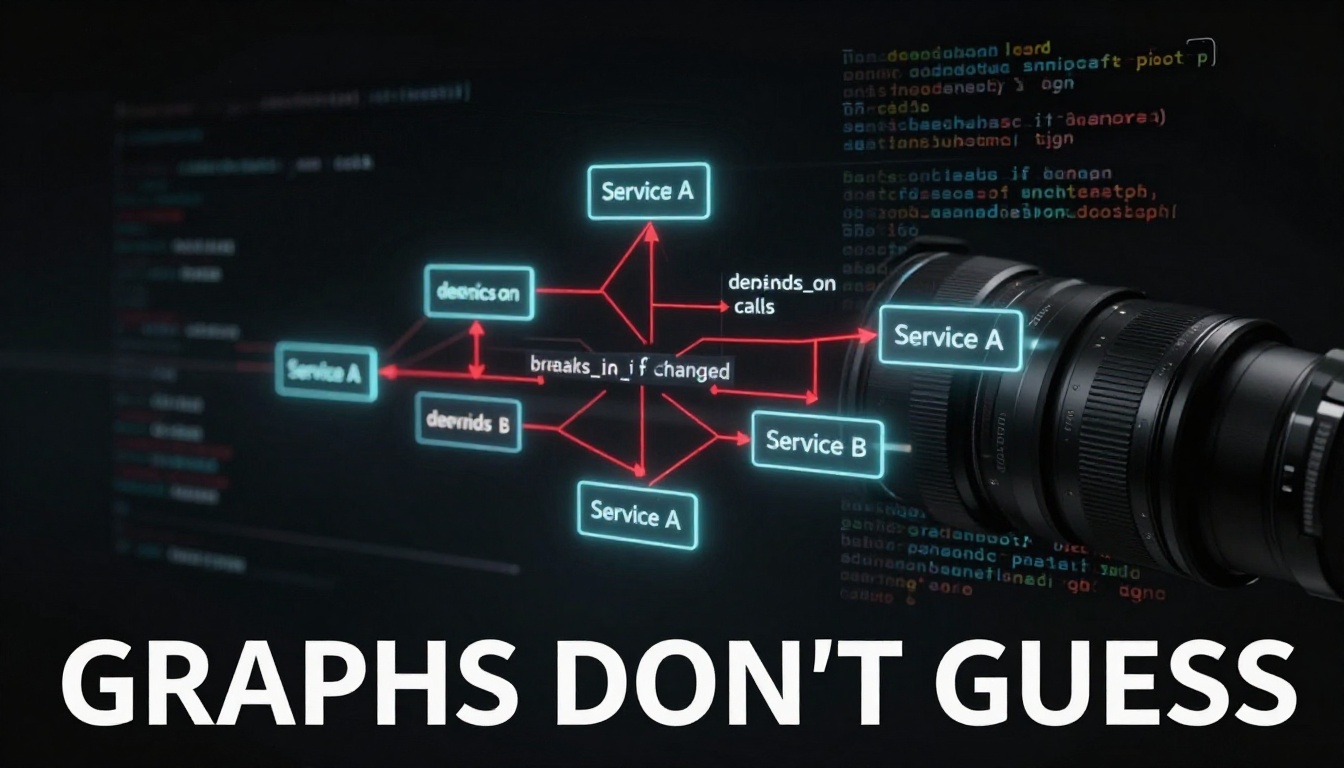

Vector search excels at finding semantically related concepts. Ask it for “authentication patterns” and it’ll surface OAuth implementations, JWT handling, and session management code. But software architecture doesn’t run on semantic similarity, it runs on explicit relationships: depends_on, calls, implements, breaks_if_changed.

A developer on r/softwarearchitecture recently articulated this gap perfectly while building internal AI tools. Their vector-based system could find “similar” documentation but completely failed to model the relationship Service A → depends_on → Service B. This isn’t a minor gap, it’s a categorical failure to represent how systems actually work.

The problem is baked into the math. Vector embeddings compress meaning into dense numerical representations, optimizing for cosine similarity. When you search for “payment processing”, you get things that sound like payment processing. But you don’t get the call graph showing that your checkout service indirectly depends on a legacy fraud detection API that times out under load. That relationship isn’t semantic, it’s structural.

Pure vector search has another critical weakness: it misses exact keyword matches that matter. As highlighted in recent RAG analysis, searching for “error code ERR-4012” can fail because an embedding model has no reason to place a rare identifier close to the one indexed document that mentions it, so loosely related “error” content can outrank the exact hit. In codebases, precision matters. A function name, configuration key, or API endpoint isn’t “semantically similar” to anything; it’s either exactly right or completely wrong.
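One common way to cover that gap is an exact-match index consulted alongside the embeddings. The sketch below is a minimal illustration of the idea, not a production tokenizer; the file names and their contents are made up for the example.

```python
import re
from collections import defaultdict

# Tokens that keep identifier punctuation together, so "ERR-4012" and
# "payments.retry.max_attempts" survive as single searchable terms.
TOKEN = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.\-/]*")

# Hypothetical documents, invented for this example.
DOCS = {
    "runbook.md": "Retry the job when ERR-4012 appears.",
    "faq.md": "Payment errors are usually transient.",
    "config.yaml": "payments.retry.max_attempts: 3",
}


def build_index(docs):
    """Map every exact token to the set of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in TOKEN.findall(text):
            # Drop a trailing sentence period, keep inner dots and dashes.
            index[token.rstrip(".")].add(doc_id)
    return index


def exact_lookup(index, term):
    """Return documents containing the exact term; no fuzzy fallback."""
    return index.get(term, set())


index = build_index(DOCS)
print(exact_lookup(index, "ERR-4012"))  # {'runbook.md'}
print(exact_lookup(index, "ERR-4013"))  # set()
```

The lookup either finds the identifier or returns nothing, which is the behavior you want for error codes and configuration keys.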

Graphs Don’t Guess, They Know

The alternative is to stop treating code as text and start treating it as a graph. This means explicitly modeling:

- Services as nodes with typed edges: depends_on, calls, subscribes_to

- APIs as nodes with versioning relationships: v2_supersedes_v1

- Decisions as nodes linking to affected code: slack_decision_#arch → implements → refactor_branch

- People as nodes connecting to ownership: developer_X → owns → microservice_Y
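To make “typed edges” concrete, here is a minimal in-memory sketch. The class and method names are illustrative assumptions, not the API of any graph database, and the node names beyond those in the list above are invented.

```python
from collections import defaultdict
from typing import NamedTuple


class Edge(NamedTuple):
    source: str
    relation: str  # the edge type, e.g. "depends_on" or "owns"
    target: str


class TypedGraph:
    """A tiny graph whose edges carry an explicit relation type."""

    def __init__(self) -> None:
        self._out = defaultdict(list)  # source node -> outgoing edges

    def add(self, source: str, relation: str, target: str) -> None:
        self._out[source].append(Edge(source, relation, target))

    def neighbors(self, source: str, relation: str) -> list:
        """Targets reachable from `source` over edges of exactly this type."""
        return [e.target for e in self._out.get(source, []) if e.relation == relation]


graph = TypedGraph()
graph.add("service_a", "depends_on", "service_b")
graph.add("service_a", "calls", "payments_api_v2")  # hypothetical API node
graph.add("payments_api_v2", "v2_supersedes_v1", "payments_api_v1")
graph.add("slack_decision_#arch", "implements", "refactor_branch")
graph.add("developer_X", "owns", "microservice_Y")

print(graph.neighbors("service_a", "depends_on"))  # ['service_b']
print(graph.neighbors("service_a", "owns"))        # []
```

The point of the relation argument is that a query for depends_on never returns a calls edge, however similar the two nodes might look as text.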

This isn’t theoretical. Teams are already experimenting with graph databases like ArangoDB to map both “hard knowledge” (code, APIs) and “soft knowledge” (Slack decisions, PR comments, architectural RFCs). The key insight is that relationships have types, and those types carry meaning that vector similarity can’t approximate.

Consider dependency resolution. Vector search might tell you that your package.json file is “similar to” other Node.js projects. A graph approach runs a topological sort and tells you that upgrading lodash will break three downstream services because of a transitive dependency through some-legacy-util-package. One gives you a vibe check, the other gives you a build plan.
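A rough sketch of that build plan, using Python’s standard-library graphlib. The service names and the dependency map are hypothetical; only lodash and some-legacy-util-package come from the example above.

```python
from collections import deque
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key depends on the names in its set.
DEPENDS_ON = {
    "some-legacy-util-package": {"lodash"},
    "billing-service": {"some-legacy-util-package"},
    "checkout-service": {"some-legacy-util-package"},
    "reporting-service": {"billing-service"},
    "docs-site": set(),
}


def affected_by(package, depends_on):
    """Everything that depends on `package`, directly or transitively."""
    # Invert the edges so we can walk from a dependency to its dependents.
    dependents = {}
    for node, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(node)

    seen, queue = set(), deque([package])
    while queue:
        for nxt in dependents.get(queue.popleft(), ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def rebuild_order(package, depends_on):
    """Topological order in which the affected nodes must be rebuilt."""
    affected = affected_by(package, depends_on)
    # Keep only edges between affected nodes, then sort dependencies first.
    subgraph = {node: depends_on[node] & affected for node in affected}
    return list(TopologicalSorter(subgraph).static_order())


impacted = affected_by("lodash", DEPENDS_ON)
print(sorted(impacted))
print(rebuild_order("lodash", DEPENDS_ON))
```

Here the three services are reached only through some-legacy-util-package, and docs-site is correctly left out. The relative order of billing-service and checkout-service is not fixed, since neither depends on the other.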

The graph algorithms that power coding interviews (topological sort for prerequisites, DFS for cycle detection, Union-Find for connectivity) aren’t just academic exercises. They’re the exact operations needed to reason about real systems. When your AI assistant needs to answer “can I deploy service A?” it should be running a connectivity check, not a cosine similarity calculation.
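Two of those operations fit in a few dozen lines. This is a minimal sketch with hypothetical service names: a DFS that reports one dependency cycle, and a Union-Find that answers whether two nodes sit in the same connected component.

```python
def find_cycle(depends_on):
    """Return one dependency cycle as a list of nodes, or None if acyclic."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {}
    path = []

    def visit(node):
        color[node] = GRAY
        path.append(node)
        for dep in depends_on.get(node, ()):
            state = color.get(dep, WHITE)
            if state == GRAY:  # back edge: dep is already on the current path
                return path[path.index(dep):] + [dep]
            if state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for node in depends_on:
        if color.get(node, WHITE) == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


class UnionFind:
    """Tracks which nodes belong to the same connected component."""

    def __init__(self):
        self.parent = {}

    def find(self, x):
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a, b):
        self.parent[self.find(a)] = self.find(b)

    def connected(self, a, b):
        return self.find(a) == self.find(b)


cyclic = {"service_a": ["service_b"], "service_b": ["service_c"], "service_c": ["service_a"]}
print(find_cycle(cyclic))  # ['service_a', 'service_b', 'service_c', 'service_a']

uf = UnionFind()
uf.union("service_a", "service_b")
uf.union("service_b", "shared-db")
print(uf.connected("service_a", "shared-db"))     # True
print(uf.connected("service_a", "search-index"))  # False
```

Note that Union-Find ignores edge direction, so it answers “are these in the same cluster?” rather than “does A depend on B?”. Directed questions need a traversal like the DFS above.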

The Hybrid Reality: Graphs + Vectors (But Not How You Think)

The Reddit thread’s author asked about “Hybrid Retrieval” systems combining graph and vector approaches. The answer isn’t to run both in parallel and merge results; that’s like using a map and a compass separately and hoping you end up in the right place.

The winning pattern is graph-first, vector-second. Use the graph for structure and vectors for content within that structure. For example:

1. Navigate the graph to find all services dependent on a database

2. Use vector search within that subgraph to find relevant Slack discussions about migration plans

3. Return context that is both structurally correct and semantically rich
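The three steps above can be sketched as follows. Everything here is a toy assumption: the edges, the documents, and especially the hand-written three-dimensional “embeddings”, which stand in for whatever embedding model a real system would use.

```python
import math

# Hypothetical typed edges: (source, relation, target).
EDGES = [
    ("orders-service", "depends_on", "orders-db"),
    ("invoice-service", "depends_on", "orders-db"),
    ("search-service", "depends_on", "search-index"),
]

# Hypothetical documents attached to services, with made-up embeddings.
DOCS = [
    {"service": "orders-service", "text": "Slack: migration plan for orders-db", "vec": [0.9, 0.1, 0.0]},
    {"service": "invoice-service", "text": "Slack: lunch order for Friday", "vec": [0.1, 0.9, 0.1]},
    {"service": "search-service", "text": "Slack: migration plan for search-index", "vec": [1.0, 0.0, 0.0]},
]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def dependents_of(target, edges):
    """Step 1: walk the graph for services that depend on `target`."""
    return {src for src, rel, dst in edges if rel == "depends_on" and dst == target}


def retrieve(target, query_vec, edges, docs, top_k=1):
    scope = dependents_of(target, edges)
    # Step 2: rank by similarity, but only inside the structural scope.
    candidates = [d for d in docs if d["service"] in scope]
    ranked = sorted(candidates, key=lambda d: cosine(query_vec, d["vec"]), reverse=True)
    # Step 3: return both the structure and the matching content.
    return {"services": sorted(scope), "documents": [d["text"] for d in ranked[:top_k]]}


query = [1.0, 0.0, 0.0]  # pretend embedding of "migration plan"
vector_only = max(DOCS, key=lambda d: cosine(query, d["vec"]))
result = retrieve("orders-db", query, EDGES, DOCS)

print(vector_only["text"])  # Slack: migration plan for search-index
print(result["documents"])  # ['Slack: migration plan for orders-db']
```

In this toy data the vector-only baseline returns the most similar text, which belongs to the wrong system. Scoping by the graph first removes that candidate before similarity is ever computed.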

This avoids the “graph explosion” problem that plagues naive implementations. One commenter suggested promoting only “durable facts” to nodes while keeping ephemeral content as linked evidence blobs with TTL or summarization. A Slack thread about a transient bug isn’t a graph node, it’s metadata attached to the service node it discusses. But the architectural decision to adopt gRPC? That’s a durable node with edges to every service affected.
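One possible shape for that split, as a sketch: durable facts become nodes, and ephemeral content hangs off a node as evidence with an expiry. The field names and the seven-day TTL are assumptions made for illustration, not a recommendation from the thread.

```python
from dataclasses import dataclass, field

DAY = 86_400  # seconds


@dataclass
class Evidence:
    """Ephemeral content linked to a node; it expires instead of becoming a node."""
    text: str
    created_at: float   # timestamp in seconds
    ttl_seconds: float

    def expired(self, now: float) -> bool:
        return now - self.created_at > self.ttl_seconds


@dataclass
class Node:
    """A durable fact: a service, an API, or an architectural decision."""
    name: str
    kind: str
    evidence: list = field(default_factory=list)

    def prune(self, now: float) -> int:
        """Drop expired evidence and return how many items were removed."""
        before = len(self.evidence)
        self.evidence = [e for e in self.evidence if not e.expired(now)]
        return before - len(self.evidence)


# The gRPC decision is durable, so it is a node in its own right.
decision = Node("adopt-grpc", kind="decision")

# A thread about a transient bug is only evidence on the service it discusses.
checkout = Node("checkout-service", kind="service")
checkout.evidence.append(Evidence("Slack: flaky timeout on deploy", created_at=0, ttl_seconds=7 * DAY))
checkout.evidence.append(Evidence("PR comment: retry budget rationale", created_at=25 * DAY, ttl_seconds=30 * DAY))

removed = checkout.prune(now=30 * DAY)
print(removed)                              # 1
print([e.text for e in checkout.evidence])  # ['PR comment: retry budget rationale']
```

Timestamps are passed in explicitly rather than read from the clock so that pruning is easy to test. A summarization step could replace deletion where the history still has value.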

Tools like LangSmith and Traycer AI are emerging to trace these hybrid retrievals, showing exactly why a model pulled specific context. This traceability isn’t optional, it’s the difference between trusting your AI assistant and debugging yet another black box.

The Infrastructure Reckoning

This architectural shift has infrastructure implications that can’t be ignored. The vector database hype train is already showing cracks. As we’ve documented, SQL is proving a viable alternative to vector databases for LLM memory, and database vector extensions like pgvector have serious production limitations.

The graph RAG movement is part of a broader push toward local-first architectures that reject the RAG infrastructure complex. When your context graph lives in a $200/month cloud vector service, every query costs money and latency. When it lives in a local graph database queried via MCP (Model Context Protocol), you get architectural reasoning at the speed of local compute.

This isn’t just about cost. It’s about control. Enterprise RAG implementations are failing at a 40% rate largely because “clean” documents are actually trash when they lack structural relationships. A pristine API spec in a vector database is useless if the AI can’t connect it to the services that implement it.

The Model Context Protocol: Graphs as First-Class Citizens

The Model Context Protocol (MCP) mentioned in the Reddit post is crucial here. MCP servers expose structured context to AI models, and graph databases are the natural backend. Instead of stuffing a prompt with semantically similar but structurally irrelevant code chunks, an MCP server can:

- Execute a graph query to find the actual dependency tree

- Serialize it into a structured format (JSON-LD, a GraphQL-style JSON response, etc.)

- Let the model reason over explicit relationships
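The first two steps might look like the sketch below. It deliberately avoids any MCP SDK calls: `dependency_tree` is a hypothetical helper showing the kind of payload a tool handler could return, and the service names are invented.

```python
import json

# Hypothetical result of a graph query: service -> services it depends on.
DEPENDS_ON = {
    "checkout-service": ["payments-service", "fraud-api"],
    "payments-service": ["ledger-db"],
    "fraud-api": [],
    "ledger-db": [],
}


def dependency_tree(root, depends_on, _path=()):
    """Expand `root` into a nested dict of explicit depends_on relationships."""
    if root in _path:
        # Stop at a cycle instead of recursing forever, and say so explicitly.
        return {"id": root, "cycle": True}
    return {
        "id": root,
        "depends_on": [
            dependency_tree(dep, depends_on, _path + (root,))
            for dep in depends_on.get(root, [])
        ],
    }


tree = dependency_tree("checkout-service", DEPENDS_ON)
payload = json.dumps(tree, indent=2)  # what the model would receive as context
print(payload)
```

The model then reads relationships that are stated outright (checkout-service depends on payments-service, which depends on ledger-db) rather than inferring them from whichever chunks happened to score well.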

This is where AI engineering reveals itself as data engineering in disguise. Building effective MCP servers requires the same skills as building data pipelines: schema design, incremental updates, TTL policies, and observability. The “prompt engineer” who just crafts clever strings is being replaced by the AI engineer who designs context graphs.

Architectural Fragility and Trust Boundaries

There’s a darker side to this structural blindness. When AI assistants generate code based on vector-similar examples without understanding dependency graphs, they create architectural fragility. A suggested code change might compile and pass tests but introduce a circular dependency that breaks your microservices architecture in production.
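A guard against that failure can be cheap. As a sketch, assuming the dependency graph is available as a simple mapping with hypothetical service names, a proposed new dependency creates a cycle exactly when its target can already reach its source:

```python
# Hypothetical current state: service -> services it depends on.
DEPENDS_ON = {
    "service_a": ["service_b"],
    "service_b": ["service_c"],
    "service_c": [],
}


def reaches(start, goal, depends_on):
    """True if `goal` is reachable from `start` by following depends_on edges."""
    stack, seen = [start], set()
    while stack:
        node = stack.pop()
        if node == goal:
            return True
        if node in seen:
            continue
        seen.add(node)
        stack.extend(depends_on.get(node, ()))
    return False


def would_create_cycle(source, target, depends_on):
    """Check a proposed edge `source depends_on target` before accepting it."""
    # The new edge closes a loop only if target already leads back to source.
    return reaches(target, source, depends_on)


# An assistant proposes that service_c start calling service_a.
print(would_create_cycle("service_c", "service_a", DEPENDS_ON))  # True

# Adding a direct edge from service_a to service_c is redundant but safe.
print(would_create_cycle("service_a", "service_c", DEPENDS_ON))  # False
```

A check like this could run before a suggested change is shown to the developer, which is the kind of reasoning a similarity score cannot provide.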

The trust boundary issues are even more severe. If your AI can’t distinguish between a trusted internal service and a third-party dependency when generating code, it’s essentially flying blind through your security model. Vector similarity won’t tell you that internal-auth-service is not the same as random-npm-package-with-similar-name.
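To illustrate, the sketch below compares a name-similarity score with an exact lookup of trust metadata stored on graph nodes. The look-alike package name and the trust labels are hypothetical, and difflib’s string ratio is only a stand-in for “looks similar”, not an embedding.

```python
from difflib import SequenceMatcher

# Hypothetical trust labels stored as node metadata in the graph.
TRUST = {
    "internal-auth-service": "internal",
    "internal-auth-servise": "third_party",  # look-alike package name
}


def name_similarity(a, b):
    """String similarity in [0, 1]; high values mean the names look alike."""
    return SequenceMatcher(None, a, b).ratio()


def is_trusted(name, trust):
    """Exact lookup: unknown or third-party names are never trusted."""
    return trust.get(name) == "internal"


score = name_similarity("internal-auth-service", "internal-auth-servise")
print(round(score, 2))  # 0.95

print(is_trusted("internal-auth-service", TRUST))  # True
print(is_trusted("internal-auth-servise", TRUST))  # False
print(is_trusted("never-seen-package", TRUST))     # False
```

The two names are about 95% alike as strings, yet they sit on opposite sides of the trust boundary. Only the explicit label can tell them apart.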

The Path Forward: Typed Relationships as Non-Negotiable

The controversy here isn’t that vector search is useless, it’s that vector-only RAG is architecturally incomplete for codebases. The debate should shift from “which embedding model is better” to “how do we model typed relationships at scale.”

For teams building AI developer tools, this means:

- Start with the graph: Use static analysis, runtime introspection, and API catalogs to build a living graph of your systems

- Augment with vectors: Use embeddings for fuzzy search within graph boundaries, not as the primary retrieval mechanism

- Expose via MCP: Make your graph queryable through standardized protocols that preserve relationship semantics

- Design for TTL: Not everything deserves to be a node. Ephemeral discussions stay as evidence, decisions become nodes

The spiciest take? Within two years, “vector-only RAG” will sound as outdated as “XML-over-SOAP” does today. The teams that figure out graph-native context modeling now will have AI assistants that actually understand their systems, not just pattern-match their code.

The rest will be stuck debugging why their AI suggested a change that broke production, because it couldn’t see the dependency graph that every junior developer can read from the architecture diagram.