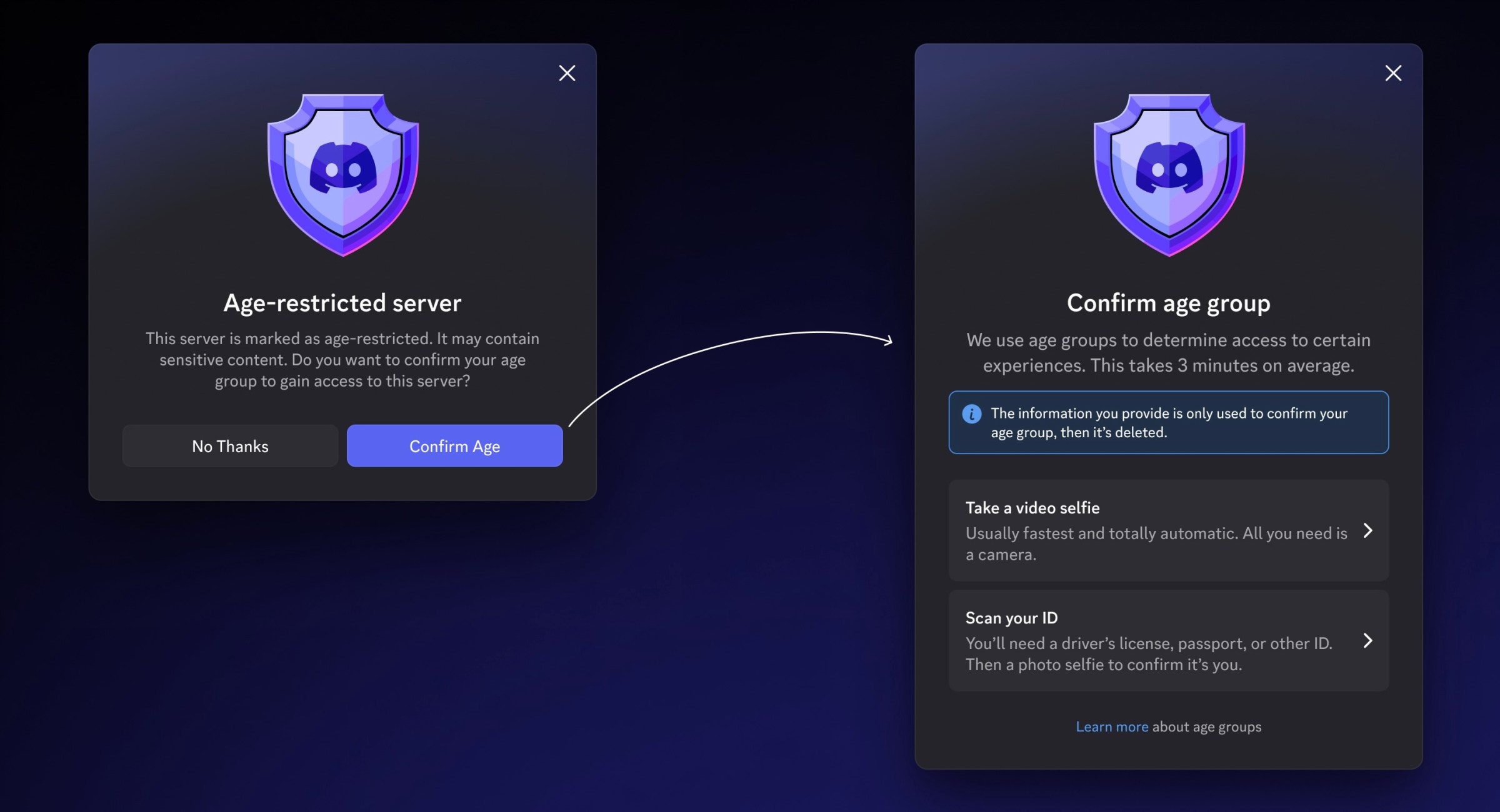

Discord just volunteered to become the internet’s largest case study in how not to architect identity services. Starting next month, the platform will require users to submit to facial scans or government ID verification to access age-restricted content. The company frames this as a safety measure. The reality is a masterclass in architectural overreach, privacy erosion, and the kind of technical hubris that keeps security engineers awake at night.

The announcement reads like a privacy advocate’s worst nightmare: “users can choose to use facial age estimation or submit a form of identification to Discord’s vendor partners.” What they don’t mention is that these “choices” represent two equally problematic architectural patterns, both of which collapse under scrutiny at scale.

The Illusion of “Facial Age Estimation”

Discord insists they’re using “facial estimation”, not facial recognition. This semantic distinction is technically accurate but functionally meaningless. The system still requires capturing a video selfie, processing it through an AI model, and making a determination about your identity characteristics. The fact that Discord claims the video “never leaves the user’s device” only raises more questions about the architecture.

How does verification work if data stays local? The answer reveals the first architectural compromise: either the model runs on-device (creating massive client-side bloat and inconsistent results across devices), or “never leaves” means “is immediately transmitted to a vendor for processing and then deleted.” Discord’s own history suggests the latter interpretation is more likely, especially given that they previously suffered a data breach through a third-party vendor that exposed users’ age verification data, including images of government IDs.

This pattern mirrors the catastrophic failures seen in large-scale API key exposures caused by poor architectural design. When identity systems rely on third-party vendors without robust zero-knowledge architectures, they create single points of failure that can compromise millions of users simultaneously.

The Government ID Trap: Centralization’s False Promise

The alternative, submitting government IDs, represents an even more dangerous architectural pattern: centralized storage of highly sensitive identity documents. Discord claims IDs are “deleted quickly, in most cases, immediately after age confirmation.” But this promise ignores the fundamental reality of distributed systems: deletion is never immediate, backups exist, and vendor systems maintain their own retention policies.

The architectural burden here is staggering. To process IDs at Discord’s scale (150 million monthly active users), the system must:

- Ingest and temporarily store high-resolution document images

- Run OCR and fraud detection algorithms

- Cross-reference against internal databases

- Maintain audit trails for compliance

- Coordinate deletion across multiple vendor systems

Each step introduces latency, cost, and attack surface. The system must be designed to handle peak loads during major gaming releases or viral events, when millions might attempt verification simultaneously. This requires auto-scaling infrastructure that, in practice, replicates data across multiple availability zones, making truly “immediate deletion” a logistical near-impossibility.
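To make the deletion problem concrete, here is a minimal sketch of what "deleted" has to mean once copies exist in more than one place. Everything in it is an assumption for illustration: the `DeletionRequest` class, the holder names, and the document ID are hypothetical and do not describe Discord's or any vendor's actual systems.

```python
from dataclasses import dataclass, field


@dataclass
class DeletionRequest:
    """Tracks one document's deletion across every system holding a copy."""

    document_id: str
    holders: set[str]  # hypothetical systems known to hold a copy
    confirmed: set[str] = field(default_factory=set)

    def confirm(self, system: str) -> None:
        """Record that one holder has confirmed its copy is gone."""
        if system not in self.holders:
            raise ValueError(f"{system} is not a known holder of {self.document_id}")
        self.confirmed.add(system)

    @property
    def outstanding(self) -> set[str]:
        """Holders that have not yet confirmed deletion."""
        return self.holders - self.confirmed

    @property
    def complete(self) -> bool:
        """Deletion is only complete when no holder is outstanding."""
        return not self.outstanding


# Hypothetical holders: names are illustrative only.
request = DeletionRequest(
    document_id="doc-123",
    holders={"upload-bucket", "ocr-vendor", "fraud-vendor", "nightly-backup"},
)
request.confirm("upload-bucket")
request.confirm("ocr-vendor")

print(request.complete)             # False: two holders have not confirmed
print(sorted(request.outstanding))  # ['fraud-vendor', 'nightly-backup']
```

The point of the sketch is the `complete` property: a deletion promise is only as fast as the slowest holder, and a holder nobody listed never shows up as outstanding at all.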

The DHS Cautionary Tale: When Identity Systems Become Surveillance Tools

Discord’s architecture becomes even more troubling when compared to the Department of Homeland Security’s Mobile Fortify program. As documented in recent investigations, DHS deployed a facial recognition app to immigration agents that suffers from the same architectural flaws Discord is now embracing, but with life-altering consequences.

Mobile Fortify uses NEC’s facial recognition algorithms to generate “candidate matches” rather than definitive identifications. The system converts photos into mathematical templates and returns entries that score above adjustable thresholds. As one expert noted, “Every manufacturer of this technology, every police department with a policy makes very clear that face recognition technology is not capable of providing a positive identification.”
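The "candidate match" pattern is easy to sketch in general terms. The code below is a generic illustration of template comparison against an adjustable threshold, not NEC's algorithm, whose internals are not public. The three-number templates, the names, and the threshold values are all made up for the example; real templates have hundreds of dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Similarity of two templates: 1.0 means same direction, 0.0 unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def candidate_matches(
    probe: list[float], gallery: dict[str, list[float]], threshold: float
) -> list[tuple[str, float]]:
    """Return every gallery entry scoring at or above the threshold, best first."""
    scored = [(name, cosine_similarity(probe, tpl)) for name, tpl in gallery.items()]
    hits = [(name, score) for name, score in scored if score >= threshold]
    return sorted(hits, key=lambda item: item[1], reverse=True)


# Toy gallery: hypothetical people with made-up three-dimensional templates.
GALLERY = {
    "person_a": [1.0, 0.0, 0.0],
    "person_b": [0.8, 0.6, 0.0],
    "person_c": [0.0, 1.0, 0.0],
}
probe = [0.9, 0.1, 0.0]

strict = candidate_matches(probe, GALLERY, threshold=0.95)
loose = candidate_matches(probe, GALLERY, threshold=0.80)
print([name for name, _ in strict])  # ['person_a']
print([name for name, _ in loose])   # ['person_a', 'person_b']
```

Nothing about the probe changed between the two calls. Lowering the threshold alone turned one candidate into two, which is why a score above a threshold is a lead, not an identification.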

The architectural parallels are striking. Both systems:

– Prioritize speed over accuracy

– Use adjustable confidence thresholds to meet operational demands

– Store biometric templates that can be re-used across contexts

– Lack meaningful oversight or redress mechanisms

Discord’s system will process millions of selfies. DHS’s system has already been used over 100,000 times in the field. Both operate under the same flawed assumption: that biometric data can be processed at scale without creating permanent surveillance infrastructure.

The difference? Discord’s system will block teens from seeing NSFW channels. DHS’s system has led to US citizens being detained based on “possible matches” and agents using Spanish language skills as probable cause. The architectural pattern is identical; only the stakes differ.

Privacy-Preserving Alternatives: The Architecture Discord Ignored

The technical community has spent years developing privacy-preserving biometric architectures that Discord could have adopted. Zero-knowledge proofs, secure multi-party computation, and on-device processing with cryptographic attestation all offer paths to age verification without creating centralized identity honeypots.

As one industry analysis notes, “Privacy-preserving, zero-knowledge biometric technologies make it possible to re-verify identity at scale without centralizing sensitive data, dramatically reducing both cost and risk.” These approaches allow organizations to verify age group membership without accessing the underlying biometric data.
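A real zero-knowledge proof system is too large to show here, but the underlying idea, proving "at least 18" without revealing the birth date, can be sketched with a far simpler hash-chain commitment. This is a toy stand-in, not a zero-knowledge proof in the formal sense and not anything Discord or its vendors are known to use. It assumes a trusted issuer, omits the issuer's signature over the commitment, and has no replay protection; all function names are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Optional


def hash_chain(value: bytes, steps: int) -> bytes:
    """Apply SHA-256 to value `steps` times."""
    for _ in range(steps):
        value = hashlib.sha256(value).digest()
    return value


def issue_credential(age: int) -> tuple[bytes, bytes]:
    """Issuer side: return (secret seed for the user, public commitment).

    ASSUMPTION: in a real system the issuer would also sign the commitment.
    """
    seed = secrets.token_bytes(32)
    return seed, hash_chain(seed, age)


def prove_at_least(seed: bytes, age: int, threshold: int) -> Optional[bytes]:
    """User side: build a proof of age >= threshold, or None if not possible."""
    if age < threshold:
        return None
    return hash_chain(seed, age - threshold)


def verify_at_least(proof: bytes, commitment: bytes, threshold: int) -> bool:
    """Verifier side: hashing the proof `threshold` times must hit the commitment."""
    return hmac.compare_digest(hash_chain(proof, threshold), commitment)


# A 25-year-old proves "18 or older"; the verifier never sees the number 25.
seed, commitment = issue_credential(age=25)
proof = prove_at_least(seed, age=25, threshold=18)
print(verify_at_least(proof, commitment, threshold=18))  # True

# A 16-year-old cannot produce a valid proof, even by submitting the raw seed.
teen_seed, teen_commitment = issue_credential(age=16)
print(prove_at_least(teen_seed, age=16, threshold=18))          # None
print(verify_at_least(teen_seed, teen_commitment, threshold=18))  # False
```

The verifier ends up holding a commitment and a proof that look like random bytes. If both leak, an attacker learns that someone cleared the threshold, and nothing about their face, document, or exact age.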

Discord’s choice to ignore these architectures feeds a deeper problem: growing user skepticism toward AI-based identity and surveillance features. Users increasingly recognize that “AI-powered” often means “privacy-violating.” When companies like Discord deploy invasive biometric systems under the banner of safety, they accelerate this distrust.

The Scale Problem: Why Discord’s Architecture Will Fail

Let’s talk numbers. Discord handles 150 million monthly active users. If even 10% need verification, that’s 15 million verification events. Each facial scan requires:

– 2-5 seconds of video capture

– 1-3 seconds of AI processing

– Network transmission overhead

– Vendor API response time

At peak load, this could mean thousands of concurrent verification sessions. Although a full verification takes several seconds end to end, each individual API interaction must stay responsive, ideally sub-second, while the system ensures data consistency and security. This requires:

– Load balancers distributing requests across regions

– Message queues handling asynchronous verification callbacks

– Distributed caches storing temporary verification states

– Database replicas maintaining audit trails

Each component adds cost and complexity. A conservative estimate puts the infrastructure cost at $0.10-$0.25 per verification event. For 15 million verifications, that’s $1.5-$3.75 million in direct costs, not including engineering time, vendor fees, or breach insurance.
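The arithmetic in this section is simple enough to check in a few lines. The user count, the 10% share, the per-event cost range, and the capture and processing times all come from the figures above. The two arrival-rate scenarios are assumptions added for illustration: an even spread over a 30-day month, and a hypothetical spike of one million attempts in a single hour. Concurrency is estimated with Little's law (sessions in flight = arrival rate × time in system), and network and vendor latency are left out because no figures are given for them.

```python
MONTHLY_ACTIVE_USERS = 150_000_000  # figure quoted in this article
VERIFICATION_SHARE_PERCENT = 10     # the "even 10%" scenario
COST_CENTS_PER_EVENT = (10, 25)     # the $0.10-$0.25 estimate, in cents
SESSION_SECONDS = (3, 8)            # 2-5 s capture plus 1-3 s processing

verifications = MONTHLY_ACTIVE_USERS * VERIFICATION_SHARE_PERCENT // 100
cost_low, cost_high = (verifications * cents / 100 for cents in COST_CENTS_PER_EVENT)


def concurrent_sessions(arrivals_per_second: float, session_seconds: float) -> float:
    """Little's law: average sessions in flight = arrival rate x time in system."""
    return arrivals_per_second * session_seconds


# ASSUMPTION: all verifications spread evenly across a 30-day month.
even_rate = verifications / (30 * 24 * 3600)
# ASSUMPTION: a hypothetical spike of one million attempts in a single hour.
spike_rate = 1_000_000 / 3600

print(f"verification events: {verifications:,}")
print(f"direct cost: ${cost_low:,.0f} to ${cost_high:,.0f}")
for label, rate in (("even spread", even_rate), ("one-hour spike", spike_rate)):
    low, high = (concurrent_sessions(rate, s) for s in SESSION_SECONDS)
    print(f"{label}: {low:,.0f} to {high:,.0f} concurrent sessions")
```

Under the even-spread assumption the concurrency is tiny, a few dozen sessions. It takes a spike on the order of a million attempts per hour to reach the thousands, which is exactly the kind of burst a platform-wide rollout or a viral event could produce.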

But the real cost is architectural debt. Every component becomes a permanent part of Discord’s infrastructure. The verification system must be maintained, patched, and scaled indefinitely. It also inherits the kind of security risk seen in autonomous agent identity management and API access control, where compromised verification tokens could grant access to restricted content across the platform.

The Trust Collapse: When Verification Becomes Surveillance

Discord’s head of product policy admits they “do expect that there will be some sort of hit” to user numbers. This understatement masks a deeper architectural truth: identity systems that feel like surveillance destroy the social trust that makes platforms viable.

The friction is immediate and severe. Users must:

– Navigate unclear verification flows

– Trust Discord’s vendor partnerships

– Accept that their biometric data might be breached

– Face false positives that lock them out of communities

This mirrors the risks of centralized AI-controlled identities and false autonomy in agent systems. Just as Moltbook’s “autonomous agents” were revealed to be human-controlled puppets, Discord’s “privacy-preserving verification” is a centralized surveillance system wearing a trust-and-safety costume.

The architectural burden extends beyond Discord. Every platform watching this rollout learns that age verification requires massive infrastructure investment, creates permanent privacy liabilities, and alienates users. The result is a chilling effect on innovation in age-appropriate content delivery.

The Inevitable Breach: Architecture’s Law of Large Numbers

Discord’s vendor already suffered one breach. The architectural reality is that another is inevitable. When you process millions of biometric samples year after year, the cumulative probability of a breach approaches 1. The question is not if but when, and how severe.
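The "approaches 1" claim can be made precise under a simple model. If each year carries an independent breach probability p, the chance of at least one breach over n years is 1 − (1 − p)^n. The sketch below assumes p = 5% per year purely for illustration; that number is hypothetical, not a measured rate for Discord or its vendors, and real breach risk is neither constant nor independent year to year.

```python
def cumulative_breach_probability(annual_probability: float, years: int) -> float:
    """Chance of at least one breach in `years`, assuming independent years."""
    return 1.0 - (1.0 - annual_probability) ** years


# ASSUMPTION: a hypothetical 5% chance of a breach in any given year.
ANNUAL_PROBABILITY = 0.05

for years in (1, 5, 10, 25, 50):
    p = cumulative_breach_probability(ANNUAL_PROBABILITY, years)
    print(f"{years:>2} years: {p:.1%}")
```

Even a modest annual risk compounds into a likely breach within a couple of decades, and biometric data stays sensitive for a lifetime.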

A breach of Discord’s system would expose:

– Facial templates that can’t be reset like passwords

– Government IDs with name, address, and date of birth

– Correlation between online personas and real identities

– Age verification decisions that could be used for discrimination

The architectural failure here is fundamental: Discord is building a system that assumes it can protect data forever. No system can. The only defensible architecture is one where a breach reveals nothing, where zero-knowledge proofs verify age without storing biometrics.

The Path Forward: Architecting for Privacy by Design

The alternative architecture exists. It requires:

– On-device processing with cryptographic attestation

– Zero-knowledge proofs that verify age group membership

– Decentralized identity where users control their credentials

– Ephemeral data that exists only during verification

– Transparency through open-source verification protocols

This architecture costs more to develop initially but eliminates the permanent infrastructure burden and breach risk. It’s the difference between building a vault that must be defended forever and creating a system where there’s nothing to steal.
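As a rough illustration of the "ephemeral data" item, here is a sketch of server-side state that holds only a pass/fail outcome, hands it over once, and forgets it after a short time-to-live. The class and method names are hypothetical. An in-memory structure like this says nothing about logs, backups, or vendor copies, which would need the same discipline.

```python
import time
from typing import Callable, Optional


class EphemeralVerificationStore:
    """Keeps only a pass/fail outcome per session, and only for a short TTL."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._outcomes: dict[str, tuple[bool, float]] = {}

    def record(self, session_id: str, is_adult: bool) -> None:
        """Store the outcome only: no image, no template, no birth date."""
        self._outcomes[session_id] = (is_adult, self._clock() + self._ttl)

    def consume(self, session_id: str) -> Optional[bool]:
        """Return the outcome once, then forget it. Expired entries yield None."""
        entry = self._outcomes.pop(session_id, None)
        if entry is None:
            return None
        is_adult, expires_at = entry
        return is_adult if self._clock() < expires_at else None

    def purge_expired(self) -> int:
        """Drop outcomes nobody consumed in time; return how many were removed."""
        now = self._clock()
        expired = [sid for sid, (_, exp) in self._outcomes.items() if exp <= now]
        for sid in expired:
            del self._outcomes[sid]
        return len(expired)


store = EphemeralVerificationStore(ttl_seconds=60)
store.record("session-abc", is_adult=True)
print(store.consume("session-abc"))  # True
print(store.consume("session-abc"))  # None: the outcome is gone after one read
```

If this store is dumped by an attacker, the haul is a handful of booleans keyed by short-lived session IDs.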

Discord’s current path chooses short-term implementation speed over long-term architectural sustainability. They’ve prioritized checking a regulatory box over building a trustworthy system. The result takes the security risks seen in autonomous agent identity management and applies them to human users, a pattern that never ends well.

The Real Controversy: Architecture as Policy

The most insidious aspect of Discord’s verification system is how architectural choices become policy enforcement. When the system misclassifies a user as a teen, that decision isn’t reviewable by a human. The architecture itself becomes the judge, jury, and executioner of access rights.
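One way to keep a human in the loop is to treat an age estimate as a range rather than a verdict. The sketch below is a hypothetical design, not a description of how Discord's system behaves: the `classify` function, the `margin_years` parameter, and its value are assumptions. Any estimate whose error band straddles the threshold is routed to review instead of being decided automatically.

```python
from enum import Enum


class Decision(Enum):
    ADULT = "adult"
    MINOR = "minor"
    NEEDS_REVIEW = "needs_review"


def classify(estimated_age: float, margin_years: float, threshold: int = 18) -> Decision:
    """Decide automatically only when the whole error band is on one side."""
    if estimated_age - margin_years >= threshold:
        return Decision.ADULT
    if estimated_age + margin_years < threshold:
        return Decision.MINOR
    return Decision.NEEDS_REVIEW


# ASSUMPTION: a hypothetical model error of plus or minus 3 years.
print(classify(25.0, margin_years=3.0))  # Decision.ADULT
print(classify(13.0, margin_years=3.0))  # Decision.MINOR
print(classify(19.0, margin_years=3.0))  # Decision.NEEDS_REVIEW
```

The wider the model's honest error margin, the more cases land in review, which is the real cost a fully automated pipeline hides.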

This is the ultimate architectural burden: your system design now determines who can speak, what they can see, and which communities they can join. The code isn’t just processing identity; it’s governing human interaction at scale.

Discord’s verification rollout reveals a truth the tech industry keeps ignoring: identity infrastructure is too critical to be treated as a feature. It requires architectural patterns that prioritize privacy, decentralization, and user control. Architectures that skip those priorities aren’t just technically inferior; they’re dangerous.

As Discord users face the choice between facial scanning and ID submission, they’re not just verifying their age. They’re participating in an architectural experiment that will define how identity works online for the next decade. The question is whether that architecture will protect them or betray them.

The answer, based on Discord’s current design, is already clear. And it’s not the one users deserve.

The Verdict: Discord’s age verification architecture fails every critical test. It centralizes sensitive data, relies on untrustworthy vendors, ignores privacy-preserving alternatives, and creates surveillance infrastructure under the guise of safety. The system will be breached, users will be misclassified, and trust will collapse. The only winners are the vendors selling invasive biometric systems and the lawyers who will litigate the inevitable class-action lawsuits.

For a platform built on community trust, Discord just architected its own privacy nightmare. The only question now is how many users will stick around to see it collapse.