The headline practically writes itself: tech enthusiast sees promising new headset, clicks eagerly, then recoils at the final detail: “it will double as an AI wearable.” Interest evaporates. The tab closes. Another potential customer is lost, not despite the AI features but because of them.

This isn’t a hypothetical. It’s a real anecdote from Reddit’s r/ArtificialIntelligence that crystallizes a phenomenon spreading through consumer tech: AI aversion. After years of relentless AI evangelism, users aren’t just skeptical; they’re actively repelled. The technology that was supposed to be the next big thing might be the thing that pushes people back to “touching grass.”

The Great AI Hangover

Two years ago, the same users now clicking away from AI products were teaching others how to prompt, setting up agents, and preaching the gospel of artificial intelligence. What happened? The research points to a perfect storm of corporate overreach, degraded user experience, and a fundamental breach of trust.

According to a WalkMe study of 1,200 office workers, 71% believe new AI tools are appearing faster than they can learn to use them. Nearly half (47%) feel they should be excited about AI but instead report feeling worried. This isn’t technophobia; it’s exhaustion. The technology has moved from novelty to nuisance at warp speed.

The problem isn’t AI itself, but what one Reddit commenter called “corporate AI.” The distinction matters. Open-source models might glitch in Discord groups, but they don’t shove unwanted features down your throat while harvesting your data for model training and demanding subscription fees. The resentment is targeted squarely at the tech giants who’ve turned AI from a tool into a platform obligation.

The “Microslop” Moment

Microsoft’s AI push has become the poster child for this backlash. The term “Microslop,” a portmanteau of “Microsoft” and “slop” that trended across X, Reddit, and Instagram, captures the sentiment perfectly. It’s not just a meme; it’s shorthand for concrete, reproducible failures: Copilot generating incorrect steps, intrusive UI placements that can’t be disabled, performance degradation on older hardware, and privacy concerns about agents with memory access.

The company’s response has only deepened the wound. When CEO Satya Nadella urged the industry to “move on” from discussing AI’s quality problems, critics saw it as dismissive of legitimate concerns. Microsoft AI leader Mustafa Suleyman’s public incredulity that anyone could be unimpressed by multimodal AI, comparing skeptics to people who’d be amazed by Snake on a Nokia phone, amplified the perception that executives are celebrating technical wonder while ignoring day-to-day usability disasters.

The backlash has real teeth. Windows Forum documents how concentrated social-media sentiment shapes press coverage, influences enterprise procurement, and accelerates regulatory scrutiny. When users feel forced into AI features they don’t want, “Microslop” migrates from joke to market behavior.

The Authenticity Crisis

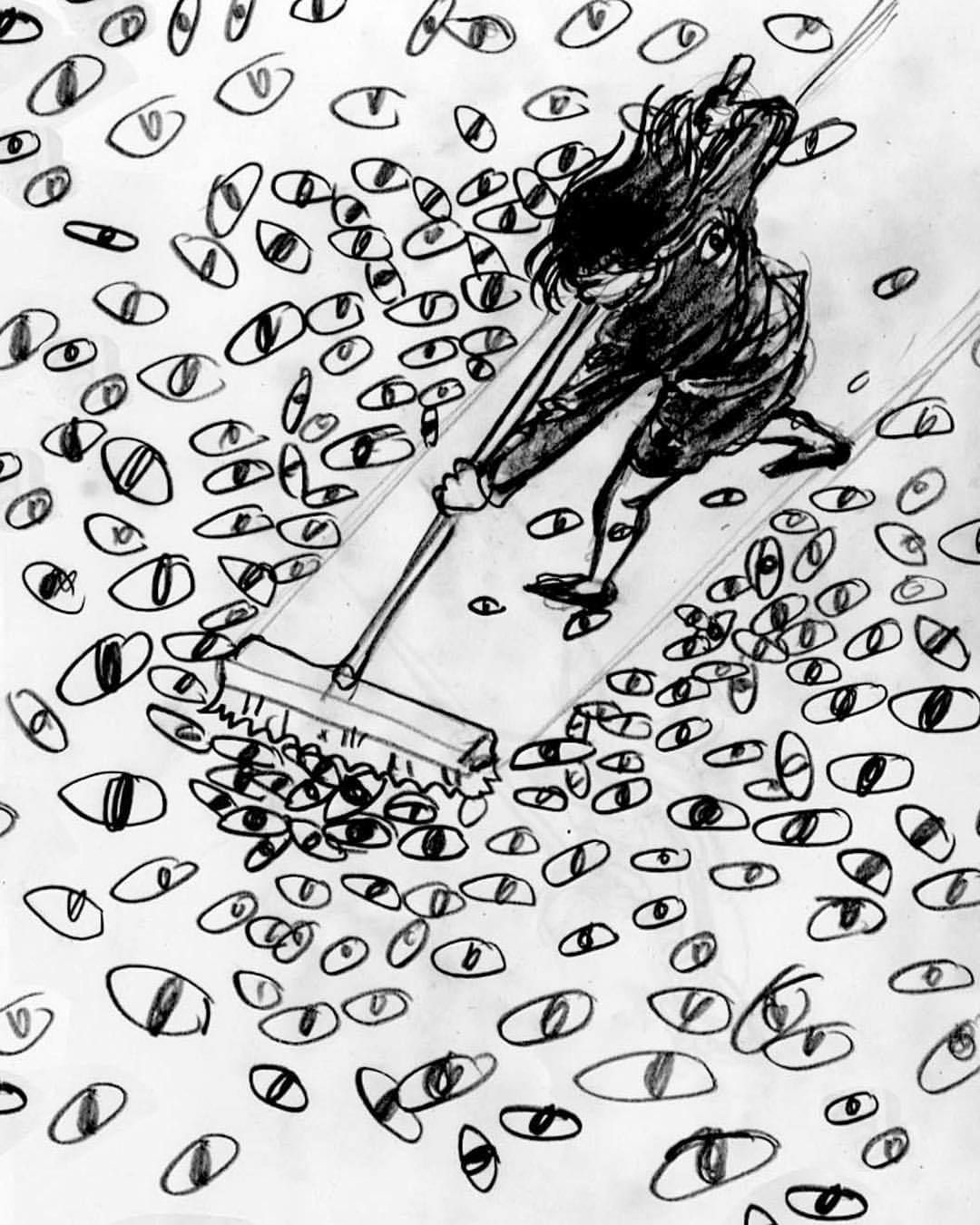

But the aversion runs deeper than buggy features. There’s a creeping sense that AI is poisoning the well of human interaction itself. Reddit users report detecting AI-written content instantly by its tells: the “it’s not about X, it’s about Y” formulations, the mediocre insights, the summaries that summarize nothing. The result? They stop reading. They stop engaging.

One user described the violation of realizing a YouTube video of a trusted political pundit was actually AI-generated: “Shut that shit down immediately and felt violated for being conned.” This isn’t just about preference; it’s about a fundamental breakdown in trust. When bosses favor ChatGPT outputs over their technical experts’ advice, when LinkedIn posts and comments are obviously AI-generated, when even journaling becomes an AI-assisted activity, we’re not just using tools; we’re outsourcing our voice.

The irony is thick: people turn to AI companions because human forums like Reddit are “snarky, rude, and judgmental”, yet the AI content flooding those same forums makes them less valuable. The technology promises connection while eroding the authenticity that makes connection meaningful.

The Privacy-Control Paradox

Microsoft’s Copilot+ PCs, with their 40+ TOPS NPU requirements, exemplify the hardware lock-in problem. The promise of on-device AI processing is shadowed by the reality of forced obsolescence and opaque telemetry. Users can’t inspect what the NPU is actually doing, can’t verify privacy claims, and can’t opt out without disabling core OS features.

The same opacity feeds concerns about what researchers call “surveillance pricing.” When Consumer Reports tested Instacart’s AI-driven pricing, they found the same items cost some users up to 23% more than others based on algorithmic profiling. Some paid $2.99 for Skippy peanut butter while others paid $3.69 for the same product, at the same store, at the same time. Instacart’s stock dropped 6-7% when the findings came out, but the practice is likely endemic.
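The peanut butter prices line up with the headline figure. A quick sanity check in Python, using only the two prices quoted above (the helper name `percent_markup` is made up for this illustration):

```python
def percent_markup(low_price: float, high_price: float) -> float:
    """Return how much more high_price is than low_price, as a percentage."""
    return (high_price - low_price) / low_price * 100


# Prices quoted above for the same jar of Skippy peanut butter.
markup = percent_markup(2.99, 3.69)
print(f"{markup:.1f}%")  # prints "23.4%"
```

A 70-cent gap on a $2.99 item works out to roughly 23%, which matches the “up to 23% more” figure.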

The economic model is clear: AI isn’t just a feature; it’s a vector for extracting maximum value from each transaction. When companies know your willingness to pay, they can capture the entire surplus, leaving consumers free to demand “fairness” but with little chance of receiving it.

Investor Fatigue Meets Consumer Revolt

The skepticism isn’t limited to users. Bloomberg reports that “AI fatigue” is hitting investors, with money flowing out of the Magnificent Seven and into the “other 493” S&P companies. Ed Yardeni, president of Yardeni Research, stated plainly: “I’m tired of it and I suspect a lot of other people are sort of wary of the whole issue.”

Since the S&P 500’s late-October record, Bloomberg’s Magnificent Seven gauge has fallen 2% while the S&P 493 climbed 1.8%. The shift is subtle but meaningful: after three years of AI-driven 78% gains, the market is questioning whether the technology can deliver the promised seismic economic changes.

Goldman Sachs now predicts the Magnificent Seven’s contribution to S&P 500 earnings growth will drop from 50% in 2025 to 46% in 2026, while the rest of the index accelerates from 7% to 9% growth. The message: AI’s economic impact may be real but narrower than promised, and the easy wins are gone.

The Three Traps of AI Adoption

For product managers and engineering leaders, the research reveals three critical failure modes:

- The Perfect Use Case Trap: Waiting for the ideal AI application while competitors iterate and learn. As one expert noted, “None of these are good reasons for not moving forward, but they are often cited as reasons why progress cannot commence.”

- The Initiative Overload Trap: When every department adopts its own AI solution, organizations incur a hidden “AI tax” of duplicated effort, misaligned data, and mounting security risk. This fragmentation, not AI itself, creates the fatigue.

- The Governance Gap Trap: AI agents require new procedural and governance-centric controls: auditable consent flows, granular permissions, transparent telemetry, and third-party verification. Without these, even technically sound solutions fail adoption because trust erodes faster than it builds.

The Path Forward: From Spectacle to Substance

The backlash isn’t a rejection of AI’s potential. It’s a rejection of AI as spectacle. Users aren’t anti-technology; they’re anti-broken-promises. The way forward requires a fundamental shift:

- Ship durable defaults: Privacy-preserving configurations should be baseline, with explicit opt-in for persistent agents

- Publish reproducible benchmarks: Third-party validated performance metrics that separate marketing from reality

- Slow the demo cycle: Fewer, higher-quality previews with concrete success criteria

- Center trust as a product metric: Not just capability, but reliability, auditability, and user control
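To make “durable defaults” and “explicit opt-in” a little more concrete, here is a minimal Python sketch of what that could look like in a settings layer. Everything in it is hypothetical: the `AssistantSettings` class, its field names, and the `opt_in` method are invented for illustration and do not describe any vendor’s actual API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical feature flags; not taken from any real product.
OPT_IN_FEATURES = {"persistent_memory", "share_data_for_training"}


@dataclass
class AssistantSettings:
    """Illustrative settings object where every invasive feature starts off."""

    persistent_memory: bool = False
    share_data_for_training: bool = False
    consent_log: list = field(default_factory=list)

    def opt_in(self, feature: str, user_confirmed: bool) -> bool:
        """Enable a feature only after explicit confirmation; log every decision."""
        if feature not in OPT_IN_FEATURES:
            raise ValueError(f"unknown feature: {feature}")
        # Record grants and refusals alike so the trail can be audited later.
        self.consent_log.append({
            "feature": feature,
            "granted": user_confirmed,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        if user_confirmed:
            setattr(self, feature, True)
        return user_confirmed


settings = AssistantSettings()
settings.opt_in("persistent_memory", user_confirmed=False)  # stays off
settings.opt_in("persistent_memory", user_confirmed=True)   # now on
print(settings.persistent_memory, len(settings.consent_log))
```

The point of the sketch is the shape, not the details: the privacy-preserving state is what you get by doing nothing, and every change away from it leaves a record the user (or an auditor) can inspect.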

As one Reddit commenter put it, the goal should be AI that “just gets the F out of the way.” Not AI that assists with everything, but AI that assists with the right things and disappears otherwise.

The “Microslop” moment is a cultural inflection point. It signals that consumers and enterprise customers will not accept an AI-first future delivered through volume, spectacle, and opaque defaults. For Microsoft and other major AI vendors, success now depends on operational discipline: demonstrable reliability, auditable governance, and user-centric design.

If they fail? The technology that was supposed to revolutionize everything might instead become the reason people buy simpler, dumber, more trustworthy devices. And for an industry built on perpetual upgrade cycles, that might be the most terrifying outcome of all.

What do you think? Are you experiencing AI fatigue? Have you avoided products specifically because they were “AI-enabled”? The comments are open, no AI-generated responses allowed.