Configuration Is Code at Runtime, But We Design It Like a Text File: The Architectural Inconsistency Costing You Outages

Configuration directly controls system behavior at runtime, yet we apply a fraction of the rigor used for application code. This architectural blind spot explains why most major outages trace back to config changes, and why stronger typing, semantic validation, and gradual rollouts aren’t just nice-to-haves but fundamental requirements.

The Runtime Reality: Configuration Is Code

When your application boots, it doesn’t magically know how to behave. It reads configuration, JSON, YAML, environment variables, config maps, and transforms those values into conditional logic, network calls, and resource allocations. The runtime doesn’t distinguish between if (featureEnabled) coming from a compiled binary versus a configuration flag. Both execute with equal power.

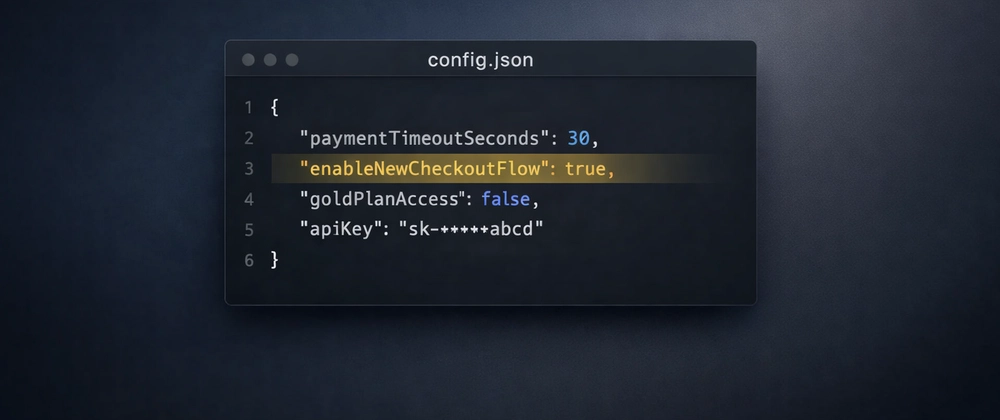

Consider this typical configuration snippet:

{

"paymentTimeoutSeconds": 30,

"enableNewCheckoutFlow": true,

"goldPlanAccess": true,

"stripeApiKey": "sk_live_..."

}Technically, these are just values. But semantically, they represent four fundamentally different concerns:

– Baseline behavior (timeout)

– Feature rollout (checkout flow toggle)

– Entitlement (plan access)

– Security boundary (API key)

The runtime treats them identically. A boolean is a boolean. But the system implications couldn’t be more different. Change the timeout to 0 and you’ve triggered a cascade of failures. Flip the feature flag and you’ve rolled out untested code paths to millions. Expose the API key and you’ve created a security incident. The code consuming this configuration doesn’t care, it executes what’s given. This is precisely why uncoordinated system design leading to emergent failures remains such a persistent problem.

The Design Gap: Where Rigor Goes to Die

Application code gets type systems, unit tests, integration suites, code review, static analysis, and gradual rollouts. Configuration gets a JSON schema if you’re lucky. The architectural inconsistency is stark:

| Aspect | Application Code | Configuration |

|---|---|---|

| Type Safety | Strong typing, compile-time checks | Stringly-typed, runtime parsing |

| Validation | Unit tests, integration tests | Syntactic schema validation (maybe) |

| Review | Mandatory code review, approval gates | Often direct to production |

| Deployment | Canary, blue-green, feature flags | Atomic replacement, instant propagation |

| Tooling | IDEs, linters, debuggers | Text editors, manual verification |

| Failure Visibility | Stack traces, logs, metrics | Silent misbehavior, cryptic errors |

This gap isn’t theoretical. The GitHub issue for Claude Desktop’s network egress settings reveals a perfect microcosm: selecting “All domains” in the UI sets a * pattern that the application’s own validation rejects on startup. The UI and validation logic disagree on what’s valid, creating a contradictory state that blocks users entirely. This is configuration failing at the semantic level, technically valid according to one part of the system, semantically invalid according to another.

The prevailing sentiment among experienced engineers is that configuration validation is a solved problem: just exit on invalid config. But this misses the point entirely. If you roll out a malformed config to thousands of pods simultaneously and they all refuse to start, you’ve achieved a denial-of-service attack against yourself. Code changes fail gradually, configuration changes fail instantly and catastrophically.

The Failure Patterns: When Config Becomes a Weapon

Most big tech outages from the 2010s share a root cause: bad configuration updates. Unlike code deployments that naturally support gradual rollouts, configuration changes often propagate instantly across entire fleets. Update a config map in Kubernetes and every pod sees it more or less immediately. The system replaces resources synchronously rather than rolling them gradually.

This isn’t just historical. Cloudflare’s major outage last year traced directly to configuration changes, prompting arguments for universal gradual configuration rollouts. The pattern repeats because the tooling and culture around configuration hasn’t evolved with its impact.

The problem runs deeper than deployment strategy. Configuration often gets validated only syntactically, not semantically. A config file might pass schema validation but contain:

– Network addresses that are syntactically valid but point to non-existent services

– Timeout values that are positive integers but cause systemic latency

– Feature flags that are correctly formatted but create circular dependencies

– Secrets that are properly encoded but exposed in logs due to being treated as strings

Semantic validation requires understanding intent, not just structure. When everything is a string or boolean, the system can’t distinguish between a timeout and a feature flag. This is where treating architectural decisions as code with version control and documentation rigor becomes essential, configuration is architecture, frozen in YAML.

The Semantic Void: Why Strong Typing Isn’t Enough

The DEV Community article “Configuration Needs Semantics” argues persuasively that configuration requires meaning beyond data types. Four categories, configuration, secrets, feature flags, and entitlements, often get conflated despite answering different questions:

Configuration sets baseline system behavior (timeouts, thresholds). It’s stable, changes infrequently, and isn’t security-sensitive.

Secrets represent confidential data requiring protection. A secret may be “just a string” technically, but semantically it’s a security constraint. Store it as a string and it will eventually be logged, not from malice, but invisibility.

Feature Flags are temporary activations of behavior. They have expected end-of-life and create maintenance pressure. Without expiration tracking, warnings, or mechanisms making overstaying visible, you’ve created permanent configuration with a temporary label.

Entitlements grant long-term access to behavior. They’re contractually relevant, audit-sensitive, and rarely removed.

From an implementation perspective, feature flags and entitlements look identical: a boolean, a rule evaluated against context. But semantically, they create wildly different expectations:

| Aspect | Feature Flag | Entitlement |

|---|---|---|

| Expected Lifetime | Temporary | Long-term |

| Removal | Expected | Rare |

| Review Strictness | Often relaxed | High |

| Audit Relevance | Low | High |

When these distinctions blur, systems don’t break immediately, they accumulate misunderstandings. A capability might start as a feature flag during rollout, then become an entitlement for a paid plan. Without explicit migration, you end up with permanent flags and unclear contracts.

The Tooling Problem: Why We Can’t Have Nice Things

If configuration is so critical, why don’t we have better tools? Partly because configuration spans multiple domains: developers write it, operators deploy it, product managers request changes to it. No single group owns it entirely, so no group prioritizes tooling for it.

The suggestion to “just use a strongly typed language” instead of YAML misses the ecosystem reality. Configuration needs to be human-readable, version-controlled, diffable, and editable by non-developers. A compiled language fails on accessibility. But that doesn’t mean we accept the status quo.

What’s needed is a middle ground: configuration languages with:

– Gradual type systems that catch errors before deployment

– Semantic validators that understand intent, not just syntax

– Built-in expiration for temporary flags with automated warnings

– Access control distinguishing secrets from plain values

– Deployment strategies supporting gradual rollouts, canaries, and automatic rollback

Dhall-lang.org represents one attempt at this middle ground, offering a programmable configuration language with strong guarantees. Yet adoption remains limited because the ecosystem around it, IDE support, debugging tools, migration paths, remains immature.

The Cultural Problem: “It’s Just a Config Change”

The most dangerous phrase in software engineering is “it’s just a config change.” This mindset leads to:

– Skipping code review for config updates

– Deploying config changes during off-hours without proper monitoring

– Treating configuration as “not real code” in team processes

– Accumulating technical debt in YAML files that never get refactored

This self-deception creates maintenance nightmares. Teams tell themselves “we’ll clean this up later” about feature flags that become permanent. They say “it’s basically a permission” about entitlements managed as config. The system still runs, but planning becomes unreliable and maintenance decisions lose clarity.

The cultural gap mirrors the tooling gap. Until configuration gets first-class status in team processes, reviewed, tested, and owned with the same rigor as application code, the failures will continue.

A Path Forward: Configuration as Code, For Real This Time

1. Semantic Validation at the Edge

Validate configuration semantically before it reaches production. This means:

– Type-checking that understands domain-specific constraints (timeouts must be > 0, addresses must be reachable)

– Dependency analysis ensuring flags don’t create circular logic

– Security scanning treating secrets as first-class citizens with different rules

– Impact simulation predicting blast radius of config changes

2. Gradual Rollouts for Config

Configuration changes need the same deployment sophistication as code:

– Canary deployments for config changes, testing new values on a small subset of instances

– Automatic rollback when metrics degrade after a config update

– Versioning and branching for configuration, allowing A/B testing of different config values

– Blue-green deployment swapping entire config sets rather than mutating in place

3. Explicit Semantic Categories

Adopt the four-category model explicitly in your systems:

– Configuration: Baseline behavior, infrequently changed, no expiration

– Secrets: Confidential data, security boundaries, rotated regularly

– Feature Flags: Temporary activations with mandatory expiration dates and automated warnings

– Entitlements: Long-term access, contractually relevant, audit-sensitive

Moving between categories should require explicit migration, not accidental drift. A feature flag that becomes permanent must be promoted to an entitlement through a deliberate process.

4. Tooling That Understands Intent

Build or adopt tooling that treats configuration as a first-class artifact:

– IDE extensions providing autocomplete, type hints, and real-time validation

– CI pipelines running config linters and semantic validators

– Observability tracking which config values changed before incidents

– Documentation generators extracting intent from config schemas

This approach aligns with extreme testing rigor in mission-critical monolithic systems. SQLite’s 590x test-to-code ratio exists because they treat every input, including configuration, as potentially malicious. We need similar paranoia for distributed systems.

The Internal Linkage: Where This Fits in Modern Architecture

This configuration crisis doesn’t exist in isolation. It connects to broader architectural challenges:

Uncoordinated system design creates emergent failures where no single code change is wrong, but everything breaks. Configuration is often the coordination mechanism that fails silently. The risks of treating working systems as secure or stable without rigorous governance apply directly, configuration that “works” can still be a massive security liability.

Long-term system reliability requires understanding how systems evolve over decades. The Internet Archive’s infrastructure challenges include managing configuration for 20-year-old codebases. Their approach to long-term system reliability and legacy infrastructure challenges offers lessons in treating configuration as a preserved artifact, not a transient value.

Architectural decision records need to include configuration changes. If we believe in treating architectural decisions as code with version control and documentation rigor, then configuration changes, especially semantic ones, must be captured as architectural decisions with the same review and approval process.

The Controversial Take: Configuration Should Be Harder to Change Than Code

Here’s the spicy conclusion: Configuration changes should require more rigor than code changes, not less. **

Code changes are typically isolated, reviewed, tested, and gradually deployed. Configuration changes affect entire systems instantly, often bypass review, and get tested only in production. This is backwards.

A configuration change that disables authentication or sets a timeout to zero can cause more damage than a buggy code deployment. Yet we allow direct edits to production config while requiring three approvals for a one-line code change.

The solution isn’t to make configuration changes harder in a bureaucratic sense. It’s to apply the same engineering discipline: type safety, automated testing, gradual rollouts, and semantic validation. Configuration should be code, version-controlled, tested, and deployed with tooling that understands its impact.

Until then, we’ll keep treating our most powerful runtime code like a shopping list, and the outages will keep coming. The question isn’t whether configuration is code. The question is how many outages it takes before we start acting like it.