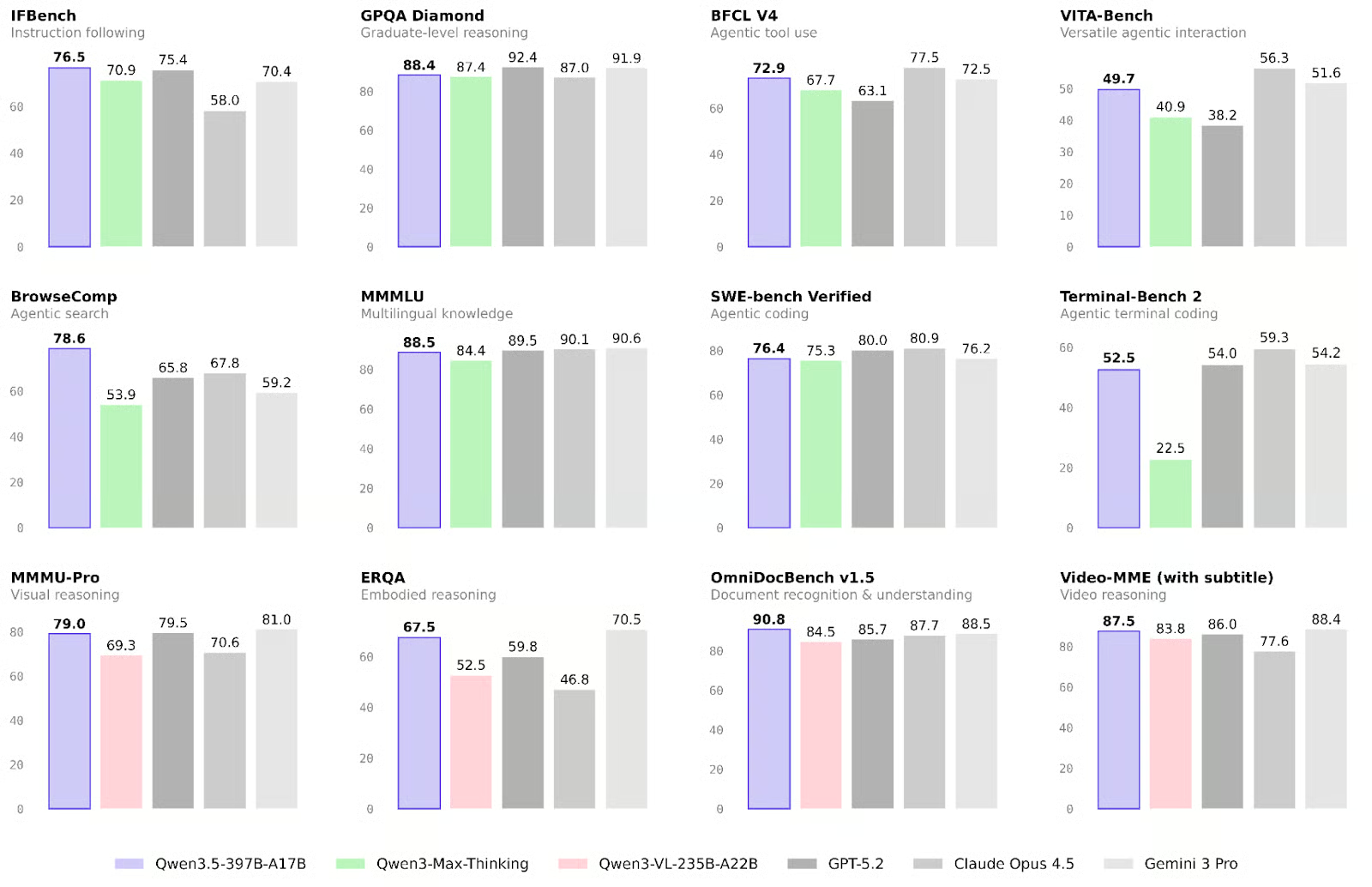

Alibaba dropped Qwen3.5-397B-A17B on Lunar New Year’s Eve while Western labs were still nursing their holiday hangovers. The timing wasn’t subtle, and neither are the claims: a 397-billion-parameter model that activates only 17 billion per token, delivers top-tier performance without chain-of-thought reasoning, and ranks #3 on the Artificial Analysis Intelligence Index, right behind GPT-5.2 and Claude Opus 4.5.

The AI community’s reaction? A collective shrug and a Reddit thread titled “We are so spoiled, that a 400b model stronger than Sonnet isn’t impressing us.”

The Efficiency Revolution Nobody Asked For (But Everyone Needed)

Let’s cut through the marketing fluff and look at the architecture. Qwen3.5-397B-A17B is a sparse Mixture-of-Experts (MoE) model with a 4.3% activation ratio. That means for any given token, only 17 billion parameters fire up while the other 380 billion sit idle.

One Reddit user captured the significance: “The efficiency of Qwen 3.5 is actually insane. 397B total parameters but only 17B active? That’s a massive win for inference costs while keeping performance on par with much ‘heavier’ models.”

But here’s where it gets controversial. That 4.3% sparsity ratio? It’s actually less aggressive than some competitors. Kimi’s latest model runs at 3.25% sparsity. So is Alibaba being conservative, or are they hiding inefficiencies in their routing algorithm?

The “No Chain-of-Thought” Claim: Innovation or Limitation?

Here’s where Alibaba’s marketing gets spicy. They’re explicitly positioning Qwen3.5 as a model that doesn’t need chain-of-thought reasoning, claiming this makes it “2x cheaper” than competitors that rely on extensive internal deliberation.

One developer on r/LocalLLaMA put it bluntly: “What is really good is fact that it is capable of good outputs even without thinking! Some latest models depend on thinking part really much and that makes them ie 2x more expensive.”

But is this a feature or a bug? Chain-of-thought reasoning emerged because models needed to show their work to maintain accuracy on complex tasks. If Qwen3.5 can match performance without that intermediate step, it suggests a more efficient internal representation.

The Llama Dominance Challenge: Real or Mirage?

Let’s address the elephant in the room. Llama has become synonymous with open-source AI, with Meta’s models forming the backbone of countless startups and research projects. But Llama’s “openness” comes with significant strings attached, the license restricts commercial use for companies with over 700 million monthly active users, and the weights are released under a custom commercial license, not a true open-source license.

Alibaba is playing a different game. Qwen3.5-397B-A17B is released under Apache 2.0, the gold standard for open-source software. No usage restrictions. No commercial limitations. No strings attached.

This is where Alibaba’s Qwen3.5-397B-A17B’s performance and open-source challenge to closed models becomes more than a technical comparison, it’s a philosophical statement. While Meta hedges its bets with semi-open models, Alibaba is betting that true openness will win the enterprise market.

The Multimodal Angle: Where Qwen3.5 Actually Shines

While everyone’s obsessing over parameter counts, Qwen3.5’s native multimodal capabilities might be its real differentiator. Unlike models that bolt vision capabilities onto a language backbone, Qwen3.5 fuses text, image, and video tokens from the first pretraining stage.

The results are impressive: 90.8 on OmniDocBench v1.5, outperforming GPT-5.2 (85.7), Claude Opus 4.5 (87.7), and Gemini 3 Pro (88.5). For document understanding and visual reasoning tasks, this puts Qwen3.5 in elite company.

But the truly innovative feature is visual agentic capability. The model can analyze UI screenshots, detect interactive elements, and execute multi-step workflows across applications. This isn’t just about understanding images, it’s about acting on visual information.

The Cold Hard Reality of Local Deployment

Here’s where the hype meets the hard drive. Qwen3.5’s efficiency claims are based on activation sparsity, but you still need to load all 397 billion parameters into memory. The model weights alone are over 800GB in FP16.

The community has found workarounds. One developer reported running a quantized version on a 128GB Mac with reasonable performance. Another linked to a GGUF quant that runs in under 128GB RAM+VRAM total, noting it “can fit a good enough quant on a 128GB mac too.”

But as one engineer pointed out: “Yes but why? What use is a Q1? It’d be completely brain dead!” The extreme quantization needed to fit these models on consumer hardware comes with significant quality degradation.

The Efficiency Paradox: Are We Measuring the Right Thing?

The deeper controversy around Qwen3.5 isn’t about benchmarks or licenses, it’s about how we define progress in AI. For three years, the community has chased parameter counts, reasoning that bigger models equal better performance. Alibaba is arguing that we’ve been measuring the wrong thing.

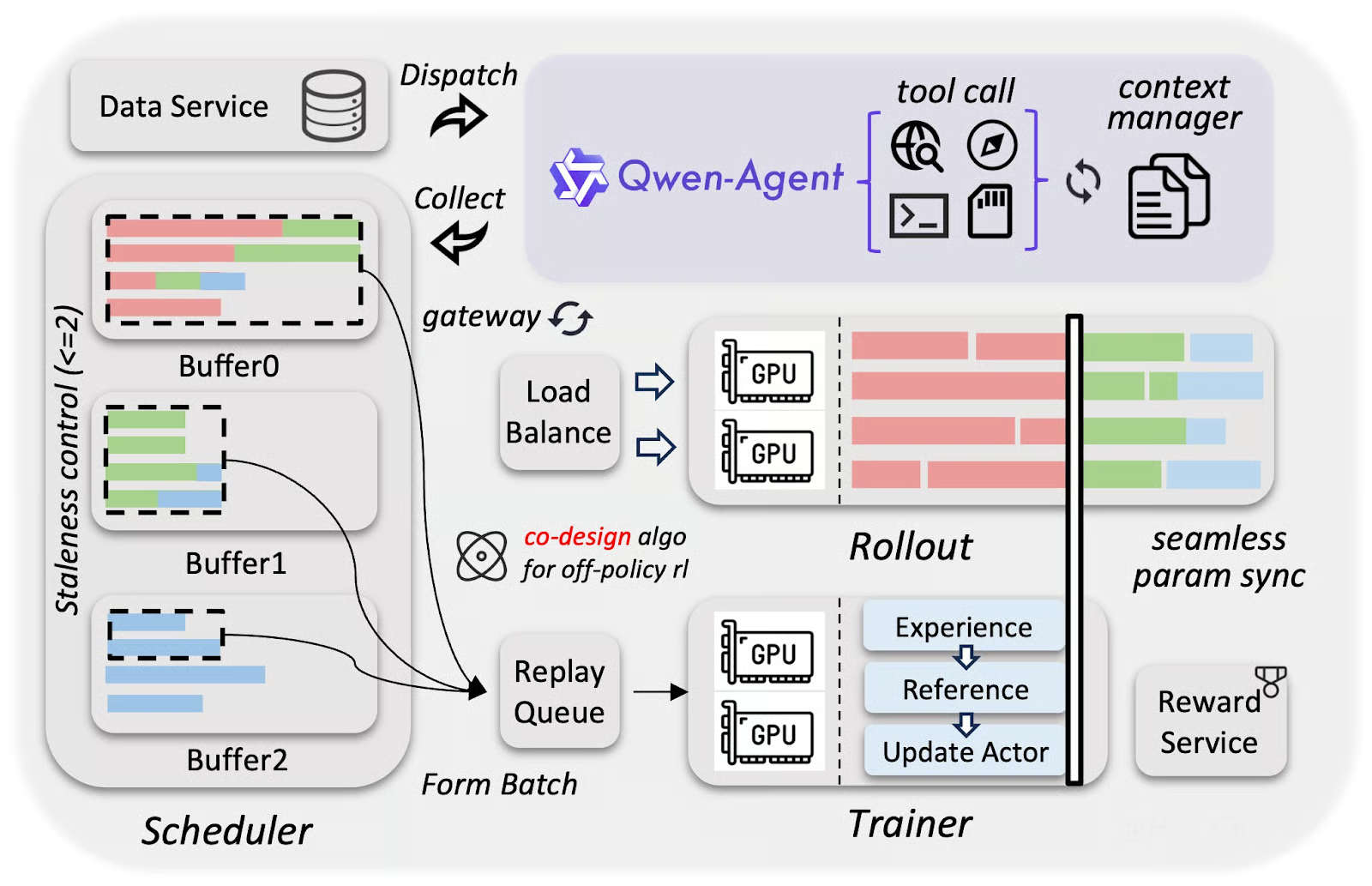

The model’s development process reveals the strategy. Alibaba used asynchronous reinforcement learning and FP8 compression to train complex agent skills 3-5x faster than traditional methods. The heterogeneous infrastructure trains vision and language components simultaneously, achieving almost 100% training throughput compared to pure text models.

This isn’t just about making models cheaper to run, it’s about making them faster to train, easier to iterate, and more accessible to deploy. In a world where GPU clusters are the new oil fields, efficiency isn’t just a feature, it’s a strategic advantage.

The Verdict: Llama’s Reign Isn’t Over, But It’s No Longer Uncontested

So does Qwen3.5-397B actually challenge Llama dominance? The answer is nuanced.

On raw performance, it matches or exceeds Llama 3.1 405B on most benchmarks while being significantly more efficient. On licensing, Apache 2.0 is objectively more permissive than Llama’s custom commercial license. On capabilities, native multimodal and visual agentic features put it in a different category entirely.

What This Means for Developers

If you’re building AI applications, Qwen3.5-397B-A17B deserves your attention, not because it’s perfect, but because it represents a different philosophy. The Apache 2.0 license means true freedom to build commercial products without legal ambiguity. The efficiency gains translate directly to lower hosting costs. The multimodal capabilities open new application possibilities.

But you should also be skeptical. Test the model on your specific use cases. Validate the benchmark claims. Measure the actual latency and cost savings in production. The AI community’s collective yawn at a #3 ranking on AAII reflects a healthy skepticism born from watching too many models promise the moon and deliver a telescope.

The most important contribution Qwen3.5 makes might not be its performance, but its proof that efficiency and openness can be competitive advantages. In a field dominated by scaling laws and compute clusters, that’s a genuinely revolutionary idea.

Whether you’re evaluating Qwen3.5-397B-A17B’s performance and open-source challenge to closed models for production deployment or exploring Qwen3-VL making multimodal AI more capable and accessible, the landscape is shifting. The question is: are you building for the past, or for the efficient future Alibaba is betting on?

The efficiency revolution isn’t coming. It’s already here, quietly ranking #3 while everyone else was on vacation.