“Why do people say AI uses water? Doesn’t it just evaporate and go back into the cycle?” The top reply, sitting at 46 upvotes, offered a technically correct but morally bankrupt answer: “It doesn’t use water in the sense that it disappears from earth, but it does turn potable water into other forms of water.”

This is the kind of pedantic nonsense that lets the AI industry hide its growing environmental theft behind middle-school science. Yes, water molecules aren’t destroyed when data centers suck them in. No, that doesn’t mean your ChatGPT queries are carbon-neutral or consequence-free. The controversy isn’t about the global water cycle; it’s about who gets to drink and who gets to code.

The Technical Reality: Evaporative Cooling Isn’t a Victimless Crime

Modern AI data centers run hot. A single NVIDIA GB200 NVL72 rack pulls 132 kilowatts, enough to power roughly 100 homes. Traditional air cooling tops out at around 15 kilowatts per rack. The industry’s solution? Evaporative cooling towers that turn drinking water into vapor and dump it into the atmosphere.
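As a rough check on the “100 homes” comparison, the sketch below converts an annual household electricity figure into an average continuous draw and divides the rack’s load by it. The ~10,500 kWh/year household figure is an assumption, roughly in line with the EIA’s US residential average; a different local figure moves the answer proportionally.

```python
# Rough check: how many average homes match one rack's continuous draw?
# ASSUMPTION: ~10,500 kWh/year per household (close to the EIA US average).
HOURS_PER_YEAR = 8_760

def homes_powered(rack_kw: float, household_kwh_per_year: float) -> float:
    """Homes whose average demand equals a rack running flat out."""
    avg_household_kw = household_kwh_per_year / HOURS_PER_YEAR
    return rack_kw / avg_household_kw

RACK_KW = 132.0         # GB200 NVL72 rack, figure from the text
AIR_COOLING_KW = 15.0   # practical air-cooling ceiling, figure from the text

homes = homes_powered(RACK_KW, household_kwh_per_year=10_500)
ratio = RACK_KW / AIR_COOLING_KW
print(f"about {homes:.0f} homes; {ratio:.1f}x the air-cooling ceiling")
```

Comparing against average rather than peak household demand is the fairer basis here, since a rack runs near its rated load around the clock.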

Here’s what the “it just evaporates” crowd misses: that water comes from somewhere specific. Data centers tap municipal drinking supplies, not mythical global reserves. A small-to-mid-sized facility in Texas consumes roughly 300,000 gallons of potable water daily. For perspective, that’s about 1,000 households’ worth of water turned into humidity so your chatbot can generate slightly better haiku.
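The household comparison is simple division, sketched below. The per-household figure is an assumption taken from EPA WaterSense, which puts an average American family’s home use at “more than 300 gallons” a day; using exactly 300 makes the household count an upper bound.

```python
# Household equivalent of a facility's daily draw.
# ASSUMPTION: EPA WaterSense says an average American family uses "more than
# 300 gallons" a day at home; using exactly 300 makes this an upper bound.
def household_equivalent(facility_gal_per_day: float,
                         household_gal_per_day: float = 300.0) -> float:
    return facility_gal_per_day / household_gal_per_day

FACILITY_GAL_PER_DAY = 300_000  # small-to-mid-sized Texas facility, from the text

households = household_equivalent(FACILITY_GAL_PER_DAY)   # 1000.0
annual_million_gal = FACILITY_GAL_PER_DAY * 365 / 1e6      # 109.5
print(f"{households:.0f} households; {annual_million_gal:.1f} million gallons/year")
```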

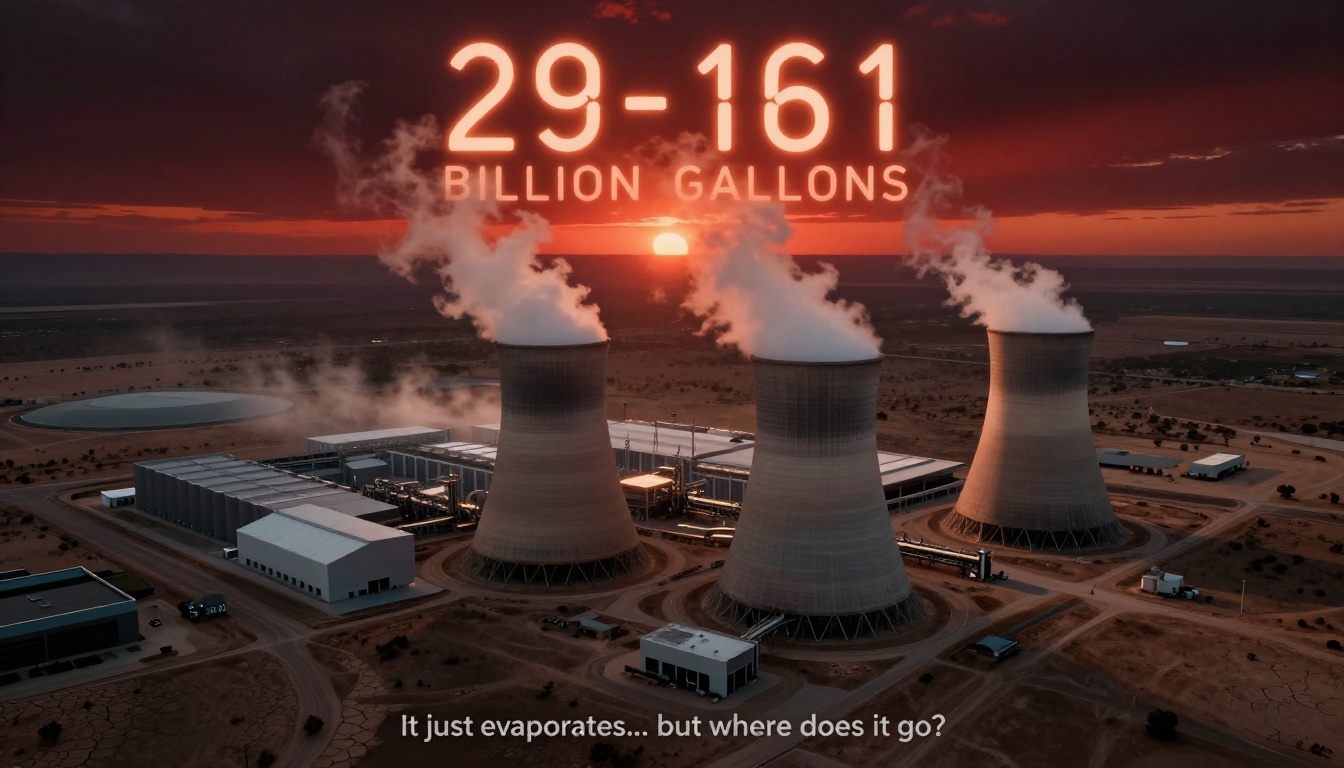

The Houston Advanced Research Center dropped a bombshell report in January: Texas data centers already guzzle 25 billion gallons annually. By 2030, that could explode to as much as 161 billion gallons (the low-end projection is 29 billion). The high end represents nearly 3% of Texas’ entire water usage; for reference, that’s more than the entire state uses for manufacturing. All this while Texas voters just approved a $20 billion water program to address existing shortages.
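A quick sanity check on those figures: the sketch computes the growth range and the statewide total implied by the “nearly 3%” claim. The statewide total is derived, not quoted from the report, and because “nearly 3%” sits just below 3%, the true implied total would be slightly higher. The acre-foot conversion is the standard one, rounded.

```python
# Sanity check on the HARC figures quoted above. The statewide total is
# *implied* from the "nearly 3%" claim, not taken from the report itself.
CURRENT_BGAL = 25                 # billion gallons/year today
LOW_2030, HIGH_2030 = 29, 161     # billion gallons/year projected for 2030
HIGH_END_SHARE = 0.03             # high end is "nearly 3%" of statewide use
GAL_PER_ACRE_FOOT = 325_851       # standard conversion, rounded

growth_low = LOW_2030 / CURRENT_BGAL      # 1.16x
growth_high = HIGH_2030 / CURRENT_BGAL    # 6.44x
implied_total_bgal = HIGH_2030 / HIGH_END_SHARE                          # ~5,367 billion gal
implied_total_maf = implied_total_bgal * 1e9 / GAL_PER_ACRE_FOOT / 1e6   # ~16.5 million acre-ft

print(f"2030 growth: {growth_low:.2f}x to {growth_high:.2f}x")
print(f"implied statewide use: ~{implied_total_maf:.1f} million acre-feet/year")
```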

The Regulatory Black Hole

Texas doesn’t require data centers to disclose water consumption. Not projected use. Not actual use. Nothing. This creates what former Texas water regulator Carlos Rubinstein calls a “structural blind spot” in state planning. Regional water groups can’t petition for plan amendments because they have no data. Data centers approach small towns with promises of tax revenue while conveniently omitting that they’ll become the community’s largest water consumer overnight.

Project Matador, Rick Perry’s 18-million-square-foot AI campus near Amarillo, is planned around four 1-gigawatt nuclear reactors. The developers refused to comment on water use. Why would they? There’s no law forcing disclosure. This is how you build infrastructure that locks in decades of freshwater demand before regulators can blink.

The Texas GOP’s solution? Force AI data centers to follow oil and gas water protocols. Let that sink in. The party of deregulation wants AI held to the same standards as fracking operations because the water crisis is that obvious. ExxonMobil now uses 87% recycled “produced water” in the Permian Basin. AI data centers? Zero percent required.

The Efficiency Mirage: Liquid Cooling as Savior and Enabler

Schneider Electric’s analysis shows direct-to-chip liquid cooling cuts water use by up to 60%, and paired with dry coolers it can eliminate cooling-tower consumption entirely. NVIDIA claims its Blackwell platform delivers 300x better water efficiency through closed-loop systems. The liquid-cooling market is projected to surge from $2.8 billion in 2025 to $21 billion by 2032.
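To put that market forecast in annual terms, the sketch below computes the compound annual growth rate implied by the two quoted endpoints. It is pure arithmetic on the cited figures and says nothing about whether the forecast itself is sound.

```python
# Implied compound annual growth rate (CAGR) of the market forecast cited above:
# $2.8B in 2025 growing to $21B in 2032.
def cagr(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

rate = cagr(2.8, 21.0, 2032 - 2025)
print(f"implied growth: {rate:.1%} per year")  # roughly a third per year
```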

But here’s the perverse incentive: efficiency gains enable bigger facilities. Liquid cooling lets operators pack 250 kilowatts into a single rack. These systems run on warm water (45°C) instead of chilled water, eliminating energy-intensive chillers. That unlocks “gigawatt-scale” AI superfactories that consume less water per unit of compute but vastly more water overall.

It’s Jevons paradox with a vengeance. Making AI cooling more efficient doesn’t reduce total consumption; it accelerates the arms race. Google’s infrastructure chief admitted the company is doubling capacity every six months. That 300x efficiency gain evaporates when you’re building 1,000x more capacity.
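The Jevons arithmetic can be made explicit: total water is capacity multiplied by water per unit of capacity. Six-month doublings reach the “1,000x” mark after about five years (2^10 = 1,024). The sketch assumes, for simplicity, that the 300x per-unit gain applies uniformly to all capacity; the five-year horizon is illustrative.

```python
import math

# Jevons-style arithmetic: total water = (capacity) x (water per unit of capacity).
# ASSUMPTIONS: the 300x per-unit gain applies to all capacity, and capacity
# doubles every six months as the text reports; the horizon is illustrative.
EFFICIENCY_GAIN = 300.0
DOUBLING_PERIOD_YEARS = 0.5

def capacity_multiplier(years: float) -> float:
    return 2 ** (years / DOUBLING_PERIOD_YEARS)

def total_water_multiplier(years: float) -> float:
    """Above 1.0 means total water use rises despite the efficiency gain."""
    return capacity_multiplier(years) / EFFICIENCY_GAIN

# Years of doubling needed to cancel out the 300x gain entirely.
breakeven_years = DOUBLING_PERIOD_YEARS * math.log2(EFFICIENCY_GAIN)

print(f"5 years: capacity x{capacity_multiplier(5):.0f}, "
      f"total water x{total_water_multiplier(5):.2f}")
print(f"break-even after ~{breakeven_years:.1f} years")
```

Under these assumptions the 300x gain is fully consumed after a little over four years of doubling, and everything after that is net growth in total water use.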

The Misdirection: Throughput vs. Consumption

Data center operators love to cite “water use efficiency” metrics built on total water circulated, not consumed. Cycling 1,000 gallons through a system 1,000 times registers as 1 million gallons of throughput, nearly all of it “recycled”, when the number that matters locally is the 1,000 gallons actually extracted from local supplies.

This is how companies greenwash their impact. They’ll report “water recycling rates” of 95% while still evaporating 15,000 gallons per day from a rural Texas town’s aquifer. The water they “recycle” is the same water they’re actively depleting. It’s accounting fraud with a sustainability label.
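The trick is in the denominator. The sketch below assumes a recycling rate defined as one minus make-up water over water circulated (an illustrative definition, not any specific operator’s formula) and shows how both examples in the text produce flattering rates while the basin still loses every gallon of make-up water.

```python
# A "recycling rate" computed against water circulated can look excellent
# while the basin still loses every gallon of make-up water.
# ASSUMPTION: recycling rate = 1 - (make-up water / water circulated).
def recycling_rate(circulated_gal: float, makeup_gal: float) -> float:
    return 1 - makeup_gal / circulated_gal

def circulation_for_rate(makeup_gal: float, rate: float) -> float:
    """Circulation volume at which a given make-up draw reports as `rate`."""
    return makeup_gal / (1 - rate)

# The text's example: 1,000 gallons cycled 1,000 times.
print(f"{recycling_rate(1_000 * 1_000, 1_000):.1%} 'recycled'")

# 15,000 gal/day evaporated still reports as "95% recycled" at this throughput:
needed = circulation_for_rate(15_000, 0.95)  # roughly 300,000 gal/day
print(f"needed circulation: ~{needed:,.0f} gal/day")
```

Whatever rate gets reported, the 15,000 gallons of make-up water is what the aquifer actually loses each day.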

The Real Cost: Local Stress, Global Excuses

The “it just evaporates” argument conflates planetary physics with political economy. Yes, Earth is a closed system. No, that doesn’t help a West Texas farmer whose well runs dry because a data center vaporized their water table.

Margaret Cook from HARC nails it: “If a speculative developer is a bad actor, they could approach small communities who manage their own water supplies and see dollar signs… without proper planning, they might be trading their water supply for a short-term win.”

This is already happening. In North Carolina’s New Hill community, a proposed data center would use 1 million gallons of reclaimed water daily during peak summer, and one-third of that would evaporate, leaving the Cape Fear River basin for good. The developer promises 275 jobs. The town gets to shoulder the water risk forever.
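Working through the New Hill figures shows the scale of the permanent loss. Every input below comes from the text; note that 1 million gallons is a peak-summer daily rate, not an annual average, so the results describe peak days only.

```python
# Consumptive loss implied by the New Hill figures quoted above.
# Note: 1 million gal/day is a peak-summer rate, not an annual average.
PEAK_DRAW_GAL_PER_DAY = 1_000_000  # reclaimed water, from the text
EVAPORATED_FRACTION = 1 / 3        # from the text
JOBS_PROMISED = 275                # from the text

lost_per_day = PEAK_DRAW_GAL_PER_DAY * EVAPORATED_FRACTION  # ~333,333 gal/day
lost_per_job = lost_per_day / JOBS_PROMISED                 # ~1,212 gal/day per job

print(f"~{lost_per_day:,.0f} gal/day lost to the basin at peak; "
      f"~{lost_per_job:,.0f} gal/day per promised job")
```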

The Bigger Picture: Water Is Just the Symptom

Water consumption is the canary in the coal mine for AI’s resource intensity. AI data centers already raise your electric bill quietly, even as you use less power; water makes the physical extraction behind the digital curtain impossible to miss.

The same facilities driving water stress are also delaying coal plant retirements and increasing emissions. Duke Energy admits data center demand is pushing it to keep coal plants online longer, adding “millions of tons of CO2” to meet near-term AI growth. In Texas, more than 400 data centers are forcing a complete rewrite of grid planning.

Yet the industry keeps promising breakthroughs. Efficient smaller models like Maincoder-1B prove we don’t need trillion-parameter monstrosities for every task. Algorithmic advances like Google’s Sequential Attention could cut energy use by computing less. Decentralized GPU scavenging points to alternative infrastructure models.

But none of these solutions matter if the economic incentives reward waste. Data centers get tax breaks for promising jobs while externalizing water and power costs onto communities. The “it just evaporates” argument is perfect for this system: it sounds scientific while absolving everyone of local responsibility.

What Actually Matters: Metrics That Can’t Be Gamed

If we want real sustainability, we need metrics that reflect local constraints:

- Water consumption per query, per token, per dollar of revenue, not circular recycling claims

- Disclosure requirements tied to municipal water permits, not voluntary reports

- Impact assessments that count evaporated water as lost to the local basin

- Community water rights that let towns reject facilities that threaten supply

- Alternative cooling mandates for new construction in water-stressed regions
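Here is a minimal sketch of how the first and third items could work as a disclosure record. The `DailyWaterReport` class, its fields, and every number in the usage example except the 300,000-gallon draw are hypothetical, chosen only to illustrate the accounting: consumption is withdrawal minus what returns to the same basin, and loop circulation is deliberately left out of every ratio.

```python
from dataclasses import dataclass

@dataclass
class DailyWaterReport:
    """HYPOTHETICAL disclosure record; not an existing reporting standard."""
    withdrawn_gal: float          # drawn from municipal supply or aquifer
    returned_to_basin_gal: float  # discharged back into the same basin
    circulated_gal: float         # internal loop throughput, reported but never used below
    queries: int
    tokens: int
    revenue_usd: float

    @property
    def consumed_gal(self) -> float:
        # Anything not returned (mostly evaporation) counts as lost to the local basin.
        return self.withdrawn_gal - self.returned_to_basin_gal

    def per_query(self) -> float:
        return self.consumed_gal / self.queries

    def per_million_tokens(self) -> float:
        return self.consumed_gal / self.tokens * 1_000_000

    def per_dollar(self) -> float:
        return self.consumed_gal / self.revenue_usd

# Illustrative numbers only (hypothetical except the 300,000 gal/day draw).
report = DailyWaterReport(
    withdrawn_gal=300_000,
    returned_to_basin_gal=60_000,
    circulated_gal=6_000_000,
    queries=50_000_000,
    tokens=20_000_000_000,
    revenue_usd=1_000_000,
)
print(report.consumed_gal, report.per_query(), report.per_million_tokens())
```

The design choice is the point: `circulated_gal` can be as large as an operator likes without moving any of the three ratios.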

Schneider Electric’s analysis shows warm-water cooling with dry coolers can eliminate evaporative losses entirely. The technology exists. But it’s 15% more expensive upfront, so operators default to water-guzzling towers until forced to change.

The water can’t “disappear from the water cycle.” Technically correct. But water can disappear from your town’s aquifer, your farm’s irrigation system, your community’s future. That’s what AI data centers are doing: transforming local, potable, finite water into global, diffuse, useless vapor.

The hydrological cycle doesn’t care about Texas water law. But Texans should. The liquid-cooling market is projected to hit $21 billion, and that spending might just enable a bigger heist. And we’re all paying for it, whether through higher utility bills, depleted aquifers, or the cognitive dissonance of pretending digital infinity has no physical cost.

The question isn’t whether AI uses water. It’s whether we’re okay with AI using our water while telling us it doesn’t matter. The answer, if we keep letting operators hide behind eighth-grade science, will be written in dry wells and broken communities across the American West.

Next time you get a surprisingly high electric bill despite using less power, remember: you’re subsidizing AI’s physical footprint. The water evaporates. The costs don’t.