The argument started with a simple question: why should Americans pay for the electricity that powers ChatGPT queries from India and China? When Trump trade adviser Peter Navarro floated this idea at a recent forum, he tapped into a resentment that’s been building among policymakers and grid operators. The framing, which cast foreign AI usage as an “implicit subsidy,” was deliberately provocative, but it exposed a policy vacuum that Washington is now scrambling to fill.

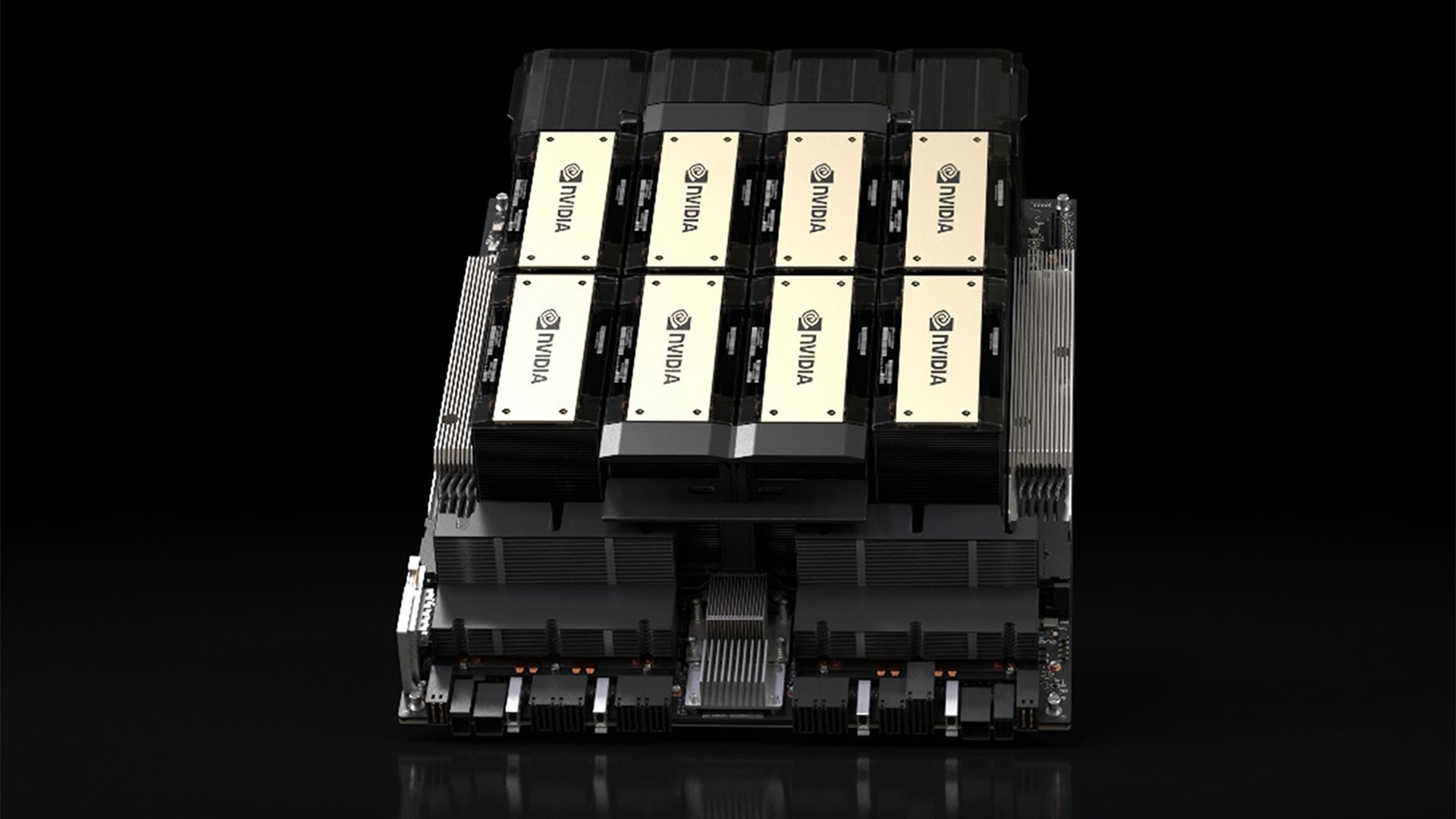

The technical reality behind Navarro’s complaint is stark. Modern AI infrastructure doesn’t run on goodwill and venture capital alone. It runs on a physical substrate of hyperscale data centers consuming terawatt-hours of electricity, advanced semiconductor fabrication plants that cost billions, and water-cooled server farms that can strain municipal supplies. When a developer in Shanghai prompts GPT-4, that request likely hits a U.S.-based server cluster, draws power from the American grid, and contributes to infrastructure wear that local ratepayers ultimately fund.

The New Geography of AI Compute

The Biden administration’s “AI Diffusion Rule” established a three-tier global hierarchy that remains the foundation of current policy. Tier 1 includes the United States and 18 close allies (among them the UK, Canada, Germany, Japan, South Korea, Taiwan, Australia, and New Zealand) with unrestricted access. Tier 2 covers most other countries, which face quantity caps of approximately 50,000 GPUs between 2025 and 2027. Tier 3 prohibits exports to roughly 20 nations, including China, Russia, Iran, and North Korea.

But the rulebook is evolving fast. The Trump administration has already modified Middle Eastern restrictions, expanding access for Saudi Arabia and the UAE while tightening enforcement against China. The result is a complex compliance landscape where the same Nvidia H200 GPU might be freely sold to a German research lab, require licensing for a Brazilian startup, and be completely banned from a Chinese university.
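The German, Brazilian, and Chinese outcomes in that example can be sketched as a simple tier lookup. This is only an illustration: the country sets contain just the names this article mentions (the real lists are longer and keep changing), and the `Tier` enum, the `classify` function, and the fall-through to Tier 2 are assumptions made for the sketch, not anything defined by the rule itself.

```python
from enum import Enum


class Tier(Enum):
    UNRESTRICTED = 1  # Tier 1: no quantity limits
    CAPPED = 2        # Tier 2: quantity caps, licensing beyond them
    PROHIBITED = 3    # Tier 3: exports barred


# Only the countries named in the article; a real list would be longer.
TIER_1 = {
    "United States", "United Kingdom", "Canada", "Germany", "Japan",
    "South Korea", "Taiwan", "Australia", "New Zealand",
}
TIER_3 = {"China", "Russia", "Iran", "North Korea"}


def classify(country: str) -> Tier:
    """Return the tier for a destination country.

    Assumption: anything not listed falls into Tier 2. With these partial
    sets, that would misclassify Tier 1 allies the article does not name.
    """
    if country in TIER_1:
        return Tier.UNRESTRICTED
    if country in TIER_3:
        return Tier.PROHIBITED
    return Tier.CAPPED


for destination in ("Germany", "Brazil", "China"):
    print(f"{destination}: {classify(destination).name}")
```

A real compliance check would load the current lists from an authoritative source rather than hard-coding them, since tier membership is exactly the part of the policy that keeps moving.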

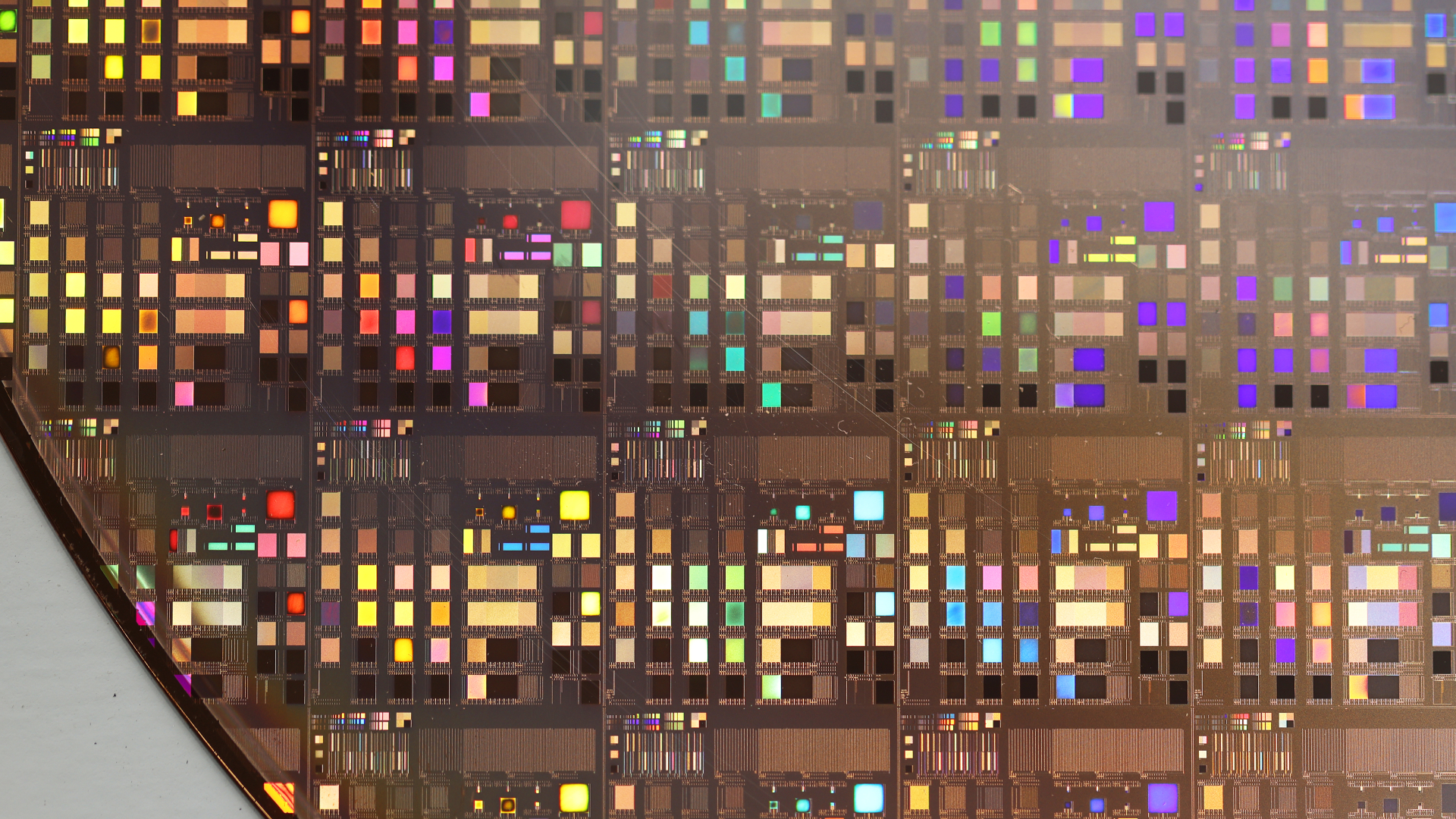

The technical specifications matter enormously. Export licenses for China now depend on precise performance metrics: Total Processing Performance (TPP) below 21,000 and DRAM bandwidth under 6,500 GB/s. The Nvidia H200 clears both limits at 15,832 TPP and 4.8 TB/s (4,800 GB/s) of bandwidth, while AMD’s MI325X squeaks through at 20,800 TPP with 6 TB/s (6,000 GB/s). Both are technically eligible but subject to strict case-by-case review.
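To make the thresholds concrete, here is a minimal sketch that checks the two chips against the limits quoted above and reports how much headroom each has. The `Accelerator` class and the function names are hypothetical, bandwidth is converted at 1 TB/s = 1,000 GB/s, and passing this check says nothing about whether a license is actually granted, since review is case by case.

```python
from dataclasses import dataclass

# Limits as quoted in the article.
TPP_LIMIT = 21_000            # Total Processing Performance must be below this
BANDWIDTH_LIMIT_GBPS = 6_500  # DRAM bandwidth in GB/s must be under this


@dataclass(frozen=True)
class Accelerator:
    name: str
    tpp: int
    bandwidth_gbps: int  # GB/s, with 1 TB/s taken as 1,000 GB/s


def under_thresholds(chip: Accelerator) -> bool:
    """True if the chip is strictly below both quoted limits."""
    return chip.tpp < TPP_LIMIT and chip.bandwidth_gbps < BANDWIDTH_LIMIT_GBPS


def headroom(chip: Accelerator) -> tuple[int, int]:
    """Margin remaining before each limit: (TPP margin, bandwidth margin)."""
    return TPP_LIMIT - chip.tpp, BANDWIDTH_LIMIT_GBPS - chip.bandwidth_gbps


chips = [
    Accelerator("Nvidia H200", tpp=15_832, bandwidth_gbps=4_800),
    Accelerator("AMD MI325X", tpp=20_800, bandwidth_gbps=6_000),
]

for chip in chips:
    tpp_margin, bw_margin = headroom(chip)
    print(f"{chip.name}: eligible={under_thresholds(chip)}, "
          f"TPP margin={tpp_margin}, bandwidth margin={bw_margin} GB/s")
```

The margins show why the two chips are not in the same position: the H200 sits 5,168 TPP below the line, while the MI325X has only 200 TPP to spare.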

When Export Controls Meet Ratepayer Revolt

The semiconductor restrictions are only half the story. The energy dimension is where Navarro’s argument gains real traction. Data centers already consume 2-3% of U.S. electricity, but the IEA projects global data center demand will more than double to 945 TWh by 2030, with America absorbing nearly half that growth.

This surge is hitting local grids hard. Researchers at Carnegie Mellon warn that heavy data center concentrations could push residential electricity bills up 25% in some regions. In Wisconsin, Microsoft is already supporting a new rate structure that charges “Very Large Customers” the full cost of electricity infrastructure, a direct response to community backlash.

The political response is accelerating. Senator Tom Cotton’s DATA Act of 2026 proposes a radical solution: let AI data centers bypass federal power regulations entirely by building off-grid infrastructure. The bill would create “consumer-regulated electric utilities” (CREUs) that operate as islanded power systems, exempt from Federal Energy Regulatory Commission oversight. It’s a blunt instrument that utilities will fight, but it signals how seriously lawmakers are taking the energy question.

Microsoft is trying to get ahead of this with its “Community-First AI Infrastructure” pledge. The company promises to cover full electricity costs, improve water-use intensity by 40% by 2030, and replenish more water than it withdraws. But these are voluntary commitments, not policy mandates, and they do nothing to address the underlying question Navarro raised: should foreign users get a free ride?

The China Calculation

The geopolitical stakes make this more than a utility billing dispute. Export controls have created a compute gap: the U.S. controls roughly 70% of global AI compute capacity, while China holds about 10%. That advantage is narrowing. DeepSeek’s R1 model demonstrated “near-frontier” performance using older H800 chips, and Huawei’s Ascend 910 series reaches 60-70% of Nvidia H100 performance on some workloads.

The Trump administration’s decision to allow H200 sales to China, despite adding 65 Chinese entities to the Entity List in 2025, reflects a calculated bet. The thinking: generate revenue for U.S. chipmakers while preserving leadership at the frontier. But analysis from the Institute for Progress suggests aggressive H200 exports could shrink America’s compute advantage to single digits by 2026.

This is where the subsidy argument gets complicated. If U.S. infrastructure enables Chinese AI development that eventually competes with American firms, the “implicit subsidy” becomes a strategic liability. But if that same infrastructure generates revenue that funds R&D and maintains technological leadership, it’s an investment, not a subsidy.

The Compliance Burden No One Talks About

For engineering managers actually deploying AI infrastructure, the policy debate translates into operational nightmares. New export rules require third-party testing by independent U.S. labs to verify TPP, memory bandwidth, and interconnect specs. Every shipment needs end-user certificates, KYC documentation, and proof that China-bound volumes don’t exceed 50% of U.S. shipments.

Smaller players get squeezed. While Nvidia and AMD can navigate this bureaucracy, startups building compliant accelerators must still show that their China-bound volumes stay at or below 50% of their U.S. shipments, which means selling at least twice as many units at home as in China. In effect, they have to outperform the incumbents in their home market just to access foreign customers. The result is a moat that protects the very giants export controls were meant to regulate.
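The arithmetic of the volume cap is easy to get backwards, so here is a small sketch of it. It takes the cap as stated earlier (China-bound volumes may not exceed 50% of U.S. shipments); the function names and the unit counts are hypothetical, and how shipments are actually counted or over what period is not specified here.

```python
import math

CHINA_SHARE_CAP = 0.5  # China-bound units may not exceed 50% of U.S. units


def within_volume_cap(us_units: int, china_units: int) -> bool:
    """True if China-bound volume is at or below the cap."""
    return china_units <= CHINA_SHARE_CAP * us_units


def max_china_units(us_units: int) -> int:
    """Largest whole number of China-bound units the cap allows."""
    return math.floor(CHINA_SHARE_CAP * us_units)


# Hypothetical startup that has shipped 10,000 accelerators in the U.S.
us_shipped = 10_000
print(max_china_units(us_shipped))            # 5000
print(within_volume_cap(us_shipped, 5_000))   # True: exactly at the cap
print(within_volume_cap(us_shipped, 6_000))   # False: would need 12,000 U.S. units
```

Put differently, every unit sold into China has to be backed by two units sold domestically, which is a much steeper requirement for a company without an established U.S. customer base.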

Cloud deployments add another layer of complexity. Technology access rules apply regardless of physical location. A multinational team with researchers in restricted countries may need licenses even if the GPUs sit in a Tier 1 data center. The deemed export rules mean that sharing model weights or granting remote access can trigger licensing requirements that most engineering organizations are ill-equipped to handle.

The Unresolved Tension

The core conflict remains: AI is simultaneously a global public good, a strategic national asset, and a massive infrastructure burden. Navarro’s “implicit subsidy” framing forces a choice between these roles. If AI is strategic infrastructure like highways or ports, then user fees or export restrictions make sense. If it’s a global platform like the internet, then openness and scale advantages dominate.

Congressman Gabe Amo’s challenge to the Trump administration highlights the legal contradictions. The Export Control Reform Act of 2018 prohibits charging fees for export licenses, yet the administration negotiated a 25% revenue share on H200 sales to China. Amo called this “likely illegal” and warned it creates “perverse incentives” to approve more chips than national security interests warrant.

Meanwhile, energy policy is fragmenting. Microsoft’s voluntary cost-covering is one approach. Cotton’s deregulation bill is another. Senator Sanders wants to restrict data center growth entirely, citing electricity and water concerns. Former Energy Secretary Rick Perry argues we need “fossil fuels and nuclear power as base loads and a lot of it” to win the AI race with China.

None of these positions resolve the fundamental question: who pays for the infrastructure that enables global AI access? The venture capital model assumed hyperscalers would absorb the costs and monetize through services. Navarro’s intervention suggests that model is politically unsustainable when American voters see their utility bills spike.

The most likely outcome isn’t a clean resolution but a patchwork: tighter controls on the most advanced chips, usage-based fees for certain foreign customers, corporate pledges to cover local infrastructure costs, and continued legislative experimentation. For engineering leaders, this means building AI infrastructure strategies that assume regulatory volatility, not stability.

The AI race isn’t just about who builds the best models. It’s about who pays for the power, who controls the silicon, and who decides who gets to play. Navarro’s subsidy argument may have been politically crude, but it revealed a truth the tech industry preferred to ignore: there is no such thing as a free compute lunch.

Key Takeaways for Infrastructure Leaders:

- Export compliance is now core competency: The three-tier system, TPP thresholds, and entity list restrictions require dedicated legal and engineering resources. Generic cloud strategies won’t cut it.

- Energy costs are political flashpoints: Whether through utility rate reforms, off-grid development, or corporate cost-covering, expect to justify your power consumption to regulators and communities.

- China access is a moving target: H200 licenses may be available today, but the policy whipsaws between administrations. Build architectures that aren’t dependent on any single market.

- Document everything: From end-user certificates to water replenishment metrics, comprehensive records are your only defense when regulations shift or communities push back.

The AI infrastructure boom isn’t slowing down. But the era of frictionless global scale is over. Every terawatt, every chip shipment, every data center location is now a policy decision, and someone has to pay the bill.