Running a 3-billion-parameter language model entirely in your browser (no server, no API keys, no data leaving your device) sounds like a demo project, until you realize it isn’t. Mistral’s release of the Ministral 3B model, accelerated by WebGPU through Transformers.js, crosses a threshold that rewrites the cost-benefit calculation for AI deployment. The implications extend far beyond hobbyist experiments and into how we architect privacy-sensitive applications, offline-capable systems, and potentially how cloud providers structure their pricing.

The WebGPU Breakthrough: More Than a Performance Boost

WebGPU isn’t just WebGL rebranded. It’s a low-overhead API that gives JavaScript direct access to GPU compute shaders, and the performance delta matters. According to recent benchmarks, WebGPU triples machine learning inference performance compared to pure JavaScript execution by offloading matrix operations to the GPU. For transformer models, which are essentially stacks of matrix multiplications and attention calculations, this isn’t incremental; it’s the difference between unusable and responsive.

The Ministral 3B demo on Hugging Face Spaces proves the point. Loading the model weights directly into browser memory and executing inference via WebGPU yields sub-200ms response times for multimodal queries on modern hardware. That’s not a typo. We’re talking about vision-language tasks, analyzing images and generating text, running locally at interactive speeds.

What You Actually Need to Run It

Before you scrap your GPU cluster, the hardware requirements deserve scrutiny. The 3B parameter model in BF16 precision requires roughly 6GB of GPU memory for the weights alone (3 billion parameters at 2 bytes each). On desktop RTX cards, that’s manageable. On integrated graphics or mobile devices, your mileage varies dramatically. Browser support is uneven too: Chromium-based browsers have shipped WebGPU in stable releases since Chrome 113 and are the most mature target for this workload, while Firefox and Safari support is newer and less complete.
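The memory figure is simple arithmetic, and it helps to have it as a function when comparing precisions. The sketch below is a weight-only estimate under the assumption that “3B” means exactly 3 billion parameters; the function name is illustrative, and real usage is higher once activations and the KV cache are added.

```javascript
// Rough weight-only memory estimate: parameters x bits per parameter / 8.
// Ignores activations, KV cache, and runtime overhead.
function estimateWeightBytes(paramCount, bitsPerParam) {
  return (paramCount * bitsPerParam) / 8;
}

const paramCount = 3e9; // assumption: "3B" taken as exactly 3 billion

const bf16GB = estimateWeightBytes(paramCount, 16) / 1e9; // 16-bit weights
const fp8GB = estimateWeightBytes(paramCount, 8) / 1e9;   // 8-bit weights
const q4GB = estimateWeightBytes(paramCount, 4) / 1e9;    // 4-bit weights

console.log(`BF16: ${bf16GB} GB, FP8: ${fp8GB} GB, 4-bit: ${q4GB} GB`);
// prints "BF16: 6 GB, FP8: 3 GB, 4-bit: 1.5 GB"
```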

The model architecture itself is optimized for edge deployment. With a 256K context window (yes, 256,000 tokens, identical to its larger siblings), Ministral 3B shares its architectural lineage with the 675B-parameter Mistral Large 3. You’re not getting a dumbed-down transformer; you’re getting a compressed version of the same fundamental design.

The Strategic Gap Nobody’s Talking About

Mistral’s model release reveals a calculated product strategy. The family spans: 3B → 8B → 14B → 675B. That gap between 14B and 675B parameters isn’t an oversight. As developers on r/LocalLLaMA noted, this leaves a “giant chasm” where most serious local deployments actually live. The absence of a 100B-200B dense model or smaller MoE variant isn’t accidental; it’s likely where Mistral AI reserves its commercial offerings.

This creates a tiered ecosystem:

- 3B/8B: Truly local, browser/device inference

- 14B: Edge servers, high-end consumer hardware

- 675B: Enterprise cloud, API consumption

- Missing middle: Where Mistral’s paid platform captures value

The strategy becomes clearer when you examine the licensing. Everything is Apache 2.0, including the 675B behemoth. But running that model requires infrastructure few companies possess. By open-sourcing the massive model while leaving a gap in the mid-range, Mistral ensures adoption at both extremes while guiding commercial users toward its hosted solutions.

Performance Reality vs. Marketing Claims

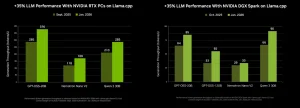

Let’s parse the numbers that actually matter for production systems. NVIDIA’s technical blog provides the clearest picture of real-world performance across the hardware spectrum:

| Model | Hardware | Throughput (tokens/s) | Concurrency | Use Case |
|---|---|---|---|---|
| Ministral 3B | RTX 5090 | 385 | Single | Desktop AI assistant |
| Ministral 3B | Jetson Thor | 52 (273 at 8 concurrent) | 1-8 | Edge robotics |
| Ministral 14B | Dual P40 | Not reported (quality comparable to older 24B models) | Single | Legacy server repurposing |
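The Jetson Thor row is worth unpacking, because the headline number changes meaning under concurrency. The sketch below assumes 273 tokens/s is the aggregate across all 8 streams (the table does not say so explicitly); the function name is illustrative.

```javascript
// Breaks an aggregate throughput figure into what each stream actually sees.
function concurrencyStats(singleTps, aggregateTps, streams) {
  return {
    perStreamTps: aggregateTps / streams,                    // speed each user experiences
    aggregateSpeedup: aggregateTps / singleTps,              // total throughput gain vs. one stream
    scalingEfficiency: aggregateTps / (singleTps * streams), // 1.0 would be perfect scaling
  };
}

// Jetson Thor figures from the table: 52 tok/s single, 273 tok/s at 8 concurrent.
const jetson = concurrencyStats(52, 273, 8);
console.log(jetson);
// { perStreamTps: 34.125, aggregateSpeedup: 5.25, scalingEfficiency: 0.65625 }
```

Under that assumption, batching buys about 5x total throughput, while each individual stream slows from 52 to roughly 34 tokens/s.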

The 14B variant’s performance is particularly noteworthy. According to community benchmarks, it matches or exceeds the older 24B models on most tasks while consuming 40% less VRAM. For developers running dual P40s or similar legacy hardware, this represents an immediate upgrade path without infrastructure investment.

But the browser-based story has limits. The Hugging Face demo works beautifully for single-turn queries. Multi-turn conversations with full context retention quickly push against browser memory constraints. The 256K context window is technically accessible, but in practice, you’re not fitting that into a typical browser session while maintaining reasonable performance.
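To see why long contexts hit a wall, it helps to estimate the KV cache, which grows linearly with the number of tokens held in context. The formula below is the standard one for transformer attention caches, but the architecture numbers (layer count, KV heads, head dimension) are placeholders chosen for illustration, not Ministral 3B’s published configuration.

```javascript
// Standard KV-cache size: 2 tensors (K and V) per layer,
// each holding kvHeads * headDim values per token.
function kvCacheBytes({ layers, kvHeads, headDim, bytesPerValue }, contextTokens) {
  return 2 * layers * kvHeads * headDim * bytesPerValue * contextTokens;
}

// HYPOTHETICAL config for illustration only; not Ministral 3B's real numbers.
const exampleConfig = { layers: 26, kvHeads: 8, headDim: 128, bytesPerValue: 2 };

for (const tokens of [4_096, 32_768, 256_000]) {
  const gb = kvCacheBytes(exampleConfig, tokens) / 1e9;
  console.log(`${tokens} tokens -> ${gb.toFixed(2)} GB of KV cache`);
}
```

With these placeholder values the cache costs about 0.1MB per token, so a few thousand tokens fit comfortably while a full 256,000-token context would need roughly 27GB on top of the weights. The exact figure depends on the real configuration, but the linear growth is the point.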

The Quantization Stack That Makes It Possible

What enables this edge performance isn’t just WebGPU; it’s aggressive quantization. Mistral released variants in multiple precision formats:

- BF16: 16-bit baseline, reference accuracy

- FP8: NVIDIA-optimized, 50% memory reduction

- Q4_K_M: 4-bit quantized, llama.cpp-compatible (GGUF)

- NVFP4: Blackwell-native, extreme compression

The NVFP4 format represents the bleeding edge. Available for the Mistral Large 3 reasoning variant, it quantizes MoE weights while preserving scaling factors in FP8. This reduces compute and memory costs by 75% with minimal accuracy loss, but requires Blackwell architecture (GB200 NVL72) to deploy efficiently.

For browser deployment, a 4-bit ONNX variant (dtype 'q4') through Transformers.js offers the best balance; Q4_K_M is a GGUF format for llama.cpp, not something Transformers.js loads. At 4-bit precision, the 3B model shrinks to ~1.5GB, enabling sub-10-second initial loads on gigabit-class connections and reasonable inference speeds on GPUs with as little as 4GB VRAM.
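Load time is where the file size bites, so it is worth running the numbers for your own audience. This is a back-of-the-envelope sketch: the connection speeds are illustrative, and the function ignores latency, protocol overhead, and CDN throttling.

```javascript
// Transfer time = size in bits / link speed in bits per second.
function downloadSeconds(sizeBytes, linkMbps) {
  return (sizeBytes * 8) / (linkMbps * 1e6);
}

const sizes = { '4-bit (~1.5GB)': 1.5e9, 'BF16 (~6GB)': 6e9 };
const links = [50, 100, 1000]; // Mbit/s, illustrative connection speeds

for (const [label, bytes] of Object.entries(sizes)) {
  for (const mbps of links) {
    const secs = Math.round(downloadSeconds(bytes, mbps));
    console.log(`${label} at ${mbps} Mbit/s: ${secs} s`);
  }
}
```

By this estimate the 1.5GB file takes about two minutes at 100 Mbit/s and 12 seconds at 1 Gbit/s; a 10-second load needs around 1.2 Gbit/s of sustained throughput.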

Why This Threatens the Cloud Inference Model

The economics become disruptive when you scale this architecture across an organization. Consider a healthcare application processing sensitive patient data:

Traditional Cloud Approach:

- API calls: $0.001 per 1K tokens

- Data residency compliance: +40% cost

- Network latency: 200-500ms

- Privacy risk: Data leaves device

Browser-Based Approach:

- Compute cost: $0 (user’s hardware)

- Compliance: Built-in (data never leaves)

- Latency: 50-150ms local

- Infrastructure: CDN for model weights only

For applications with 10,000 daily active users generating 5,000 tokens each, cloud inference costs $50/day. Browser-based deployment reduces this to near-zero, trading marginal GPU utilization on client devices against massive infrastructure savings.
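The arithmetic behind that figure is easy to reproduce and adapt to your own traffic. The sketch below uses the article’s numbers; the function and parameter names are illustrative, and applying the 40% compliance uplift as a flat multiplier is a simplifying assumption.

```javascript
// Daily cloud inference cost from usage and a per-1K-token price.
function dailyCloudCostUSD({ dailyActiveUsers, tokensPerUser, pricePer1kTokens, complianceUplift = 0 }) {
  const tokensPerDay = dailyActiveUsers * tokensPerUser;
  const baseCost = (tokensPerDay / 1000) * pricePer1kTokens;
  return baseCost * (1 + complianceUplift);
}

const usage = { dailyActiveUsers: 10_000, tokensPerUser: 5_000, pricePer1kTokens: 0.001 };

const base = dailyCloudCostUSD(usage);
const withCompliance = dailyCloudCostUSD({ ...usage, complianceUplift: 0.4 });

console.log(`Base: $${base.toFixed(2)}/day, about $${(base * 365).toFixed(0)}/year`);
console.log(`With compliance uplift: $${withCompliance.toFixed(2)}/day`);
```

That is 50 million tokens a day: $50 at the base rate, or $70 with the compliance uplift applied. Whether the saving justifies the client-side complexity depends on how your real per-token price and traffic compare.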

The catch? Model serving becomes weight distribution, not inference scaling. Your CDN costs increase, but your GPU cluster vanishes. This flips the SaaS margin structure completely.

The Framework Ecosystem: Who’s Ready, Who’s Not

Browser deployment via WebGPU piggybacks on Transformers.js, but the broader ecosystem shows fragmentation:

Fully Supported (local and edge):

- Transformers.js: Direct WebGPU acceleration for select models

- llama.cpp: Q4_K_M GGUF variants with GPU offload on native builds

- Ollama: Local API wrapper for desktop deployments

Partially Supported (server-side only, no browser path):

- vLLM: Requires CUDA, not browser-compatible

- SGLang: NVIDIA-focused, no WebGPU path

- TensorRT-LLM: Enterprise data center only

This creates a bifurcation. For pure browser inference, you’re limited to the Transformers.js ecosystem. For edge servers (RTX PCs, Jetson), llama.cpp and Ollama provide robust pipelines. The enterprise cloud remains dominated by vLLM and TensorRT-LLM.

The NVIDIA partnership delivers optimized kernels for each tier, but true cross-platform consistency doesn’t exist yet. Deploying the same model across browser, edge, and cloud requires three different inference stacks, a maintainability nightmare.

Real-World Limitations Nobody Mentions

Running a demo is one thing. Production is another. The Reddit community quickly surfaced edge cases:

- Initialization time: First load requires downloading 1.5-6GB of model weights. Your CDN must be exceptional.

- Browser compatibility: WebGPU support remains fragmented. Corporate environments with locked-down browsers are a no-go.

- Multi-tab contention: Multiple browser instances competing for GPU resources create unpredictable performance.

- Mobile thermal throttling: Phones hit thermal limits within 2-3 minutes of sustained inference, dropping performance by 60-80%.

- Memory pressure: Browser tabs crash when GPU memory exhausts, unlike server-side graceful degradation.

One developer noted that while the 14B model technically runs on dual P40s, creative writing tasks show coherence issues compared to the older 24B models. The efficiency gains come with qualitative tradeoffs that benchmarks don’t capture.

The Privacy Mirage

“Local inference means perfect privacy” is the headline narrative. The reality is messier. While your data doesn’t leave the device, the model weights come from somewhere. Loading them from a CDN means your provider knows which model you’re requesting. For threat models involving state actors or sophisticated attackers, timing analysis and request fingerprinting could still reveal usage patterns.

True privacy requires local model caching and offline-first architecture. The browser’s sandbox offers protection, but not immunity. WebGPU drivers themselves could theoretically instrument computation. For most commercial use cases, this is overkill, but for activists, journalists, or researchers in sensitive fields, the risk model differs.
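A cache-first loader is the basic building block of that offline-first approach: after the first download, the weights are served locally and no further request reveals which model is in use. The sketch below follows the shape of the browser Cache API (match and put); the function name and the injected cache and fetchFn parameters are illustrative, and libraries such as Transformers.js may already cache model files for you.

```javascript
// Cache-first fetch: hit the network only when the file is not cached yet.
// `cache` is expected to behave like a browser Cache (match/put).
async function fetchWithCache(url, cache, fetchFn = globalThis.fetch) {
  const hit = await cache.match(url);
  if (hit) return { response: hit, fromCache: true };

  const response = await fetchFn(url);
  if (!response.ok) throw new Error(`Download failed: ${response.status}`);

  // Store a clone so the caller can still read the original body.
  await cache.put(url, response.clone());
  return { response, fromCache: false };
}

// Browser usage (the cache name and URL are hypothetical):
//   const cache = await caches.open('model-weights-v1');
//   const { response } = await fetchWithCache('/models/weights.onnx', cache);
```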

Enterprise Adoption: The Real Barriers

CTOs reading this will ask three questions:

- Can we support users with old hardware? No. WebGPU requires recent GPUs. Your IE11 users are out of luck.

- What about model updates? You’ll need a progressive download strategy and version management for 1.5GB+ files.

- Who owns failures? When inference fails due to client hardware, that’s now your support problem, not AWS’s.

The NVIDIA technical blog positions this as “from data center to robot”, but the missing middle is the enterprise workstation. Most companies don’t want AI running on employee gaming rigs; they want centralized control. This pushes adoption toward DGX Spark and Jetson deployments at the edge, not pure browser inference.

The Developer Experience Gap

Here’s what implementing this actually looks like:

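    // Transformers.js WebGPU inference (v3+, published as @huggingface/transformers)
    import { pipeline } from '@huggingface/transformers';

    // Verify this ID on the Hugging Face Hub; Transformers.js needs a repo with ONNX weights
    const model_id = 'mistralai/Ministral-3B-7B-Instruct';

    const generator = await pipeline('text-generation', model_id, {
      dtype: 'q4', // Quantized for browser
      device: 'webgpu',
      // The callback receives a status object; `progress` is already a percentage (0-100)
      progress_callback: (info) => {
        if (info.status === 'progress') {
          console.log(`Loading ${info.file}: ${info.progress.toFixed(2)}%`);
        }
      }
    });

    // First run takes 30-45 seconds to compile shaders
    const output = await generator("Explain quantum computing:", {
      max_new_tokens: 100,
      do_sample: true, // temperature only takes effect when sampling is enabled
      temperature: 0.7
    });

    console.log(output[0].generated_text);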

That shader compilation stall happens on first inference. Users will close the tab before it completes. The solution, pre-compiling and caching shaders, adds another layer of complexity to your deployment pipeline.
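A cheaper mitigation than a full pre-compilation pipeline is a warm-up run: trigger a one-token generation right after the model loads, behind a visible status message, so the stall happens before the user types anything. This is a minimal sketch assuming generator is a text-generation pipeline like the one above; warmUp and onStatus are hypothetical names, and the technique only moves the stall rather than removing it.

```javascript
// Runs a throwaway one-token generation so shader compilation happens
// up front instead of on the user's first real prompt.
async function warmUp(generator, onStatus = () => {}) {
  onStatus('Compiling GPU shaders (first run only)...');
  const start = Date.now();
  await generator('Hi', { max_new_tokens: 1 }); // output is discarded
  const elapsedMs = Date.now() - start;
  onStatus('Ready');
  return elapsedMs;
}

// Usage, once the pipeline has loaded:
//   const ms = await warmUp(generator, (msg) => console.log(msg));
```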

Where This Actually Wins

Despite the limitations, three use cases emerge as immediately viable:

- Privacy-first applications: Medical diagnostics, legal document analysis, financial planning

- Offline-capable tools: Field service, remote research stations, military applications

- Latency-sensitive interactions: Real-time translation, assistive technologies, gaming NPCs

For these scenarios, the trade-offs align. The 85% accuracy on AIME ’25 from the 14B reasoning variant is sufficient for domain-specific tasks where the alternative is no AI at all due to connectivity or compliance constraints.

The Bottom Line: A Complementary Future, Not a Replacement

WebGPU-powered browser inference won’t kill cloud AI. It will segment it. The future looks like:

- 3B/8B models: Browser inference for privacy-critical, low-latency tasks

- 14B models: Edge servers (RTX/Jetson) for balance of performance and control

- 675B+ models: Cloud for maximum capability and multi-tenant efficiency

The missing middle (100B-200B) remains the commercial battleground. Mistral’s strategy suggests they’ll monetize this gap, while open-source communities fill the extremes.

For architects, this means designing systems with model-aware fallbacks. Detect GPU capability, load appropriate quantization, route to cloud only when necessary. The code complexity increases, but the infrastructure costs plummet.
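In practice that fallback logic can start small. The sketch below probes for WebGPU with navigator.gpu.requestAdapter(), checks for the shader-f16 feature, and picks a route. The function names, the route object shape, and the policy of preferring the q4f16 dtype when 16-bit shaders are available are assumptions for illustration; note that WebGPU does not report VRAM size, so a production version would need extra heuristics.

```javascript
// Probe WebGPU support. `gpu` is injectable so the logic can be tested
// outside a browser; by default it uses navigator.gpu when present.
async function detectCapabilities(gpu = globalThis.navigator?.gpu) {
  if (!gpu) return { webgpu: false, shaderF16: false };
  const adapter = await gpu.requestAdapter();
  if (!adapter) return { webgpu: false, shaderF16: false };
  return { webgpu: true, shaderF16: adapter.features.has('shader-f16') };
}

// Hypothetical routing policy: run locally when WebGPU exists, else use the cloud.
function chooseRoute(caps) {
  if (!caps.webgpu) return { target: 'cloud' };
  return {
    target: 'browser',
    device: 'webgpu',
    dtype: caps.shaderF16 ? 'q4f16' : 'q4',
  };
}

// Usage in the browser:
//   const route = chooseRoute(await detectCapabilities());
```

Keeping detection and policy separate means the thresholds can change, for example routing low-end GPUs to the cloud, without touching the browser-specific probing code.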

The real revolution isn’t running models in browsers; it’s the architectural shift from “cloud-first with edge caching” to “edge-first with cloud fallback.” That’s a fundamentally different mindset, and it will take years for enterprise patterns to catch up.

In the meantime, the demo works. The models are Apache 2.0. The performance is real. Whether you should bet your product on it depends entirely on your users’ hardware and your tolerance for pioneering instability.