For years, the AI industry has operated under a simple, brutal logic: bigger models need bigger GPUs. The conversation around fine-tuning LLMs has devolved into a VRAM arms race where training a 4-billion parameter model meant securing at least 16GB of memory, preferably 24GB, ideally 48GB. Developers without access to A100s or H100s were essentially told to sit this one out.

Unsloth just called bullshit on that entire premise.

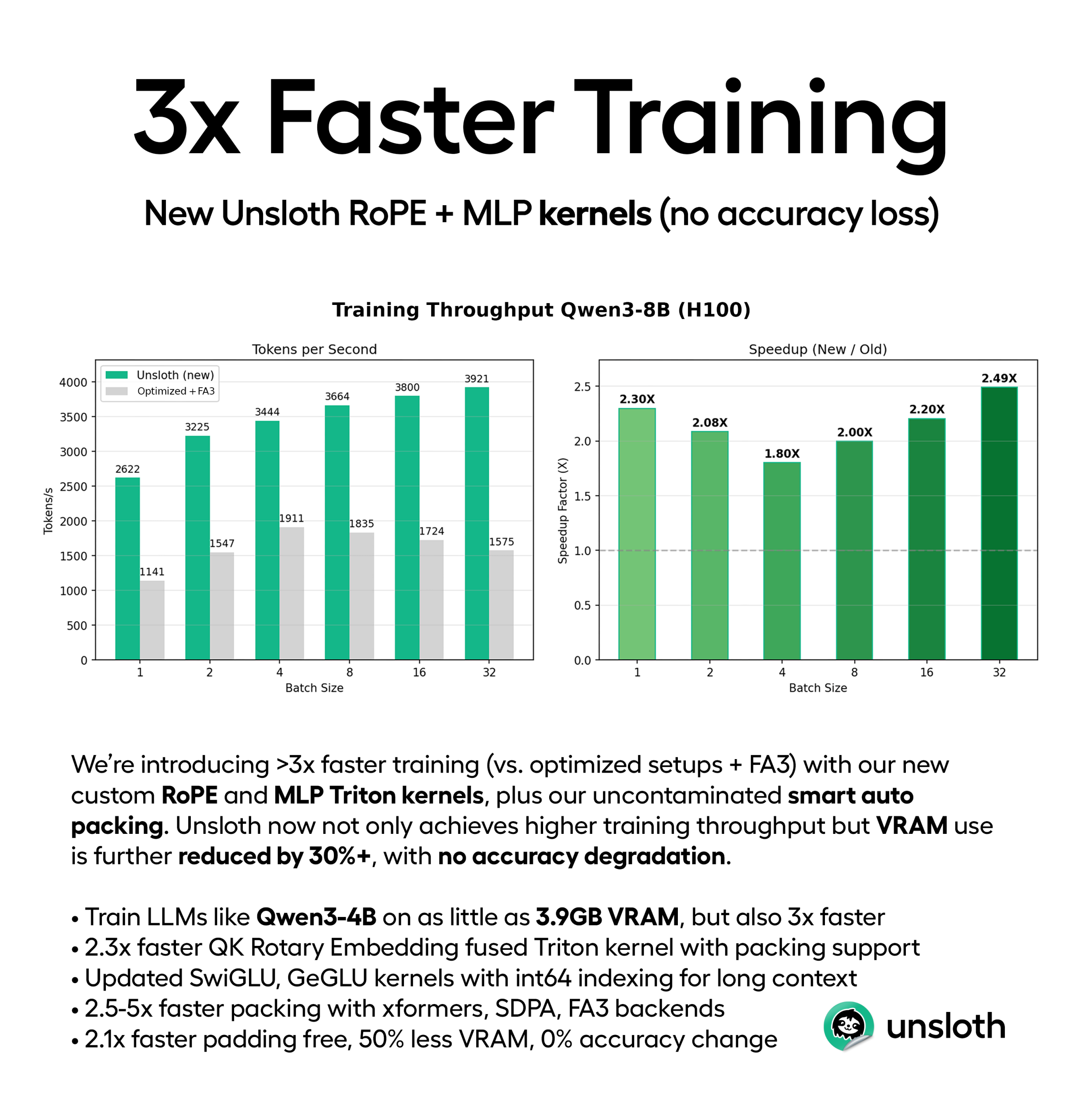

Their latest release, custom Triton kernels fused with intelligent auto-packing, cuts training times by up to 5x and slashes VRAM requirements by 30-90% with zero accuracy degradation. The kicker? You can now fine-tune a Qwen3-4B model on a 3.9GB GPU. That’s not a typo. Four billion parameters on a graphics card that costs less than a weekend in San Francisco.

The Technical Stack Behind the Magic

This isn’t another quantization hack. Unsloth’s engineering team rewrote fundamental training operations at the kernel level, then added a packing strategy so clever it makes traditional batching look like a rounding error.

Fused RoPE Kernels: Where the Real Speed Lives

The heart of the optimization lies in a fused Rotary Position Embedding (RoPE) kernel. Previous implementations required separate Triton kernels for Q and K projections, each launching independently, each burning memory and cycles. Unsloth merged them into a single kernel that runs 2.3x faster on long sequences and 1.9x faster on short ones.

But they didn’t stop at fusion. The kernel now operates fully in-place, eliminating the memory-hungry clone and transpose operations that plague standard implementations. The result? RoPE calculations happen without allocating a single extra byte of VRAM.

# The fused kernel eliminates this inefficiency:

# Q * cos + rotate_half(Q) * sin # Old: clones, transposes, wastes memory

# Becomes this:

# Q * cos + Q @ R * sin # New: in-place, zero overhead

# where R is the rotation matrix [ 0, I]

# [-I, 0]

For the backward pass, the math is equally elegant. The gradient dC/dY flows through the same rotation matrix transposed, with the negative sign flipping sides. No magic, just linear algebra optimized to the metal.

Int64 Indexing: Solving the Long-Context Problem

When Unsloth announced 500K context length support last quarter, they hit a wall: MLP kernels for SwiGLU and GeGLU operations used int32 indexing by default. At half-a-million tokens, that overflows. The naive fix, casting everything to int64, adds overhead that slows down every single operation.

Their solution is surgical. They introduced a LONG_INDEXING: tl.constexpr flag that lets the Triton compiler specialize the kernel at compile time. When you’re training on short contexts, you get the speed of int32. When you need 500K tokens, the compiler generates an int64 variant without runtime branching overhead.

block_idx = tl.program_id(0)

if LONG_INDEXING:

offsets = block_idx.to(tl.int64) * BLOCK_SIZE + tl.arange(0, BLOCK_SIZE).to(tl.int64)

n_elements = tl.cast(n_elements, tl.int64)

else:

offsets = block_idx * BLOCK_SIZE + tl.arange(0, BLOCK_SIZE)

This isn’t just clever, it’s the kind of optimization that separates research prototypes from production-ready tools.

Uncontaminated Packing: The 5x Speedup Nobody’s Talking About

Here’s where things get actually interesting. Standard training wastes obscene amounts of compute on padding tokens. When you batch sequences of different lengths, say, a 128-token example with a 2048-token example, your framework pads the short sequence with zeros to match the longest. Those zeros get fed through every layer, consuming FLOPs and memory for absolutely nothing.

In a typical dataset with mixed sequence lengths, padding can waste up to 50% of your batch. Unsloth’s “uncontaminated packing” eliminates this entirely by concatenating multiple examples into a single long sequence, then using attention masks to prevent cross-contamination between samples.

The math is brutal in its simplicity. Assume a dataset with 50% short sequences (length S) and 50% long sequences (length L):

Traditional batching: Token Usage = batch_size × L

Packed batching: Token Usage = (batch_size/2) × L + (batch_size/2) × S

The theoretical speedup? Speedup = 2L / (L + S)

When your dataset skews toward shorter sequences, a common scenario in instruction fine-tuning, the speedup explodes. With 20% long and 80% short sequences, you hit 5x faster training. The Reddit community picked up on this immediately, noting that the gains compound multiplicatively with Unsloth’s existing speedups.

The images from Unsloth’s docs make this stark: unpacked batches drown in padding as batch size increases, while packed batches maintain 95%+ efficiency regardless of sequence length.

The “Uncontaminated” Part Matters

Packing isn’t new. What is new is doing it without leaking information between examples. Unsloth’s RoPE kernel resets position IDs at each example boundary, ensuring that token #127 in example A doesn’t attend to token #0 in example B. The attention mask blocks cross-example visibility, making the packed sequence mathematically equivalent to separate forward passes, just orders of magnitude faster.

Benchmarks That Actually Mean Something

Unsloth ran head-to-head comparisons on Qwen3-8B, Qwen3-32B, and Llama 3 8B using the yahma/alpaca-cleaned dataset. They fixed max_length=1024 and varied batch sizes from 1 to 32.

The results:

- Tokens/second throughput: 1.7x to 3x faster across the board, hitting 5x+ on batches with high variance in sequence length

- VRAM reduction: 30% minimum, up to 90% on certain configurations

- Loss curves: Exactly matching non-packed runs, down to the third decimal

- Wall-clock time: Packed runs completed nearly 40% of an epoch in the same time unpacked runs finished 5%

The community stress-tested these claims immediately. One developer noted the multiplicative effect: “This isn’t 3x faster, it’s 3x faster compared to Unsloth’s old >2.5x faster”, meaning you’re looking at a cumulative 7.5x improvement over baseline PyTorch.

What This Actually Enables

Democratization of Fine-Tuning

A 6GB GPU isn’t a paperweight anymore. The Reddit threads exploded with questions from developers on consumer cards: “Is this good news for low VRAM users like me? 6GB?” The answer: depending on your dataset, VRAM can drop as much as 90%. You can fine-tune a 14B model on a 5060ti with 16GB VRAM. With QLoRA, you can squeeze it into 10GB.

This isn’t marginal improvement, it’s a category shift. Researchers in emerging markets, independent developers, and small startups just got access to capabilities that previously required enterprise hardware budgets.

Research Velocity

The speedup isn’t just about saving money, it’s about accelerating iteration cycles. A training run that took 3 hours now finishes in 36 minutes. That means you can test 5x more hypotheses per day, explore hyperparameter spaces more thoroughly, and actually complete experiments before deadlines.

The improved SFT loss stability also matters. Fluctuating gradients during supervised fine-tuning have plagued practitioners for years. Unsloth’s optimizations produce “more predictable GPU utilization”, code for “your loss curves won’t look like a seismograph during an earthquake.”

Multi-GPU Without the Pain

For those with multiple cards, Unsloth now supports DDP (Distributed Data Parallel) with proper documentation. The Magistral 24B notebook demonstrates using both of Kaggle’s free GPUs to fit models that previously required paid tiers. Early access to FSDP2 support is rolling out next month, promising even better scaling for the multi-GPU crowd.

The Reddit thread reveals the frustration this solves: developers with two 3090s were stranded, unable to split models across cards. Unsloth’s roadmap addresses this directly, though as one team member noted, proper model-parallel training is still in early access.

Implementation: It’s Just Two Lines

The elegance of Unsloth’s approach is that you don’t rewrite your training loop. Update the library, and padding-free optimization happens automatically.

pip install --upgrade --force-reinstall --no-cache-dir --no-deps unsloth

pip install --upgrade --force-reinstall --no-cache-dir --no-deps unsloth_zoo

That’s it. Your existing notebooks run 1.1x to 2x faster immediately, with 30% less memory usage, and the loss curve doesn’t budge.

To enable full packing and unlock the 5x speedup:

from unsloth import FastLanguageModel

from trl import SFTTrainer, SFTConfig

model, tokenizer = FastLanguageModel.from_pretrained("unsloth/Qwen3-14B")

trainer = SFTTrainer(

model = model,

processing_class = tokenizer,

train_dataset = dataset,

args = SFTConfig(

per_device_train_batch_size = 1,

max_length = 4096,

packing = True, # This is the magic flag

),

)

trainer.train()

All notebooks in Unsloth’s catalog, from Qwen3-14B reasoning to Llama 3.2 conversational, are automatically accelerated. No code changes required.

The Caveats That Actually Matter

This isn’t universal. Speedups depend on your dataset’s sequence length variance. If every example is exactly 2048 tokens, packing gains shrink. The 30-90% VRAM reduction range reflects real-world variance, it depends on batch size, model architecture, and whether you’re using QLoRA.

The Reddit community also flagged the multiplicative nature of these improvements. When you’re already running 2.5x faster than baseline, an additional 3x multiplier isn’t trivial. It compounds to 7.5x total speedup, but that requires optimal conditions: short sequences, large effective batch size, and a model that fits comfortably in your VRAM budget.

Why This Changes Everything

The AI industry has been racing toward centralization, fewer companies with bigger clusters training larger models. Unsloth’s optimizations pull in the opposite direction. They prove that algorithmic innovation can outpace hardware scaling, at least for fine-tuning workloads.

For the cost of a single A100 hour on a cloud provider, you can now buy a used RTX 3060 and fine-tune models locally for months. That’s not incremental. That’s a power shift.

The research implications are equally profound. When a PhD student can iterate on a 32B model on their personal machine, the barrier to entry for novel architectures and training methods collapses. We’re likely to see an explosion of niche models trained on domain-specific data, the kind of work that cloud costs previously rendered economically irrational.

The Bottom Line

Unsloth didn’t just optimize training. They rewrote the economics of fine-tuning. The combination of fused Triton kernels, compile-time specializations for long contexts, and mathematically rigorous packing eliminates the VRAM bottleneck that has defined LLM development for three years.

Your 6GB GPU isn’t obsolete, it’s a fine-tuning workhorse. Your 3090 isn’t just for inference anymore. And that $6000 A100 you’ve been eyeing? You might want to wait.

The VRAM arms race is over. Unsloth won.