The data engineering community is experiencing a familiar cycle: a breakthrough technology emerges, and within months, everyone’s scrambling to wedge it into their stack. Large language models are the latest culprit. Teams are pitching AI-powered ingestion pipelines that promise to eliminate manual schema mapping, auto-detect anomalies, and generate ETL code from natural language. The reality? Most of these projects stall at the prototype stage or quietly get ripped out after generating more debugging work than they saved.

The gap between conference-talk demos and production-grade systems is widening. While vendors tout revolutionary capabilities, engineers on the ground report a more nuanced story: AI is useful, but only in specific, narrowly defined contexts. The rest is wishful thinking that turns your data pipeline into a black box of unpredictable behavior.

Where AI Actually Moves the Needle

Unstructured Data Extraction: The One Clear Win

The most consistent success story involves using LLMs to extract structured data from unstructured sources. Scraping product pages and getting back clean JSON with price, description, and specs beats maintaining brittle CSS selector pipelines that break every time a site changes a div class. This isn’t theoretical; it’s happening in production today.

The pattern is straightforward: scrape the raw HTML, feed it to a model with a clear schema definition, and get structured data back. When the site changes, you re-run the extraction instead of rewriting selectors. One engineer noted this approach collapses multi-day maintenance cycles into minutes. The key is treating the model as a flexible parser rather than an intelligent agent.
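A minimal sketch of that scrape, prompt, parse loop, assuming a hypothetical `call_model(prompt) -> str` function that wraps whichever model you use. The schema fields mirror the product-page example; the important part is that the model's reply is parsed and checked against the schema instead of being trusted, which is what "flexible parser" means in practice.

```python
import json
from typing import Callable

# Fields we expect back, mirroring the product-page example in the text.
PRODUCT_SCHEMA = {"price": "number", "description": "string", "specs": "object"}

_TYPE_CHECKS = {
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "string": lambda v: isinstance(v, str),
    "object": lambda v: isinstance(v, dict),
}

def build_prompt(html: str, schema: dict) -> str:
    """Ask the model to behave like a parser: JSON matching the schema, nothing else."""
    return (
        "Extract the following fields from the HTML below and return ONLY a JSON "
        f"object with exactly these keys and types: {json.dumps(schema)}.\n"
        "Use null for any field you cannot find.\n\n"
        f"HTML:\n{html}"
    )

def extract(html: str, call_model: Callable[[str], str], schema: dict = PRODUCT_SCHEMA) -> dict:
    """Run one extraction and validate it; raise instead of guessing."""
    record = json.loads(call_model(build_prompt(html, schema)))  # JSONDecodeError on non-JSON
    if not isinstance(record, dict):
        raise ValueError("model did not return a JSON object")
    missing = set(schema) - set(record)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    for field, type_name in schema.items():
        value = record[field]
        if value is not None and not _TYPE_CHECKS[type_name](value):
            raise ValueError(f"{field!r} is not a {type_name}: {value!r}")
    return {field: record[field] for field in schema}  # drop keys the model invented

# Stand-in for a real model call, for illustration only.
def fake_model(prompt: str) -> str:
    return '{"price": 19.99, "description": "USB-C cable", "specs": {"length_m": 1}, "color": "black"}'

print(extract("<div class='price'>$19.99</div>", fake_model))
```

When the site layout changes, only the HTML input changes; the schema and the validation stay fixed, so a bad extraction fails at `extract` instead of flowing downstream.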

This is where efficient small models become relevant for practical pipeline automation. You don’t need a massive frontier model to extract fields from a product page; a distilled 7B-parameter model running on modest hardware can often handle the job at a fraction of the cost and latency.

Semantic Validation: Catching What Rules Miss

Traditional validation checks data types, ranges, and null constraints. It doesn’t catch when a field contains “N/A”, “TBD”, or “pending”: all technically strings, but with drastically different downstream meanings. LLMs excel at this semantic nuance.

The winning implementation pattern is lightweight: run the validator as a post-ingestion step, tagging records with semantic flags before they hit your bronze layer. This catches data quality issues that would otherwise poison analytics or trigger incorrect business logic. One team reported this single step eliminated 40% of their production data incidents.
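A minimal sketch of that post-ingestion tagging step, assuming a hypothetical batch classifier `classify(values) -> list[str]` backed by an LLM. A dictionary-based stand-in plays that role here so the example runs. Records are tagged rather than dropped, and any label outside the expected set is downgraded to `needs_review` instead of being trusted.

```python
from typing import Callable

# Semantic labels the validator may assign; "value" means a genuine data value.
LABELS = {"value", "not_applicable", "unknown_yet", "in_progress"}

def tag_records(
    records: list[dict],
    field: str,
    classify: Callable[[list[str]], list[str]],
) -> list[dict]:
    """Attach a semantic flag for one field to every record, using one batch call."""
    values = [str(r.get(field, "")) for r in records]
    labels = classify(values)  # one call per batch keeps per-record cost low
    if len(labels) != len(records):
        raise ValueError("classifier returned the wrong number of labels")
    tagged = []
    for record, label in zip(records, labels):
        if label not in LABELS:
            label = "needs_review"  # never accept an unexpected label silently
        tagged.append({**record, f"{field}_semantic": label})
    return tagged

# Stand-in for an LLM classifier, for illustration only.
def fake_classifier(values: list[str]) -> list[str]:
    known = {"N/A": "not_applicable", "TBD": "unknown_yet", "pending": "in_progress"}
    return [known.get(v.strip(), "value") for v in values]

rows = [
    {"id": 1, "ship_date": "2024-05-01"},
    {"id": 2, "ship_date": "N/A"},
    {"id": 3, "ship_date": "TBD"},
    {"id": 4, "ship_date": "pending"},
]
for row in tag_records(rows, "ship_date", fake_classifier):
    print(row["id"], row["ship_date_semantic"])
```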

The cost is minimal when you’re processing batches rather than streaming events. For real-time pipelines, the added latency is a genuine tradeoff, though modern lakehouse streaming architectures for real-time ingestion are beginning to close that gap.

Schema Inference for Unknown Sources

Inheriting a data source with garbage documentation is a rite of passage. Engineers are now throwing sample records at LLMs and asking “what do these fields probably mean and what types should they be?” The models get you 80% of the way there, turning a day of detective work into a 15-minute exercise.
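A minimal sketch of the "throw samples at the model" step, assuming a hypothetical `call_model(prompt) -> str` that returns a JSON object mapping field names to `{"type": ..., "meaning": ...}`. The result is stored as a proposal with `reviewed: False`, not applied. Fields the model describes but that never appear in the samples are discarded, and fields it skipped are listed so a human can chase them.

```python
import json
from typing import Callable

def schema_prompt(samples: list[dict], max_samples: int = 20) -> str:
    """Build a prompt asking for the probable meaning and type of each field."""
    sample_lines = "\n".join(json.dumps(s, default=str) for s in samples[:max_samples])
    return (
        "Here are sample records from an undocumented source.\n"
        "For each field, return a JSON object mapping the field name to "
        '{"type": one of [integer, float, string, boolean, timestamp], '
        '"meaning": short description}.\n\n' + sample_lines
    )

def propose_schema(samples: list[dict], call_model: Callable[[str], str]) -> dict:
    """Return a schema *proposal*; nothing is applied until a human approves it."""
    suggestion = json.loads(call_model(schema_prompt(samples)))
    observed = {key for s in samples for key in s}
    return {
        "fields": {k: v for k, v in suggestion.items() if k in observed},
        "unexplained_fields": sorted(observed - set(suggestion)),
        "reviewed": False,
    }

# Stand-in for a real model call, for illustration only.
def fake_model(prompt: str) -> str:
    return json.dumps({
        "cust_no": {"type": "integer", "meaning": "customer identifier"},
        "amt": {"type": "float", "meaning": "order amount"},
        "region": {"type": "string", "meaning": "sales region"},  # not in the samples
    })

samples = [
    {"cust_no": 1017, "amt": "12.50", "dt": "2024-03-01"},
    {"cust_no": 1022, "amt": "7.00", "dt": "2024-03-02"},
]
proposal = propose_schema(samples, fake_model)
print(sorted(proposal["fields"]), proposal["unexplained_fields"], proposal["reviewed"])
```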

Databricks users are experimenting with running smaller models directly in their environment to infer schemas on messy source data before it hits the bronze layer. This saves manual mapping work, but comes with a critical caveat: sometimes you want the pipeline to fail loudly when something breaks, not silently adapt and cause problems downstream.

The dark side of “intelligent” schema evolution is that it masks breaking changes. A field that suddenly contains UUIDs instead of integers might parse correctly but silently break your business logic. The best implementations use AI for suggestion, not enforcement: human review remains the gatekeeper.
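A minimal sketch of the enforcement side, assuming the approved schema is kept as a plain dict of field name to Python type (`CONTRACT` below is hypothetical). Whatever the model suggested, this check runs before loading and raises on a missing field, an unexpected field, or a type change, so a UUID arriving in an integer column halts the pipeline instead of being quietly re-typed.

```python
import uuid

class SchemaContractError(Exception):
    """Raised when incoming data violates the human-approved contract."""

# Hypothetical approved contract: field name -> expected Python type.
CONTRACT = {"order_id": int, "amount": float, "status": str}

def enforce_contract(record: dict, contract: dict = CONTRACT) -> dict:
    """Fail loudly on missing fields, unexpected fields, or type changes."""
    missing = contract.keys() - record.keys()
    extra = record.keys() - contract.keys()
    if missing or extra:
        raise SchemaContractError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for field, expected in contract.items():
        value = record[field]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            raise SchemaContractError(
                f"{field}: expected {expected.__name__}, got {type(value).__name__} ({value!r})"
            )
    return record

enforce_contract({"order_id": 42, "amount": 9.5, "status": "paid"})  # passes

try:
    enforce_contract({"order_id": str(uuid.uuid4()), "amount": 9.5, "status": "paid"})
except SchemaContractError as err:
    print("pipeline halted:", err)
```

The contract is deliberately dumb: changing it requires a human edit, which is exactly the review step the paragraph above argues for.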

Code Scaffolding, Not Code Generation

Claude Code and similar tools are proving useful for scaffolding ingestion jobs. Feed them a sample API response or file structure, and they generate boilerplate for REST API connectors or SFTP drop processors. But no one is shipping that code directly.

The pattern is: generate, review heavily, and treat the output as a starting point that cuts initial development time roughly in half. It’s sophisticated autocomplete, not a replacement for an engineer. The generated code still needs error handling, retry logic, and compliance checks baked in.
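Retry logic is a typical piece to add by hand after scaffolding. A minimal sketch, assuming transient failures surface as a distinct exception type; `TransientError` and `fetch_page` are hypothetical stand-ins, not the output of any particular tool. It uses exponential backoff with full jitter and re-raises once attempts run out, so failures are surfaced rather than swallowed.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class TransientError(Exception):
    """Hypothetical marker for retryable failures (timeouts, HTTP 429/503, ...)."""

def with_retries(
    fn: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying TransientError with exponential backoff and full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure, don't swallow it
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")

# Usage: a flaky stand-in connector that fails twice, then succeeds.
calls = {"n": 0}

def fetch_page() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("503 Service Unavailable")
    return "page-1"

print(with_retries(fetch_page, sleep=lambda s: None))  # no real sleeping in the demo
```

Only the exception type you mark as transient is retried; a schema or auth error propagates immediately, which keeps the "fail loudly" property intact.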

This is where running AI agents locally for secure, low-latency data processing becomes compelling. Keeping code generation on-premise avoids sending sensitive schema information to external APIs, a concern that’s top of mind for regulated industries.

The Enterprise Reality Check: FactSet’s Approach

FactSet’s recent launch of AI Doc Ingest for Cobalt reveals how conservative enterprise AI adoption actually is. Their solution automates extraction from portfolio company documents (PDFs, Excel workbooks, board decks) but positions AI as a friction-reduction layer, not a magic bullet.

The key differentiator: zero client-side training. The system adapts to evolving document formats without requiring customers to fine-tune models. This is crucial because it eliminates the hidden cost of AI maintenance. Enterprise buyers are rightly skeptical of solutions that promise automation but require constant tuning by expensive data scientists.

Early beta users report reducing multi-day document processing to minutes. But notice the scope: it’s a narrowly defined document type (portfolio company reporting) with clear validation interfaces. Users can view extracted metrics side-by-side with source documents, maintaining human oversight. This isn’t “set it and forget it”; it’s “AI handles the grunt work, humans handle the decisions.”

The 50x faster time-to-value claim compared to legacy OCR stacks sounds impressive, but it reflects a specific use case: structured financial documents with predictable layouts. Don’t expect those numbers when ingesting random web content or engineering logs.

The Vision AI Revolution in Document Processing

LandingAI’s Agentic Document Extraction represents a different approach: computer vision models that parse documents holistically. Instead of treating a PDF as text, it understands layout, tables, and visual relationships. This matters for complex documents where position carries meaning, such as medical forms, engineering schematics, or financial statements.

Their benchmarks show 99.16% accuracy on document question answering without requiring images in the QA context. The real innovation is visual grounding: every extracted field links back to its source location with page coordinates and confidence scores. This traceability is non-negotiable for audit trails and regulatory compliance.
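Grounding is most useful when it survives into your own data model. A minimal sketch, assuming each extracted field arrives with a page number, a bounding box, and a confidence score; the `GroundedField` shape, the sample values, and the 0.9 threshold are illustrative choices, not LandingAI's response format. Low-confidence fields go to a review queue with their source location attached, so a reviewer can jump straight to the spot on the page.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GroundedField:
    name: str
    value: str
    page: int
    bbox: tuple[float, float, float, float]  # (x0, y0, x1, y1) on that page
    confidence: float  # 0.0 to 1.0, as reported by the extractor

def route_by_confidence(
    fields: list[GroundedField], threshold: float = 0.9
) -> tuple[list[GroundedField], list[GroundedField]]:
    """Split fields into auto-accepted and human-review queues, keeping provenance."""
    accepted = [f for f in fields if f.confidence >= threshold]
    review = [f for f in fields if f.confidence < threshold]
    return accepted, review

# Illustrative values only.
fields = [
    GroundedField("total_revenue", "12.4M", page=3, bbox=(72.0, 410.5, 180.2, 425.0), confidence=0.97),
    GroundedField("fiscal_year", "FY2023", page=1, bbox=(300.0, 88.0, 360.0, 101.0), confidence=0.62),
]
accepted, review = route_by_confidence(fields)
for f in review:
    print(f"review {f.name}={f.value!r} on page {f.page} at {f.bbox}")
```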

The platform handles zero-shot parsing of diverse formats without layout-specific training. For data ingestion pipelines dealing with heterogeneous document streams, this eliminates the traditional tradeoff between accuracy and flexibility. You’re not choosing between a brittle template-based parser and an expensive custom model for each format.

But the infrastructure requirements are real. Running vision models at scale isn’t cheap, and while LandingAI offers cloud APIs, the real cost is integration. You need to rearchitect your ingestion pipeline to handle asynchronous extraction with proper retry logic and error handling.
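A minimal sketch of that asynchronous shape using only `asyncio`, assuming a hypothetical async `extract_document(doc_id)` coroutine that wraps the vendor API. A semaphore bounds concurrency, each document gets a few attempts with backoff, and documents that still fail land in a dead-letter map instead of vanishing. The concurrency limit and retry counts are placeholders to tune against your rate limits.

```python
import asyncio
from typing import Awaitable, Callable

async def extract_all(
    doc_ids: list[str],
    extract_document: Callable[[str], Awaitable[dict]],
    max_concurrency: int = 8,
    max_attempts: int = 3,
    base_delay: float = 0.5,
) -> tuple[dict[str, dict], dict[str, str]]:
    """Extract many documents concurrently; return (results, dead_letters)."""
    semaphore = asyncio.Semaphore(max_concurrency)
    results: dict[str, dict] = {}
    dead_letters: dict[str, str] = {}

    async def worker(doc_id: str) -> None:
        for attempt in range(1, max_attempts + 1):
            try:
                async with semaphore:
                    results[doc_id] = await extract_document(doc_id)
                return
            except Exception as exc:  # in real code, catch the client's specific errors
                if attempt == max_attempts:
                    dead_letters[doc_id] = repr(exc)  # park it for inspection, don't drop it
                    return
                await asyncio.sleep(base_delay * 2 ** attempt)  # back off outside the semaphore

    await asyncio.gather(*(worker(d) for d in doc_ids))
    return results, dead_letters

# Stand-in extractor for illustration: one document always fails.
async def fake_extract(doc_id: str) -> dict:
    await asyncio.sleep(0)
    if doc_id == "bad.pdf":
        raise TimeoutError("extraction timed out")
    return {"doc": doc_id, "fields": {}}

ok, failed = asyncio.run(extract_all(["a.pdf", "b.pdf", "bad.pdf"], fake_extract, base_delay=0.01))
print(sorted(ok), failed)
```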

The “Around the Pipeline” Pattern

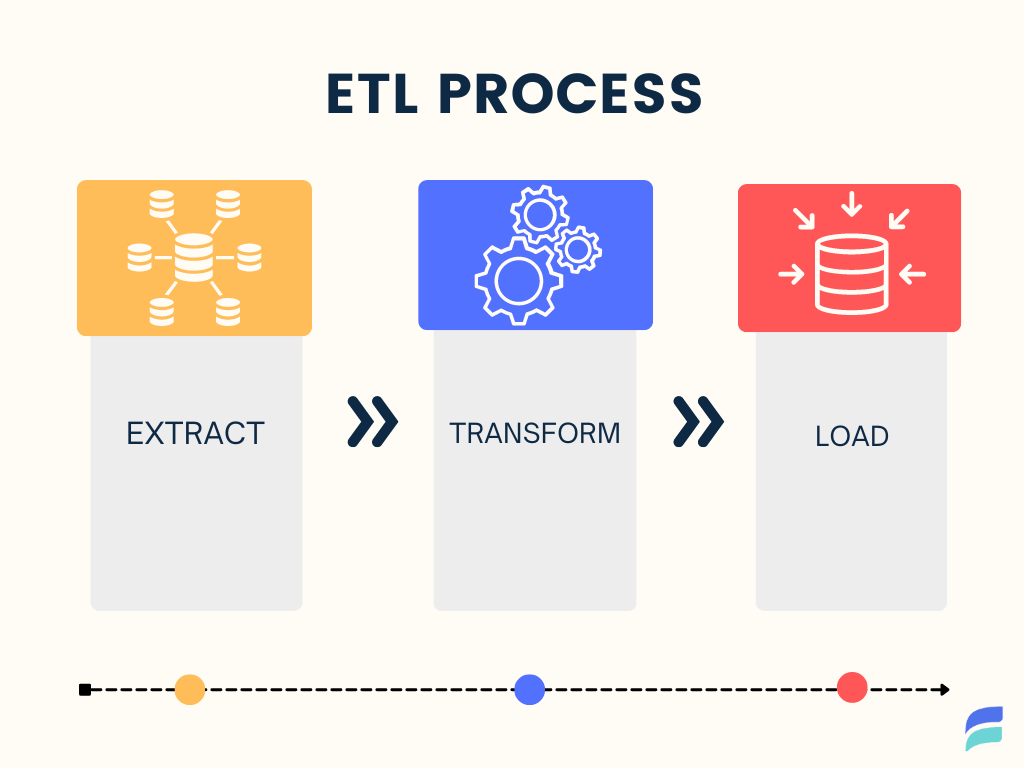

A consistent theme emerges from practitioner discussions: most AI adds value around the pipeline, not in the pipeline core. Classification, routing, metadata enrichment, log summarization, and connector code generation are the sweet spots. The actual Extract-Transform-Load mechanics remain largely unchanged.

Why? Data integration is a solved problem. Adding LLMs to a working CDC stream or batch ETL job introduces latency, cost, and non-determinism without clear benefits. The ingestion layer needs to be fast, reliable, and predictable. AI’s probabilistic nature is fundamentally at odds with these requirements.

This explains why practical, beyond-the-hype applications of AI in data engineering focus on orchestration and augmentation rather than core transformation. AI is the assistant, not the engine.

The Hidden Dangers: When AI Becomes a Liability

The Silent Failure Problem

The most dangerous anti-pattern is dynamic schema evolution that adapts without alerting humans. When your pipeline silently handles a format change, you lose the opportunity to update downstream dependencies. The data flows, but your dashboards, ML models, and operational systems break in subtle ways.

One engineer warned: “sometimes you want the pipeline to fail loudly when something breaks, not silently adapt and cause problems downstream.” This is the opposite of traditional software engineering wisdom, where graceful degradation is prized. In data pipelines, silent failures are catastrophic failures.

The Cost Explosion

LLM-based ingestion looks cheap at small scale: processing 1,000 documents costs pennies. At production volumes of millions of records per day, however, the math shifts dramatically. One team found their “intelligent” ingestion pipeline cost 40x more than their traditional ETL, with latency that violated their SLAs.
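The shift is easy to see with back-of-the-envelope arithmetic. A minimal sketch; the 500 tokens per record and $0.50 per million input tokens are hypothetical placeholders, not any provider's pricing, and output tokens are ignored, so real bills will be higher.

```python
def daily_llm_cost(records_per_day: int, tokens_per_record: int, usd_per_million_tokens: float) -> float:
    """Input-token cost of sending every record through a per-token-priced model for one day."""
    return records_per_day * tokens_per_record * usd_per_million_tokens / 1_000_000

# Hypothetical inputs: 500 tokens per record, $0.50 per million input tokens.
for volume in (1_000, 1_000_000, 5_000_000):
    cost = daily_llm_cost(volume, tokens_per_record=500, usd_per_million_tokens=0.50)
    print(f"{volume:>9,} records/day -> ${cost:,.2f}/day, ${cost * 30:,.0f}/month")
```

Under these assumptions, 1,000 records cost $0.25 a day, while 5 million records cost $1,250 a day (about $37,500 a month) before any output tokens, retries, or GPU time.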

The problem compounds when you’re paying per token for data that’s mostly noise. Web pages are 90% navigation, ads, and boilerplate. Feeding entire HTML documents to a model is burning money. The smarter approach: pre-process with cheap heuristics, apply AI only to the ambiguous fragments.
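A minimal sketch of that cheap pre-processing pass using only the standard library's `html.parser`. It drops text nested inside tags that usually hold navigation and boilerplate, so only the remaining content is sent to the model. The `SKIP_TAGS` set is an assumption that real sites will need tuning for, and a dedicated content-extraction library may do better.

```python
from html.parser import HTMLParser

# Tags whose contents are usually boilerplate; an assumption to tune per site.
SKIP_TAGS = {"script", "style", "nav", "header", "footer", "aside", "noscript", "svg"}

class ContentText(HTMLParser):
    """Collect visible text, ignoring anything nested inside SKIP_TAGS."""

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())

def strip_boilerplate(html: str) -> str:
    """Return the text that is worth paying tokens for, one chunk per line."""
    parser = ContentText()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)

page = """<html><head><style>.x{color:red}</style></head><body>
<nav><a href="/">Home</a><a href="/deals">Deals</a></nav>
<main><h1>USB-C Cable</h1><span class="price">$19.99</span></main>
<footer>Copyright 2024 Example Shop</footer></body></html>"""

text = strip_boilerplate(page)
print(text)
print(f"{len(page)} chars of HTML -> {len(text)} chars sent to the model")
```

Anything the heuristics cannot settle (say, a price split across several spans) is what you forward to the model; everything else never costs a token.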

The Compliance Nightmare

For regulated industries, using external LLM APIs for ingestion creates auditability issues. You can’t explain why a model extracted a particular value from a document if you can’t reproduce the exact model state and prompt. This is why optimized local inference is gaining traction: keeping data processing on-premises maintains governance boundaries.
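Reproducibility starts with recording exactly what was sent. A minimal sketch of an audit record written alongside each extracted value, assuming you pin a model identifier and decoding parameters; the field names and the model identifier are hypothetical. Hashes let you prove which prompt and source produced a value without copying sensitive text into logs. Pinning temperature and seed does not guarantee bit-identical reruns on every backend, but without this record you cannot even attempt one.

```python
import hashlib
import json
from datetime import datetime, timezone

def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def audit_record(model_id: str, params: dict, prompt: str, source_doc: bytes, output: str) -> dict:
    """Everything needed to attempt a reproduction of one extraction later."""
    return {
        "model_id": model_id,  # pinned version and quantization, never "latest"
        "params": params,  # temperature, seed, max_tokens, ...
        "prompt_sha256": sha256_hex(prompt),
        "source_sha256": hashlib.sha256(source_doc).hexdigest(),
        "output_sha256": sha256_hex(output),
        "extracted_at": datetime.now(timezone.utc).isoformat(),
    }

rec = audit_record(
    model_id="local-7b-q4@2024-06-01",  # hypothetical identifier
    params={"temperature": 0.0, "seed": 7},
    prompt="Extract invoice_total as JSON.",
    source_doc=b"%PDF-1.7 example bytes",
    output='{"invoice_total": 1200.0}',
)
print(json.dumps(rec, indent=2))
```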

The Infrastructure Question: Build vs. Buy vs. Self-Host

The build vs. buy calculus for AI-powered ingestion is more complex than traditional ETL. Cloud APIs offer instant gratification but create lock-in and variable costs. Self-hosting open-source models gives control but requires MLOps expertise most data teams lack.

The emerging consensus: start with cloud APIs for prototyping, but plan your migration path to self-hosted small models for production. The economics only work at scale when you’re not paying per API call. Tools like llama.cpp and quantized models make this feasible on commodity hardware.
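One way to keep that migration path open is to code against an OpenAI-compatible chat-completions endpoint, which many hosted APIs and llama.cpp's `llama-server` both expose, so moving from prototype to self-hosted becomes a base-URL change. A minimal standard-library sketch; the environment variable names, default URL, and model name are assumptions, and the demo only builds the request without sending it.

```python
import json
import os
import urllib.request

# Hypothetical config: point these at a hosted API while prototyping,
# then at a self-hosted server (for example llama-server on localhost) in production.
BASE_URL = os.environ.get("LLM_BASE_URL", "http://localhost:8080/v1")
MODEL = os.environ.get("LLM_MODEL", "local-model")
API_KEY = os.environ.get("LLM_API_KEY", "")

def build_chat_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL,
                       api_key: str = API_KEY) -> urllib.request.Request:
    """Build a POST to an OpenAI-compatible /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )

def complete(prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]

req = build_chat_request("Extract the price from: <span>$19.99</span>")
print(req.full_url, req.get_method())
```

Nothing in the calling code knows whether the model runs in a vendor's cloud or inside your VPC, which is the point: the switch is configuration, not a rewrite.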

This is particularly relevant for running AI agents locally for secure, low-latency data processing, where keeping sensitive data within your VPC isn’t just preferred; it’s mandatory.

What Actually Works: A Decision Framework

Based on production deployments, here’s where AI ingestion makes sense:

Use AI when:

– Extracting structured data from unstructured sources with variable formats

– Performing semantic validation that rules-based systems can’t capture

– Generating initial schema mappings for unknown data sources

– Scaffolding boilerplate ingestion code

– Classifying documents into processing streams

Avoid AI when:

– Processing high-volume streaming data with tight latency requirements

– Dealing with well-structured, predictable data sources

– Regulatory requirements demand full reproducibility

– Cost per record exceeds business value

– The pipeline needs to fail deterministically on schema changes
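The "avoid" half of the framework can also live in code, for example as a gate in a design-review template. A minimal sketch; the `IngestionCase` fields mirror the bullets above, and how you measure each one (latency budget, cost per record, business value per record) is left as an assumption.

```python
from dataclasses import dataclass

@dataclass
class IngestionCase:
    high_volume_streaming_tight_latency: bool
    well_structured_source: bool
    requires_full_reproducibility: bool
    cost_per_record_usd: float
    value_per_record_usd: float
    must_fail_on_schema_change: bool

def ai_red_flags(case: IngestionCase) -> list[str]:
    """Return the 'avoid AI' conditions this use case triggers (empty list = none)."""
    flags = []
    if case.high_volume_streaming_tight_latency:
        flags.append("high-volume streaming with tight latency requirements")
    if case.well_structured_source:
        flags.append("well-structured, predictable source")
    if case.requires_full_reproducibility:
        flags.append("regulation demands full reproducibility")
    if case.cost_per_record_usd > case.value_per_record_usd:
        flags.append("cost per record exceeds business value")
    if case.must_fail_on_schema_change:
        flags.append("pipeline must fail deterministically on schema changes")
    return flags

case = IngestionCase(
    high_volume_streaming_tight_latency=False,
    well_structured_source=True,
    requires_full_reproducibility=False,
    cost_per_record_usd=0.002,
    value_per_record_usd=0.001,
    must_fail_on_schema_change=True,
)
for flag in ai_red_flags(case):
    print("avoid AI:", flag)
```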

The Bottom Line

AI in data ingestion isn’t a revolution; it’s a specialized tool for specific pain points. The teams seeing real value are those who treat LLMs as sophisticated parsers and validators, not magic wands. They implement human-in-the-loop oversight, keep costs in check with small models, and maintain traditional ETL for the core pipeline.

The gold rush mentality is creating a graveyard of over-engineered prototypes. The winners are taking a pragmatic approach: identify the bottleneck, apply AI surgically, and measure relentlessly. Everything else is just vendor noise.

Before you add that LLM step to your pipeline, ask: would a regex and a lookup table solve this? If the answer is yes, you’re digging in the wrong place.
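As a concrete version of that question, here is the kind of price normalization that often gets pitched as an LLM task, done with a regex and a lookup table. A minimal sketch; the currency map and accepted formats are assumptions, and anything that does not match cleanly returns `None`, so only those leftovers need a smarter (or human) fallback.

```python
import re
from decimal import Decimal
from typing import Optional

# Hypothetical lookup table: symbol or code -> ISO 4217 currency code.
CURRENCIES = {"$": "USD", "USD": "USD", "€": "EUR", "EUR": "EUR", "£": "GBP", "GBP": "GBP"}

PRICE_RE = re.compile(
    r"^\s*(?P<pre>[$€£]|USD|EUR|GBP)?\s*"
    r"(?P<amount>\d{1,3}(?:,\d{3})*(?:\.\d{1,2})?|\d+(?:\.\d{1,2})?)\s*"
    r"(?P<post>USD|EUR|GBP)?\s*$"
)

def parse_price(text: str) -> Optional[tuple[Decimal, str]]:
    """Return (amount, currency) for simple price strings, else None."""
    m = PRICE_RE.match(text)
    if not m:
        return None
    codes = {CURRENCIES[s] for s in (m.group("pre"), m.group("post")) if s}
    if len(codes) != 1:
        return None  # no currency, or two different ones: leave it for a fallback
    amount = Decimal(m.group("amount").replace(",", ""))
    return amount, codes.pop()

for raw in ["$19.99", "1,299.00 EUR", "£5", "call for price"]:
    print(repr(raw), "->", parse_price(raw))
```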