Mistral’s Devstral 2 launch should have been a victory lap. A 123-billion-parameter coding model that matches trillion-parameter rivals, scoring 72.2% on SWE-bench Verified while running on a fraction of the hardware. Instead, it became a case study in how quickly technical brilliance can collapse into community distrust when testing becomes an afterthought.

Within hours of release, developers hunting for the model found themselves in a maze of broken integrations, contradictory benchmark claims, and licensing terms that read like a bait-and-switch. The backlash wasn’t about whether the model was good (the engineering is real) but about whether Mistral respected the community enough to ship something that worked out of the box.

The Release That Broke Trust, Not Just Code

The controversy started where most AI controversies start: on GitHub and Reddit. Developers attempting to run Devstral 2 through standard community tooling (llama.cpp, MLX-Engine, Ollama, LM Studio) encountered failures that ranged from repetition loops to wildly inconsistent benchmark scores. One developer reported the same benchmark producing a 2% score, a far cry from Mistral’s published results.

The core issue wasn’t model intelligence. No one believed Mistral shipped a broken 123B-parameter brain. The problem was in the unsexy details: misconfigured templates, incomplete documentation, and a release process that seemed to skip the final hour of community validation. As one frustrated developer put it, the release team spent months training the model, then couldn’t be bothered to spend a day reproducing benchmarks on the same tools their target audience uses daily.

Mistral’s own release notes admitted that Ollama and LM Studio support wasn’t ready, which only fueled the fire. For a company marketing to developers who live in these ecosystems, announcing a model before the infrastructure supports it is like shipping a car without wheels and calling it “transportation-ready.” The defense, that every major release needs tooling adjustments, misses the point. When Qwen3 launched with similar issues, the community grumbled but moved on. Devstral 2 hit differently because Mistral had positioned itself as Europe’s open-source champion, the transparent alternative to black-box American labs.

The Benchmark Crisis Nobody Wants to Talk About

Here’s where the story connects to a deeper rot in AI development. Stanford researchers recently revealed that as many as 1 in 20 AI benchmarks contain “fantastic bugs”: outright errors, mismatched labeling, ambiguous questions, or formatting issues that mark correct answers as wrong. In one case, a benchmark accepted “$5” but rejected “5 dollars” and “$5.00.” These aren’t edge cases; they’re systemic failures that can shift model rankings and misdirect millions in research funding.
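
The “$5” case is easy to make concrete. The sketch below is not the benchmark’s actual grader; it assumes a hypothetical exact-match scorer (`strict_match`) and contrasts it with a hypothetical lenient one (`lenient_match`) that compares the numeric amount instead of the raw string.

```python
import re
from decimal import Decimal


def normalize_amount(answer: str):
    """Extract the first number in an answer as a Decimal, or None if absent.

    Illustrative only: it ignores the currency itself, so "5 euros" would
    also normalize to 5. A real grader would need to check units too.
    """
    cleaned = answer.replace(",", "")  # drop thousands separators
    match = re.search(r"-?\d+(?:\.\d+)?", cleaned)
    return Decimal(match.group()) if match else None


def strict_match(prediction: str, gold: str) -> bool:
    """Exact string comparison, the failure mode described above."""
    return prediction.strip() == gold.strip()


def lenient_match(prediction: str, gold: str) -> bool:
    """Compare numeric values, so "$5", "5 dollars" and "$5.00" agree."""
    pred_value = normalize_amount(prediction)
    gold_value = normalize_amount(gold)
    return pred_value is not None and pred_value == gold_value


if __name__ == "__main__":
    for candidate in ["$5", "5 dollars", "$5.00"]:
        print(candidate, strict_match(candidate, "$5"), lenient_match(candidate, "$5"))
```

Under the strict scorer only the first candidate passes; under the lenient one all three do. A model that writes “5 dollars” is marked wrong by the first grader for reasons that have nothing to do with its reasoning.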

The timing is brutal for Mistral. Claims of fudged benchmarks started circulating almost immediately, with developers unable to reproduce the celebrated 72.2% SWE-bench score. Mistral’s announcement provided no verification links to official leaderboards, a strange omission for a company supposedly championing transparency. The Stanford study validates community skepticism: if 5% of benchmarks are fundamentally broken, and many more are subtly flawed, then “trust us, it scored well” becomes an impossible sell.

This crisis of measurement means every benchmark score now lives under a cloud of suspicion. When DeepSeek-R1’s ranking jumped from third-lowest to second-place after fixing benchmark errors, it proved that model performance and benchmark accuracy are intertwined. Mistral’s inability to immediately point to reproducible results on community-standard tools made them look like they were hiding something, even if the model itself is capable.

Open Source Theater and the $20 Million Revenue Trap

While developers wrestled with broken tooling, lawyers were reading the license. Devstral 2 ships under a “modified MIT license” that includes a bombshell clause: companies with global consolidated monthly revenue exceeding $20 million can’t use it freely. That’s $240 million annually, a threshold that excludes Deutsche Bank, Siemens, Airbus, Carrefour, and most European enterprises Mistral claims to serve.

The smaller Devstral 2 variant runs under Apache 2.0, genuinely open and permissive. But it also scores lower on benchmarks, creating a two-tier system: a “free” version that’s good but not great, and a “restricted” version that actually competes with Claude Sonnet. This structure lets Mistral harvest developer goodwill and free QA while reserving the right to charge enterprises for the model that actually matters.

Hacker News caught this within hours. The revenue cap explicitly discriminates against fields of endeavor, violating the Open Source Initiative’s definition. Whatever Mistral is shipping, it isn’t open source by any historical standard. It’s commercial software with a generous free tier, wrapped in marketing that exploits a community’s trust.

ASML’s €1.3 billion investment in Mistral complicates the picture. The deal was framed as Europe’s AI sovereignty play, a way to keep critical capabilities out of American and Chinese control. But sovereignty requires actual independence. A license that excludes strategic industries doesn’t deliver independence; it delivers a different vendor negotiation. Deutsche Bank still needs a commercial license. Siemens still needs a commercial license. The “European alternative” looks suspiciously like the American model, just with French accents.

Why Community Testing Isn’t Optional

The core lesson from Devstral 2’s release isn’t about model architecture or parameter efficiency. It’s about validation culture. Google’s recent FACTS Benchmark Suite revealed that even the best-scoring model, Gemini 3 Pro, achieves only 69% factual accuracy. For context, any reporter filing stories at 69% accuracy would be fired. In law, medicine, or finance, that error rate is catastrophic.

This baseline reality makes community testing essential. Enterprise buyers can’t trust vendor benchmarks. Researchers can’t trust leaderboard scores. Developers can’t trust release announcements. The only validation that matters is reproducible results on the tools actually used in production.

Mistral skipped this step. They shipped a model that required custom behavior, poorly documented templates, and specific scaffolding to perform as advertised. For developers who make technology recommendations at work based on their personal homelab experiments, a pattern that drives enterprise adoption, this failure is a dealbreaker. The attention of AI geeks is worth gold because it translates directly into corporate purchasing decisions. When those geeks encounter broken implementations, they don’t just get frustrated; they blacklist vendors.

The counterargument, that Mistral isn’t responsible for third-party projects like llama.cpp, misses the point of modern AI deployment. Companies don’t release models into a vacuum; they release them into ecosystems. When Meta ships Llama, they ensure compatibility. When Google ships Gemma, they provide quantization scripts. The expectation isn’t entitlement; it’s the baseline cost of doing business in open AI.

The Path Forward: Continuous Stewardship Over Publish-and-Forget

Stanford researchers are advocating for a shift from “publish-and-forget” benchmarking to continuous stewardship. Their framework, which uses statistical methods to flag outlier questions and then LLMs to justify the flags, achieved 84% precision in identifying flawed questions. This approach reduces human review time while catching errors that poison the entire evaluation pipeline.
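
What a statistical first pass might look like is worth sketching. The example below is not the Stanford pipeline; it assumes item-rest correlation, a classical test-theory heuristic, as the outlier signal, and the function names and the toy results matrix are invented for illustration. The idea: if the models that do best on the rest of the benchmark are the ones that fail a given question, the question (or its answer key) deserves a second look.

```python
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation; returns 0.0 when either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def flag_suspect_items(results, threshold=0.0):
    """Flag items whose correctness runs against overall model strength.

    results[m][i] is 1 if model m answered item i correctly, else 0.
    Returns (item_index, correlation) pairs with correlation below threshold.
    """
    flagged = []
    for i in range(len(results[0])):
        item_scores = [row[i] for row in results]
        # Each model's score on every *other* item, so the item under
        # inspection does not inflate its own correlation.
        rest_scores = [sum(row) - row[i] for row in results]
        r = pearson(item_scores, rest_scores)
        if r < threshold:
            flagged.append((i, round(r, 3)))
    return flagged


if __name__ == "__main__":
    # Toy data (made up): rows are models from strongest to weakest.
    # Item 3 is answered "correctly" only by the weakest models.
    results = [
        [1, 1, 1, 0],
        [1, 1, 1, 0],
        [1, 1, 0, 0],
        [1, 0, 0, 1],
        [0, 0, 0, 1],
    ]
    print(flag_suspect_items(results))
```

On this toy matrix only item 3 is flagged. A flag is a prompt for review, not a verdict; in the framework described above, the justification step is handed to an LLM and then to a human.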

AI labs need to adopt similar practices for model releases. That means:

– Pre-release validation on community-standard tools, not just internal infrastructure

– Transparent benchmark reproduction with links to live leaderboard entries

– Clear licensing that doesn’t exploit open-source terminology for commercial gain

– Rapid iteration when community members identify blocking issues
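
The first two items on that list amount to a check that could run before any announcement goes out. The sketch below assumes a hypothetical release gate: the backend names, the measured scores, and the two-point tolerance are all placeholders rather than real results, and only the 72.2% figure comes from Mistral’s published claim.

```python
from dataclasses import dataclass


@dataclass
class Run:
    backend: str   # community tool the benchmark was run through
    score: float   # benchmark score in percent


def check_reproduction(published: float, runs: list[Run], tolerance: float = 2.0) -> list[str]:
    """Return backends whose score differs from the published one by more
    than `tolerance` percentage points."""
    return [run.backend for run in runs if abs(run.score - published) > tolerance]


if __name__ == "__main__":
    published_score = 72.2  # the headline figure from the announcement
    # Placeholder measurements, invented for illustration only.
    runs = [
        Run("backend_a", 71.5),
        Run("backend_b", 70.9),
        Run("backend_c", 2.0),
    ]
    failures = check_reproduction(published_score, runs)
    if failures:
        print("Hold the release; cannot reproduce on:", ", ".join(failures))
    else:
        print("Published score reproduced on all backends.")
```

With these placeholder numbers the gate fails on `backend_c` alone, which is the kind of signal that points to a misconfigured template rather than a broken model.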

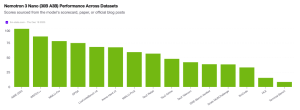

Mistral’s own human evaluations show Claude Sonnet 4.5 remains “significantly preferred” over Devstral 2 for real coding tasks. Devstral holds its own on tool-calling success rates, a narrow metric where it matches closed models, but loses on overall code quality. That honesty is refreshing, but it’s undercut by the inability to reproduce the numbers that matter.

The Takeaway: Trust Is the Real Infrastructure

The AI community is approaching an inflection point. Models are becoming commodities; trust is becoming scarce. When benchmarks are broken, licenses are deceptive, and releases require days of community debugging to work, the value proposition collapses.

Mistral’s engineering is real. Devstral 2 delivers remarkable efficiency, and the smaller Apache 2.0 variant is genuinely useful for hobbyists and small teams. But trust once broken is expensive to rebuild. The company that positioned itself as Europe’s transparent alternative now looks like another vendor optimizing for hype cycles over user success.

Google’s FACTS benchmark, Stanford’s “fantastic bugs” research, and the Devstral 2 backlash share a common thread: the AI industry has a validation crisis. Community testing isn’t a nice-to-have; it’s the only mechanism left that can separate real progress from marketing theater. Labs that treat developers as partners rather than free QA will win. Those that don’t will find their models downloaded, dissected, and discarded before the first invoice ever gets paid.

For enterprise buyers, the message is clear: ignore open-source marketing. Evaluate on reproducible performance and commercial terms. For developers, the lesson is simpler: wait for community validation before committing your weekend to a new model. And for Mistral, the path forward requires more than impressive benchmarks. It requires proving those benchmarks matter in the real world, on real hardware, for real users.

The attention of AI geeks is worth gold. But only if you’re willing to earn it.