LG AI Research just dropped a 236-billion-parameter bombshell in Seoul, and the global AI community should be paying attention: not because K-EXAONE represents a revolutionary breakthrough, but because it exposes the uncomfortable tension between engineering pragmatism and geopolitical AI ambitions.

At the government-backed “Independent AI Foundation Model” presentation on December 30, LG unveiled a model that supposedly outperforms Alibaba’s Qwen3 235B and OpenAI’s GPT-OSS while activating only a fraction of its parameters for each token. The claims are bold: 104% of Qwen3’s performance with just 23 billion active parameters, a 1.5x inference speedup through multi-token prediction, and a 256K context window that doesn’t melt your GPU cluster. But as with most AI announcements these days, the devil lives in the architectural details and the politically charged framing.

The MoE Architecture That’s Not Just Another Mixture

K-EXAONE’s technical architecture reveals a fascinating exercise in efficiency engineering. The model employs 128 total experts with only 8 activated per token, plus a shared expert that presumably handles common linguistic patterns. This 16:1 ratio isn’t extreme (DeepSeek-V3 uses 256 routed experts with 8 active, a 32:1 ratio), but LG’s implementation includes some genuinely interesting choices:

```python
# Simplified MoE configuration from LG's technical specs
moe_config = {
    "num_experts": 128,             # routed experts per MoE layer
    "num_activated_experts": 8,     # routed experts selected for each token
    "num_shared_experts": 1,        # always-on expert applied to every token
    "moe_intermediate_size": 2048,  # FFN width of each individual expert
    "hidden_dimension": 6144,       # model (residual stream) width
}
```

The 6,144 hidden dimension is modest next to DeepSeek-V3’s 7,168, suggesting LG prioritized memory bandwidth over raw capacity. This becomes crucial when you realize they’re targeting A100 deployment environments, not just H100 clusters. The hybrid attention scheme, which alternates between 128-token sliding-window layers and global attention layers, reportedly cuts memory usage by 70% compared to full attention. That’s not marketing fluff; it’s a legitimate approach to making long-context inference economically viable.
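The config lists how many experts exist and how many fire, but not how a token picks them. Here is a minimal plain-Python sketch of top-k routing with an always-on shared expert, using the 128/8/1 numbers above. The gate (softmax taken over only the selected logits, as in Mixtral) is an assumption, since nothing quoted here specifies K-EXAONE’s gating function, and the toy “experts” are hypothetical scaling functions rather than real FFNs.

```python
import math
import random

NUM_EXPERTS = 128  # num_experts
TOP_K = 8          # num_activated_experts


def route(router_logits, top_k=TOP_K):
    """Return [(expert_id, gate_weight), ...] for one token: the top_k experts
    by router logit, with a softmax over only the selected logits (assumed gate)."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:top_k]
    peak = max(router_logits[i] for i in top)  # subtract max for numerical stability
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, router_logits, experts, shared_experts):
    """One token's MoE output: shared experts are always added unweighted,
    routed experts are mixed by their gate weights."""
    out = [0.0] * len(x)
    for shared in shared_experts:
        out = [o + y for o, y in zip(out, shared(x))]
    for idx, weight in route(router_logits):
        out = [o + weight * y for o, y in zip(out, experts[idx](x))]
    return out


# Toy usage: hypothetical "experts" are plain scalings so the mixing is easy to inspect.
random.seed(0)
experts = [lambda v, s=i: [s * a for a in v] for i in range(NUM_EXPERTS)]
shared_experts = [lambda v: list(v)]
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
selected = route(logits)
output = moe_forward([1.0, 2.0], logits, experts, shared_experts)
```

Per token, each MoE layer runs 9 of its 129 expert FFNs (8 routed plus 1 shared). Together with the attention and embedding weights every token uses, that is how a 236B-parameter model ends up with roughly 23B active parameters. Production kernels batch this across tokens on the GPU instead of looping in Python.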

But here’s where the Reddit crowd raises valid concerns: Multi-Token Prediction (MTP) might not deliver the promised 1.5x speedup in practice. As one technical commenter noted, at batch size 1 (the typical local, single-user scenario), correctly predicting the next 2-3 tokens often means invoking 2-3 times as many experts, because each token is routed independently and every newly touched expert’s weights must be read from memory. You’re still bandwidth-limited, and you’ve added computational overhead for the MTP heads. The speedup likely materializes only at larger batch sizes, where expert utilization averages out and most experts are being loaded anyway, a detail LG’s marketing glosses over.
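The commenter’s point can be made concrete by counting how many distinct experts one forward pass touches when it processes several tokens at once, as it does when checking MTP predictions. The sketch below assumes uniform, independent routing over 128 experts with 8 active. Real trained routers are skewed and correlated across neighboring tokens, so these numbers likely overstate the spread; they illustrate the mechanism and are not a measurement of K-EXAONE.

```python
import random

NUM_EXPERTS = 128
TOP_K = 8


def expected_distinct_experts(tokens, num_experts=NUM_EXPERTS, top_k=TOP_K):
    """Closed form under uniform, independent routing: one token misses a given
    expert with probability 1 - top_k/num_experts, so after `tokens` tokens an
    expert is still untouched with probability (1 - top_k/num_experts)**tokens."""
    return num_experts * (1 - (1 - top_k / num_experts) ** tokens)


def simulate_distinct_experts(tokens, trials=10_000, seed=0):
    """Monte Carlo check of the closed form: each token samples top_k distinct experts."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        touched = set()
        for _ in range(tokens):
            touched.update(rng.sample(range(NUM_EXPERTS), TOP_K))
        total += len(touched)
    return total / trials


for k in (1, 2, 3):
    print(f"{k} token(s) per pass: ~{expected_distinct_experts(k):.1f} experts "
          f"(simulated {simulate_distinct_experts(k):.1f})")
```

Under these assumptions one token touches 8 experts, two touch about 15.5, and three about 22.5 per MoE layer. At batch size 1 that is close to a proportional increase in expert weights streamed per pass, which is why the MTP gain is hard to realize there.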

Benchmark Wars: When 104% Actually Means “It’s Complicated”

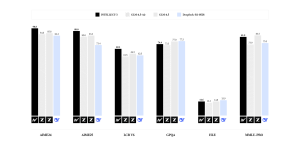

LG’s performance claims deserve scrutiny, not because they’re obviously false, but because they highlight how benchmark gaming has become standard practice. Their evaluation table shows K-EXAONE scoring 72.03 on their 13-benchmark suite, versus Qwen3’s 69.37:

| Model | Avg Score | MMLU-Pro | AIME 2025 | LiveCodeBench | τ2-Bench |
|---|---|---|---|---|---|
| K-EXAONE | 72.03 | 83.9 | 92.6 | 81.1 | 71.9 |
| Qwen3-Thinking | 69.37 | 84.4 | 92.3 | 74.1 | 45.6 |
| DeepSeek-V3.2 | 73.8 | 85.0 | 93.1 | 79.4 | 85.8 |

The numbers look impressive until you notice the selective comparison. K-EXAONE beats Qwen3 on LiveCodeBench by 7 points but trails on MMLU-Pro. It crushes Qwen3 on the telecom-specific τ2-Bench (71.9 vs 45.6) but gets demolished by DeepSeek-V3.2 on the same metric (85.8). This is classic benchmark cherry-picking: choose the suite that flatters your model’s strengths.
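The arithmetic behind the headline figure is easy to check. Assuming “104%” is simply the ratio of the two reported suite averages (the aggregation method isn’t spelled out here), the table’s own numbers give about 103.8%. The sketch uses only the rows shown above; the four per-benchmark columns are a subset of the 13-benchmark suite, so they cannot reproduce the averages on their own.

```python
# Scores copied from the table above. "avg" is the reported 13-benchmark
# average; the other keys are the four benchmarks the table shows.
SCORES = {
    "K-EXAONE": {"avg": 72.03, "MMLU-Pro": 83.9, "AIME 2025": 92.6,
                 "LiveCodeBench": 81.1, "τ2-Bench": 71.9},
    "Qwen3-Thinking": {"avg": 69.37, "MMLU-Pro": 84.4, "AIME 2025": 92.3,
                       "LiveCodeBench": 74.1, "τ2-Bench": 45.6},
    "DeepSeek-V3.2": {"avg": 73.8, "MMLU-Pro": 85.0, "AIME 2025": 93.1,
                      "LiveCodeBench": 79.4, "τ2-Bench": 85.8},
}


def relative_score(model, baseline):
    """Ratio of reported suite averages, as a percentage (assumed meaning of '104%')."""
    return 100 * SCORES[model]["avg"] / SCORES[baseline]["avg"]


def per_benchmark_delta(model, baseline):
    """Point difference on each shown benchmark (positive means `model` is ahead)."""
    return {name: round(SCORES[model][name] - SCORES[baseline][name], 1)
            for name in SCORES[model] if name != "avg"}


for baseline in ("Qwen3-Thinking", "DeepSeek-V3.2"):
    print(f"vs {baseline}: {relative_score('K-EXAONE', baseline):.1f}%",
          per_benchmark_delta("K-EXAONE", baseline))
```

Against Qwen3-Thinking this gives 103.8%, built from a +26.3 swing on τ2-Bench, +7.0 on LiveCodeBench, and a -0.5 deficit on MMLU-Pro. Against DeepSeek-V3.2 the same calculation gives 97.6%, with a -13.9 gap on τ2-Bench. The choice of baseline decides the headline.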

Industry watchers on developer forums have seen this movie before. The sentiment is clear: when official benchmarks look good, it’s “benchmaxxing”; when they don’t, it’s “underwhelming.” LG’s decision to emphasize their 104% figure while burying the fact that DeepSeek-V3.2 still leads on several key metrics feels like strategic positioning, not scientific transparency.

The “Korean Cultural Context” Landmine

Perhaps the most controversial aspect of K-EXAONE isn’t technical at all. LG’s safety section claims the model “uniquely incorporates Korean cultural and historical contexts to address regional sensitivities often overlooked by other models.” This innocuous-sounding statement triggered immediate skepticism in the AI community.

What does this actually mean? Does K-EXAONE suppress discussions of comfort women or forced labor issues that remain sensitive in Japan-Korea relations? Does it present revisionist historical perspectives on the Korean War or collaboration with Japanese colonial authorities? The model card provides no specifics, only vague assurances about “universal human values” and “high reliability across diverse risk categories.”

This isn’t just academic speculation. Korea’s ongoing “gender war” and intense political polarization mean any model claiming to reflect “Korean cultural contexts” is walking through a minefield. One developer noted that the gender discourse in Korea has gone “absolutely wild,” and a model trying to navigate these waters risks alienating half the population regardless of its stance.

The broader concern is more philosophical: as AI becomes a geopolitical instrument, are we witnessing the balkanization of language models along national identity lines? When LG explicitly positions K-EXAONE as a “sovereign AI” alternative to American and Chinese models, they’re not just selling software; they’re selling digital nationalism.

Efficiency vs. Scale: The Real Korean AI Strategy

K-EXAONE’s most honest innovation might be its pragmatic focus on deployment economics. By optimizing for A100-class hardware instead of demanding H100 clusters, LG is making a calculated bet that most organizations can’t afford the GPU arms race. This aligns with Korea’s broader industrial strategy: dominate the mid-market with cost-effective solutions while the superpowers fight over trillion-parameter models.

The model’s 256K context window, twice EXAONE 4.0’s capacity, targets document-heavy enterprise use cases: legal contract analysis, financial report synthesis, and academic research. The 6-language support (Korean, English, Spanish, German, Japanese, Vietnamese) reflects Korea’s export-oriented economy and regional ambitions.

But the deployment reality remains messy. LG admits you need forked versions of Transformers, vLLM, SGLang, and llama.cpp to run K-EXAONE. Their vLLM integration requires:

```bash
vllm serve LGAI-EXAONE/K-EXAONE-236B-A23B \
    --reasoning-parser deepseek_v3 \
    --enable-auto-tool-choice \
    --tool-call-parser hermes
```

This isn’t “pip install and go.” It’s the typical friction of a research model not yet integrated into mainstream tooling. The promised TensorRT-LLM support is “being prepared”, which suggests production deployments are likely still months away for most teams.
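Once the server from the `vllm serve` command above is running, vLLM exposes an OpenAI-compatible HTTP API. The standard-library sketch below builds a chat request that includes one tool definition, so the `--enable-auto-tool-choice` and `--tool-call-parser hermes` path gets exercised. The localhost URL and port are assumed vLLM defaults, and the `get_exchange_rate` tool and prompt are hypothetical placeholders. Response details (for example, where reasoning text appears) vary with the vLLM version and LG’s fork, so check their documentation.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumption: vLLM's default host and port
MODEL = "LGAI-EXAONE/K-EXAONE-236B-A23B"

# Hypothetical tool, written in the OpenAI function-calling schema.
EXCHANGE_RATE_TOOL = {
    "type": "function",
    "function": {
        "name": "get_exchange_rate",
        "description": "Look up the exchange rate between two currencies.",
        "parameters": {
            "type": "object",
            "properties": {
                "base": {"type": "string"},
                "quote": {"type": "string"},
            },
            "required": ["base", "quote"],
        },
    },
}


def build_chat_request(prompt, tools=None):
    """Build the JSON body for POST /v1/chat/completions."""
    body = {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}
    if tools:
        body["tools"] = tools
        body["tool_choice"] = "auto"  # needs --enable-auto-tool-choice on the server
    return body


def send_chat_request(body, base_url=BASE_URL, timeout=120):
    """POST the body to a running server and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


# Building the request needs no server; sending it does.
body = build_chat_request("How many won is 100 US dollars today?",
                          tools=[EXCHANGE_RATE_TOOL])
```

Against a live server, `send_chat_request(body)["choices"][0]["message"]` holds either plain `content` or a `tool_calls` list, depending on whether the model decides to call the tool.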

The Sovereign AI Paradox

K-EXAONE embodies a fundamental tension in the current AI race. Korea is investing 530 billion won (about US$380 million) to develop “independent” AI models while simultaneously building on American CUDA infrastructure, using Nvidia GPUs, and releasing weights under licenses that still restrict commercial use.

LG’s consortium includes domestic champions like LG Uplus, LG CNS, and FuriosaAI, but the underlying technology stack remains globally interdependent. The model’s impressive performance on Korean benchmarks (KMMLU-Pro: 67.3 vs GPT-OSS’s 62.4) demonstrates the value of culturally specific training data, but raises questions about generalization beyond the Korean context.

The geopolitical framing also creates a trust problem. When a model is marketed as “Korea’s answer to GPT-5”, developers wonder if technical decisions are being driven by national pride rather than empirical optimization. The Reddit discussion around “censorship” concerns, whether justified or not, reflects a growing wariness of AI models as instruments of state narrative control.

What This Means for the AI Landscape

K-EXAONE matters not because it’s the best model available, but because it represents a viable third path. While American labs chase scale and Chinese labs chase efficiency, Korean researchers are demonstrating that targeted optimization for specific hardware and cultural contexts can produce competitive results.

The model’s technical achievements, particularly the hybrid attention scheme and pragmatic deployment targets, offer real lessons for teams building production AI systems. The claimed 70% memory reduction isn’t magic; it comes from careful engineering tradeoffs that sacrifice some theoretical capacity for practical deployability.
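A back-of-the-envelope KV-cache estimate shows where a saving of that size can come from. In full attention, every layer caches keys and values for every token; a sliding-window layer caches at most the window. Only the 128-token window and the 256K context come from the figures above. The 48 layers, the 3:1 sliding-to-global ratio, 8 KV heads, head dimension 128, and bf16 storage are hypothetical round numbers, not K-EXAONE’s published configuration.

```python
def kv_cache_bytes(context_len, num_layers, num_global_layers, window,
                   num_kv_heads, head_dim, bytes_per_elem=2):
    """KV-cache size for one sequence. Global layers cache every token;
    sliding-window layers cache at most `window` tokens."""
    per_token_per_layer = 2 * num_kv_heads * head_dim * bytes_per_elem  # K and V
    num_local_layers = num_layers - num_global_layers
    cached_tokens = (num_global_layers * context_len
                     + num_local_layers * min(window, context_len))
    return per_token_per_layer * cached_tokens


CONTEXT = 256 * 1024  # "256K" tokens
# Hypothetical shape: 48 layers, 8 KV heads of dim 128, bf16 (2 bytes per element).
full = kv_cache_bytes(CONTEXT, num_layers=48, num_global_layers=48, window=128,
                      num_kv_heads=8, head_dim=128)
hybrid = kv_cache_bytes(CONTEXT, num_layers=48, num_global_layers=12, window=128,
                        num_kv_heads=8, head_dim=128)  # assumed 3:1 local:global
print(f"full attention: {full / 2**30:.1f} GiB, hybrid: {hybrid / 2**30:.1f} GiB, "
      f"saving {100 * (1 - hybrid / full):.1f}%")
```

With these assumed numbers the cache shrinks from 48 GiB to about 12 GiB per 256K-token sequence, roughly a 75% saving. The structural lesson matters more than the exact figure: at long context the saving approaches the share of layers that use the sliding window, while the window size barely matters. If the 70% refers to KV-cache memory, it implies that around 70% or more of the layers are local. At short contexts the saving shrinks toward zero.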

But the benchmark claims deserve the same skepticism we’d apply to any vendor announcement. Until independent researchers reproduce LG’s results and probe the model’s limitations, the 104% figure is marketing, not science. The true test will come when Korean startups and SMEs actually deploy K-EXAONE in production, revealing whether the efficiency gains hold up under real-world load.

For now, K-EXAONE stands as a statement of intent: Korea won’t cede the AI race to the US and China. Whether that ambition translates into lasting technical leadership depends less on government press releases and more on the open-source community’s ability to independently verify and improve upon LG’s work. The model is available on Hugging Face; the real evaluation starts now.