GLM-4.7-Flash: The Local LLM That Actually Does What It Promises (Mostly)

The local LLM community has a new obsession, and for once, it might be justified. GLM-4.7-Flash isn’t just another quantized model promising the moon and delivering a buggy mess. Early reports show it actually handles agentic workflows (cloning repos, running commands, editing files) without the typical MoE meltdown. But getting there required a community sprint that exposes exactly how fragile the local AI ecosystem remains.

The Promise: Agentic Reliability on Modest Hardware

What makes GLM-4.7-Flash genuinely interesting isn’t the benchmark numbers (though those are solid). It’s that developers are running it for hours in agentic frameworks like OpenCode without a single tool-calling error. One developer reported generating “hundreds of thousands of tokens in one session with context compacting” while the model cloned repositories, executed commands, and committed changes flawlessly.

The architecture backs this up: a 30B-A3B MoE (that’s 30 billion total parameters, 3 billion active) with a 200,000-token context window. On paper, it’s the same size class as Qwen3-30B-A3B, but the performance characteristics tell a different story. The model hits 95 tokens/second on short prompts with dual RTX 3090s, settling to a steady 80 t/s. Even with 15K context, it maintains a usable 20-30 t/s, speeds that make actual interactive development possible.
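To put those figures in perspective, here is a quick back-of-the-envelope sketch. It uses only the numbers quoted above (30B total, 3B active, 80 t/s sustained); the function names are illustrative, and the estimate ignores prompt processing and the slowdown at longer contexts.

```python
# Back-of-the-envelope numbers from the figures quoted above.
TOTAL_PARAMS_B = 30   # total parameters, in billions
ACTIVE_PARAMS_B = 3   # parameters active per token, in billions
SUSTAINED_TPS = 80    # sustained tokens/second on dual RTX 3090s


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of the model's weights that participate in each token."""
    return active_b / total_b


def tokens_per_hour(tokens_per_second: float) -> int:
    """Tokens generated in one hour of uninterrupted decoding."""
    return int(tokens_per_second * 3600)


if __name__ == "__main__":
    share = active_fraction(TOTAL_PARAMS_B, ACTIVE_PARAMS_B)
    print(f"active share: {share:.0%}")
    print(f"tokens/hour at {SUSTAINED_TPS} t/s: {tokens_per_hour(SUSTAINED_TPS):,}")
```

At the sustained rate, one hour of decoding works out to roughly 288,000 tokens, which is why "hundreds of thousands of tokens in one session" is plausible on this hardware.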

The benchmarks confirm the real-world sentiment. In the Unsloth evaluation, GLM-4.7-Flash scores 59.2% on SWE-bench Verified and 79.5% on τ²-Bench, both of which measure multi-turn agentic performance. That’s not just good; it’s competitive with models twice its size and miles ahead of the 22% SWE-bench score from Qwen3-30B-A3B.

The Reality: A Deployment Saga

Here’s where the story gets messy. The model dropped on Hugging Face, and immediately the community hit walls. The official vLLM implementation? Broken in the nightly Docker images. Building from scratch? A day-long download on hotel WiFi. As one developer put it after wasting an afternoon: “Really should have just trusted the llama.cpp gang to get there first.”

And they did. Within hours, a community member submitted PR #18936 to llama.cpp, adding support for the Glm4MoeLite architecture. The implementation treats it as “just a renamed version of DeepSeekV3 with some code moved around”, which turned out to be both accurate and problematic.

The Flash Attention Trap

The first major gotcha: Flash Attention is broken, not merely slow. Multiple developers reported a 3x performance drop with -fa 1 compared to -fa 0. The issue appears to be architecture-specific. As one contributor noted, “the model is only running with -fa off (or it disables it if set to auto). Explicitly setting it to -fa on seems to fall back to CPU.”

This isn’t a CUDA-only problem. AMD users on ROCm see the same slowdown. The root cause seems to be missing MLA (Multi-head Latent Attention) kernel support for GLM-4.7-Flash’s specific tensor dimensions. One llama.cpp contributor acknowledged that a fix is “above my pay-grade at this point” and requires a separate PR.
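If you want to check whether your own build is affected, one option is an A/B run with llama-bench. The sketch below only assembles the command; it assumes a llama-bench build that accepts comma-separated values for -fa, and the model path is a hypothetical placeholder.

```python
import shlex


def flash_attn_bench_argv(model_path: str, n_prompt: int = 512, n_gen: int = 128) -> list[str]:
    """Build a llama-bench command that runs each test with -fa 0 and -fa 1."""
    return [
        "llama-bench",
        "-m", model_path,
        "-p", str(n_prompt),  # prompt-processing test size, in tokens
        "-n", str(n_gen),     # token-generation test size, in tokens
        "-fa", "0,1",         # assumption: comma-separated values run both settings
    ]


if __name__ == "__main__":
    # Hypothetical local path; substitute your own GGUF file.
    print(shlex.join(flash_attn_bench_argv("models/GLM-4.7-Flash-BF16.gguf")))
```

Comparing the tokens-per-second columns for the two settings should show whether the reported slowdown applies to your hardware.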

The Quantization Quagmire

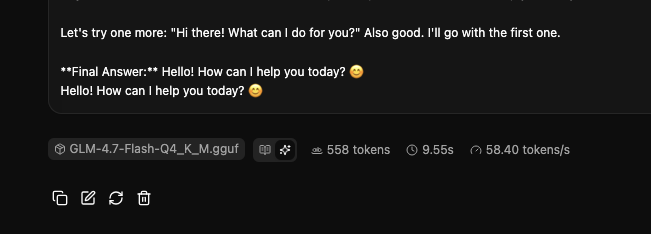

Then came the quantization issues. Early GGUF conversions crashed with segmentation faults or produced garbled output. The problem? Tokenizer mismatches. GLM-4.7-Flash uses 154,856 tokens vs GLM-4.5’s 151,365, and the special token IDs don’t align. The <|user|> token is supposed to be an EOS signal, but early GGUFs only set <|endoftext|>, causing infinite generation loops where the model talks to itself:

> Wait - looking at `User` (the first line of my internal thought block)...
>
> Ah, I see. In this specific simulation/turn-based interface:
>
> 1. User says "hello"
> 2. Model responds with weirdness
> 3. **Current Turn**: The user is sending the exact same command...

The fix required hardcoding <|user|> and <|observation|> as end-of-generation tokens in llama.cpp’s vocabulary handling. But this created a new problem: quantized versions started looping or producing gibberish. The Unsloth team eventually recommended BF16 as the only stable format, with specific sampling parameters (--temp 0.2 --top-k 50 --top-p 0.95 --min-p 0.01 --dry-multiplier 1.1) to reduce repetition.
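To see why a missing end-of-generation marker produces this self-talk, here is a deliberately simplified, text-level sketch. It is not how llama.cpp implements the fix (that works on token IDs inside its vocabulary handling); the function name and sample string are made up for illustration.

```python
# End-of-generation markers named in the text above.
EOG_MARKERS = ("<|endoftext|>", "<|user|>", "<|observation|>")


def truncate_at_eog(text: str, markers: tuple[str, ...] = EOG_MARKERS) -> str:
    """Return text up to (not including) the earliest end-of-generation marker."""
    cut = len(text)
    for marker in markers:
        idx = text.find(marker)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut]


if __name__ == "__main__":
    # Made-up raw output in which the model starts writing the next user turn.
    raw = "Hello! How can I help?<|user|>Wait - looking at `User`..."
    # With only <|endoftext|> registered, nothing stops the runaway turn.
    print(truncate_at_eog(raw, markers=("<|endoftext|>",)))
    # With <|user|> treated as end-of-generation, the reply ends cleanly.
    print(truncate_at_eog(raw))
```

The first call returns the whole runaway string, while the second stops at the assistant's actual reply, which mirrors the difference between the early GGUFs and the patched ones.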

The Numbers That Matter

Let’s talk concrete performance. On a dual RTX 3090 setup, the model delivers:

| Metric | Performance |
|---|---|
| Short prompts | ~95 t/s initial, 80 t/s sustained |
| Long context (15K) | 20-30 t/s |
| Max context tested | 26,624 tokens |
| VRAM usage (AWQ-4bit) | ~24GB with MTP disabled |

Disabling Multi-Token Prediction (MTP) saved 5GB of VRAM and fixed logic errors that dropped accuracy to 1% on complex tasks. The vLLM deployment required transformers installed from Git source and careful GPU memory management:

    podman run -it --rm \
      --device nvidia.com/gpu=all \
      --ipc=host \
      -v ~/.cache/huggingface:/root/.cache/huggingface:z \
      glm-4.7-custom \
      serve cyankiwi/GLM-4.7-Flash-AWQ-4bit \
      --tensor-parallel-size 2 \
      --tool-call-parser glm47 \
      --reasoning-parser glm45 \
      --enable-auto-tool-choice \
      --max-model-len 26624 \
      --gpu-memory-utilization 0.94

The Competition: Where It Actually Stands

The community has been brutally honest about comparisons. Against Nemotron-30B, GLM-4.7-Flash “crushes it” for most use cases, but Nemotron still wins for “data analysis, scientific problem solving, technical concept adherence, and disparate concept synthesis.” For pure coding and tool use, GLM-4.7-Flash is the clear winner.

The Qwen3 comparison is more nuanced. Qwen3-30B-A3B scores 66% on LCB v6 vs GLM-4.7-Flash’s 64%, but GLM-4.7-Flash wins on SWE-bench (59.2% vs 22%) and τ²-Bench (79.5% vs 49%). The difference? GLM’s architecture seems optimized for sustained agentic workflows, while Qwen3 focuses on single-turn reasoning.
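The gaps are easier to read as percentage-point differences. This small sketch uses only the scores quoted above; the dictionary layout and function name are illustrative.

```python
# Scores quoted above, in percent: (GLM-4.7-Flash, Qwen3-30B-A3B).
SCORES = {
    "LCB v6": (64.0, 66.0),
    "SWE-bench": (59.2, 22.0),
    "tau2-Bench": (79.5, 49.0),
}


def glm_lead(scores: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Percentage-point lead of GLM over Qwen3 per benchmark (negative = behind)."""
    return {name: round(glm - qwen, 1) for name, (glm, qwen) in scores.items()}


if __name__ == "__main__":
    for name, lead in glm_lead(SCORES).items():
        print(f"{name}: {lead:+.1f} points")
```

GLM trails by 2 points on LCB v6 but leads by 37.2 and 30.5 points on the two agentic benchmarks, which is the asymmetry the paragraph above describes.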

The Bottom Line

GLM-4.7-Flash represents something rare: a model that delivers on its core promise. It actually works for local agentic development, which is revolutionary in a space where “agentic” usually means “works for five minutes then hallucinates a tool call that crashes your system.”

But the deployment friction is a reality check. You need:

– Latest llama.cpp from source (post-PR #18936)

– BF16 format for stability (71GB+)

– Flash Attention disabled

– Specific sampling parameters to avoid loops

– Patience for quantization issues
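The checklist above can be sketched as a launch command. This is a minimal illustration, not a tested recipe: it assumes a recent llama-server build that accepts -fa off, reuses the sampling values quoted earlier in the article, and uses a hypothetical model path and context size.

```python
import shlex

# Sampling values quoted earlier in the article (Unsloth's recommendation).
SAMPLING = {
    "--temp": "0.2",
    "--top-k": "50",
    "--top-p": "0.95",
    "--min-p": "0.01",
    "--dry-multiplier": "1.1",
}


def llama_server_argv(model_path: str, ctx_size: int) -> list[str]:
    """Build a llama-server command with Flash Attention disabled."""
    argv = [
        "llama-server",
        "-m", model_path,
        "-c", str(ctx_size),  # context window, in tokens
        "-fa", "off",         # Flash Attention disabled, per the checklist
    ]
    for flag, value in SAMPLING.items():
        argv += [flag, value]
    return argv


if __name__ == "__main__":
    # Hypothetical path and context size; adjust to your files and VRAM.
    print(shlex.join(llama_server_argv("models/GLM-4.7-Flash-BF16.gguf", 16384)))
```

Keeping the flags in one place makes it easier to drop the workarounds later, for example switching -fa back on once the kernel support lands.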

For developers willing to navigate these quirks, the reward is a local agent that can handle complex, multi-file software engineering tasks at speeds that won’t make you want to switch back to cloud APIs. For the ecosystem, it’s proof that community-driven support can beat official implementations, if you’re willing to endure the growing pains.

The model’s success also raises questions about MoE efficiency. With only 3 billion active parameters achieving this performance, it makes dense models look wasteful. But as one developer noted, “these super sparse small MoE’s are just mildly useful idiots” compared to the depth of dense models for specific tasks. GLM-4.7-Flash might be the exception that proves the rule.

For now, the verdict is clear: if you need a reliable local agent and can spare the VRAM, GLM-4.7-Flash is worth the setup hassle. Just don’t expect it to be plug-and-play. The community has done the heavy lifting, but the finish line is still a moving target.